Robots are learning to move like humans by stealing nature’s secrets. Motion capture tech breaks down human movement into precise data points, while machine learning algorithms predict how we walk, run, and gesture. Biomimetic design lets robots mimic insect agility and fluid motion. Sensors, neural networks, and physics simulations transform rigid machines into creatures that can almost make you forget they’re not alive. Want to know how deep this rabbit hole goes?

Motion Capture: Decoding Human Movement

Since the dawn of robotics, scientists have been obsessed with cracking the code of human movement—and motion capture technology might just be their secret weapon.

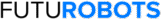

Imagine cameras and sensors tracking every twitch, step, and gesture of the human body like digital detectives. Optical systems such as Vicon follow markers placed on the body and turn complex movements into precise data points, revealing the subtle language of locomotion.

Researchers aren’t just recording random motions; they’re hunting for the quintessential movements that scream “human.” By reducing body mechanics to dynamic point displays, they distill movement to its purest essence.

Think of it like stripping a dance down to its most fundamental steps. Point-light animations prove we can recognize entire stories from just a few strategic markers.
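To make the "few strategic markers" idea concrete, here is a minimal sketch of how three marker positions can be turned into a joint angle. The function name `joint_angle` and the hip, knee, and ankle coordinates are made up for illustration; real motion capture pipelines add filtering, calibration, and full skeleton fitting on top of this.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at marker b, between segments b->a and b->c."""
    v1 = [p - q for p, q in zip(a, b)]
    v2 = [p - q for p, q in zip(c, b)]
    dot = sum(x * y for x, y in zip(v1, v2))
    n1 = math.sqrt(sum(x * x for x in v1))
    n2 = math.sqrt(sum(x * x for x in v2))
    if n1 == 0 or n2 == 0:
        raise ValueError("markers must not coincide")
    # Clamp to guard against tiny floating-point overshoot before acos.
    cos_theta = max(-1.0, min(1.0, dot / (n1 * n2)))
    return math.degrees(math.acos(cos_theta))

# Hypothetical marker positions in metres (x, y, z): a knee bent at a right angle.
hip, knee, ankle = (0.0, 1.0, 0.0), (0.0, 0.5, 0.0), (0.5, 0.5, 0.0)
print(round(joint_angle(hip, knee, ankle), 1))  # 90.0
```

Run that over every frame of a recording and you get a knee-angle curve over time, which is the kind of signal a robot controller can try to reproduce.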

Who knew robots could learn so much from watching us move? Machine learning algorithms train on this captured data, so robotic vision systems can interpret and mimic human movement with increasing precision.

Biomimetic Design in Robotic Engineering

When nature designs something, engineers pay attention. Biomimetic design isn’t just fancy tech talk—it’s about stealing the coolest movement tricks from creatures that’ve been perfecting locomotion for millions of years.

Robots are getting a serious upgrade by mimicking how insects and spiders navigate terrain. Take Festo’s BionicWheelBot: this little marvel can roll and crawl like the flic-flac spider it is modeled on, switching modes based on ground conditions.

Imagine robots that move as smoothly as living things, adapting without complex programming. Researchers at the Tokyo Institute of Technology are cracking this code, developing multi-legged robots that walk with uncanny natural patterns. These advancements are driven by neural network algorithms that enable robots to learn and adapt their movements with unprecedented precision.

The goal? Make robots feel less like machines and more like dynamic, responsive beings that can work alongside humans without feeling weird or mechanical.

Physics-Based Simulation Techniques

Bio-inspired crawlers that mimic spider movements are only part of the story. Engineers are also turning to something even more mind-bending: physics-based simulation techniques that make robots move like they’ve got actual brains.

Imagine algorithms that understand gravity, friction, and inertia better than most humans. These physics-based simulation techniques transform robotic systems from clunky machines into creatures that flow and adapt like living organisms.

By modeling muscle dynamics and joint constraints, engineers can create robots that jump, run, and navigate complex terrain with shocking elegance. Real-time environmental feedback allows these mechanical marvels to adjust movements instantaneously—think of a robot parkour master dancing across unpredictable surfaces.
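As a rough illustration of what "understanding gravity, friction, and inertia" means in code, here is a minimal sketch that treats a swinging limb as a damped pendulum and steps it forward in time. The pendulum model, the `simulate_pendulum` name, and every parameter value are assumptions chosen for the example; production simulators model full rigid-body dynamics, contacts, and actuators.

```python
import math

def simulate_pendulum(theta0, omega0=0.0, length=0.5, damping=0.5,
                      g=9.81, dt=0.001, steps=5000):
    """Return the swing angle (radians) at each time step.

    Gravity pulls the limb back toward vertical, the damping term stands in
    for joint friction, and carrying the angular velocity from step to step
    is what gives the limb inertia.
    """
    theta, omega = theta0, omega0
    trajectory = [theta]
    for _ in range(steps):
        alpha = -(g / length) * math.sin(theta) - damping * omega
        omega += alpha * dt   # semi-implicit Euler: update velocity first
        theta += omega * dt   # then advance the angle with the new velocity
        trajectory.append(theta)
    return trajectory

angles = simulate_pendulum(0.5)  # release the limb from 0.5 rad
print(len(angles))  # 5001
```

With these settings the swing dies down over the five simulated seconds, which is the behavior you would expect from a real leg with friction in the joint.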

Sensor fusion and neural networks enable these advanced robotic systems to process environmental data with unprecedented speed and accuracy, further enhancing their adaptive movement capabilities.

Who knew math could make machines move so beautifully? It’s not magic; it’s just seriously smart computational physics.

Advanced Algorithms for Fluid Locomotion

Because nobody wants robots that move like rusty wind-up toys, engineers have been cracking the code of fluid locomotion through some seriously clever algorithms.

Advanced algorithms now mimic nature’s most elegant movers—think insects scuttling or cats prowling—by analyzing complex movement patterns. Machine learning lets robots adapt in real-time, transforming jerky mechanical motions into smooth, almost organic shifts.

Biomimetic principles are the secret sauce here. By studying how creatures navigate tricky terrain, researchers develop control systems that make robots look less like clunky machines and more like living beings.

LIDAR sensors and trajectory planning algorithms help robots sense and respond to their environment, creating movements so natural you might just forget you’re watching a machine.
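One common way to turn a jerky point-to-point move into a smooth one is a minimum-jerk profile, a fifth-order polynomial often used to model human reaching. The sketch below is an illustration of that idea, not the planner any particular robot uses; `minimum_jerk` and its arguments are names picked for the example.

```python
def minimum_jerk(start, goal, duration, t):
    """Position at time t on a minimum-jerk path from start to goal.

    The blend s goes from 0 to 1 with zero velocity and zero acceleration
    at both ends, so the motion eases in and eases out.
    """
    tau = min(max(t / duration, 0.0), 1.0)        # normalised time in [0, 1]
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return start + (goal - start) * s

# Sweep a joint from 0 to 90 degrees over 2 seconds, sampled every 0.5 s.
samples = [minimum_jerk(0.0, 90.0, 2.0, k * 0.5) for k in range(5)]
print(samples[0], samples[-1])  # 0.0 90.0
```

Compare that with a linear ramp, which starts and stops at full speed: the polynomial spends more time near the endpoints, and that is what reads as "natural" to a human eye.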

Reinforcement learning techniques enable robots to practice movement through thousands of digital trials, dramatically improving their locomotion skills and adaptability.

Want robots that move like they’ve got soul? This is how.

Joint Articulation and Weight Distribution

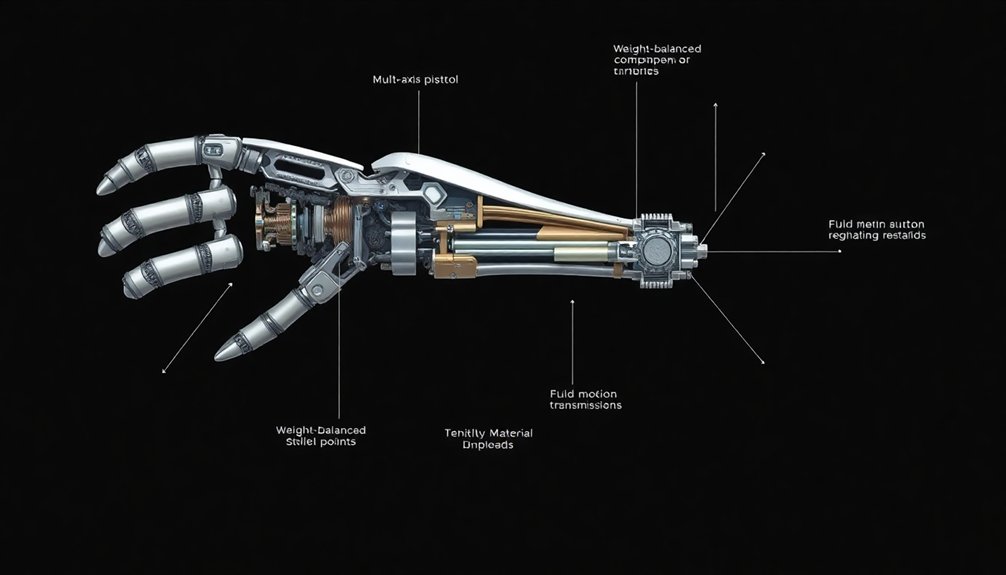

You’ve seen robots move, but have you ever wondered how they pull off those eerily human-like motions? Joint articulation is basically how robots fake being alive, mimicking human biomechanics by strategically bending and twisting like a mechanical dancer with precision-engineered muscles. Proprioceptive sensors enhance robots’ understanding of body positioning, allowing for more nuanced and precise movement that closely mimics natural human motion.

Weight distribution isn’t just some engineering mumbo-jumbo—it’s the secret sauce that keeps these metal performers from toppling over like drunk toddlers, ensuring they can walk, pivot, and navigate complex spaces without becoming an expensive pile of scrap.

Biomechanical Motion Principles

When engineers immerse themselves in the world of robotic movement, they quickly realize that copying human motion isn’t just about slapping some metal and motors together. Biomechanical motion principles demand precision—just look at how a human arm moves, all fluid and nuanced.

You’ll notice robots now use actuators that mimic muscle flexibility, with kinematic models working out exactly what angle each joint needs. Weight distribution becomes critical; a low center of gravity can mean the difference between a stable machine and one that tips over at the first bump.
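A kinematic model is, at its simplest, trigonometry chained along the limb. Here is a minimal sketch of forward kinematics for a flat two-link arm; the link lengths and the `forward_kinematics` name are assumptions for illustration, and real arms work in 3D with more joints.

```python
import math

def forward_kinematics(theta1, theta2, l1=0.3, l2=0.25):
    """Elbow and hand positions for a planar two-link arm.

    theta1 is the shoulder angle from the x-axis, theta2 is the elbow angle
    relative to the upper arm (both in radians); l1 and l2 are link lengths
    in metres.
    """
    elbow = (l1 * math.cos(theta1), l1 * math.sin(theta1))
    hand = (elbow[0] + l2 * math.cos(theta1 + theta2),
            elbow[1] + l2 * math.sin(theta1 + theta2))
    return elbow, hand

# Upper arm along the x-axis, forearm bent 90 degrees upward.
elbow, hand = forward_kinematics(0.0, math.pi / 2)
print(elbow, hand)
```

Running the calculation the other way, from a desired hand position back to joint angles, is inverse kinematics, and that is the harder problem controllers solve many times per second.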

Sensors like accelerometers and gyroscopes help robots adjust posture in milliseconds, making movements feel eerily natural. Hydraulic and pneumatic systems further enhance this illusion, allowing robots to perform complex motions that’ll make you do a double-take. Pretty cool, right? Soft robotic actuators are revolutionizing how machines replicate the intricate biomechanical movements of living organisms, pushing the boundaries of what artificial systems can achieve.
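How do an accelerometer and a gyroscope team up to track posture? One simple, widely used approach is a complementary filter: trust the gyroscope over short time spans and let the accelerometer slowly correct its drift. The sketch below assumes a single tilt axis and made-up sensor readings; the function names and the 0.98 blend factor are illustrative choices, not values from any specific robot.

```python
import math

def complementary_filter(angle, gyro_rate, accel_x, accel_z, dt, alpha=0.98):
    """Blend gyro integration with the accelerometer's gravity-based tilt."""
    accel_angle = math.atan2(accel_x, accel_z)   # tilt implied by gravity
    gyro_angle = angle + gyro_rate * dt          # tilt implied by rotation rate
    return alpha * gyro_angle + (1 - alpha) * accel_angle

def estimate_tilt(samples, dt, alpha=0.98):
    """Run the filter over (gyro_rate, accel_x, accel_z) samples, starting at 0."""
    angle = 0.0
    for gyro_rate, accel_x, accel_z in samples:
        angle = complementary_filter(angle, gyro_rate, accel_x, accel_z, dt, alpha)
    return angle

# Hypothetical readings from a robot standing still at a 45 degree lean.
still = [(0.0, 6.94, 6.94)] * 500
print(round(math.degrees(estimate_tilt(still, dt=0.01)), 1))
```

Even though the estimate starts at zero, the accelerometer term keeps nudging it until it settles on the true lean angle.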

Weight Transfer Dynamics

Imagine a robot walking across uneven terrain without face-planting—that’s where weight transfer dynamics become a robotic engineering magic trick.

These aren’t just fancy movements; they’re precise calculations of how joints flex and muscles (well, actuators) shift weight. Joint articulation lets robots mimic human motion, creating smooth shifts that look almost natural.

Think of it like a high-stakes dance where every pivot and step is mathematically choreographed.

Sensors constantly measure the robot’s center of gravity, making split-second adjustments that prevent embarrassing tumbles.

By tracking terrain changes and redistributing weight strategically, robots can now walk, climb, and navigate complex surfaces with a grace that would make their clunky ancestors jealous.
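The center-of-gravity bookkeeping can be sketched in a few lines. This example assumes a side-on 2D view in which the robot is a handful of point masses and the feet cover a stretch of ground between two x-coordinates; all names and numbers are hypothetical, and it checks static balance only, not the dynamic balance used while walking.

```python
def center_of_mass(parts):
    """Mass-weighted average position of (mass_kg, (x, y)) parts."""
    total = sum(mass for mass, _ in parts)
    x = sum(mass * pos[0] for mass, pos in parts) / total
    y = sum(mass * pos[1] for mass, pos in parts) / total
    return x, y

def is_statically_stable(parts, support_min_x, support_max_x):
    """True if the center of mass sits above the foot contact region."""
    com_x, _ = center_of_mass(parts)
    return support_min_x <= com_x <= support_max_x

# Hypothetical robot: a 10 kg torso over the feet, a 5 kg arm reaching forward.
robot = [(10.0, (0.0, 1.0)), (5.0, (0.3, 0.5))]
print(is_statically_stable(robot, -0.05, 0.15))  # True
```

Stretch the arm further or shrink the foot and the check flips to False, which is the cue for a controller to shift weight before the robot tips.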

Who said robots are stiff? Not anymore. Closed-loop systems enable robots to continuously monitor and correct their movements, ensuring precision and adaptability across diverse environments.
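A closed loop just means measure, compare, correct, repeat. Here is a minimal sketch using a proportional-derivative (PD) controller on a single joint modeled as a unit mass. The gains, time step, and function names are assumptions picked so the example settles cleanly; real controllers are tuned per joint and deal with noise, delays, and actuator limits.

```python
def pd_step(target, position, velocity, kp=20.0, kd=8.0, dt=0.01):
    """One control cycle: measure the error, apply a correction, integrate."""
    accel = kp * (target - position) - kd * velocity  # push toward target, damp motion
    velocity += accel * dt
    position += velocity * dt
    return position, velocity

def settle(target, steps=500):
    """Run the loop from rest at position 0 and return the final position."""
    position, velocity = 0.0, 0.0
    for _ in range(steps):
        position, velocity = pd_step(target, position, velocity)
    return position

print(round(settle(1.0), 3))  # 1.0
```

Drop the damping gain `kd` to zero and the same loop overshoots and rings, which is roughly what a stiff, clunky robot looks like.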

Sensor Technologies Enabling Natural Movement

Because robots aren’t just sci-fi fantasies anymore, sensor technologies are transforming how machines move and interact with the world around them.

Advanced artificial intelligence now lets robots navigate environments like seasoned explorers, using LIDAR (laser ranging) and stereo vision to build detailed maps of the terrain.

Imagine robots feeling surfaces through capacitive sensors, detecting emotional cues via microphone inputs, and dodging obstacles with infrared and ultrasound proximity detection.

These aren’t clunky mechanical movements anymore—they’re fluid, adaptive interactions that blur the line between machine and living creature.

Want proof? Check out how proximity sensors help robots adjust movements in milliseconds, creating motion so natural you might forget you’re watching a machine.
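As a simple illustration of how a proximity reading can shape motion, the sketch below scales a robot’s speed by the distance to the nearest obstacle. The `safe_speed` function and its thresholds are hypothetical; actual safety limits come from the robot’s specifications and the relevant safety standards.

```python
def safe_speed(distance_m, max_speed=1.0, stop_distance=0.2, slow_distance=1.0):
    """Speed limit in m/s given the distance to the nearest obstacle.

    Full speed beyond slow_distance, a full stop inside stop_distance,
    and a linear ramp in between so the robot eases off smoothly.
    """
    if distance_m <= stop_distance:
        return 0.0
    if distance_m >= slow_distance:
        return max_speed
    return max_speed * (distance_m - stop_distance) / (slow_distance - stop_distance)

for d in (2.0, 0.6, 0.1):
    print(d, safe_speed(d))
```

Because the ramp is continuous, the robot glides to a halt as something approaches instead of slamming on the brakes.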

Machine learning algorithms continuously refine robotic perception, enabling increasingly sophisticated and nuanced environmental interactions.

The future isn’t just coming—it’s already dancing around you.

Machine Learning in Movement Precision

Let’s face it: robots aren’t learning to move like humans by accident. Machine learning is the secret sauce transforming manufacturing robots from clunky machines to precision performers.

How? By crunching massive datasets of human movement and turning them into robotic magic. Deep reinforcement learning enables robots to transform digital simulations into real-world adaptive behaviors.

- Neural networks predict movement trajectories with uncanny accuracy

- Reinforcement learning lets robots practice movements like a toddler learning to walk

- Motion capture data helps robots mimic human kinematic subtleties

- AI systems adapt robot behavior in real-time based on environmental feedback

Think of it like a digital dance instructor constantly whispering, “No, move your elbow like THIS.”

These algorithms aren’t just programming movements; they’re teaching robots to think, adapt, and flow. The result? Robots that move so naturally, you might just forget they’re machines.
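The "toddler learning to walk" idea can be shown with tabular Q-learning, one of the simplest reinforcement learning methods. This is a toy sketch, not how production robots are trained: the "robot" lives on a five-cell corridor, can only step back or forward, and earns a reward for reaching the far end. Every name and parameter here is an assumption for illustration.

```python
import random

N_STATES = 5          # positions 0..4 along a toy corridor; 4 is the goal
ACTIONS = (-1, +1)    # step back, step forward

def choose(q_row, epsilon, rng):
    """Epsilon-greedy choice with random tie-breaking."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_row))
    best = max(q_row)
    return rng.choice([a for a, value in enumerate(q_row) if value == best])

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a table of action values by repeated trial and error."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    goal = N_STATES - 1
    for _ in range(episodes):
        state = 0
        while state != goal:
            action = choose(q[state], epsilon, rng)
            nxt = min(max(state + ACTIONS[action], 0), goal)
            reward = 1.0 if nxt == goal else 0.0
            future = 0.0 if nxt == goal else max(q[nxt])  # no value after the goal
            q[state][action] += alpha * (reward + gamma * future - q[state][action])
            state = nxt
    return q

def greedy_policy(q):
    """Best-known action (-1 or +1) for every non-goal state."""
    return [ACTIONS[max(range(len(ACTIONS)), key=lambda a: row[a])] for row in q[:-1]]

print(greedy_policy(train()))  # [1, 1, 1, 1]
```

Nobody told the agent that "forward" was the right answer; it worked that out from rewards alone. Scale the same loop up to a simulated body with dozens of joints and you have the rough shape of how walking policies are learned.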

Emotional Intelligence and Movement Dynamics

You might think robots are just cold, mechanical beings, but their movements can actually tell a rich emotional story.

By studying how humans interpret motion, engineers are creating robots that can signal feelings through the way they move—imagine a robot tilting its head with just the right hint of curiosity or sympathy.

These subtle dynamic movements aren’t just programmed tricks; they’re sophisticated translations of human-like emotional intelligence, turning what could be a clunky machine into something that feels surprisingly alive.

Advances in neuromorphic computing now enable robots to develop increasingly nuanced motion patterns that more closely mimic human emotional expression and interaction dynamics.

Emotion Through Motion

While robots might seem like cold, calculating machines, their future lies in mastering the subtle art of emotional communication through movement.

Emotion through motion isn’t just sci-fi fantasy—it’s how human beings actually connect and understand each other. Consider these game-changing insights:

- Advanced sound tech lets robots detect vocal emotional states

- Fluid movements can communicate empathy without a single word

- Biological motion reveals deep emotional intelligence

- Congenitally blind people prove we intuitively read movement dynamics

Your robotic buddy isn’t just processing data; it’s learning to “speak” in physical language.

Think of it like a dance where every gesture tells a story. Robots are becoming less about cold algorithms and more about nuanced interactions that feel weirdly, wonderfully human.

Who knew machines could get so good at reading between the lines—or in this case, between the movements?

Sensing Human Dynamics

From emotional whispers to robotic revelations, understanding human dynamics isn’t just about decoding movements—it’s about mimicking the intricate ballet of human interaction.

Think of sensing technologies as robotic superpowers that translate our complex emotional landscapes into precise mechanical language. Microphones capture vocal nuances, while capacitive sensors register touch and contact with near-human sensitivity.

Want to know how robots learn to move like us? They’re basically studying our every twitch and gesture, analyzing the subtle choreography of human-like movement. It’s not just about mimicking; it’s about understanding the cultural and contextual layers that make motion meaningful.

Proximity sensors become these robots’ sixth sense, helping them navigate social spaces with an almost intuitive grace. Who knew machines could be such sophisticated dance partners?

People Also Ask About Robots

What Is the Technology Behind Robots?

You’ll find robot technology combines electric motors, advanced sensors, AI algorithms, and motion capture data to create precise, adaptive movements that mimic human-like motion with increasing sophistication and natural fluidity.

Why Do Robots Move Like That?

You’ve noticed robots move differently because they use advanced sensors, motion capture data, and AI algorithms that analyze and mimic human locomotion, allowing them to generate smoother, more natural trajectories.

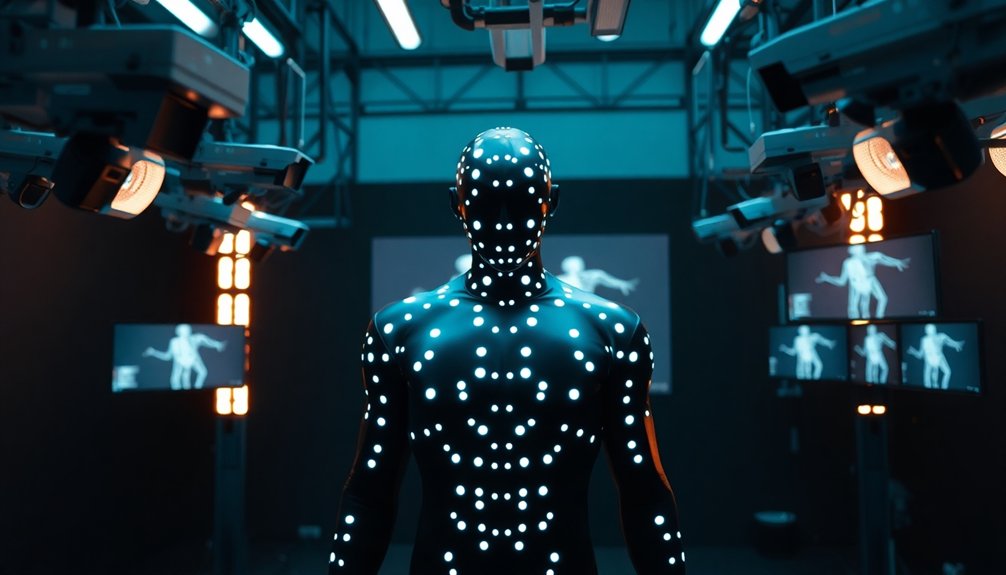

What Is the Science Behind the Robot Hand?

You’ll find robot hands blend advanced actuators, sophisticated sensors, and biomimetic design to simulate human-like movement. Complex algorithms and precision engineering enable these mechanical marvels to grasp, manipulate, and interact with objects intelligently.

What Is the Physics Behind Robots?

You’d think robots are just clunky machines, but they’re actually sophisticated marvels of physics! Kinematics, actuators, and biomimetic design collaborate to create fluid movements, translating complex mathematical principles into seemingly natural, dynamic locomotion.

Why This Matters in Robotics

You’ve hit the jackpot of robotic motion magic. These cutting-edge technologies aren’t just making robots move—they’re teaching machines to dance with human-like grace. From motion capture to biomimetic design, we’re watching technology blur the lines between mechanical and organic. The future isn’t about stiff, clunky robots anymore. It’s about fluid, intuitive movement that’ll make you do a double-take and wonder: Are they machines or distant cousins?