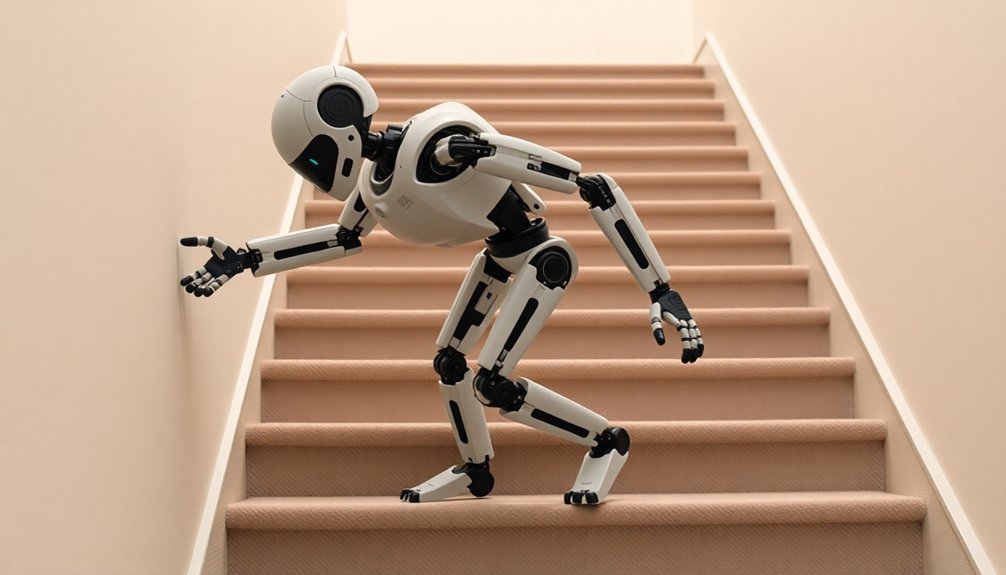

Humanoid robots hit a wall when they face stairs. Why? Their sensors can’t read complex surfaces the way we do. Uneven steps, subtle height changes, and random damage are kryptonite for machine perception. It’s not just about motors and balance; it’s about understanding spatial context. Our brains turn raw visual data into intuitive movement, while robots get stuck processing pixels. Curious how robots might crack this problem? Stick around.

The Physics of Balance: Why Stairs Are a Robotic Nightmare

Imagine trying to walk up a staircase while balancing a stack of dinner plates on your head, and you’ll start to understand why stairs are a robotic nightmare.

Every step is a complex physics puzzle that demands millisecond-perfect calculations. Robots must constantly adjust their center of mass, manage friction, and balance dynamically across uneven 3D surfaces. Sensor fusion, which integrates multiple data sources into a single estimate, gives robots the real-time spatial awareness they need on every step.
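To make sensor fusion concrete, here is a minimal sketch of one classic technique: a complementary filter that blends a gyroscope and an accelerometer into a single tilt estimate. It is not taken from any particular robot; the sample format, the 0.98 blend weight, and the pitch axis convention are illustrative assumptions, and real humanoids typically use richer estimators (such as Kalman filters) over many more sensors.

```python
import math


def accel_pitch(ax: float, az: float) -> float:
    """Pitch (rad) implied by the gravity vector the accelerometer measures.

    Axis conventions vary between IMUs; this assumes x forward, z up.
    """
    return math.atan2(ax, az)


def complementary_filter(samples, dt: float, alpha: float = 0.98) -> float:
    """Fuse gyro rates and accelerometer readings into one pitch estimate.

    samples: iterable of (gyro_rate_rad_s, ax, az) tuples (a hypothetical format).
    alpha:   weight on the integrated gyro; 1 - alpha goes to the accelerometer.
    """
    pitch = 0.0
    for gyro_rate, ax, az in samples:
        # Integrating the gyro is smooth but drifts with any bias;
        # the accelerometer is noisy but does not drift. Blend the two.
        pitch = alpha * (pitch + gyro_rate * dt) + (1.0 - alpha) * accel_pitch(ax, az)
    return pitch


# Hypothetical case: a robot tilted a steady 0.1 rad whose gyro has a 0.05 rad/s bias,
# sampled at 100 Hz for 10 seconds.
tilt = 0.1
samples = [(0.05, 9.81 * math.sin(tilt), 9.81 * math.cos(tilt))] * 1000
print(complementary_filter(samples, dt=0.01))
```

With these made-up numbers the estimate settles near 0.12 rad, a small steady offset from the true 0.1 rad, whereas integrating the biased gyro alone would have drifted by 0.5 rad over the same 10 seconds.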

Navigating stairs: a robotic high-wire act of physics, balance, and split-second computational gymnastics.

It’s like performing a high-stakes gymnastics routine while solving calculus problems at the same time. The challenge isn’t just movement; it’s real-time perception and split-second adaptation.

Our robotic friends must process terrain variations, calculate precise foot trajectories, and compensate for potential slips faster than you can blink. In work on the DARwIn-OP robot, for example, researchers found that zero moment point (ZMP) calculations are crucial for maintaining stability on challenging surfaces.
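For readers curious what a zero moment point calculation looks like, the sketch below uses the textbook cart-table (linear inverted pendulum) approximation, in which the ZMP depends only on the center of mass position, its acceleration, and its height. This is a generic illustration, not the method used in the DARwIn-OP work; the function names and numbers are hypothetical.

```python
G = 9.81  # gravitational acceleration (m/s^2)


def zmp_cart_table(com_x: float, com_acc_x: float, com_height: float) -> float:
    """ZMP position along x for the cart-table model.

    Assumes the center of mass (CoM) moves at a constant height com_height.
    """
    return com_x - (com_height / G) * com_acc_x


def zmp_inside_foot(zmp_x: float, heel_x: float, toe_x: float) -> bool:
    """True if the ZMP lies within the foot's support interval [heel_x, toe_x]."""
    return heel_x <= zmp_x <= toe_x


# Hypothetical numbers: CoM 0.8 m high and 5 cm ahead of the ankle,
# foot spanning from 5 cm behind to 15 cm in front of the ankle.
for acc in (1.0, 3.0):
    zmp = zmp_cart_table(0.05, acc, 0.8)
    print(acc, round(zmp, 3), zmp_inside_foot(zmp, -0.05, 0.15))
```

Accelerating the center of mass forward pushes the ZMP backward: at 1 m/s² it stays under the foot, but at 3 m/s² it moves behind the heel, which in this model means the robot starts to tip.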

And let’s be honest: most robots would rather face a flat sidewalk than these treacherous vertical obstacles that humans navigate without a second thought. The CL-1 humanoid robot demonstrates remarkable progress in dynamic stair climbing, showing how advanced perception and motion control algorithms can help robots tackle these challenging terrains.

Sensory Limitations: How Robots Struggle to See and Adapt

Even setting the physics of staircases aside, robots face a more bewildering obstacle: actually seeing and understanding their environment. A humanoid’s sensor arrays report on both the outside world and the robot’s own internal state, which enables basic perception. AI algorithms then process and fuse these inputs into a more complete picture of the surroundings, but that understanding is improving only gradually.

Our mechanical friends might have killer motors and precision engineering, but their visual perception? It’s like giving a toddler a microscope. They struggle with depth, lighting changes, and recognizing objects that aren’t perfectly positioned.
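Depth is a good example of why. A stereo camera pair estimates distance from disparity, the pixel shift of a point between the two images, and the depth error grows with the square of the distance. The sketch below assumes an idealized rectified pair with a hypothetical 700-pixel focal length, 6 cm baseline, and half-pixel matching error; real sensors differ.

```python
def stereo_depth(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (m) from disparity for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(focal_px: float, baseline_m: float, depth_m: float,
                      disparity_err_px: float = 0.5) -> float:
    """First-order depth error for a given disparity error: dZ = Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)


# Hypothetical head camera: 700 px focal length, 6 cm baseline.
f, b = 700.0, 0.06
print(stereo_depth(f, b, 42.0))
for z in (1.0, 2.0, 3.0):
    print(z, round(depth_uncertainty(f, b, z), 3))
```

Under these assumptions the uncertainty is about 1 cm at 1 m but roughly 11 cm at 3 m, a large fraction of a typical stair riser, and that is before lighting changes degrade the matching.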

Imagine a robot trying to navigate a cluttered living room—it’s basically a high-tech pinball machine bumping into everything. Current vision systems are impressive but limited. They can process images, but genuinely understanding spatial relationships? That’s still science fiction.

Machine learning is slowly improving this, but we’re talking baby steps. Can robots eventually see like humans? Maybe. But right now, they’re more like very expensive, very confused cameras with legs.

AI and Machine Learning: The Quest for Robotic Spatial Intelligence

Robots are making progress on physical challenges like stairs, but their ability to genuinely understand spatial environments remains frustratingly limited. Neural networks and advanced sensors are helping AI build richer, more detailed models of complex 3D environments. Companies are now developing large world models to improve AI’s spatial comprehension and interaction capabilities.

We’re pushing the boundaries of machine perception, teaching AI to see beyond flat images and comprehend three-dimensional spaces. Sensor fusion, which combines LIDAR with camera-based computer vision, treats sensor data like a complex puzzle, helping robots recognize objects, predict movements, and make split-second decisions.
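One simple way to combine a LIDAR reading with a camera-based estimate is inverse-variance weighting, which trusts each sensor in proportion to its precision; it is the same arithmetic as a one-dimensional Kalman filter measurement update. The readings and noise levels below are hypothetical, and production systems fuse full 3D data rather than single numbers.

```python
def fuse_estimates(z1: float, var1: float, z2: float, var2: float):
    """Inverse-variance weighted fusion of two independent measurements.

    Returns (fused_value, fused_variance). The less noisy sensor gets more weight,
    and the fused variance is smaller than either input variance.
    """
    w1, w2 = 1.0 / var1, 1.0 / var2
    fused = (w1 * z1 + w2 * z2) / (w1 + w2)
    return fused, 1.0 / (w1 + w2)


# Hypothetical readings of the distance to the next stair edge:
# LIDAR says 1.00 m (std. dev. 1 cm), stereo vision says 1.10 m (std. dev. 5 cm).
value, variance = fuse_estimates(1.00, 0.01 ** 2, 1.10, 0.05 ** 2)
print(round(value, 4), round(variance ** 0.5, 4))
```

The fused distance lands close to the more precise LIDAR reading, and its uncertainty is slightly smaller than either sensor’s alone.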

Machine perception evolves: AI transforms flat images into dynamic, intelligent spatial understanding.

But let’s be real: we’re still miles away from human-like spatial intelligence. Current AI can navigate a room, but ask it to understand the emotional context of how a drawer is opened? Total blank.

The challenge isn’t just technical; it’s about recreating the nuanced, intuitive understanding that humans take for granted. We’re making progress, but spatial intelligence remains robotics’ most fascinating frontier.

From Lab to Reality: Real-World Challenges in Stair Navigation

Spatial intelligence might work magic in laboratory simulations, but stair navigation reveals the raw, unfiltered challenges of robotic mobility.

We’ve discovered that real-world stairs aren’t neat geometric puzzles; they’re messy, unpredictable obstacles that laugh at our precise algorithms. Learning-based controllers built on neural networks may help bridge this mobility gap.

Uneven heights, damaged steps, and complex terrain transform a simple climbing task into a robotic nightmare.
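Here is a deliberately simplified sketch of how a robot might pull step edges out of a single height scan, such as one LIDAR line projected onto the walking direction. It flags any height jump above a threshold and reports each rise separately, so uneven steps are not forced into one uniform staircase. The scan format, threshold, and names are assumptions for illustration; real systems typically fit planes to 3D point clouds and must also cope with occlusion and damaged edges.

```python
from dataclasses import dataclass


@dataclass
class Step:
    edge_x: float  # horizontal position of the step edge (m)
    rise: float    # height change at the edge (m); negative for a step down


def detect_steps(xs, heights, min_rise=0.05):
    """Find stair edges in an (x, height) scan sorted by x, by thresholding jumps."""
    steps = []
    for i in range(1, len(xs)):
        dz = heights[i] - heights[i - 1]
        if abs(dz) >= min_rise:  # smaller changes are treated as sensor noise
            steps.append(Step(edge_x=xs[i], rise=dz))
    return steps


def step_runs(steps):
    """Tread depth between consecutive detected edges."""
    return [b.edge_x - a.edge_x for a, b in zip(steps, steps[1:])]


# Hypothetical scan: uneven stairs sampled every 5 cm, plus 1 cm of noise
# on every other sample.
xs = [0.05 * i for i in range(24)]
heights = [0.0] * 6 + [0.17] * 6 + [0.37] * 6 + [0.52] * 6
heights = [h + (0.01 if i % 2 else 0.0) for i, h in enumerate(heights)]

steps = detect_steps(xs, heights)
print([round(s.rise, 2) for s in steps], [round(r, 2) for r in step_runs(steps)])
```

In this made-up scan the detector finds three edges with rises of about 16, 19, and 14 cm and treads of 30 cm, while the 1 cm noise stays below the 5 cm threshold.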

Current humanoid robots stumble where humans stride effortlessly, struggling with balance, dynamic perception, and adaptive movement. The Tien Kung robot’s breakthrough shows that some humanoids can now navigate complex outdoor stairs, offering a glimpse of progress. Deployment brings its own hurdles, too: limited infrastructure in settings such as healthcare makes widespread rollout difficult and shows how much integration autonomous mobility requires.

It’s not just about calculating step dimensions; it’s about reading terrain in real-time, adjusting weight distribution instantly, and maintaining stability through constant micro-adjustments.
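One standard tool for those instant weight-shift decisions is the capture point from the linear inverted pendulum model: the spot on the ground where the robot would need to place its foot to come to a stop. The sketch below is a textbook version under stated assumptions (constant center-of-mass height, point-mass body, hypothetical numbers), not a description of any robot mentioned here.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)


def capture_point(com_x: float, com_vel_x: float, com_height: float) -> float:
    """Instantaneous capture point of a linear inverted pendulum: x + v / omega."""
    omega = math.sqrt(G / com_height)  # natural frequency of the pendulum (1/s)
    return com_x + com_vel_x / omega


def must_step(cp_x: float, heel_x: float, toe_x: float) -> bool:
    """In this simple model, a capture point outside the foot means ankle torque
    alone cannot bring the robot to rest, so it has to take a step."""
    return not (heel_x <= cp_x <= toe_x)


# Hypothetical: CoM 0.9 m high, directly above the ankle, drifting forward at 0.5 m/s;
# the foot extends from 5 cm behind to 12 cm in front of the ankle.
cp = capture_point(0.0, 0.5, 0.9)
print(round(cp, 3), must_step(cp, -0.05, 0.12))
```

Here the capture point sits about 15 cm ahead of the ankle, past the hypothetical 12 cm toe, so the model says the robot must step rather than recover with ankle torque alone.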

The gap between lab prototypes and street-ready robots? It’s a canyon, and stairs are just the beginning.

The Future of Robotic Mobility: Breaking Through Current Technological Barriers

Because the world of robotics moves faster than a caffeinated cheetah, we’re standing on the brink of a mobility revolution that’ll make our current robots look like clunky toddlers. Humanoid robots are becoming increasingly adaptable in manufacturing and other industrial environments. Mecanum wheels let wheeled platforms move in any direction, including sideways, without first turning. Workforce displacement trends suggest these advances are already reshaping employment across multiple industries.
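For the curious, mecanum wheel motion comes down to a short piece of inverse kinematics: each wheel’s speed is a signed sum of the desired forward, sideways, and turning velocities. The sketch assumes a rectangular four-wheel chassis with the common X roller layout; signs flip for other roller arrangements, and the dimensions in the usage line are hypothetical.

```python
def mecanum_wheel_speeds(vx, vy, wz, half_length, half_width, wheel_radius):
    """Wheel angular speeds (rad/s) for a desired body velocity.

    vx: forward speed (m/s), vy: leftward speed (m/s), wz: counterclockwise turn (rad/s).
    Returns (front_left, front_right, rear_left, rear_right).
    Assumes the X roller layout; other layouts change the signs.
    """
    k = half_length + half_width  # lever arm that converts turning into wheel speed
    front_left = (vx - vy - k * wz) / wheel_radius
    front_right = (vx + vy + k * wz) / wheel_radius
    rear_left = (vx + vy - k * wz) / wheel_radius
    rear_right = (vx - vy + k * wz) / wheel_radius
    return front_left, front_right, rear_left, rear_right


# Hypothetical chassis: 0.2 m half-length, 0.15 m half-width, 5 cm wheel radius.
print(mecanum_wheel_speeds(0.0, 1.0, 0.0, 0.2, 0.15, 0.05))  # strafe left at 1 m/s
```

Strafing left spins the front-left and rear-right wheels backward and the other two forward, which is what lets the platform slide sideways without turning.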

Our robotic future is taking shape through:

- AI-powered learning that lets robots adapt like Swiss Army knives

- Advanced motion systems mimicking human-like movements

- Real-time processing that turns complex algorithms into fluid actions

- Modular platforms enabling rapid customization

We’re watching humanoid robots transform from science fiction fantasies into practical tools. Boston Dynamics’ Atlas isn’t just doing cartwheels; it’s rewriting what’s possible in robotic mobility.

Sure, challenges remain in balance, environmental adaptation, and cost, but we’re making quantum leaps.

Robotic frontiers beckon: technical hurdles can’t halt our breathtaking leap into machine intelligence.

Imagine robots seamlessly maneuvering through warehouses, assisting in healthcare, transforming industrial automation. The next decade won’t just improve robots; it’ll revolutionize how we think about machine intelligence.

People Also Ask

Why Can’t Humanoid Robots Simply Copy Human Stair-Climbing Movements?

We can’t simply copy human stair-climbing because robots lack our intricate balance, sensory feedback, and precise limb coordination. Our complex physiology and dynamic movement adaptation are incredibly challenging to replicate mechanically.

How Much Does a Stair-Climbing Robot Actually Cost?

We’ve found stair-climbing robots aren’t cheap: prices start around $1,309 for the Dreame X50 Ultra and can run thousands of dollars higher for specialized models like ROSA BETA, depending on technology complexity and payload capacity.

Are Stairs More Challenging Than Other Terrain for Robots?

We saw Atlas stumble on stairs during a test, which shows just how complex stairs are. They challenge robots more than flat terrain because they demand discrete steps, precise foot placement, and dynamic balance, all of which test a robot’s navigation and stability algorithms.

Could a Child Teach a Robot to Climb Stairs?

We believe children could help robots learn stair climbing by providing intuitive feedback and demonstrating natural movement. Their adaptive learning styles and playful interactions might reveal innovative solutions to complex robotic navigation challenges.

Will Robots Ever Climb Stairs as Naturally as Humans?

Rome wasn’t built in a day, and perfect stair-climbing robots won’t be either. We’re making significant strides in humanoid robotics, integrating AI, advanced sensors, and machine learning to tackle complex locomotion challenges with increasing sophistication and precision.

The Bottom Line

Like tightrope walkers balancing on a razor’s edge, robots are inching closer to mastering complex mobility. We’ve seen incredible progress, but stairs remain our technological Everest. As machine learning and sensor technologies evolve, we’re not just building better robots; we’re redefining the boundaries of artificial intelligence. The journey’s just beginning, and each stumble brings us closer to a future where robots move as naturally as we do.
