Reinforcement learning turns robots from dumb machines into curious learners. By constantly experimenting and failing, robots develop new skills through intelligent trial-and-error. They receive rewards for successful actions and penalties for mistakes, gradually refining their strategies. Imagine a robot learning to walk by falling dozens of times, then suddenly mastering precise movements. Neural networks help them process complex inputs, predict outcomes, and adapt quickly. Stick around, and you’ll see how robots are becoming tomorrow’s problem-solvers.

Understanding Reinforcement Learning Fundamentals

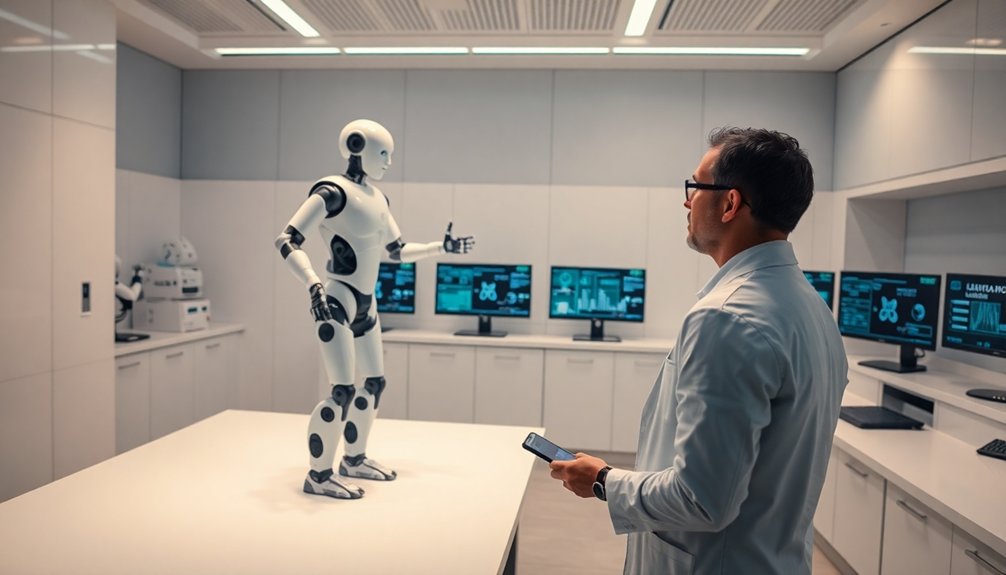

The robot’s brain: a curious landscape of trial and error. Reinforcement learning isn’t just fancy tech—it’s how machines learn like curious kids poking at the world. Neural networks transform robots from rigid machines into adaptive learners that can process complex environmental inputs.

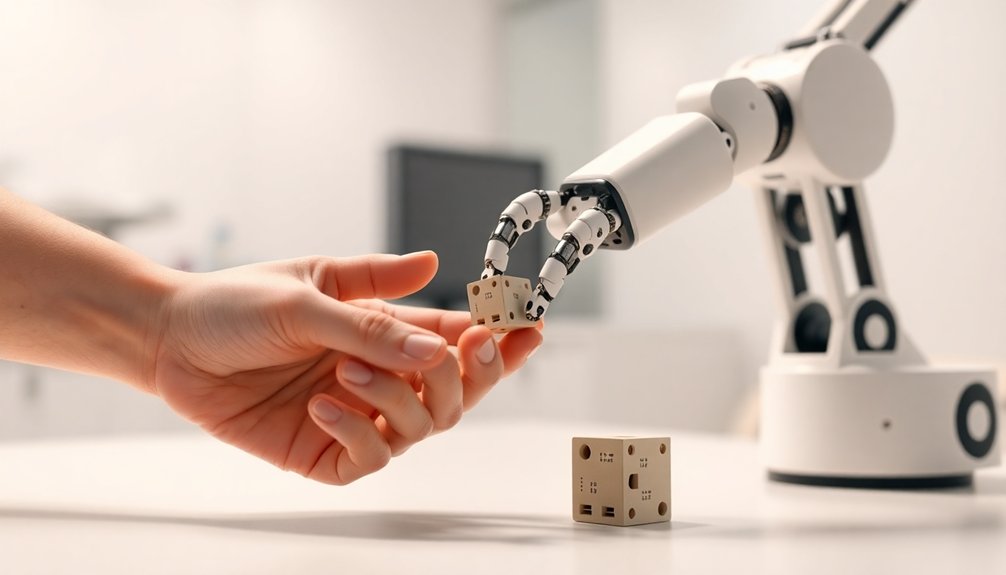

Imagine a robot trying to grasp a cup, failing, then adjusting its grip based on instant feedback. That’s robot learning in action. The learning process isn’t about programmed perfection, but continuous adaptation.

A mechanical student stumbles, recalibrates, learns—transforming each misstep into a precise dance of adaptive intelligence.

Your mechanical student watches, acts, receives rewards or penalties, and gradually develops smarter strategies. It’s like teaching a very precise, slightly awkward pupil who never gets tired.

The magic happens in those repeated attempts: each failure isn’t a dead-end, but a data point. Will the robot eventually master that delicate cup? Spoiler alert: probably.

And that’s the beautiful, slightly weird promise of reinforcement learning.
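The act, get feedback, adjust loop described above can be sketched in a few lines of Python. Treat this as a toy under loud assumptions: the single "grip width" number, the 7 cm cup, and the reward function are all made up for illustration, and the keep-what-worked search is a simple stand-in for trial and error, not a full reinforcement learning algorithm or anything a specific robot runs.

```python
import random

CUP_WIDTH_CM = 7.0  # hypothetical target the robot does not know in advance


def grasp_reward(grip_width_cm):
    """Made-up feedback signal: closer grips score higher (0.0 is a perfect grip)."""
    return -abs(grip_width_cm - CUP_WIDTH_CM)


def learn_grip(attempts=200, seed=0):
    """Try nearby grips, keep any that earn a better reward, log every attempt."""
    rng = random.Random(seed)
    best_width = 5.0                      # initial guess, in cm
    best_reward = grasp_reward(best_width)
    history = []
    for _ in range(attempts):
        candidate = best_width + rng.uniform(-1.0, 1.0)  # act: try a nearby grip
        reward = grasp_reward(candidate)                 # receive feedback
        history.append((candidate, reward))              # a failure is still a data point
        if reward > best_reward:                         # adjust: keep what worked better
            best_width, best_reward = candidate, reward
    return best_width, best_reward, history


if __name__ == "__main__":
    width, reward, history = learn_grip()
    print(f"best grip after {len(history)} attempts: {width:.2f} cm (reward {reward:.3f})")
```

Every failed attempt still lands in `history`, which is the point of the paragraph above: the misses are what steer the next try.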

Key Components of Machine Learning Strategies

Building on our robotic exploration of learning through trial and error, machine learning strategies aren’t just mathematical mumbo-jumbo—they’re the secret sauce that transforms robots from clunky metal workers into adaptable problem-solvers. Deep reinforcement learning enables robots to evolve through sophisticated neural networks that can learn complex behaviors across diverse environments.

Reinforcement learning lets robots learn by testing actions, receiving rewards, and refining their approach. Think of it like a video game where each successful move earns points, but in the real world.

Exploration and exploitation become essential strategies: robots must boldly try new actions while also leveraging what they already know works. It’s a delicate dance of risk and reward.
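One common way to strike that balance is an epsilon-greedy rule: most of the time pick the action that has looked best so far, and occasionally pick one at random. Here is a minimal sketch, assuming a made-up set of three actions with noisy payoffs. The payoff numbers, the 10% exploration rate, and the function name are illustrative choices, not values from any real robot.

```python
import random


def epsilon_greedy_bandit(true_means, steps=5000, epsilon=0.1, seed=0):
    """Estimate the average payoff of each action while mostly picking the best one."""
    rng = random.Random(seed)
    n_actions = len(true_means)
    counts = [0] * n_actions          # how often each action was tried
    estimates = [0.0] * n_actions     # running average reward per action
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.randrange(n_actions)                           # explore
        else:
            action = max(range(n_actions), key=lambda a: estimates[a])  # exploit
        reward = rng.gauss(true_means[action], 1.0)                     # noisy feedback
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the new reward.
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates, counts


if __name__ == "__main__":
    estimates, counts = epsilon_greedy_bandit([0.2, 0.5, 1.5])
    print("estimated payoffs:", [round(e, 2) for e in estimates])
    print("times each action was tried:", counts)
```

With no exploration at all, the learner can lock onto the first action that happens to pay off. With too much, it wastes attempts on moves it already knows are poor.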

Value functions help robots evaluate action quality, guiding them toward smarter decisions. Through clever function approximation, robots can generalize skills across different scenarios, becoming more flexible and intelligent with each interaction.
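For readers who want the math, the standard textbook definitions look like this. The symbols are not from the article: $\pi$ is the robot's strategy (policy), $\gamma \in [0, 1)$ is a discount factor that makes near-term rewards count more, and $r_{t+1}$ is the reward received after step $t$.

$$
V^{\pi}(s) = \mathbb{E}_{\pi}\!\left[\sum_{t=0}^{\infty} \gamma^{t}\, r_{t+1} \,\middle|\, s_0 = s\right],
\qquad
Q^{\pi}(s, a) = \mathbb{E}_{\pi}\!\left[\sum_{t=0}^{\infty} \gamma^{t}\, r_{t+1} \,\middle|\, s_0 = s,\; a_0 = a\right]
$$

Function approximation means replacing a lookup table of these values with a parameterized model, $Q_{\theta}(s, a) \approx Q^{\pi}(s, a)$, so that situations the robot has never seen still get a sensible estimate from similar ones it has.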

How Robots Learn Through Experience

When you peek under the hood of robotic learning, you’ll find it’s less like programming and more like training a really smart puppy.

Reinforcement learning lets robots:

- Adapt through trial and error, just like humans learn

- Receive instant feedback on their performance

- Gradually improve skills without constant human intervention

Robots absorb experiences like sponges, transforming YouTube videos and supervised training into complex motor skills. They observe their actions, get rewarded or penalized, and recalibrate strategies.

Robots: digital learners soaking up knowledge, refining skills through intelligent trial, error, and strategic adaptation.

Deep learning algorithms help them navigate unpredictable environments, solving problems independently. Imagine a robot watching a backflip video and then—boom—executing that precise movement.

It’s not magic; it’s intelligent adaptation. As robots become more autonomous, they’re proving that learning isn’t just a human superpower. They’re basically mechanical students, constantly tweaking their approach to nail the perfect performance. NVIDIA GPU technologies enable advanced robotic simulations that accelerate learning through thousands of simultaneous training iterations.

Reward Systems and Decision-Making Processes

Imagine robots playing a complex game of chance where every action could win or lose points. That’s reinforcement learning in a nutshell. These mechanical learners navigate decision-making through constant trial and error, with reward systems acting like a high-stakes scorecard.

When a robot successfully completes a task, it gets a metaphorical gold star; when it fails, it receives a digital slap on the wrist.

The magic happens in how robots transform these rewards into smarter strategies. They’re not just blindly following instructions—they’re calculating risk, analyzing outcomes, and gradually refining their approach.

Think of it like a video game where each mistake teaches you something new. Q-learning helps robots estimate how valuable each action is in a given situation, while an exploration rule balances trying wild new moves against leveraging proven techniques. It’s learning, but with robotic precision.
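To make Q-learning concrete, here is a small tabular sketch on a toy problem. Everything about the setup is an assumption for illustration: a five-cell corridor, a reward of +1 only at the far end, and hand-picked learning rate, discount, and exploration values. Real robots deal with far larger state spaces and usually swap the table for a neural network.

```python
import random


def q_learning_corridor(n_states=5, episodes=500, alpha=0.5, gamma=0.9,
                        epsilon=0.1, seed=0):
    """Tabular Q-learning on a toy corridor.

    The agent starts in cell 0; the goal is the last cell.
    Actions: 0 = step left, 1 = step right. Reward is +1 on reaching the goal.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        while state != goal:
            if rng.random() < epsilon:
                action = rng.randrange(2)                      # explore
            else:
                best = max(q[state])                           # exploit, random tie-break
                action = rng.choice([a for a in (0, 1) if q[state][a] == best])
            next_state = max(0, state - 1) if action == 0 else state + 1
            reward = 1.0 if next_state == goal else 0.0
            # Q-learning update: move toward reward + discounted best next value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


if __name__ == "__main__":
    for state, (left, right) in enumerate(q_learning_corridor()):
        print(f"cell {state}: left={left:.3f} right={right:.3f}")
```

After training, "right" scores higher than "left" in every non-goal cell, which is the table's way of saying the robot has learned which direction pays off.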

Real-World Applications in Robotics

Reward systems aren’t just theoretical playground experiments—they’re rapidly transforming how robots interact with our messy, unpredictable world.

Reinforcement learning has pushed robots from clunky machines to nimble learners capable of mastering complex tasks with startling precision.

- Robots learning cartwheels from YouTube videos

- Machines nailing Jenga whipping like pros

- Robots flipping eggs with surgical accuracy

These aren’t sci-fi fantasies—they’re happening right now.

By combining human intervention with algorithmic learning, robots are becoming increasingly adaptable.

They’re not just following programmed instructions; they’re learning, adjusting, and improving in real-time.

Want a robot that can assemble motherboards or navigate unpredictable manufacturing environments?

Reinforcement learning is making that possible, turning once-rigid machines into dynamic problem-solvers that can learn and grow just like we do.

Neuromorphic computing is revolutionizing how robots process information, enabling more sophisticated and energy-efficient learning mechanisms.

Challenges in Teaching Robotic Skills

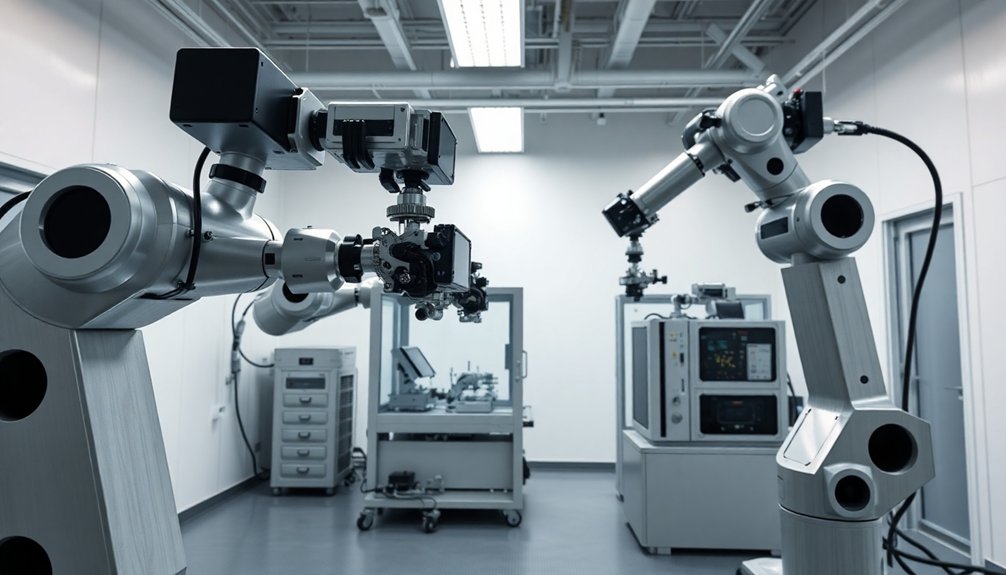

Imagine spending hours watching a robot fumble with a simple object, dropping and recovering repeatedly. Learning techniques for robotic systems demand immense patience and manual intervention. Machine learning algorithms enable robots to process sensory inputs and dynamically adjust their motor skills during complex learning scenarios.

You’ll witness robots struggling to translate human demonstrations into actionable movements, especially when interpreting complex YouTube videos with unpredictable motion patterns. Real-world learning means embracing failures as opportunities, but each mistake requires extensive recalibration. It’s like teaching a clumsy toddler, except this toddler weighs 200 pounds and costs more than your car. Welcome to the fascinating, frustrating world of robotic skill acquisition.

Advanced Techniques in Machine Learning

You’ve heard about machine learning’s wild ride, right? It’s like robots are basically growing up, learning from their mistakes just like you did when you first tried riding a bike – except these mechanical learners can rack up thousands of “falls” in seconds, rapidly evolving their skills through pure experience.

The coolest part? Modern machine learning techniques aren’t just mimicking human learning – they’re potentially surpassing our own ability to adapt, process information, and solve complex problems in ways we’re only beginning to understand.

Machine Learning Evolution

When machines started learning, nobody expected they’d become the quick-study students of the technological world. Reinforcement learning transformed robots from clunky automatons into adaptive learners capable of mastering complex skills.

- Robots now explore environments like curious children, making mistakes and improving.

- Machine learning algorithms generate strategies through trial and error.

- Unsupervised techniques allow robots to discover skills independently.

Teaching machines isn’t about programming every single action anymore. It’s about creating intelligent systems that can:

- Predict outcomes.

- Adjust behaviors.

- Learn from feedback.

Imagine a robot that learns manipulation techniques faster than a human apprentice, simply by experiencing countless simulated scenarios. Sensor fusion and adaptive control enable robots to rapidly process environmental data and refine their learning strategies.

Reinforcement learning isn’t just programming—it’s creating technological minds that can think, adapt, and grow. Who’s teaching who now?

Learning Through Experience

If robots could talk, they’d probably tell you that learning isn’t about memorizing—it’s about experiencing. Reinforcement learning lets robots train themselves through epic trial-and-error adventures, where every mistake becomes a lesson.

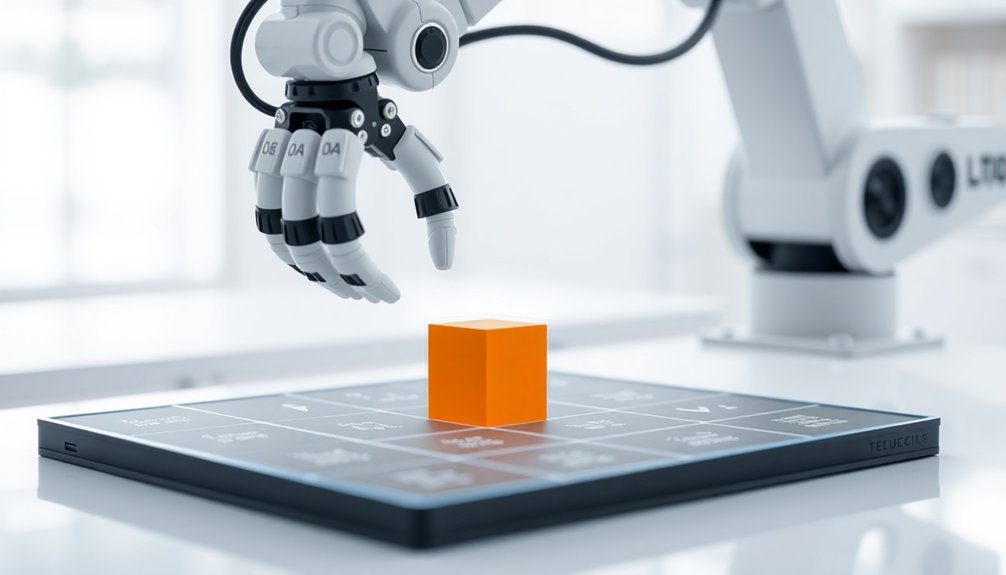

Imagine teaching machines to flip eggs or navigate complex environments by letting them experiment and earn rewards for smart moves. These robots aren’t just following pre-programmed instructions; they’re developing genuine skills by watching and mimicking human actions.

Deep learning algorithms transform robots from rigid machines into adaptive learners. They can reconstruct motions from single video demonstrations, gradually requiring less human supervision.

Think of it like teaching a curious child—except this “child” can potentially solve problems faster than you can blink. Robots trained through experience become increasingly sophisticated, turning complex challenges into playgrounds of continuous improvement. Humanoid robots are now evolving to integrate advanced learning techniques that allow them to adapt and grow beyond traditional programming limitations.

Case Studies of Successful Robotic Training

You’ve probably wondered how robots go from clumsy machines to precision performers, right?

At UC Berkeley, researchers cracked this code by teaching robots tricky skills like Jenga whipping and motherboard assembly with mind-blowing accuracy.

Imagine a robot that can flip an egg without breaking it or sort random manufacturing parts with near-perfect precision – that’s not sci-fi anymore, that’s today’s reality of AI-powered robotic training.

Jenga Whipping Mastery

While most people imagine robots as clumsy mechanical beings struggling with basic tasks, UC Berkeley’s recent Jenga whipping experiments shatter that stereotype completely. Their reinforcement learning approach transformed robots from awkward machines into precision skill masters, proving training data isn’t just numbers—it’s potential.

- Robots learned Jenga whipping with a jaw-dropping 100% success rate

- Human trainers used specialized computer mice to guide robotic movements

- Complex tasks became playgrounds for mechanical learning

The secret sauce? Interactive training that lets robots learn through trial and error, with humans providing strategic corrections.

Motherboard Assembly Precision

When most people picture robotics, they imagine clunky machines fumbling with screwdrivers and dropping tiny parts—but UC Berkeley’s groundbreaking motherboard assembly research just rewrote that narrative.

Their reinforcement learning system transformed robots from bumbling novices into precision instruments that can dance through complex assembly tasks. By letting robots learn from human demonstrations and real-world mistakes, researchers cracked the code of adaptive machine learning.

Want proof? These mechanical prodigies now reach a reported 100% success rate in delicate tasks like positioning tiny motherboard components.

The learning system doesn’t just mimic human actions—it improves upon them, turning robotic training from a clumsy imitation game into a sophisticated skill-building workshop.

Who knew robots could be such quick studies?

The Role of Human Intervention in Robot Learning

Because robots aren’t born knowing how to do stuff, humans have become critical teachers in the emerging world of machine learning. UC Berkeley researchers developed a groundbreaking training system that lets humans guide robots in real-time, teaching them complex tasks like flipping eggs and manipulating timing belts. Digital twin technology provides a virtual training environment that allows robots to practice and learn advanced skills without physical risks.

- Humans provide instant corrections during robot learning

- Robot accuracy improves with each intervention

- Training reflects real-world complexity and challenges

This approach transforms learning from rigid programming to dynamic interaction. By offering feedback, humans help robots shift from clumsy novices to precise performers.

Initially, robots need constant supervision, but they gradually become more independent. The result? Machines that can learn, adapt, and execute tasks with remarkable 100% accuracy – proving that the right teaching method can turn even the most awkward robot into a skilled apprentice.
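The intervention idea can be sketched as a thin wrapper around the robot's own decision: the policy proposes an action, a human may override it, and the system records who was in charge. This is not the UC Berkeley implementation. The class, the function names, the one-number "action", and the toy supervisor rule are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional, Tuple


@dataclass
class InterventionLog:
    """Tracks how often the human had to step in (hypothetical helper)."""
    total_steps: int = 0
    human_steps: int = 0
    transitions: List[Tuple[float, float, bool]] = field(default_factory=list)

    @property
    def intervention_rate(self) -> float:
        return self.human_steps / self.total_steps if self.total_steps else 0.0


def choose_action(state: float,
                  policy: Callable[[float], float],
                  human_override: Callable[[float, float], Optional[float]],
                  log: InterventionLog) -> float:
    """Use the human's correction when one is given, otherwise the policy's action."""
    proposed = policy(state)
    correction = human_override(state, proposed)   # None means "no correction"
    intervened = correction is not None
    action = correction if intervened else proposed
    log.total_steps += 1
    log.human_steps += int(intervened)
    # Corrected steps are stored too, so a learner could later train on them.
    log.transitions.append((state, action, intervened))
    return action


def demo():
    policy = lambda state: 0.5 * state
    # Toy supervisor: steps in only when the proposed action exceeds a limit of 1.0.
    supervisor = lambda state, proposed: 1.0 if proposed > 1.0 else None
    log = InterventionLog()
    actions = [choose_action(s, policy, supervisor, log) for s in (0.0, 1.0, 2.0, 3.0, 4.0)]
    return actions, log
```

A falling `intervention_rate` over successive training sessions is one simple way to see the "gradually become more independent" claim in numbers.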

Performance Metrics and Evaluation Methods

You’ll want to know how scientists actually measure whether robots are learning or just faking it, right?

Accuracy measurement techniques are the robot world’s version of a report card, tracking how precisely machines can mimic human skills and complete complex tasks like perfectly flipping an egg or steering through a tricky assembly line.

Performance evaluation metrics aren’t just nerdy numbers—they’re the critical gateway that determines whether a robot gets to graduate from the lab to real-world jobs, separating the truly intelligent machines from the glorified mechanical toys.

Accuracy Measurement Techniques

If you’ve ever wondered how we actually measure a robot’s learning prowess, buckle up for an exploration into accuracy measurement techniques.

- Accuracy rates aren’t just numbers—they’re the report card for teaching a robot new tricks.

- Robots must demonstrate consistent performance across multiple scenarios.

- Real-world competency trumps laboratory perfection every single time.

Measuring a robot’s learning isn’t about complex algorithms; it’s about tracking how quickly and precisely it nails tasks. We compare its performance against traditional methods, looking for speed and precision.

Some advanced systems now hit 100% accuracy on complex challenges, which sounds impressive but also slightly terrifying. Feedback mechanisms help track learning evolution, showing how robots become more autonomous with less human intervention.
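As a rough illustration of how speed and precision turn into numbers, here is a small helper that summarizes a batch of evaluation trials. The trial format (a success flag plus a completion time in seconds) and the function name are assumptions made for this sketch, not a standard from any lab.

```python
from statistics import mean


def summarize_trials(trials):
    """Summarize evaluation trials given as (succeeded, seconds) pairs."""
    if not trials:
        raise ValueError("need at least one trial")
    success_times = [seconds for succeeded, seconds in trials if succeeded]
    return {
        "trials": len(trials),
        "success_rate": len(success_times) / len(trials),
        # Average time over successful trials only; None if nothing succeeded.
        "mean_success_time": mean(success_times) if success_times else None,
    }


if __name__ == "__main__":
    example = [(True, 4.0), (False, 9.0), (True, 6.0), (True, 5.0)]
    print(summarize_trials(example))
```

Reporting the number of trials next to the rate matters: a perfect score over a handful of attempts says much less than the same score over hundreds.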

The goal? Creating machines that can learn, adapt, and execute tasks with minimal supervision—turning sci-fi fantasies into industrial realities.

Skill Acquisition Benchmarks

When robots learn new skills, it’s not just about raw data—it’s about measuring their performance like a strict but quirky coach sizing up an athletic rookie.

Skill acquisition isn’t a pass/fail game; it’s a nuanced dance of precision and adaptability. You’ll want to track how quickly robots master tasks, noting accuracy rates and consistency across different challenges.

Performance metrics become your robotic report card: How many attempts does it take to nail a skill? How fast can they pivot between complex scenarios?
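The "how many attempts" question can be made precise with a mastery criterion, such as a streak of consecutive successes. A minimal sketch follows. The streak length of five is an arbitrary assumption, and the function name is made up here.

```python
def attempts_to_criterion(outcomes, streak=5):
    """Return the attempt number at which `streak` consecutive successes are first reached.

    `outcomes` is a sequence of booleans in the order the attempts happened.
    Returns None if the criterion is never met.
    """
    run = 0
    for attempt, succeeded in enumerate(outcomes, start=1):
        run = run + 1 if succeeded else 0   # any failure resets the streak
        if run >= streak:
            return attempt
    return None


if __name__ == "__main__":
    log = [False, True, False, True, True, True]
    print(attempts_to_criterion(log, streak=3))
```

Comparing this number across training methods, or across tasks, gives a simple learning-speed score to sit alongside the final success rate.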

UC Berkeley’s research shows top systems can hit 100% accuracy, but the real magic is in their ability to learn and adjust on the fly.

It’s like watching a mechanical athlete train—sometimes stumbling, sometimes soaring, but always pushing boundaries.

Performance Evaluation Metrics

Since robots aren’t just fancy tin cans with algorithms anymore, performance evaluation metrics have become the ultimate reality check for machine learning.

- How fast can a robot actually learn?

- Can it adapt to unpredictable scenarios?

- Will it consistently nail complex tasks?

Performance metrics aren’t just numbers—they’re the difference between a robot that’s impressive and one that’s genuinely useful.

When a robot learns through reinforcement learning, these metrics become its report card. We’re talking precision, speed, and adaptability that make traditional training methods look like prehistoric relics.

Imagine a robot flipping eggs with surgical accuracy or maneuvering timing belt complexities without breaking a sweat. That’s not science fiction—it’s what happens when human intervention meets cutting-edge training techniques.

The result? Robots that don’t just perform tasks, but master them with jaw-dropping consistency.

Future Potential of Adaptive Robotic Systems

Because robots are about to get seriously smart, the future of adaptive robotic systems looks less like sci-fi fantasy and more like your next workplace reality.

Reinforcement learning is transforming robotic control from rigid programming to dynamic skill acquisition. Imagine robots that learn like curious toddlers—exploring, failing, and improving without constant human babysitting.

Robots evolve from programmed puppets to self-learning explorers, mastering skills through curious, autonomous experimentation.

These adaptive systems will soon master complex tasks, from delicate motherboard assembly to unpredictable outdoor challenges, by independently developing strategies through trial and error.

The game-changing twist? These aren’t just industrial machines anymore. They’re becoming versatile problem-solvers capable of continuous self-improvement.

Deep reinforcement learning means robots will adapt faster, think more creatively, and potentially outperform humans in precision and persistence.

Want a glimpse of the future? It’s learning, right now, one algorithmic experiment at a time.

Ethical Considerations in Autonomous Machine Learning

As robots evolve from programmed machines to self-learning entities, we’re stepping into murky ethical territory that’s more complex than a sci-fi movie plot.

Reinforcement learning transforms robots from rigid tools to adaptive learners, but at what cost?

- Who’s responsible when an autonomous robot makes a mistake?

- Can we truly predict the ethical boundaries of machine decision-making?

- How do we guarantee transparency in algorithmic learning processes?

Ethical considerations aren’t just academic debates—they’re real-world challenges.

Accountability becomes tricky when robots learn by trial and error in dynamic environments.

We’re not just programming machines; we’re fundamentally teaching them to think independently.

The potential for unintended consequences is massive, and our regulatory frameworks are struggling to keep pace with technological acceleration.

It’s a high-stakes game of technological roulette, where the chips are human safety and societal trust.

Manufacturer responsibilities are emerging as a critical factor in defining the ethical landscape of autonomous machine learning.

People Also Ask About Robots

What Is the Purpose of Reinforcement Learning in Machine Learning?

You’ll use reinforcement learning to help machines learn and improve through trial and error, allowing them to make intelligent decisions by receiving rewards or penalties based on their actions in complex environments.

What Are the Potential Applications of Reinforcement Learning in Autonomous Vehicles?

You’ll see reinforcement learning help autonomous vehicles navigate complex environments, optimize routes, adapt to changing conditions, and make split-second decisions for safer driving by learning from continuous interactions and feedback.

How Does Reinforcement Learning Improve the Performance of AI Models Like ChatGPT?

Practice makes perfect! Reinforcement learning from human feedback (RLHF) is part of how ChatGPT is trained: human reviewers rank candidate responses, a reward model learns from those rankings, and the language model is fine-tuned to produce responses that the reward model scores highly. This happens during training rather than live inside each conversation.

What Is the Role of Reinforcement in Learning?

You’ll find reinforcement drives learning by providing feedback that rewards or penalizes actions, helping you adapt strategies, refine decision-making, and optimize performance through continuous trial and error in dynamic environments.

Why This Matters in Robotics

Like a curious child learning to ride a bike, robots are now mastering skills through trial and error. You’ll witness machines transforming from clumsy apprentices to agile performers, guided by intelligent algorithms that turn mistakes into wisdom. The future isn’t about replacing humans, but creating collaborative partners who learn, adapt, and surprise us. Buckle up—the robotic revolution isn’t just coming, it’s already dancing at our doorstep.