
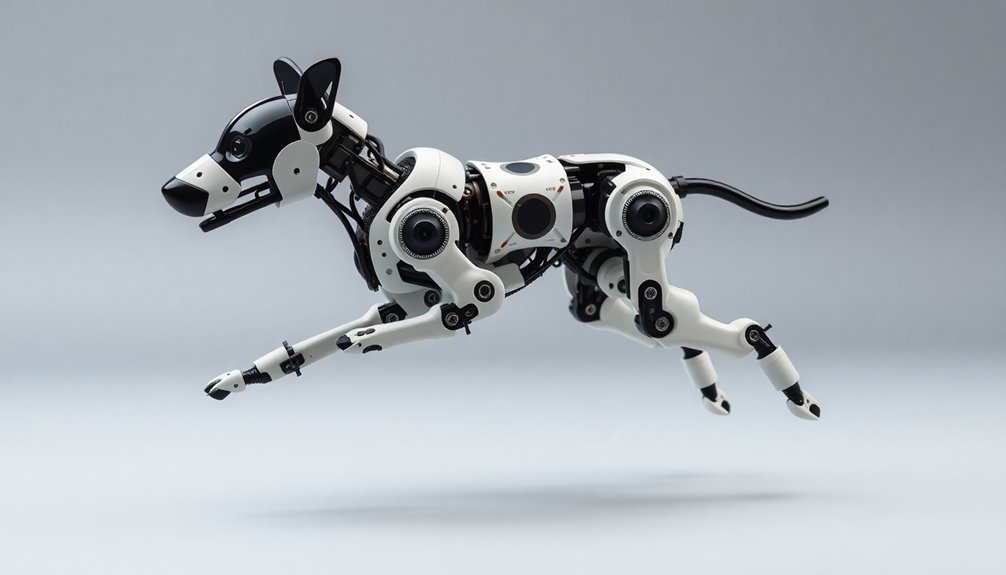

Ever wondered why robot dogs walk like nervous interns on coffee overdrive? It’s because their movement is a wild computational wrestling match against gravity. Sensors, algorithms, and physics are constantly doing micro-calculations to keep these mechanical pups upright. They’re basically performing high-stakes mathematical gymnastics with every step, transforming complex equations into something that almost—but not quite—looks like natural walking. Curious about their secret robotics ballet?

Why Robot Dogs Look Like They’re Speed Walking Through Molasses

While sci-fi might have us imagining robot dogs zipping around like lightning, the reality is far more awkward. Our robotic canine companions are stuck in a perpetual state of “hurried but hesitant” because sensor limitations mess with their walking mojo. In designs built around it, the Chebyshev lambda linkage inherently produces a distinctive walking pattern that resembles an imperfect biological gait. Sensors detect ground contact and trigger rapid balance adjustments. These machines can’t instantly adapt to terrain changes, so they move like they’re navigating an invisible obstacle course. Feedback mechanisms are so primitive that each step looks calculated, not fluid.
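To see where that distinctive step comes from, it helps to trace the path of the linkage's foot point. The sketch below is only an illustration, assuming the textbook Chebyshev lambda proportions (crank 1, ground link 2, rocker 2.5, coupler 5 with the rocker pinned at its midpoint). The function name `chebyshev_foot_point` is made up for this example and is not taken from any particular robot.

```python
import math

def chebyshev_foot_point(theta, crank=1.0, ground=2.0, rocker=2.5):
    """Return the (x, y) tracing point of a Chebyshev lambda linkage.

    Assumed textbook proportions: crank 1, ground link 2, rocker 2.5,
    coupler 5 with the rocker pinned at the coupler's midpoint.
    """
    # Crank tip A rotates about the origin; the rocker pivots about Q.
    ax, ay = crank * math.cos(theta), crank * math.sin(theta)
    qx, qy = ground, 0.0

    # Joint B sits one rocker length from both A and Q
    # (intersection of two circles of equal radius).
    dx, dy = qx - ax, qy - ay
    d = math.hypot(dx, dy)
    half_chord = math.sqrt(rocker**2 - (d / 2.0) ** 2)
    mx, my = (ax + qx) / 2.0, (ay + qy) / 2.0
    # Pick the intersection on the upper side of the line A-Q.
    bx = mx + half_chord * (-dy / d)
    by = my + half_chord * (dx / d)

    # The tracing point P extends the coupler so that B is its midpoint.
    return 2.0 * bx - ax, 2.0 * by - ay

if __name__ == "__main__":
    for deg in range(0, 360, 45):
        x, y = chebyshev_foot_point(math.radians(deg))
        print(f"crank {deg:3d} deg -> point at ({x:5.2f}, {y:5.2f})")
```

For half of each crank turn (90 to 270 degrees) the point hugs the line y = 4, then swings back along an arc. Flip the mechanism upside down and that flat stretch becomes the stance phase while the arc becomes the leg lift, which is why the gait looks so regular and so un-doglike.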

Think of a nervous intern speed-walking through an important meeting: that’s a robot dog’s entire locomotion strategy. Their hardware is basically saying, “Don’t fall, don’t fall” with every cautious movement.

The result? A walking style that’s part determination, part uncertainty, and totally hilarious to watch.

The Physics of Robotic Leg Mechanics and Stability Constraints

We’ve been obsessed with making robot legs move like living creatures, but the physics of leg motion control is way more complicated than just mimicking a dog’s swagger. Quadruped robotic systems leverage complex impedance control algorithms to dynamically modulate joint stiffness and adapt to terrain uncertainties. Balancing force dynamics means understanding how each mechanical limb transfers weight, absorbs shock, and maintains stability across unpredictable terrain – it’s like teaching a metal creature to dance on shifting ground. Our robotic companions aren’t just walking; they’re constantly performing complex computational gymnastics to prevent face-planting in spectacular fashion. Feedback loops enable these robotic systems to continuously monitor and adjust their movements, transforming sensor data into split-second decisions that maintain stability and precision.
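Impedance control is easier to picture as a virtual spring and damper attached to each joint. Here is a minimal single-joint sketch under simplifying assumptions (pure inertia, no gravity, no contact). The class name `JointImpedance`, the gains, and the inertia value are all invented for illustration.

```python
from dataclasses import dataclass

@dataclass
class JointImpedance:
    stiffness: float  # N*m/rad, the virtual spring
    damping: float    # N*m*s/rad, the virtual damper

    def torque(self, q_des, q, qd_des=0.0, qd=0.0):
        """Torque from a virtual spring-damper pulling the joint toward q_des."""
        return self.stiffness * (q_des - q) + self.damping * (qd_des - qd)

def simulate(controller, inertia=0.05, q0=0.0, q_des=0.5, dt=0.001, steps=2000):
    """Integrate one joint (pure inertia, no gravity) with semi-implicit Euler."""
    q, qd = q0, 0.0
    for _ in range(steps):
        qdd = controller.torque(q_des, q, qd=qd) / inertia
        qd += qdd * dt
        q += qd * dt
    return q

if __name__ == "__main__":
    stiff = JointImpedance(stiffness=40.0, damping=2.0)  # firm ground
    soft = JointImpedance(stiffness=5.0, damping=1.0)    # uncertain ground
    for name, ctrl in (("stiff", stiff), ("soft", soft)):
        print(name, "pushback at 0.1 rad error:", ctrl.torque(0.5, 0.4))
        print(name, "final angle:", round(simulate(ctrl), 4))
```

Both settings reach the same target angle, but the soft leg pushes back far less for the same position error. That trade-off is what "modulating joint stiffness" buys: compliance on uncertain ground, firmness when precision matters.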

Leg Motion Control

Robot dogs aren’t just fancy toys—they’re complex mechanical marvels dancing on the razor’s edge of physics and engineering. Their leg motion control is a symphony of precision, where leg actuator synchronization becomes an art form. Quadruped robot sensors enable these machines to process intricate environmental data with unprecedented computational speed, translating raw terrain information into fluid, adaptive movements.

We carefully coordinate joint movements like a choreographer directing dancers, ensuring each robotic limb moves with calculated grace. Sensor fusion techniques help these mechanical systems integrate multiple data sources to optimize movement strategies and enhance navigational precision. The secret? Advanced algorithms that continuously compare sensor feedback with target data, allowing these mechanical canines to adapt their stride in milliseconds. Central Pattern Generators provide a neurological blueprint for rhythmic muscle contractions, mimicking the natural locomotion mechanisms found in biological systems.
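One common way to model a Central Pattern Generator is a set of coupled phase oscillators, one per leg. The sketch below takes that route as an assumption. The leg order, frequency, coupling gain, and function names are illustrative choices, not details from any specific robot.

```python
import math

# Desired phase offsets for a trot: diagonal legs move together.
# Leg order (an assumption for this sketch): LF, RF, LH, RH.
TROT_OFFSETS = [0.0, math.pi, math.pi, 0.0]

def step_cpg(phases, dt, freq_hz=1.5, coupling=2.0, offsets=TROT_OFFSETS):
    """Advance four coupled phase oscillators by one Euler step."""
    new_phases = []
    for i, phi_i in enumerate(phases):
        rate = 2.0 * math.pi * freq_hz
        for j, phi_j in enumerate(phases):
            if i != j:
                # Pull leg i toward its desired offset relative to leg j.
                rate += coupling * math.sin(phi_j - phi_i - (offsets[j] - offsets[i]))
        new_phases.append(phi_i + rate * dt)
    return new_phases

def run_cpg(initial_phases, duration=5.0, dt=0.001):
    """Run the oscillator network and return the final phases."""
    phases = list(initial_phases)
    for _ in range(round(duration / dt)):
        phases = step_cpg(phases, dt)
    return phases

def phase_gap(a, b):
    """Phase difference a - b wrapped into [-pi, pi]."""
    return math.atan2(math.sin(a - b), math.cos(a - b))

if __name__ == "__main__":
    final = run_cpg([0.0, 2.5, 3.5, 0.6])  # deliberately out-of-step start
    for name, phi in zip(("RF", "LH", "RH"), final[1:]):
        print(f"{name} vs LF: {abs(phase_gap(phi, final[0])):.3f} rad")
```

Starting from mismatched phases, the legs settle into the trot pattern on their own: RF and LH end up half a cycle away from LF, while RH locks in step with it. Each oscillator's phase would then drive that leg's swing and stance timing.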

Imagine a robot dog sensing uneven terrain and instantaneously recalibrating its leg positioning—that’s not sci-fi, that’s real-time engineering magic. By mimicking biological movement patterns, we’ve created machines that don’t just walk, but practically think their way through complex environments.

Balance Force Dynamics

Gravity: the ultimate party crasher for robotic mobility. We’ve cracked the code of robot dog balance by turning physics into our playground. Our balance optimization strategies transform mechanical challenges into smooth, adaptive movement. Laser gyroscope technology enables precise balance detection and real-time terrain adjustment. Sensor fusion techniques allow these robotic companions to integrate multiple sensory inputs for even more sophisticated environmental understanding.

| Sensor Type | Input | Stability Impact |
|---|---|---|
| Gyroscope | Orientation | Dynamic Adjustment |
| Force Sensors | Leg Loading | Weight Distribution |
| Vision Systems | Terrain Mapping | Predictive Correction |
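A complementary filter is one of the simplest forms of sensor fusion for balance. This sketch assumes a gyroscope paired with an accelerometer (the accelerometer is not in the table above, so treat it as an added assumption), and the axis and sign conventions are illustrative only.

```python
import math

def complementary_filter(pitch, gyro_rate, accel_x, accel_z, dt, alpha=0.98):
    """Blend integrated gyro rate with the accelerometer's gravity direction.

    pitch and the return value are in radians; gyro_rate is in rad/s.
    """
    gyro_pitch = pitch + gyro_rate * dt         # smooth, but drifts over time
    accel_pitch = math.atan2(accel_x, accel_z)  # noisy, but does not drift
    return alpha * gyro_pitch + (1.0 - alpha) * accel_pitch

def track_pitch(true_pitch=0.1, gyro_bias=0.01, dt=0.01, steps=1000):
    """Estimate the pitch of a stationary body whose gyro reads only a bias."""
    pitch = 0.0
    for _ in range(steps):
        ax = 9.81 * math.sin(true_pitch)
        az = 9.81 * math.cos(true_pitch)
        pitch = complementary_filter(pitch, gyro_bias, ax, az, dt)
    return pitch

if __name__ == "__main__":
    print("fused estimate:", round(track_pitch(), 4), "rad (true value 0.1)")
```

Integrating the biased gyro alone would drift by 0.1 rad over those ten simulated seconds. The fused estimate stays within a few milliradians of the true tilt, because the accelerometer keeps pulling it back.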

Force distribution isn’t just science—it’s an art form. We map every potential foot placement like tactical chess moves, ensuring our robot dogs can traverse terrain that would make traditional robots weep. Whether it’s a steep slope or an icy surface, these mechanical pups adapt faster than you can say “good boy.” By prioritizing stability over raw speed, we’ve engineered machines that move with uncanny precision—part calculated engineering, part controlled chaos.
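A basic version of that foot-placement check is the static stability margin: how far the center of mass, projected onto the ground, sits inside the polygon formed by the feet currently on the ground. The sketch below assumes flat ground and a convex polygon. The foot coordinates and function names are hypothetical.

```python
import math

def cross(o, a, b):
    """Z-component of (a - o) x (b - o)."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

def stability_margin(com_xy, stance_feet):
    """Smallest signed distance from the CoM projection to a support-polygon edge.

    stance_feet must be listed counter-clockwise and form a convex polygon.
    Positive means statically stable; negative means the CoM is outside.
    """
    margin = float("inf")
    n = len(stance_feet)
    for i in range(n):
        a, b = stance_feet[i], stance_feet[(i + 1) % n]
        edge_length = math.hypot(b[0] - a[0], b[1] - a[1])
        margin = min(margin, cross(a, b, com_xy) / edge_length)
    return margin

if __name__ == "__main__":
    # Right-front foot lifted; the other three form the support triangle (meters).
    feet = [(0.3, 0.2), (-0.3, 0.2), (-0.3, -0.2)]  # LF, LH, RH
    print("CoM centered:", round(stability_margin((0.0, 0.0), feet), 3))
    print("CoM shifted: ", round(stability_margin((-0.1, 0.05), feet), 3))
```

With the body centered, the margin is zero: the center of mass sits right on the diagonal edge of the triangle, which is a tipping hazard. Shifting the body back and toward the planted side before lifting the foot buys about 10 cm of margin, and that is the cautious weight shift you see before each step.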

Algorithmic Complexity Behind Each Calculated Footfall

When you dig into how robot dogs actually walk, the algorithmic complexity behind each footfall becomes mind-blowingly intricate—like a digital ballet choreographed by microscopic mathematical wizards.

Footfall synchronization isn’t just about moving legs; it’s a real-time dance of dynamic feedback where sensors measure every microscopic detail. We’re talking millisecond calculations that decide whether a robot stumbles or glides smoothly.

Each leg becomes a precision instrument, constantly adjusting swing trajectories, ground contact duration, and force absorption. The algorithms don’t just move—they predict, correct, and optimize with lightning speed. Neural network policies enable robots to adapt dynamically to complex terrain variations.
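A swing trajectory can be as simple as a smooth curve from lift-off to touchdown. The sketch below uses a cycloid-style profile, a common textbook choice but only one of many. The function name and parameters are assumptions for illustration.

```python
import math

def swing_foot_position(s, start_x, end_x, clearance):
    """Foot position at swing progress s in [0, 1] (0 = lift-off, 1 = touchdown)."""
    s = min(max(s, 0.0), 1.0)
    # Horizontal: cycloid blend with zero velocity at lift-off and touchdown.
    blend = s - math.sin(2.0 * math.pi * s) / (2.0 * math.pi)
    x = start_x + (end_x - start_x) * blend
    # Vertical: rises smoothly to peak clearance at mid-swing, then returns.
    z = clearance * 0.5 * (1.0 - math.cos(2.0 * math.pi * s))
    return x, z

if __name__ == "__main__":
    for i in range(5):
        s = i / 4
        x, z = swing_foot_position(s, start_x=-0.05, end_x=0.05, clearance=0.04)
        print(f"s={s:.2f}  x={x:+.3f} m  z={z:.3f} m")
```

Because the foot leaves and meets the ground with zero velocity, touchdown is gentle. Adjusting `end_x` or `clearance` mid-swing is one simple way a controller could react to terrain it senses late.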

Imagine a brain living inside each mechanical limb, making split-second decisions about terrain, balance, and momentum. It’s not walking; it’s computational poetry in motion.

These continuous adjustments mirror the spinal cord’s neural circuitry in animals, where reflexive learning occurs in real time through constant sensor feedback and instantaneous corrections.

In just 20 minutes of reinforcement learning, robots can develop remarkably adaptive walking capabilities, transforming mechanical limitations into fluid, intelligent motion.

Balancing Act: How Precision Trumps Natural Locomotion

From the microscopic mathematical wizardry of algorithmic footfalls, we’ve arrived at an even more mind-bending challenge: how these mechanical marvels actually stay upright. Robot agility isn’t just about moving; it’s about conquering gravity with sensor feedback that would make a gymnast jealous. Reaction wheel actuators have revolutionized robotic balance by creating a dynamic stabilization mechanism that allows machines to recover from unexpected movements with unprecedented precision.

| Robot Skill | Human Equivalent |
|---|---|
| Balance Beam | Olympic Gymnastics |
| Rapid Learning | Child’s First Steps |
| Terrain Adaptation | Mountain Goat Agility |
| Precision Movement | Surgical Robotics |

We’re watching machines transform from clunky prototypes to precision dancers, their computational brains calculating stability faster than you can blink. They don’t just walk—they strategize each step with mathematical poetry, turning improbable movement into an art form. Who needs biological evolution when you’ve got engineering this smart?

Engineering the Unnatural: Computational Gait Planning Challenges

We’ve spent years making robots move like dancers trapped in mechanical bodies, wrestling with the mind-bending challenge of computational motion complexity. Quadruped kinematic models provide the mathematical foundation for translating joint angles into precise, coordinated leg movements that simulate natural animal locomotion. Discrete-time Control Barrier Functions enable robots to dynamically adjust motion parameters, preventing potential collisions and ensuring safe navigation through complex environments.
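The simplest kinematic model is a planar two-link leg, which captures the core idea of mapping joint angles to a foot position and back. The sketch below assumes a hip and knee moving in one plane, with made-up 0.2 m link lengths. Real quadrupeds add a third hip joint and their own angle conventions.

```python
import math

def forward_kinematics(hip, knee, thigh=0.2, shank=0.2):
    """Foot position (x forward, z up) relative to the hip for a planar leg.

    hip is measured from straight down; knee is relative to the thigh.
    """
    x = thigh * math.sin(hip) + shank * math.sin(hip + knee)
    z = -thigh * math.cos(hip) - shank * math.cos(hip + knee)
    return x, z

def inverse_kinematics(x, z, thigh=0.2, shank=0.2):
    """Joint angles placing the foot at (x, z), using the negative-knee branch."""
    r2 = x * x + z * z
    cos_knee = (r2 - thigh**2 - shank**2) / (2.0 * thigh * shank)
    if not -1.0 <= cos_knee <= 1.0:
        raise ValueError("target is out of reach for this leg")
    knee = -math.acos(cos_knee)
    hip = math.atan2(x, -z) - math.atan2(
        shank * math.sin(knee), thigh + shank * math.cos(knee)
    )
    return hip, knee

if __name__ == "__main__":
    hip, knee = inverse_kinematics(0.05, -0.30)
    print("joint angles (rad):", round(hip, 3), round(knee, 3))
    print("foot position check:", forward_kinematics(hip, knee))
```

A gait planner would call something like `inverse_kinematics` for every point along a planned foot path, turning a curve in space into the joint commands that trace it.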

Algorithmic balance control isn’t just about preventing these metal creatures from face-planting; it’s about teaching them to walk with a precision that makes natural locomotion look like a clumsy rehearsal.

Our robot dogs are learning to navigate terrain with computational choreography that would make a biomechanics professor weep tears of joy and algorithmic wonder.

Computational Motion Complexity

Teaching robots to walk naturally sounds simple in theory, but the computational challenges behind robot locomotion are mind-bendingly complex. Motion planning isn’t just about moving from A to B; it’s a mathematical chess match where every joint and angle becomes a potential roadblock.

Computational models transform walking into a high-stakes optimization problem, where robots must simultaneously navigate physical space, avoid obstacles, and maintain stability.
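The Discrete-time Control Barrier Functions mentioned earlier are one way to bake "avoid obstacles" into that optimization. Here is a deliberately tiny one-dimensional sketch, assuming a robot that moves along a line with dynamics `x_next = x + u * dt`. Real controllers solve a constrained optimization over the full robot state, so treat this as a toy with invented names and numbers.

```python
def dcbf_filter(u_des, x, x_obs, margin=0.1, gamma=0.2, dt=0.02):
    """Clamp a forward velocity command so the barrier h stays non-negative.

    Model (assumed): x_next = x + u * dt, with an obstacle ahead at x_obs.
    Barrier: h(x) = x_obs - margin - x, so h >= 0 means "safe".
    Discrete-time condition: h(x_next) >= (1 - gamma) * h(x), 0 < gamma <= 1.
    """
    h = x_obs - margin - x
    u_max = gamma * h / dt  # largest command that still satisfies the condition
    return min(u_des, u_max)

def drive_toward_obstacle(steps=300, dt=0.02):
    """Keep asking for full speed ahead and let the filter decide."""
    x, x_obs = 0.0, 1.0
    for _ in range(steps):
        u = dcbf_filter(u_des=1.0, x=x, x_obs=x_obs, dt=dt)
        x += u * dt
    return x

if __name__ == "__main__":
    print("final position:", round(drive_toward_obstacle(), 4), "(obstacle at 1.0)")
```

The planner keeps demanding 1 m/s, yet the robot eases off as it nears the safety margin and settles at 0.9 m without ever crossing it. A smaller `gamma` makes it brake earlier and more gently.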

Imagine trying to choreograph a dance where each movement requires solving a multidimensional puzzle in real-time. That’s what robot locomotion demands.

We’re basically teaching machines to improvise walking without tripping over their own algorithmic feet, juggling computational complexity with physical constraints. Who said robot walking was easy?

Algorithmic Balance Control

Computational motion complexity suddenly gets real when robots try to walk like living creatures. We’re fundamentally teaching metal dogs to dance—and not just any dance, but a ballet of sensors, feedback loops, and split-second adjustments. Our robotic friends navigate this challenge through precise sensor calibration that would make a Swiss watchmaker proud.

| Sensor Type | Balance Function |
|---|---|
| Encoders | Joint Position Control |
| IMUs | Orientation Tracking |
| Cameras | Environmental Mapping |
| LiDAR | Obstacle Detection |
| AI Models | Movement Adaptation |

Think about it: these machines are learning to walk by constantly failing, recalibrating, and trying again—just like a toddler, but with considerably more computing power. They’re transforming engineering challenges into an art form of mechanical movement, one awkward step at a time.

From Biomimicry to Mathematical Precision: Decoding Robotic Movement

When we peek under the hood of robotic movement, something fascinating emerges: these mechanical canines aren’t just mindless metal, but intelligent systems that learn and adapt like living creatures.

Bioinspired designs are revolutionizing robotic evolution, transforming how these machines understand movement. By mimicking animal locomotion through complex algorithms and sensor networks, robot dogs can now navigate terrain like furry, four-legged athletes with mathematical precision.

Robotic evolution unfolds through nature’s choreography: algorithmic elegance translating animal grace into mechanical precision.

They stumble, learn, and recover in milliseconds—a dance of computational reflexes that would make Darwin raise an eyebrow. Central pattern generators simulate neural networks, allowing these machines to decode movement almost intuitively.

It’s like watching nature’s blueprint get rewritten in code, with each iteration bringing us closer to understanding how movement itself is a form of intelligence.

Terrain, Speed, and the Delicate Dance of Quadruped Control Systems

Imagine a robot dog dancing across rocky terrain, its metal legs calculating each step with the precision of a ballet dancer on a tightrope.

We’re witnessing terrain adaptation and speed optimization in action, where quadruped robots transform from clunky machines to graceful movers. Their secret? Complex control systems that make walking look ridiculously easy.

- These robotic canines use sensors that map terrain faster than you can blink

- Gait transitions happen more smoothly than a jazz musician’s improvisation

- Impedance control lets them absorb shocks like superhero shock absorbers

- Virtual forces guide their movements with mathematical ninja precision
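The "virtual forces" idea can be sketched with one line of math: a desired force at the foot maps to joint torques through the transpose of the leg Jacobian, tau = J^T F. The example below assumes a planar two-link leg with made-up 0.2 m links. The function names are hypothetical.

```python
import math

def leg_jacobian(hip, knee, thigh=0.2, shank=0.2):
    """2x2 Jacobian of foot position (x, z) with respect to (hip, knee).

    Convention (assumed): x = l1*sin(hip) + l2*sin(hip + knee),
                          z = -l1*cos(hip) - l2*cos(hip + knee).
    """
    s1, c1 = math.sin(hip), math.cos(hip)
    s12, c12 = math.sin(hip + knee), math.cos(hip + knee)
    return [
        [thigh * c1 + shank * c12, shank * c12],  # dx/dhip, dx/dknee
        [thigh * s1 + shank * s12, shank * s12],  # dz/dhip, dz/dknee
    ]

def virtual_force_to_torques(force_xz, hip, knee):
    """Map a desired foot force (fx, fz) to joint torques with tau = J^T F."""
    jac = leg_jacobian(hip, knee)
    fx, fz = force_xz
    tau_hip = jac[0][0] * fx + jac[1][0] * fz
    tau_knee = jac[0][1] * fx + jac[1][1] * fz
    return tau_hip, tau_knee

if __name__ == "__main__":
    # Bent leg with the foot directly under the hip, and a 10 N vertical virtual force.
    print(virtual_force_to_torques((0.0, 10.0), hip=0.3, knee=-0.6))
```

In that pose the hip torque comes out as zero and the knee takes the whole load, which matches intuition for a foot sitting directly below the hip. The controller only has to think in terms of forces at the foot; the Jacobian handles the translation to motors.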

Think walking is simple?

Try doing it while balancing on unpredictable ground, switching speeds, and never losing your cool. That’s a robot dog’s daily challenge.

People Also Ask

Why Can’t Robot Dogs Walk as Smoothly as Real Animals?

We struggle to replicate animal biomechanics in robotic gait because our engineering can’t yet match the natural fluidity of biological locomotion, with sensor limitations and complex movement dynamics hindering smooth walking.

How Much Energy Do Quadruped Robots Consume While Moving?

Quadruped robots burn through batteries quickly. Their energy use depends on terrain, gait selection, and motion dynamics, and they consume considerably more power than wheeled counterparts.

Do Robot Dogs Get Tired Like Biological Creatures Do?

Robot dogs don’t get tired the way living creatures do. Power-management algorithms regulate energy consumption, so they can operate continuously without exhaustion in the biological sense, though battery level still determines how long they stay active.

Can Robot Dogs Learn to Adapt Their Walking Style?

We’ve learned that robot dogs can masterfully adapt their walking style through advanced algorithms, mimicking biological reflexes to enhance robotic agility and walking adaptability across varied terrains with remarkable precision.

What Makes Robot Dogs Walk so Awkwardly Compared to Pets?

Robot dogs struggle with natural movement because rigid mechanical designs and complex movement algorithms can’t fully replicate the fluid, instinctive locomotion of biological creatures, which produces their distinctive mechanical gait.

The Bottom Line

We’ve watched robot dogs move like they’re speed-walking through an invisible emergency, and now we get why. It’s not awkwardness—it’s mathematical precision. Pure computational choreography turns mechanical limbs into calculated dance. Weirdly, the more robotic they look, the smarter the engineering behind their movement. Just like a chess player thinking ten moves ahead, these machines aren’t stumbling—they’re strategizing with every single step.
