Humanoid robots learn to walk, talk, and think through a wild fusion of engineering and artificial intelligence. They’re basically smart computers with mechanical muscles that learn by watching, practicing, and failing, just like you do. Advanced sensors act as their nervous system, while machine learning algorithms help them adapt and improve. Neural networks trained on all that practice give them movement, language, and problem-solving skills. Want to know how deep this robot rabbit hole goes?

The Anatomy of Humanoid Robots

Anatomy is the skeleton key that reveals how humanoid robots move and interact with our world. These mechanical marvels aren’t just fancy tech toys—they’re complex systems mimicking human movement.

Imagine a robot that can walk, grab a coffee mug, or navigate crowded spaces. That’s where object manipulation meets cutting-edge design. Reinforcement learning, in which a robot improves a behavior through trial and error guided by a reward signal, lets these humanoid robots adapt, transforming rigid mechanical parts into dynamic problem-solvers.

Humanoid robots transcend mechanics, becoming intelligent adapters that learn, navigate, and manipulate our world with stunning precision.

Their bodies mirror our own: head, torso, arms, and legs, each engineered with precision. Cameras and microphones become their eyes and ears, and onboard computers process the data they capture faster than you’d blink.

But here’s the wild part: these robots aren’t just copying humans. They’re learning, evolving, potentially outpacing our own physical limitations. Advanced humanoid robots like Figure 01 and Tesla Optimus are pushing the boundaries of what’s possible in robotic design and artificial intelligence.

Who knows what they’ll do next?

Mechanical Design and Human-Like Structure

When you peer into the world of humanoid robots, their mechanical design looks less like cold machinery and more like a mirror of human potential.

These robots aren’t just metal and wires; they’re meticulously crafted to mimic your body’s incredible engineering. Their energy-efficient actuators and articulated joints create a dance of precision, allowing them to walk, balance, and interact with environments just like you do.

Imagine sensors that work like eyes and skin, feeding information to onboard processors faster than you can blink. Lightweight materials give these humanoid robots strength without bulk, so their actuators can turn the output of complex control algorithms into fluid movements.

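Each degree of freedom is one independent axis of motion, such as an elbow bending or a wrist rotating. The more degrees of freedom a robot has, the more natural and sophisticated its movements can become, approaching the complexity of human joint articulation.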
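To make degrees of freedom concrete, here is a minimal sketch of forward kinematics for a planar arm with just two of them, a shoulder and an elbow: given the joint angles, it computes where the hand ends up. The link lengths and the function name `forward_kinematics` are illustrative assumptions, not parameters of any real robot. A full humanoid chains many more joints in 3D, but the idea of composing joint angles into a limb position is the same.

```python
import math


def forward_kinematics(theta1: float, theta2: float,
                       l1: float = 0.30, l2: float = 0.25) -> tuple[float, float]:
    """Return the (x, y) hand position of a planar 2-DOF arm.

    theta1: shoulder angle in radians, measured from the x-axis.
    theta2: elbow angle in radians, measured relative to the upper arm.
    l1, l2: hypothetical upper-arm and forearm lengths in metres.
    """
    # The elbow sits at the end of the upper arm.
    elbow_x = l1 * math.cos(theta1)
    elbow_y = l1 * math.sin(theta1)
    # The forearm's absolute angle is the sum of both joint angles.
    hand_x = elbow_x + l2 * math.cos(theta1 + theta2)
    hand_y = elbow_y + l2 * math.sin(theta1 + theta2)
    return hand_x, hand_y


# Upper arm along the x-axis, elbow bent 90 degrees: the forearm points straight up.
print(forward_kinematics(0.0, math.pi / 2))  # (0.3, 0.25)
```

Each extra joint adds one more angle to the sum, which is why more degrees of freedom let a limb reach the same point in more ways.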

Who would’ve thought machines could move so much like us? It’s not science fiction anymore; it’s the bold reality of mechanical design catching up with the human body.

Sensors: The Eyes and Ears of Robotics

Because robots can’t truly understand the world without sensing it, sensors have become the superhero sidekicks of humanoid technology.

These tiny technological marvels transform robots from clunky machines into intelligent beings that can see, hear, and feel. Cameras and microphones work like eyes and ears, capturing environmental details with incredible precision.

Tactile sensors act like fingertips, allowing robots to handle objects delicately and understand physical interactions. Machine learning algorithms then interpret these readings, quickly turning raw sensor data into meaningful insights.

They’re not just collecting information; they’re learning, interpreting, and responding in real time. Want to know how robots navigate complex environments?

It’s all about those smart sensors that transform cold metal into something almost alive, bridging the gap between human intuition and mechanical intelligence.
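The fingertip-style tactile feedback described in this section can be sketched as a simple proportional controller: the finger keeps closing while the measured contact force is below a target and eases off if the grip gets too firm. This is a classical control sketch, not a machine-learning method, and everything in it is hypothetical: the object is modelled as a linear spring, and the parameter values and the function name `grip_until_force` are made up for illustration.

```python
def grip_until_force(target_force: float,
                     stiffness: float = 200.0,
                     contact_at: float = 0.02,
                     gain: float = 0.001,
                     steps: int = 200) -> tuple[float, float]:
    """Close a simulated finger until the tactile reading matches target_force.

    Hypothetical model: the object behaves like a spring (stiffness in N/m)
    whose surface sits contact_at metres from the open finger.
    Returns (final finger position in m, last measured force in N).
    """
    position = 0.0
    force = 0.0
    for _ in range(steps):
        # Simulated tactile sensor: no force until the finger touches the object.
        force = max(0.0, stiffness * (position - contact_at))
        # Proportional control: close further when the grip is too light,
        # back off when it is too firm.
        error = target_force - force
        position += gain * error
    return position, force


position, force = grip_until_force(5.0)
print(f"finger at {position:.3f} m, contact force {force:.2f} N")
# finger at 0.045 m, contact force 5.00 N
```

With these values the force error shrinks by a constant factor each step, so the grip settles on the target without overshooting; a stiffer object or a larger gain would need retuning.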

Machine Learning and Movement Algorithms

If robots were dancers, machine learning would be their choreographer—teaching them how to move with grace, precision, and adaptability.

You’ve seen how these mechanical marvels transform from clunky machines to fluid performers, right? Through advanced algorithms, humanoid robots now learn complex tasks by processing sensory input and predicting movement patterns.

They’re not just following rigid instructions anymore; they’re dynamically adjusting their walking techniques in real time. In physics simulations, neural networks let them practice movements over and over, building motor skills like curious toddlers exploring their physical capabilities.

Imagine a robot sensing an unexpected obstacle and instantly recalculating its path—that’s machine learning in action. It’s not magic; it’s sophisticated programming that turns mechanical limitations into adaptive, intelligent movement capabilities.
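Recalculating a path around a new obstacle can be handled by a classical planner rather than a learned model. As a minimal sketch, assuming the robot’s surroundings are discretized into a small occupancy grid (1 = blocked), breadth-first search finds a shortest route, and replanning simply means running the search again once a sensor marks a new obstacle. The grid, the 4-connected movement model, and the function name `plan_path` are illustrative assumptions.

```python
from collections import deque
from typing import Optional

Cell = tuple[int, int]


def plan_path(grid: list[list[int]], start: Cell, goal: Cell) -> Optional[list[Cell]]:
    """Breadth-first search on a 4-connected grid; 1 marks an obstacle."""
    rows, cols = len(grid), len(grid[0])
    came_from: dict[Cell, Optional[Cell]] = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the recorded parents to rebuild the route.
            path = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = came_from[node]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # the goal is unreachable


grid = [[0, 0, 0],
        [0, 0, 0],
        [0, 0, 0]]
print(plan_path(grid, (0, 0), (2, 2)))
# [(0, 0), (1, 0), (2, 0), (2, 1), (2, 2)]

# A sensor reports an obstacle blocking part of the middle row: replan.
grid[1][0] = grid[1][1] = 1
print(plan_path(grid, (0, 0), (2, 2)))
# [(0, 0), (0, 1), (0, 2), (1, 2), (2, 2)]
```

Breadth-first search is the simplest choice that guarantees a shortest route on an unweighted grid; planners on real robots typically work with continuous space and costs, but the replan-on-new-data loop is the same.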

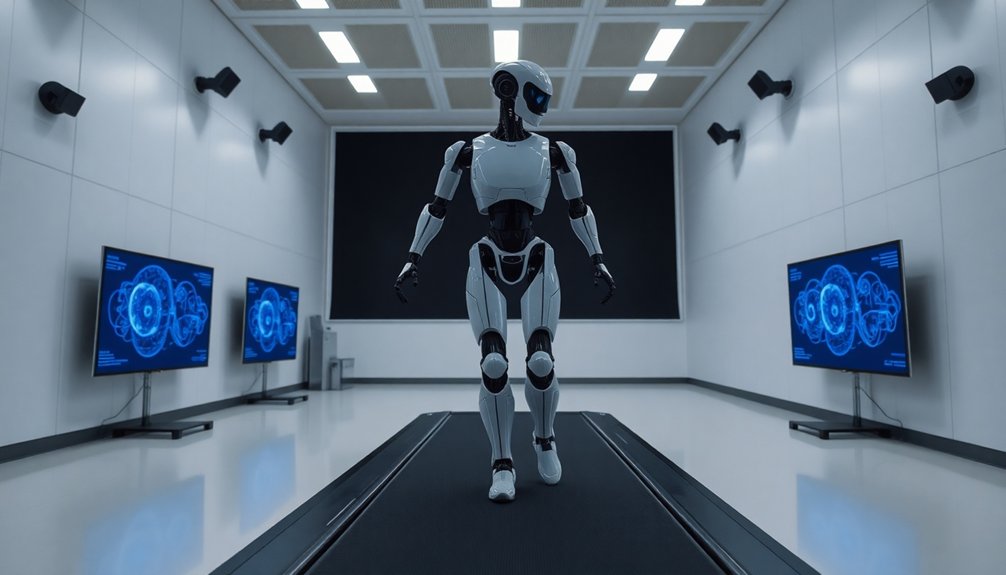

Bipedal Locomotion: Walking Like Humans

As humanoid robots step into the world of bipedal locomotion, they’re basically learning to walk the same way human toddlers do—through a messy, trial-and-error process of falling, adjusting, and ultimately conquering gravity.

AI-driven predictive planning, which forecasts the robot’s motion a short time ahead and adjusts foot placement and balance before a misstep happens, helps these mechanical learners adapt their movements like high-tech gymnasts navigating uneven terrain. Think of it as robot boot camp, where sensors like gyroscopes and accelerometers become their internal coaches, tracking every tiny wobble and correction.

Proprioceptive locomotion strategies use internal sensors, such as joint encoders and inertial measurement units, to let these machines sense their own body position, just as humans do when walking a tightrope.
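One common way to fuse gyroscope and accelerometer readings into a body-tilt estimate is a complementary filter: integrate the gyroscope for smooth short-term tracking, and pull the estimate gently toward the accelerometer’s gravity reading to cancel long-term drift. The sketch below assumes a single pitch axis, a fixed 100 Hz sample rate (`dt=0.01`), a blend factor of 0.98, and an accelerometer convention where a lean of θ reads as (g·sin θ, g·cos θ); all of these are illustrative choices, and real humanoids often use more elaborate estimators such as Kalman filters.

```python
import math


def complementary_filter(gyro_rates, accels, dt=0.01, alpha=0.98):
    """Estimate pitch (radians) from gyroscope and accelerometer samples.

    gyro_rates: pitch angular velocity in rad/s for each sample.
    accels: (a_x, a_z) accelerometer readings in m/s^2 for each sample.
    alpha: hypothetical blend factor; closer to 1 trusts the gyroscope more.
    """
    pitch = 0.0
    estimates = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        # Integrating the gyro is smooth but accumulates drift.
        gyro_pitch = pitch + rate * dt
        # Gravity's direction gives a noisy but drift-free absolute angle.
        accel_pitch = math.atan2(ax, az)
        # Trust the gyro short-term and the accelerometer long-term.
        pitch = alpha * gyro_pitch + (1 - alpha) * accel_pitch
        estimates.append(pitch)
    return estimates


g = 9.81
tilt = 0.1                 # the robot leans 0.1 rad and holds still
n = 500                    # 5 seconds of samples at 100 Hz
biased_gyro = [0.01] * n   # gyro reports 0.01 rad/s even though nothing moves
accel = [(g * math.sin(tilt), g * math.cos(tilt))] * n
print(round(complementary_filter(biased_gyro, accel)[-1], 3))  # 0.105
```

Integrating the biased gyroscope alone would start at 0 and drift by 0.01 rad every second without ever discovering the 0.1 rad lean; the blend settles close to the true angle, offset only slightly by the bias.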

Can they really master human-like movement? The technology suggests yes—through endless virtual simulations and smart algorithms that turn robotic stumbling into graceful, calculated steps.
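The trial-and-error learning in simulation described in this section can be framed as reinforcement learning. The sketch below is a deliberately tiny, hypothetical example: tabular Q-learning on a six-state corridor stands in for a physics simulator, and reaching the end of the corridor plays the role of a successful step. The state space, reward, hyperparameters, and the function name `train_q_learning` are illustrative assumptions; real locomotion training uses continuous states, neural-network policies, and far larger simulations.

```python
import random


def train_q_learning(n_states=6, episodes=1000, alpha=0.5, gamma=0.9,
                     epsilon=0.2, max_steps=200, seed=0):
    """Tabular Q-learning on a toy corridor (hypothetical simulator stand-in).

    Action 0 steps back (staying put at state 0), action 1 steps forward,
    and an episode ends with reward 1 on reaching state n_states - 1.
    """
    rng = random.Random(seed)
    goal = n_states - 1
    q = [[0.0, 0.0] for _ in range(n_states)]
    for _ in range(episodes):
        state = 0
        for _ in range(max_steps):
            # Epsilon-greedy: explore with probability epsilon, and also
            # pick randomly when both actions look equally good.
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                action = rng.randrange(2)
            else:
                action = 0 if q[state][0] > q[state][1] else 1
            next_state = max(0, state - 1) if action == 0 else state + 1
            done = next_state == goal
            reward = 1.0 if done else 0.0
            # Move the estimate toward reward plus discounted best future value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


q = train_q_learning()
policy = ["forward" if q[s][1] > q[s][0] else "back" for s in range(len(q) - 1)]
print(policy)  # expected: "forward" in every state
```

Early episodes are mostly random stumbling; as the reward propagates back through the table, the greedy choice in every state becomes "forward", a small-scale version of stumbling turning into calculated steps.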

Language Processing and Communication

When humanoid robots start talking, they’re not just mimicking human speech—they’re cracking the complex code of language like linguistic hackers breaking through communication firewalls.

These mechanical marvels are revolutionizing how we think about language processing and interaction.

Mechanical intelligences are shattering traditional boundaries of communication and redefining human-machine linguistic landscapes.

Key ways robots learn and adapt include:

- Using AI models such as Figure’s Helix, a vision-language-action model, to understand nuanced human commands

- Employing machine learning algorithms that adjust communication styles in real-time

- Integrating gesture recognition for more intuitive, multimodal interactions

Can robots truly understand us? The evidence suggests they’re getting frighteningly good.

Artificial Intelligence and Adaptive Thinking

Because artificial intelligence is reshaping how humanoid robots think and interact, we’re witnessing a technological revolution that’s rewriting the rules of machine intelligence.

These robots are now developing adaptive thinking skills that let them learn and respond like dynamic problem-solvers. Through advanced neural networks, they can manipulate objects with increasing sophistication, predicting environmental changes and adjusting movements in milliseconds.

But here’s the wild part: AI isn’t just programming anymore – it’s creating machines that learn, improvise, and evolve.

Imagine robots that don’t just follow instructions, but understand context, interpret subtle cues, and make split-second decisions. They’re becoming less like predictable machines and more like intelligent companions, bridging the gap between programmed behavior and genuine cognitive flexibility.

Energy Systems and Power Management

Since powering humanoid robots isn’t as simple as plugging in a smartphone, engineers have been wrestling with some seriously complex energy challenges. Efficient power management has become the holy grail for robotics teams pushing technological boundaries.

- Rechargeable lithium-ion batteries serve as the primary energy source, balancing weight and performance like a high-stakes electrical tightrope walk.

- Lightweight materials and smart actuators help stretch the runtime per charge, turning power conservation into a strategic engineering game.

- Wireless charging and hydrogen fuel cells hint at future possibilities that could revolutionize how robots sustain themselves.
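The trade-offs in these bullets come down to simple arithmetic: runtime per charge is roughly usable battery energy divided by average power draw. The sketch below uses made-up numbers (a 1,000 Wh pack and a 400 W average draw) purely for illustration; the function name `runtime_hours` and its `usable_fraction` parameter are assumptions, and real draw varies widely with the task.

```python
def runtime_hours(capacity_wh: float, avg_power_w: float,
                  usable_fraction: float = 1.0) -> float:
    """Estimate runtime per charge: usable energy (Wh) / average draw (W)."""
    if avg_power_w <= 0:
        raise ValueError("average power must be positive")
    return capacity_wh * usable_fraction / avg_power_w


# Hypothetical figures, not the specs of any real robot.
print(runtime_hours(1000, 400))  # 2.5 hours at the baseline draw
print(runtime_hours(1000, 320))  # 3.125 hours after trimming draw by 20%
```

Because runtime scales with the inverse of power, trimming average draw by 20% through lighter materials or more efficient actuators buys 25% more time on the same battery.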

Will robots someday recharge as effortlessly as we grab a morning coffee? The quest continues, with every breakthrough bringing us closer to machines that can think, move, and survive without constant human intervention.

Real-World Applications and Future Potential

Though skeptics might dismiss humanoid robots as sci-fi fantasy, real-world applications are rapidly transforming industrial landscapes and challenging our understanding of automated labor. These technological marvels are reshaping how we think about work, with humanoid robots stepping into roles across manufacturing, logistics, and service industries.

| Sector | Application | Potential Impact |
|---|---|---|
| Manufacturing | Automated Assembly | $12 trillion revenue |
| Logistics | Warehouse Tasks | Streamlined operations |
| Service | Household Assistance | Enhanced efficiency |

Imagine robots walking, talking, and thinking alongside humans – not as replacements, but as collaborative partners. They’re learning to navigate complex environments, understand natural language, and perform intricate tasks with unprecedented precision. The labor force isn’t being eliminated; it’s being dramatically reimagined. Will you be ready when humanoid robots become your next colleague?

Final Thoughts

Humanoid robots are pushing the boundaries of what’s possible. They’re not just machines, but potential partners in reshaping our world. As they learn to walk, talk, and think, they challenge our understanding of intelligence and capability. But don’t get too comfortable—these robots are still evolving, and their future is as unpredictable as our own. Are we creating tools, or something more?