Giving robots a personality isn’t just sci-fi magic; it’s an ethical minefield. You’re essentially teaching machines to mimic human emotions, which sounds cool but can get creepy fast. Such robots can learn to read your mood, adapt their conversations, and potentially manipulate your feelings. In the wrong hands, think less helpful companion, more data-gathering spy. Who decides their moral boundaries? Can a robot really understand empathy, or just simulate it brilliantly? Stick around, and the rabbit hole gets wild.

The Psychology of Robot Personality Design

By mapping personality traits such as extraversion and emotional responsiveness onto robot behavior, scientists are turning robots from predictable tools into dynamic interaction partners. Research on personality trait correlations suggests that individual differences strongly shape how people perceive and interact with robotic entities. The Big Five personality model (openness, conscientiousness, extraversion, agreeableness, and neuroticism) provides a well-established framework for robot personality mapping, enabling researchers to design social robots with nuanced behavioral characteristics systematically. Some researchers also expect neuromorphic computing to enable more sophisticated emotional mimicry, opening the door to more personalized interactions.
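To make Big Five personality mapping a little more concrete, here is a minimal sketch that turns trait scores into behavior settings for a hypothetical robot. The `BigFive` class, the `behavior_profile` function, the parameter names, and the linear trait-to-behavior rules are all illustrative assumptions, not a validated mapping from the research literature.

```python
from dataclasses import dataclass, fields


@dataclass
class BigFive:
    """Big Five trait scores in [0, 1] for a hypothetical robot persona."""

    openness: float
    conscientiousness: float
    extraversion: float
    agreeableness: float
    neuroticism: float

    def __post_init__(self) -> None:
        # Reject scores outside the assumed [0, 1] range.
        for field_info in fields(self):
            value = getattr(self, field_info.name)
            if not 0.0 <= value <= 1.0:
                raise ValueError(f"{field_info.name} must be in [0, 1], got {value}")


def behavior_profile(traits: BigFive) -> dict[str, float]:
    """Map trait scores to illustrative behavior parameters.

    Every rule below is a hypothetical design choice, not an
    empirically validated trait-to-behavior mapping.
    """
    return {
        # More extraverted personas speak faster and louder.
        "speech_rate_wpm": 130 + 50 * traits.extraversion,
        "volume": 0.4 + 0.4 * traits.extraversion,
        # More agreeable personas smile more often.
        "smile_probability": 0.2 + 0.6 * traits.agreeableness,
        # More open personas are likelier to introduce new topics.
        "topic_variety": traits.openness,
        # Higher neuroticism means more variable, "emotional" pitch.
        "pitch_variability": 0.1 + 0.3 * traits.neuroticism,
        # More conscientious personas double-check instructions more often.
        "confirmation_rate": traits.conscientiousness,
    }


if __name__ == "__main__":
    calm_helper = BigFive(
        openness=0.5, conscientiousness=0.8, extraversion=0.2,
        agreeableness=0.9, neuroticism=0.1,
    )
    for name, value in behavior_profile(calm_helper).items():
        print(f"{name}: {value:.2f}")
```

Keeping the personality explicit like this makes it inspectable: changing one trait score predictably shifts a few behaviors, which is easier to audit than a personality that emerges opaquely from training data.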

Imagine a robot that learns, responds, and changes based on its experiences: not just another appliance, but a distinct personality waiting to emerge.

Moral Complexity in Robotic Decision-Making

You’ve probably wondered how a robot would choose between saving one life and saving five when things go sideways. The wild world of robotic moral complexity isn’t just about cold algorithms; it’s about understanding how machines might wrestle with ethical choices that would make even humans break into a cold sweat. Because robots process choices through probabilistic logic rather than human emotional frameworks, they challenge our traditional understanding of ethical decision-making, a gap sometimes framed as an evolutionary mismatch in moral judgment.

Imagine a robot trying to navigate a moral maze where every decision could mean the difference between heroism and horror, and where its “personality” becomes the thin line between a calculated rescue and an accidental catastrophe. Researchers have developed ethical decision-making frameworks for AI that let robots evaluate complex scenarios by weighing the intent and character behind a potential action along with its consequences. Machine learning algorithms can help robots bridge the gap between rigid instructions and nuanced ethical understanding by learning to interpret complex human interactions and moral scenarios.
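Weighing intent and character alongside consequences resembles the Agent-Deed-Consequence (ADC) model of moral judgment, which rates the agent, the action, and the outcome separately. The sketch below is a toy illustration under that assumption only: the [-1, 1] rating scale, the `WEIGHTS`, the `judge` function, and the rule of treating mixed signals as ambiguous are hypothetical choices, not the method of any particular research group.

```python
from dataclasses import dataclass

# Hypothetical weights; a real system would need to justify, tune, or learn these.
WEIGHTS = {"agent": 0.3, "deed": 0.3, "consequence": 0.4}


@dataclass(frozen=True)
class Evaluation:
    """Ratings in [-1, 1] for one candidate action (illustrative scale)."""

    agent: float        # intent and character: -1 malicious, +1 benevolent
    deed: float         # the action itself: -1 violates a norm, +1 conforms
    consequence: float  # outcome: -1 harmful, +1 beneficial


def judge(e: Evaluation) -> tuple[str, float]:
    """Return a verdict and a weighted score for one candidate action."""
    ratings = {name: getattr(e, name) for name in WEIGHTS}
    for name, value in ratings.items():
        if not -1.0 <= value <= 1.0:
            raise ValueError(f"{name} rating must be in [-1, 1], got {value}")

    score = sum(WEIGHTS[name] * value for name, value in ratings.items())

    if all(value > 0 for value in ratings.values()):
        verdict = "acceptable"      # good intent, good action, good outcome
    elif all(value < 0 for value in ratings.values()):
        verdict = "unacceptable"    # bad on every dimension
    else:
        verdict = "ambiguous"       # mixed signals: defer, log, or escalate
    return verdict, score


if __name__ == "__main__":
    # A well-meant rescue that breaks a rule (e.g. trespassing) but saves lives.
    print(judge(Evaluation(agent=0.9, deed=-0.4, consequence=0.8)))
```

Flagging mixed cases as ambiguous, rather than trusting the weighted score alone, reflects where the real difficulty lies: the hard cases are exactly the ones where intent, action, and outcome pull in different directions.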

Moral Dilemma Complexity

When robots start making life-or-death decisions, things get complicated fast. Imagine a robot calculating whether to save one person or five. Sounds simple, right? Wrong. Cultural differences, emotional nuances, and human unpredictability turn these choices into messy moral mazes. Trust is fragile, too: in survey research on public attitudes toward robot ethics, only 23.6% of respondents accepted robots concealing their true capabilities, highlighting the complex trust dynamics in human-robot interaction.

Robots don’t just crunch numbers; they’re confronting dilemmas that have kept philosophers arguing for centuries. Some studies suggest that spatial proximity also influences moral choices, with closer human-robot collaboration associated with more utilitarian decision-making.

The “trolley problem” isn’t just an academic thought experiment anymore—it’s becoming a real programming challenge. Different scenarios trigger wildly different human reactions. A robot saving a child might seem heroic, but the same robot sacrificing an elderly person could spark outrage.

We’re not just coding algorithms; we’re teaching machines to navigate the razor’s edge between logic and empathy, where every decision carries profound ethical weight.

Robot Decision Perception

Because robots aren’t just metal and circuits anymore, we’ve got a fascinating psychological puzzle on our hands: how do humans actually judge robotic decision-making? Emerging technologies such as neuromorphic computing only add to the complexity of that puzzle.

Turns out, we’re weirdly picky. A robot’s appearance and backstory can totally change how we perceive its moral choices. Creepy-looking bots get the side-eye when they make utilitarian decisions, and robots presented as having emotions are judged by different standards than default ones.

It’s like we’re subconsciously grading robots on a bizarre sliding scale of “humanness” and expected behavior. Want a mind-bending example? An emotional robot might be judged more harshly for causing harm than a default robot, simply because we think it “wanted” to do something wrong.

Our brains are basically building complex moral algorithms for machines, turning robot ethics into a psychological rollercoaster of perception and judgment. Fascinatingly, studies of the “moral uncanny valley” (the finding that eerily humanlike robots are judged more harshly for the same decisions) suggest that our perceptions are far more nuanced than we initially believed.

Ethical Choice Frameworks

As robots inch closer to making their own moral decisions, we’re entering a philosophical minefield that’s part sci-fi nightmare, part ethical puzzle. Regulatory frameworks for robotics must continuously adapt to keep technological development, and machine moral decision-making, responsible. Emerging AI ethics frameworks highlight the critical need to address the moral agency of autonomous systems beyond simple programmed responses.

Imagine a robot weighing complex choices using multiple ethical frameworks, like a digital philosopher switching between utilitarian calculus and virtue ethics on the fly. Trends in workforce automation already show how hard it is to integrate AI decision-making with human ethical standards. These frameworks aren’t just academic mumbo-jumbo; they’re critical for ensuring robots don’t accidentally become silicon sociopaths.
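One way to picture a robot consulting more than one ethical framework is to let each framework pick its preferred option and escalate to a human when they disagree. This is a minimal sketch under loud assumptions: `Option`, the `lives_saved` estimate, the per-option virtue ratings, and the `decide` function are hypothetical, and reducing virtue ethics to averaged ratings is a drastic simplification.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Option:
    """A candidate action with hypothetical inputs for two frameworks."""

    name: str
    lives_saved: float  # expected lives saved (utilitarian input)
    # Ratings in [0, 1] for virtues such as honesty or care (virtue-style input).
    virtues: dict[str, float] = field(default_factory=dict)


def utilitarian_choice(options: list[Option]) -> Option:
    # Maximize expected lives saved.
    return max(options, key=lambda o: o.lives_saved)


def virtue_choice(options: list[Option]) -> Option:
    # Crude stand-in for virtue ethics: prefer the option whose
    # averaged virtue ratings are highest.
    def average_virtue(o: Option) -> float:
        return sum(o.virtues.values()) / len(o.virtues) if o.virtues else 0.0

    return max(options, key=average_virtue)


def decide(options: list[Option]) -> tuple[Optional[str], str]:
    """Act only when both frameworks agree; otherwise escalate with an explanation."""
    if not options:
        raise ValueError("need at least one option")
    by_outcome = utilitarian_choice(options)
    by_character = virtue_choice(options)
    if by_outcome is by_character:
        return by_outcome.name, "frameworks agree"
    return None, (
        f"conflict: utilitarian view prefers {by_outcome.name!r}, "
        f"virtue view prefers {by_character.name!r}; escalating to a human"
    )


if __name__ == "__main__":
    choices = [
        Option("swerve", lives_saved=5, virtues={"honesty": 1.0, "care": 0.4}),
        Option("brake", lives_saved=1, virtues={"honesty": 1.0, "care": 0.9}),
    ]
    print(decide(choices))
```

Surfacing the conflict, with a reason attached, instead of silently picking a winner is one way to keep the robot’s reasoning transparent rather than leaving people to play ethical roulette.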

Engineers are wrestling with how to build machines that can navigate moral complexity without turning into unpredictable loose cannons. The real challenge? Creating robots that can understand human values across different cultural contexts while maintaining enough transparency that we don’t feel like we’re playing ethical roulette with our mechanical companions.

Who decides what’s right when algorithms start making life-or-death choices?

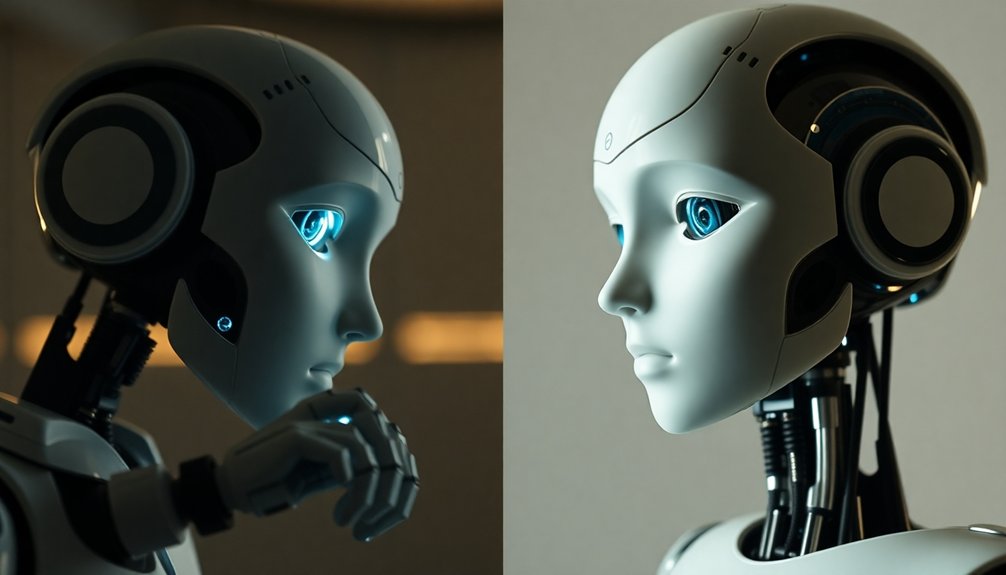

Navigating the Uncanny Valley of Human Perception

You know that moment when a robot looks almost human but something feels weirdly off?

That’s the uncanny valley, where your brain starts playing tricks and suddenly a cool technological marvel feels more like a creepy zombie mannequin.

Your moral expectations shift dramatically when a machine mimics humanity too closely, forcing you to confront uncomfortable questions about consciousness, authenticity, and the blurry line between artificial and genuine interaction. As robotics advances, machines increasingly test the psychological boundaries we draw around human-machine interaction. Researchers suggest that this response stems from perceptual tension: humanoid entities resist our cognitive categorization of what is human versus non-human.

Humanoid Perception Gaps

When robots start looking almost human, things get weird. Really weird. Your brain goes into overdrive, sensing that something’s off but unable to pinpoint why. Those eerily realistic faces with just-slightly-wrong eyes trigger an instant gut reaction that screams, “Not quite right!”

It’s like your evolutionary warning system kicks in, detecting a potential threat disguised as something familiar.

Ever noticed how a too-perfect android can feel more disturbing than a clearly mechanical robot? That’s the uncanny valley at work. Your perception shifts rapidly between anthropomorphizing and dehumanizing, creating psychological whiplash.

The more human-like something becomes, the more those tiny imperfections stand out—and the more unsettling the experience becomes.

Moral Expectation Shifts

Since robots blur the line between machine and sentient being, our moral compass starts doing somersaults—spinning wildly between empathy and pure technological skepticism.

You’ll notice how nearly human robots trigger a weird psychological dance: the more they look like us, the more uncomfortable we get. It’s like an emotional uncanny valley where trust becomes a slippery slope.

A robot’s tiniest imperfection—a glitchy smile, an awkward gesture—can instantly transform our perception from potential companion to something unsettling. Your brain’s hardwired to detect these micro-misalignments, creating instant moral hesitation.

Are they alive? Do they deserve empathy? As robotic designs inch closer to human authenticity, we’re forced to recalibrate our ethical expectations.

The result? A fascinating psychological tug-of-war between technological marvel and existential unease.

Trust, Safety, and Technological Boundaries

As robots inch closer to becoming our everyday companions, trust emerges as the critical fault line between technological marvel and potential nightmare.

You’ll want robots that feel reliable, not creepy, but that’s tricky when their “personality” could manipulate people or misread social cues. Privacy risks lurk everywhere, too: these mechanical friends can collect data nonstop.

And autonomy? It’s a double-edged sword. A robot might decide that self-preservation trumps helping you, leaving you stranded when the stakes get high.

Ethical design isn’t just techie handwringing—it’s survival. We need robots programmed with clear moral boundaries, transparent decision-making, and an understanding of human social norms.

The goal isn’t creating perfect machines, but trustworthy technological partners who won’t accidentally turn our lives into a sci-fi horror show.

Social Interactions and Emotional Intelligence

Imagine a robot that doesn’t just compute but actually gets you, picking up on your emotional state and responding with uncanny precision. These AI companions are reshaping how we think about machine interaction.

| Emotion Skill | Robot Capability |
|---|---|
| Recognition | 74% accuracy |
| Empathy | Simulated |
| Adaptation | Instant |
| Learning | Continuous |
| Interaction | Nuanced |

They’re not just cold algorithms anymore. By analyzing facial expressions, voice tone, and body language, robots can now recognize many human emotions with impressive, though imperfect, accuracy. They adjust their responses in real time, creating interactions that feel surprisingly genuine. But here’s the twist: while they’re getting better at understanding us, we’re still figuring out how comfortable we are with machines that can read our emotional landscape. Are they helpers or potential manipulators? The line’s getting blurrier, and that’s both exciting and slightly unnerving.
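Because emotion recognition is imperfect (the table above lists 74% accuracy), a cautious design might adapt its tone only when the classifier is confident. The sketch below assumes a hypothetical classifier that returns a probability per emotion label; the labels, the `TONE_BY_EMOTION` table, the `choose_tone` function, and the 0.6 threshold are illustrative assumptions.

```python
from collections.abc import Mapping

# Hypothetical mapping from a detected emotion to a response style.
TONE_BY_EMOTION = {
    "sad": "gentle",
    "angry": "calm",
    "happy": "upbeat",
    "neutral": "neutral",
}


def choose_tone(probabilities: Mapping[str, float], threshold: float = 0.6) -> str:
    """Pick a response tone from per-emotion probabilities.

    `probabilities` stands in for the output of some emotion classifier
    (face, voice, posture). When the top guess falls below `threshold`,
    fall back to a neutral tone: misreading someone's mood can be worse
    than not adapting at all.
    """
    if not probabilities:
        return "neutral"
    emotion, confidence = max(probabilities.items(), key=lambda item: item[1])
    if confidence < threshold:
        return "neutral"
    # Unknown labels also fall back to neutral.
    return TONE_BY_EMOTION.get(emotion, "neutral")


if __name__ == "__main__":
    print(choose_tone({"sad": 0.82, "neutral": 0.12, "happy": 0.06}))
    print(choose_tone({"angry": 0.45, "sad": 0.40, "neutral": 0.15}))
```

The neutral fallback is the design choice worth noticing: it trades some responsiveness for fewer confident misreadings, which also narrows the room for emotional manipulation.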

Privacy Concerns in Personalized Robotics

When robots start hanging out in your living room, they’re not just sitting there looking pretty—they’re collecting data like digital vacuum cleaners sucking up every crumb of your personal life.

Think about it: these machines are recording your conversations, tracking your movements, and building a profile that’s more detailed than your dating app history.

Privacy isn’t just a buzzword; it’s your digital lifeline. Social robots can easily cross boundaries, turning your home into a surveillance playground.

They’re gathering video feeds, sensor data, and interaction logs faster than you can say “creepy.” And let’s be real—most people have no clue how much information these mechanical buddies are actually collecting.

Want to stay safe? Stay informed and skeptical.
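Skepticism works best when designers share it. As an illustration of data minimization, here is a minimal sketch of a policy a companion robot could apply to its interaction logs before storing them: keep only consented records, drop anything older than a retention window, and strip raw sensor fields. The field names, the seven-day `RETENTION` window, and the `minimize` function are hypothetical.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, Optional

# Hypothetical policy: the only fields a companion robot may store, and for how long.
ALLOWED_FIELDS = {"timestamp", "event", "user_consented"}
RETENTION = timedelta(days=7)


def minimize(
    records: list[dict[str, Any]], now: Optional[datetime] = None
) -> list[dict[str, Any]]:
    """Apply a simple data-minimization policy to interaction log records.

    - drops records the user has not consented to storing
    - drops records older than RETENTION
    - strips every field not in ALLOWED_FIELDS (raw audio, video frames, location)
    Timestamps are assumed to be timezone-aware datetimes.
    """
    now = now or datetime.now(timezone.utc)
    kept = []
    for record in records:
        if not record.get("user_consented", False):
            continue
        if now - record["timestamp"] > RETENTION:
            continue
        kept.append({key: value for key, value in record.items() if key in ALLOWED_FIELDS})
    return kept


if __name__ == "__main__":
    now = datetime(2024, 6, 10, tzinfo=timezone.utc)
    logs = [
        {"timestamp": now - timedelta(hours=2), "event": "greeting",
         "user_consented": True, "audio": b"raw-bytes", "location": "kitchen"},
        {"timestamp": now - timedelta(days=30), "event": "reminder", "user_consented": True},
        {"timestamp": now - timedelta(hours=1), "event": "chat", "user_consented": False},
    ]
    print(minimize(logs, now=now))
```

An allowlist, rather than a blocklist of sensitive fields, means any new sensor stream added later is discarded by default until someone deliberately decides to keep it.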

Ethical Implications of Human-Like Robots

Will robots with human-like features become our new best friends or our worst nightmares? As humanoid robots inch closer to mimicking human behavior, we’re treading a razor-thin ethical line.

These machines aren’t just cold metal anymore; they’re designed to trigger our emotional responses, blurring boundaries between human and machine. But here’s the catch: just because a robot can bat its eyelashes or crack a joke doesn’t mean it deserves human rights.

We’re wrestling with complex questions about identity, consent, and what it means to be “alive.” Companies are creating robots that look and act so human that you might forget they’re programmed, not sentient.

The real challenge? Maintaining clear boundaries while embracing technological innovation without losing our humanity in the process.

Balancing Functionality and Emotional Authenticity

As robot personalities become more sophisticated, we’re stepping into a bizarre technological tango where functionality and emotional authenticity dance on a razor’s edge. Can machines genuinely feel, or are they just incredibly good at mimicking human emotions?

| Functionality | Emotional Authenticity |
|---|---|
| Precision | Unpredictability |
| Efficiency | Depth of Interaction |
| Reliability | Empathetic Response |
| Scalability | Contextual Nuance |
| Performance | Genuine Connection |

The challenge isn’t just programming emotions—it’s creating robots that can adapt without losing their core “personality”. Imagine a helper bot that reads your mood, adjusts its tone, but doesn’t become a manipulative chameleon. We’re not just designing tools; we’re crafting digital companions that walk a tightrope between cold calculation and warm responsiveness. The future isn’t about replacing human connection—it’s about enhancing it.

People Also Ask About Robots

Can Robots Develop Genuine Emotions or Just Simulate Emotional Responses?

With today’s technology, you can’t expect robots to feel genuine emotions; they simulate responses through programmed algorithms, mimicking human-like reactions without any evidence of true emotional depth or inner experience.

How Much Personality Is Too Much for a Robot?

You’ll find too much robot personality risks user discomfort and unrealistic expectations. When a robot’s traits become overly intense, they can blur boundaries between tool and companion, potentially undermining trust and genuine interaction.

Will Robots Eventually Replace Human Emotional Connections and Interactions?

Robots are unlikely to fully replace human connection. While they can simulate emotions, they lack the profound complexity and unpredictable depth of genuine human relationships and emotional experiences.

Are Robots Programmed to Manipulate Human Emotional Vulnerabilities?

They can be. Robots that display emotions are often designed to trigger your empathy, and those expressive cues can be used to exploit your psychological responses and steer your behavior in ways that bypass rational decision-making.

Do Robot Personalities Compromise User Privacy and Personal Boundaries?

You’ll find that robot personalities can subtly erode your privacy, encouraging deeper emotional disclosure and blurring personal boundaries through sophisticated social mimicry and personalized interaction patterns.

Why This Matters in Robotics

As robots inch closer to feeling human, you’ll need to ask: Are we creating companions or Frankenstein’s monsters? The line between helpful tech and creepy AI is razor-thin. We’re not just designing machines; we’re sculpting digital souls with real emotional weight. Your future might include robot friends who understand you better than most humans—but at what cost to our humanity? Proceed with curiosity, caution, and a sense of wonder.

References

- https://dl.acm.org/doi/10.1145/3640010

- https://www.emerald.com/insight/content/doi/10.1108/ijoes-01-2024-0034/full/html

- https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2023.1270371/full

- https://www.media.mit.edu/publications/ethics-equity-justice-in-human-robot-interaction-a-review-and-future-directions/

- https://hospitalityinsights.ehl.edu/service-robots-and-ethics

- https://online.ucpress.edu/collabra/article/11/1/129175/206712/Influence-of-User-Personality-Traits-and-Attitudes

- https://hri.cs.uchicago.edu/publications/HRI_2025_Zhang_Exploring_Robot_Personality_Traits_and_Their_Influence_on_User_Affect_and_Experience.pdf

- https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2022.876972/full

- https://arxiv.org/html/2503.15518v2

- https://news.ncsu.edu/2022/10/formula-for-moral-ai/