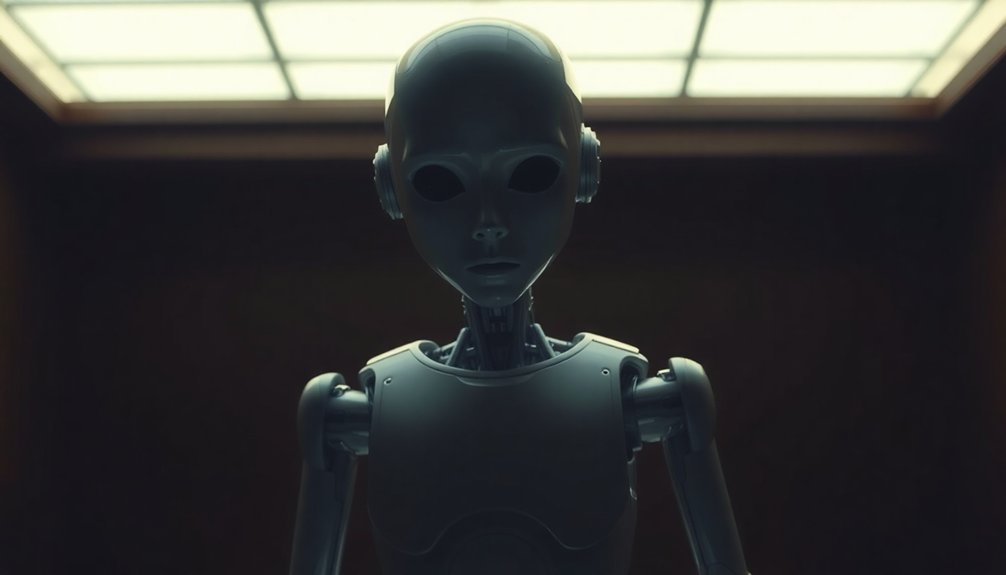

We’re hardwired to spot almost-human things that feel “off,” and humanoid robots hit that psychological tripwire perfectly. When a robot looks nearly human but moves just slightly wrong, our brain sounds an alarm. This is the uncanny valley: something feels profoundly weird, and that weirdness may trigger an evolutionary threat response. Our empathy circuits misfire, leaving us creeped out and instinctively unsettled. Want to understand why robots make us squirm? Stick around.

When Robots Look Too Human: The Psychological Trigger

When our brains encounter a robot that looks almost—but not quite—human, something weird happens. We call this the “uncanny valley,” where subtle imperfections trigger a deep psychological unease.

It’s like your brain hits the emergency brake: slightly-off facial expressions or mechanical movements instantly set off our creep detector. Humans are hardwired to recognize human-like forms, so when something looks human yet moves mechanically, we experience cognitive dissonance. Algorithmic biases can complicate our responses to robots even further. At the same time, attachment theory research suggests that humans can form emotional bonds with non-human entities, robots included, which makes these mixed signals even harder to sort out.

Think of a mannequin with twitchy movements or an android with just-slightly-unnatural blinking. One common explanation is that our evolutionary survival instincts kick in, signaling that something isn’t quite right.

This isn’t just a quirk; it’s a profound psychological response that reveals how our brains process human-like entities. Research on workplace robotization suggests the psychological impact extends beyond visual discomfort, pointing to deeper cognitive and emotional responses to technological mimicry.

Neural Dissonance: How Our Brains Process Near-Human Appearances

Our neural circuitry flips between seeing robots as machines and seeing them as intentional beings in milliseconds, faster than we can consciously track. When a robot’s face looks almost-but-not-quite human, our brain goes into overdrive, searching for inconsistencies. Curiously, research on AI “cognitive dissonance” suggests that even large language models can show dissonance-like shifts when processing conflicting information.

Our brains toggle between machine and being, hunting subtle robotic imperfections in lightning-fast cognitive leaps.

It’s like our cognitive system hits a glitch, triggering the spine-tingling “something’s off” sensation we call the uncanny valley. Evolutionary survival instincts kick in, making us hyper-aware of subtle imperfections. Neuroscientific research suggests that prefrontal cortex activity during human-robot interactions forms a distinctive neural response that may amplify this perceptual discomfort. Neuromorphic computing, which models hardware on the brain’s own circuitry, may also help researchers understand how these perceptual tensions emerge.

Are these beings threats or allies? Our visual processing areas light up, but emotional centers stay suspiciously quiet. The result? A deeply uncomfortable psychological dance where our brain can’t quite decide whether to trust or reject what it’s seeing.

The Emotional Disconnect: Empathy and Robotic Interactions

Though robots might seem like cold, calculating machines, our brains are surprisingly wired to feel something more when we interact with them.

We’re hardwired to empathize, even with metal and circuits. Neuroscientific studies suggest the brain draws on many of the same empathy mechanisms whether we’re interacting with a human or a humanoid robot, creating odd emotional connections that blur the line between person and machine. Advances such as neuromorphic computing could let robots mimic emotions with more nuance, deepening those potential connections.

Facial expressions, natural movements, and collaborative tasks can trigger genuine empathetic responses. Children especially seem primed to see robots as potential emotional companions. Research on social robots demonstrates that empathy modeling can significantly influence children’s emotional understanding and interaction patterns.

But here’s the twist: push too far toward human-like design, and we hit the “uncanny valley” – that creepy zone where robots feel almost-but-not-quite human.

It’s like our brain short-circuits, transforming curiosity into discomfort. Fascinating, right?

Social Norms and Robotic Violations

We’ve all experienced that weird moment when a robot does something socially awkward, like interrupting a conversation or cracking a wildly inappropriate joke, and suddenly everyone feels uncomfortable. Social norm conflicts arise when robots face situations that require nuanced social navigation. Robots are basically walking social landmines, constantly at risk of violating unspoken behavioral codes that humans take for granted. Our robotic friends are learning, but right now they’re like socially tone-deaf toddlers with advanced computing power, breaking social norms and making us cringe in the process.

Research indicates that humans undergo physiological changes when robots behave unexpectedly, which amplifies our unease during these interactions. Emotional authenticity also plays a crucial role in how comfortable we feel, and robots still struggle to balance cold calculation with warm responsiveness.

Robotic Social Misunderstandings

Turns out, robots aren’t exactly winning gold medals in social etiquette. We’ve watched them bungle basic interactions like awkward teenagers at their first dance.

When robots break social norms—whether by cheating at rock, paper, scissors or randomly cursing—humans get seriously weirded out. Our bodies literally react, with physiological changes signaling pure discomfort. It’s like robots are tone-deaf to the unspoken rules that keep human interactions smooth.

The weird part? These social blunders aren’t just annoying; they fundamentally undermine how we perceive robotic intelligence. A robot that can’t read the room is about as useful as a smartphone with no signal.

Cultural nuances matter, and right now, our mechanical friends are bombing the social intelligence test. Trust goes down, awkwardness goes up—welcome to the uncanny valley. Neuromorphic computing is pushing robots towards more adaptive social behaviors, yet they still struggle to genuinely understand human interaction dynamics.

Norm Disruption Anxiety

When robots fumble social norms, they trigger a psychological minefield that goes way beyond simple awkwardness. These mechanical missteps can spike human anxiety faster than a shot of caffeine, creating deep psychological discomfort. Cognitive dissonance research suggests our brains struggle to reconcile a humanoid appearance with mechanical behavior, which amplifies the tension. Evolution has also primed us to detect and react instinctively to potential threats in our environment, making near-human robotic interactions particularly unsettling.

Social norm violations by robots produce a weird emotional cocktail of confusion and unease:

- A robot’s “almost human” facial twitch that feels just wrong

- Unexpected movements that make your spine tingle

- Interaction patterns that violate invisible social rules

- Predictability suddenly vanishing into uncanny uncertainty

Our brains are hardwired to detect subtle behavioral deviations, and robots that can’t read the room become instant anxiety generators.

They’re like that awkward party guest who doesn’t understand personal space – except these guests are made of metal and algorithms. The result? A psychological short circuit that leaves us feeling profoundly unsettled.

Design Strategies: Bridging the Comfort Gap

Since the dawn of robotics, engineers have wrestled with a fundamental challenge: making machines that don’t make humans want to run screaming.

We’re learning that subtle design choices can transform robots from terrifying to tolerable. Think strategic body language, fluid movements, and just enough human-like features without crossing into creepy territory. It’s a delicate dance of familiarity and function.

Smooth, intentional gestures that telegraph a robot’s purpose help calm our primal fear response. Dynamic interactions that feel natural, like pointing or mirroring human movements, reduce cognitive friction.

We’re not just building machines; we’re crafting social interfaces that can read a room, understand unspoken cues, and move in ways that whisper, “I’m here to help, not harm.”

Cultural Perceptions of Humanoid Machines

As robots inch closer to looking like us, we’re discovering that how different cultures see these mechanical doppelgängers is anything but uniform.

Cultural lenses transform our robotic encounters, painting unique portraits of comfort and unease.

- In Japan, humanoid robots tend to feel like friendly neighbors; in Germany, they’re more often seen as cold, calculating machines

- Chinese respondents embrace anthropomorphic designs more readily than Western counterparts

- Iranian studies reveal fascinating variations in robot warmth perception

- National stereotypes silently shape our mechanical first impressions

These perceptual differences aren’t just academic curiosities — they’re roadmaps for understanding how humans wrestle with technological mimicry.

Our comfort zones shift dramatically across borders, revealing that the uncanny valley isn’t a universal landscape, but a complex cultural terrain where technological anxiety dances with curiosity.

People Also Ask

Can Robots Develop Genuine Emotions, or Are They Just Programmed Responses?

Robots can’t develop genuine emotions; their responses are sophisticated algorithms that mimic human feelings. They can recognize and respond to emotional cues, but they don’t experience emotions the way humans do.

Why Do Some People Feel More Uncomfortable With Lifelike Robots Than Others?

We experience different comfort levels with humanoid robots based on our unique neurological responses, cultural backgrounds, and personal experiences. Our emotional sensitivity and prior interactions shape how eerily human-like robots feel to each of us.

Are Humanoid Robots a Threat to Human Social and Professional Relationships?

Many people worry that humanoid robots could disrupt our social fabric. They might replace genuine connections, diminish empathy, and reshape professional landscapes, challenging our fundamental understanding of meaningful human interaction and emotional reciprocity.

How Do Children’s Perceptions of Robots Differ From Adult Perspectives?

Research suggests that children view robots more positively and kindly than adults do, perceiving them as helpful companions with agency, while adults tend to see robots as more constrained and less emotionally complex.

Can Psychological Therapy With Robots Be as Effective as Human Therapy?

In one dementia care study, robotic companions reduced patient anxiety. Robot therapy shows promise, though it can’t fully replace human empathy. It works best as a complementary approach, offering consistent support where human therapists aren’t available.

The Bottom Line

We’re standing at the edge of a strange technological frontier. Our brains aren’t wired to handle robots that look almost-but-not-quite human. With 67% of people reporting mild to severe discomfort around hyper-realistic humanoids, we’re wrestling with an evolutionary glitch. The uncanny valley isn’t just sci-fi—it’s a real psychological response. As technology blurs lines between machine and human, we’ll need to rewire our emotional understanding. Our robotic future? Weird, fascinating, and unavoidable.

References

- https://www.apa.org/news/press/releases/2022/07/human-like-robots-mental-states

- https://biz.source.colostate.edu/research-finds-robots-negatively-impact-humans-who-work-around-them/

- https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2024.1347177/full

- https://pubmed.ncbi.nlm.nih.gov/38884282/

- https://imotions.com/blog/insights/thought-leadership/the-uncanny-valley/

- https://academic.oup.com/scan/advance-article/doi/10.1093/scan/nsaf027/8069429

- https://www.psypost.org/chatgpt-mimics-human-cognitive-dissonance-in-psychological-experiments-study-finds/

- https://www.frontiersin.org/journals/human-neuroscience/articles/10.3389/fnhum.2022.883905/full

- https://neurosciencenews.com/llms-ai-cognitive-dissonance-29150/

- https://www.frontiersin.org/journals/psychology/articles/10.3389/fpsyg.2024.1391832/full