Voice commands turn robots from dumb machines into smart sidekicks through a wild tech magic show. Your spoken words hit a mic, get digitized, and are transformed by neural networks that break down sounds into meaningful chunks. Machine learning algorithms then translate those acoustic signals into precise movements, turning “move forward” into actual robotic steps. Curious how a few words can control a machine? Stick around, and you’ll see the future unfold.

Audio Signal Capture and Preprocessing

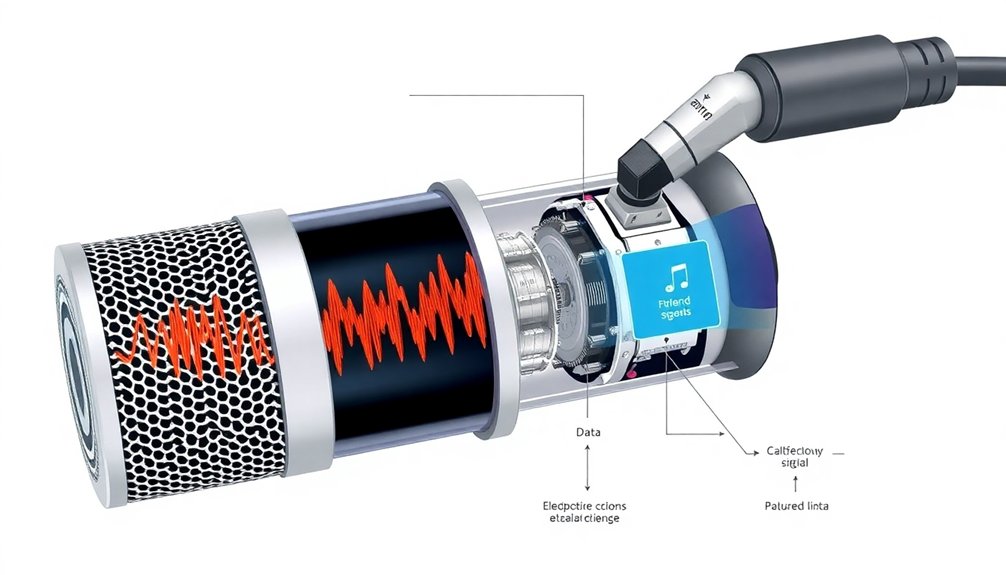

When robots need to understand human speech, they start by turning sound into something they can actually process. Audio signal capture begins with a microphone—basically a tiny translator converting sound waves into electrical whispers.

These analog signals then go through a digital makeover: an analog-to-digital converter samples the wave thousands of times per second and stores each sample as a number, transforming messy, wavy sound into crisp binary data robots can work with.
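To make the digitizing step concrete, here is a minimal sketch of what an analog-to-digital converter does, written in plain Python. The 16 kHz sample rate, 16-bit depth, and the `tone` function standing in for a microphone voltage are all assumptions for illustration; real hardware does this in silicon, not in a loop.

```python
import math

def digitize(signal_fn, duration_s, sample_rate_hz=16000, bit_depth=16):
    """Sample a continuous signal at a fixed rate and quantize each sample."""
    max_level = 2 ** (bit_depth - 1) - 1  # 32767 for 16-bit audio
    n_samples = round(duration_s * sample_rate_hz)
    samples = []
    for n in range(n_samples):
        t = n / sample_rate_hz                         # time of this sample in seconds
        amplitude = max(-1.0, min(1.0, signal_fn(t)))  # clip to the converter's input range
        samples.append(round(amplitude * max_level))   # store as a signed integer
    return samples

def tone(t):
    """Hypothetical microphone signal: a 440 Hz sine wave at half scale."""
    return 0.5 * math.sin(2 * math.pi * 440 * t)

pcm = digitize(tone, duration_s=0.01)
print(len(pcm), pcm[:4])
```

Ten milliseconds of sound becomes 160 integers. Everything downstream works on lists of numbers like this one.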

But wait, raw audio isn’t perfect. Preprocessing is where things get interesting. Noise reduction kicks out background sounds like your neighbor’s lawn mower, while filtering smooths out audio imperfections.

Think of it like noise-canceling headphones, but for robot ears. The goal? Crystal-clear audio signals that let robots parse even mumbled commands with robotic precision.
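Here is a rough sketch of what a preprocessing chain can look like. It assumes samples already scaled to the range -1.0 to 1.0, and it uses deliberately simple stand-ins: a pre-emphasis filter for the filtering step and a crude noise gate for noise reduction. The function names and thresholds are made up for illustration, and production systems use far more capable methods.

```python
def remove_dc_offset(samples):
    """Center the waveform around zero."""
    mean = sum(samples) / len(samples)
    return [s - mean for s in samples]

def pre_emphasis(samples, coeff=0.97):
    """First-order high-pass filter: y[n] = x[n] - coeff * x[n-1]."""
    return [samples[0]] + [
        samples[n] - coeff * samples[n - 1] for n in range(1, len(samples))
    ]

def noise_gate(samples, threshold):
    """Crude noise reduction: silence anything quieter than the threshold."""
    return [s if abs(s) >= threshold else 0.0 for s in samples]

def normalize_peak(samples):
    """Scale so the loudest sample has magnitude 1.0."""
    peak = max(abs(s) for s in samples)
    return list(samples) if peak == 0 else [s / peak for s in samples]

def preprocess(samples, gate_threshold=0.02):
    """Run the whole chain in order."""
    cleaned = remove_dc_offset(samples)
    cleaned = pre_emphasis(cleaned)
    cleaned = noise_gate(cleaned, gate_threshold)
    return normalize_peak(cleaned)

raw = [0.1, 0.1, 0.9, -0.7, 0.1, 0.1]
print(preprocess(raw))
```

The order matters a little: gating before normalizing keeps the quiet background from being amplified along with the speech.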

Speech-to-Text Conversion Techniques

When robots need to understand your voice, they first break down sound waves into microscopic audio fingerprints through sophisticated acoustic signal processing techniques.

Your spoken words get transformed into tiny sonic building blocks called phonemes, the smallest sound units that tell one word from another (think of the “b” in “bat” versus the “p” in “pat”). They’re like the DNA of human speech, and machines decode them with remarkable precision.

You might think teaching robots to understand language is rocket science, but it’s really about translating the complex music of human communication into a digital language machines can comprehend.

Acoustic Signal Processing

Ever wondered how robots magically turn your spoken words into actionable commands? Acoustic signal processing is like a translator for sound waves. When you bark an order, your voice hits the robot’s microphone and gets transformed from messy analog squiggles into crisp digital data.

Think of it as turning a wild, unreadable scribble into neat, machine-friendly text. The secret sauce? Fast Fourier Transform breaks down your voice into frequency components, while Mel-Frequency Cepstral Coefficients extract the juicy phonetic details.

It’s like a digital sound detective pulling apart each syllable. Deep learning models—specifically LSTM networks—then work their magic, connecting these sound fragments into meaningful commands. Suddenly, your robot isn’t just hearing noise; it’s understanding exactly what you want.
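If you want to see the frequency breakdown in action, here is a small self-contained sketch: a textbook radix-2 FFT, a Hann window, and the standard Hz-to-mel conversion that MFCC pipelines build on. It is pure Python for readability; the helper names and the 1 kHz test tone are assumptions for illustration. A full MFCC extractor would add a mel filterbank, a log step, and a discrete cosine transform on top of this.

```python
import cmath
import math

def fft(x):
    """Recursive radix-2 Cooley-Tukey FFT; length must be a power of two."""
    n = len(x)
    if n <= 1:
        return list(x)
    if n % 2:
        raise ValueError("length must be a power of two")
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        twiddle = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddle
        out[k + n // 2] = even[k] - twiddle
    return out

def hann_window(frame):
    """Taper the frame edges to reduce spectral leakage."""
    n = len(frame)
    return [s * (0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)))
            for i, s in enumerate(frame)]

def dominant_frequency(frame, sample_rate_hz):
    """Return the frequency (Hz) of the strongest bin in one frame."""
    spectrum = fft(hann_window(frame))
    half = len(frame) // 2  # bins above this mirror the lower half for real input
    magnitudes = [abs(c) for c in spectrum[:half]]
    peak_bin = max(range(half), key=magnitudes.__getitem__)
    return peak_bin * sample_rate_hz / len(frame)

def hz_to_mel(freq_hz):
    """Map a frequency onto the perceptual mel scale used by MFCCs."""
    return 2595 * math.log10(1 + freq_hz / 700)

SAMPLE_RATE = 16000
frame = [math.sin(2 * math.pi * 1000 * n / SAMPLE_RATE) for n in range(512)]
print(dominant_frequency(frame, SAMPLE_RATE), hz_to_mel(1000))
```

Speech is sliced into short overlapping frames like this one, and each frame gets its own spectrum. That sequence of spectra is what the neural network actually sees.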

Phoneme Recognition Methods

Decoding human speech isn’t rocket science; it’s sound science with a technological twist. Phoneme recognition breaks down spoken language into tiny sound units that robots can understand. Think of it like audio Lego pieces that snap together to form words.

Your robot’s microphone captures sound waves, converting them into digital signals faster than you can blink. Advanced algorithms then extract key acoustic features, using smart language models to predict likely word sequences. Long Short-Term Memory networks help connect these phoneme puzzle pieces across longer speech distances, making voice recognition eerily accurate.

The magic happens through sophisticated decoding techniques that minimize errors, transforming raw sound into precise text commands. It’s not perfect, but it’s pretty close—and getting smarter every day.
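The article doesn’t say which decoder real systems use, so treat this as one plausible illustration: a greedy, CTC-style decoder that picks the most likely phoneme for each audio frame, collapses repeats, and drops a special blank symbol. The phoneme labels, probabilities, and the tiny `LEXICON` are all invented for the example.

```python
BLANK = "_"  # placeholder label meaning "no phoneme in this frame"

def greedy_decode(frame_scores):
    """Collapse per-frame best guesses into a phoneme sequence.

    frame_scores is a list of dicts mapping phoneme label -> probability.
    """
    best_per_frame = [max(scores, key=scores.get) for scores in frame_scores]
    phonemes = []
    previous = None
    for label in best_per_frame:
        # keep a label only when it changes and is not the blank symbol
        if label != previous and label != BLANK:
            phonemes.append(label)
        previous = label
    return phonemes

# Hypothetical pronunciation dictionary (ARPAbet-style labels).
LEXICON = {
    ("M", "UW", "V"): "move",
    ("S", "T", "AA", "P"): "stop",
}

def phonemes_to_word(phonemes):
    """Look up a phoneme sequence; returns None if the word is unknown."""
    return LEXICON.get(tuple(phonemes))

frames = [
    {"M": 0.8, "UW": 0.1, BLANK: 0.1},
    {"M": 0.7, "UW": 0.2, BLANK: 0.1},
    {"M": 0.1, "UW": 0.2, BLANK: 0.7},
    {"UW": 0.9, "V": 0.05, BLANK: 0.05},
    {"UW": 0.6, "V": 0.3, BLANK: 0.1},
    {"V": 0.8, "UW": 0.1, BLANK: 0.1},
    {"V": 0.1, "UW": 0.1, BLANK: 0.8},
]
print(phonemes_to_word(greedy_decode(frames)))
```

Greedy decoding is the simplest option. Beam search and language-model rescoring trade extra compute for fewer errors.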

Natural Language Processing Fundamentals

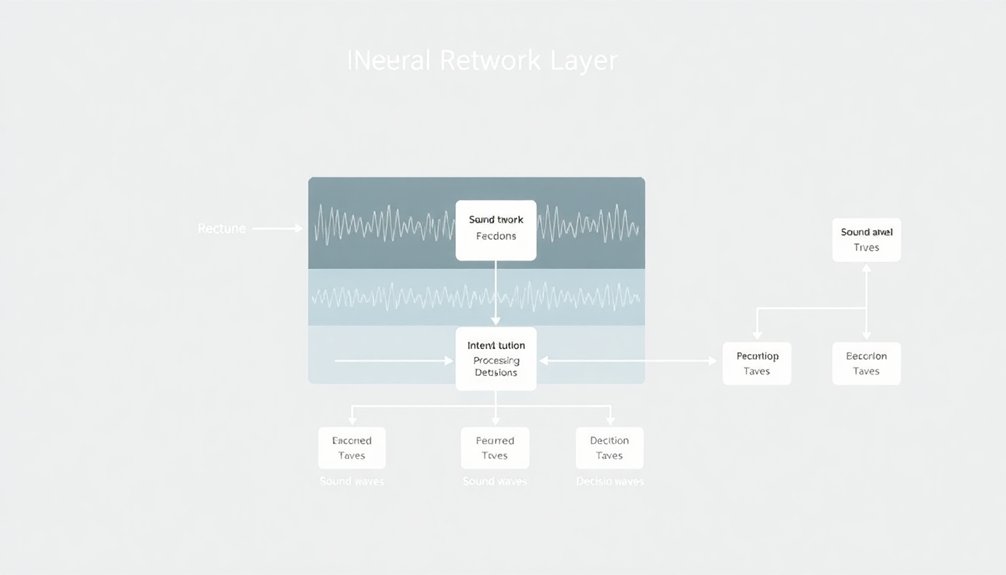

When you break down sound waves, you’re basically teaching robots to eavesdrop on human conversations like digital linguists with superhuman hearing.

Language pattern recognition lets these mechanical listeners transform random acoustic vibrations into meaningful sequences, sorting through the chaos of human speech to extract coherent commands.

Neural networks enable robots to process complex linguistic inputs, converting auditory signals into precise computational instructions that guide their subsequent physical movements.

Sound Wave Deconstruction

Because robots aren’t mind readers (yet), they rely on some seriously cool sound wave magic to understand human speech. Your voice travels through the air as vibrating sound waves, which a microphone enthusiastically captures and transforms into digital data. Machine learning algorithms enhance the robot’s ability to process complex sensory inputs like audio signals.

But how exactly does this voice recognition wizardry work?

- Sound waves get chopped into tiny phonetic pieces

- Acoustic models map these audio signals like linguistic treasure maps

- Advanced algorithms predict the most likely word sequences

Imagine your robot buddy dissecting your spoken command like a linguistic surgeon, breaking down each syllable and comparing it against massive language databases.

LSTM networks help process these complex audio signals, so your robot can tell “move forward” from a similar-sounding phrase like “groove onward”, because precision matters when you’re talking to machines that can actually move.
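The “predict the most likely word sequence” step can be sketched with a toy bigram language model. This is a stand-in for the far larger models real recognizers use, and every log-probability below is made up for illustration.

```python
# Hypothetical log-probabilities for word pairs; "<s>" marks the sentence start.
BIGRAM_LOG_PROBS = {
    ("<s>", "move"): -1.0,
    ("move", "forward"): -0.7,
    ("move", "for"): -3.0,
    ("for", "word"): -4.0,
}

def sequence_score(words, unseen_log_prob=-10.0):
    """Sum bigram log-probabilities; unseen pairs get a heavy penalty."""
    padded = ["<s>"] + list(words)
    return sum(
        BIGRAM_LOG_PROBS.get(pair, unseen_log_prob)
        for pair in zip(padded, padded[1:])
    )

def pick_best(candidates):
    """Choose the candidate transcript the language model likes most."""
    return max(candidates, key=lambda text: sequence_score(text.split()))

candidates = ["move for word", "moo forward", "move forward"]
print(pick_best(candidates))
```

All three candidates sound nearly identical, but only one is a phrase people actually say to robots, and the scores reflect that.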

Language Pattern Recognition

Through the magic of Natural Language Processing (NLP), robots transform human gibberish into crystal-clear instructions. Your rambling voice commands get dissected by sophisticated algorithms that break down sounds into meaningful patterns. Humanoid robot technologies leverage advanced sensing and AI capabilities to interpret complex voice interactions.

Ever wondered how a robot understands you? It’s all about those tiny sound units called phonemes and smart acoustic models that translate audio signals into comprehensible language.

LSTM networks work like linguistic detectives, tracking context across word sequences and catching nuanced meanings most machines would miss.

Deep learning techniques let robots recognize complex language structures, turning your casual “Hey, grab that wrench” into precise mechanical actions. Voice recognition isn’t just listening—it’s intelligent interpretation, parsing your words with computational precision that would make a linguistics professor weep with joy.

Command Intent Translation

If you’ve ever barked an order at a robot and wondered how it magically transforms your mumbling into precise mechanical action, welcome to the wild world of Command Intent Translation.

Decoding algorithms work overtime to refine your speech into actionable instructions, turning raw language into robotic marching orders.

- Your jumbled “Move that thing over there” becomes a precise coordinate translation

- Acoustic models break down audio signals into phonetic puzzle pieces

- LSTM networks maintain context, catching nuanced language patterns you didn’t even realize you used

Sophisticated NLP techniques parse through syntactic structures, predicting likely word sequences and extracting core meanings.

The result? Robots that don’t just hear you, but genuinely understand what you want them to do. It’s part science, part magic—and totally cool.
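Once the words are text, intent translation can be as simple as a lookup, at least in a toy version. The sketch below is rule-based keyword matching, not the neural approach described above, and the `Intent` fields, synonym table, and filler list are all assumptions chosen for illustration.

```python
import re
from dataclasses import dataclass
from typing import Optional

@dataclass
class Intent:
    action: str
    direction: Optional[str] = None
    target: Optional[str] = None

# Hypothetical vocabulary: spoken verb -> canonical robot action.
ACTION_SYNONYMS = {
    "move": "move", "go": "move", "drive": "move",
    "grab": "grasp", "pick": "grasp", "take": "grasp",
    "turn": "turn", "stop": "stop", "halt": "stop",
}
DIRECTIONS = {"forward", "backward", "left", "right"}
FILLER = {"hey", "please", "robot", "the", "that", "a", "an", "up"}

def parse_intent(text):
    """Extract an action, optional direction, and optional target from text."""
    tokens = re.findall(r"[a-z]+", text.lower())
    action = next((ACTION_SYNONYMS[t] for t in tokens if t in ACTION_SYNONYMS), None)
    if action is None:
        return None  # nothing we know how to do
    direction = next((t for t in tokens if t in DIRECTIONS), None)
    leftover = [
        t for t in tokens
        if t not in FILLER and t not in DIRECTIONS and t not in ACTION_SYNONYMS
    ]
    return Intent(action, direction, " ".join(leftover) or None)

print(parse_intent("Hey, grab that wrench"))
print(parse_intent("move forward"))
```

Keyword rules break down quickly on open-ended speech, which is exactly why the learned models earn their keep.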

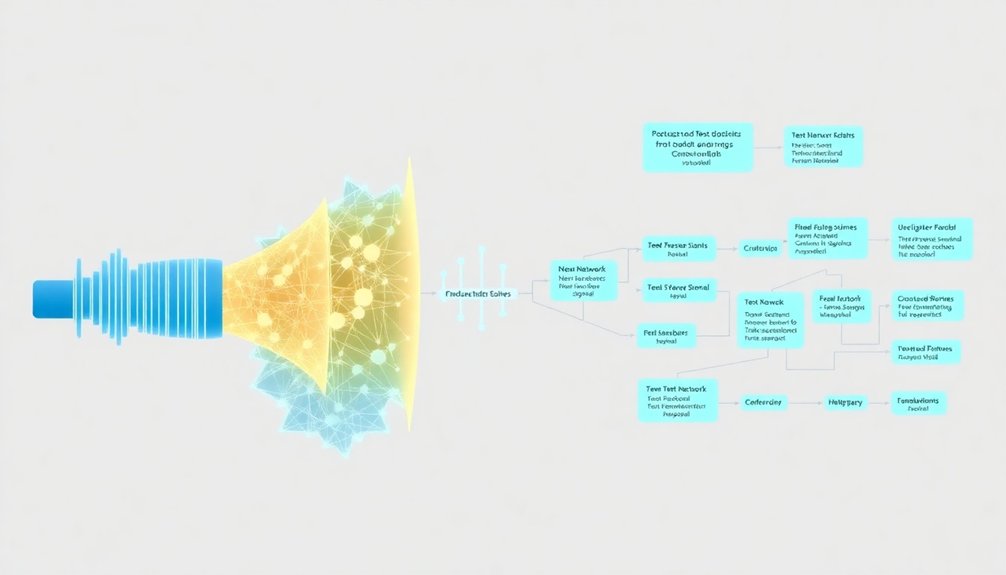

Command Intent Recognition Algorithms

Acoustic and language models team up like detective partners, where one tracks sound patterns while the other checks that the resulting words form a plausible sentence.

Think of it as a real-time translation service that understands not just what you’re saying, but what you actually mean.

Training these algorithms requires massive, diverse datasets that capture everything from Silicon Valley tech-speak to rural drawls, so robots can understand as wide a range of speakers as possible.

Semantic Understanding and Context Analysis

When robots start listening, they’re doing way more than just hearing words—they’re decoding the entire linguistic puzzle. Semantic understanding isn’t just about processing speech; it’s about diving deep into context and intent.

- Acoustic models break down sound waves like linguistic detectives.

- Machine learning algorithms parse complex commands with ninja-like precision.

- LSTM networks track language dependencies like memory champions.

Imagine a robot that doesn’t just hear “grab the red ball” but understands the nuanced meaning behind your request. These smart systems analyze surrounding cues, previous interactions, and subtle linguistic hints to interpret commands accurately.

They’re not just recording words; they’re constructing meaning.
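Here is a small sketch of how context can settle what a command refers to. It assumes the robot already has a list of visible objects and remembers the last thing you talked about; the `SceneObject` and `DialogueContext` classes are hypothetical, and real systems lean on perception and learned models rather than word matching.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class SceneObject:
    name: str
    color: str

PRONOUNS = {"it", "that", "this"}

@dataclass
class DialogueContext:
    visible: List[SceneObject]
    last_referent: Optional[SceneObject] = None

    def resolve(self, phrase):
        """Return the object a phrase refers to, or None if unclear."""
        words = set(phrase.lower().split())
        candidates = [o for o in self.visible if o.name in words]
        if not candidates:
            # no object named: fall back to the previous one for "it" or "that"
            return self.last_referent if words & PRONOUNS else None
        colors = {o.color for o in candidates} & words
        if colors:
            candidates = [o for o in candidates if o.color in colors]
        if len(candidates) != 1:
            return None  # ambiguous: the robot should ask which one
        self.last_referent = candidates[0]
        return self.last_referent

ctx = DialogueContext([
    SceneObject("ball", "red"),
    SceneObject("ball", "blue"),
    SceneObject("cup", "red"),
])
print(ctx.resolve("grab the red ball"))
print(ctx.resolve("now put it down"))
print(ctx.resolve("grab the ball"))
```

The third call returns `None` because two balls are in view. A well-behaved robot would ask “which one?” instead of guessing.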

Robot Command Mapping Mechanisms

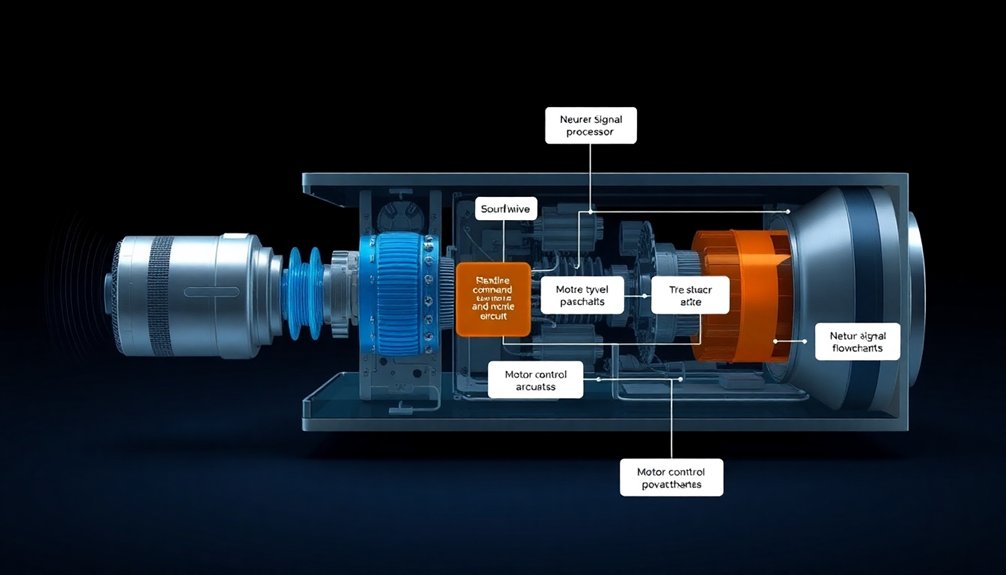

After unraveling how robots decode linguistic puzzles, we’ve got a bigger challenge: turning those understood commands into actual robot actions.

Robot command mapping mechanisms are like a digital translator between human speech and machine movement. Think of it as a complex flowchart where each word triggers specific algorithmic pathways.

Decoding human language into robotic choreography: a digital translation where words become precise mechanical symphonies.

When you say “move forward,” the system doesn’t just hear words—it translates them into precise motor instructions, calculating exact joint angles, wheel rotations, and force requirements.

These mechanisms transform abstract language into concrete robotic behaviors, breaking down vocal instructions into granular computational steps.

It’s like teaching a machine to not just listen, but to truly understand and execute with mechanical precision. Your voice becomes a remote control, transforming spoken intentions into robotic reality—no joystick required.

Cognitive architectures enable robots to strategize and evaluate potential actions before executing a command, adding an intelligent layer to voice-activated movement.
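For a feel of the arithmetic behind “move forward”, here is a sketch for a simple two-wheeled (differential drive) robot. The wheel radius, track width, default distances, and command table are all assumed values for illustration, and a legged or armed robot would need joint-angle math instead.

```python
import math
from dataclasses import dataclass

WHEEL_RADIUS_M = 0.05  # assumed wheel radius
TRACK_WIDTH_M = 0.30   # assumed distance between the two wheels

@dataclass
class WheelCommand:
    left_rotations: float
    right_rotations: float

def drive_straight(distance_m):
    """Both wheels turn the same amount: distance / wheel circumference."""
    rotations = distance_m / (2 * math.pi * WHEEL_RADIUS_M)
    return WheelCommand(rotations, rotations)

def turn_in_place(angle_deg):
    """Wheels turn in opposite directions; positive angle turns left."""
    arc_m = math.radians(angle_deg) * TRACK_WIDTH_M / 2  # path length per wheel
    rotations = arc_m / (2 * math.pi * WHEEL_RADIUS_M)
    return WheelCommand(-rotations, rotations)

# Hypothetical mapping from recognized phrases to motion primitives.
COMMAND_TABLE = {
    "move forward": lambda: drive_straight(0.5),
    "move backward": lambda: drive_straight(-0.5),
    "turn left": lambda: turn_in_place(90),
    "turn right": lambda: turn_in_place(-90),
}

def map_command(text):
    """Translate a recognized phrase into wheel rotations, or None if unknown."""
    handler = COMMAND_TABLE.get(text.strip().lower())
    return handler() if handler else None

print(map_command("move forward"))
print(map_command("turn left"))
```

With these numbers, a half-meter step forward is about 1.59 wheel rotations, and a 90-degree left turn is three quarters of a rotation per wheel in opposite directions.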

Action Execution and Feedback Systems

Robots aren’t just fancy toys waiting to do your bidding. Action execution and feedback systems are what turn an understood voice command into actual, real-world movement, and then report back on how it went. It’s equal parts science and magic.

When you speak a voice command, the robot’s action server springs into life:

- Your words get parsed through predefined order structures

- Specific executors match and translate commands into precise movements

- Feedback mechanisms confirm or report errors in real-time

The magic happens once speech recognition has turned sound waves into text: the parsed command gets routed through a complex network of robotic decision-making.

Imagine telling your robot to “grab that wrench” and watching it interpret, plan, and execute with surgical precision. Each movement is a choreographed dance between your voice, the robot’s programming, and its environmental awareness—proving that communication isn’t just human, it’s mechanical too.
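The three steps above can be sketched as a tiny dispatcher. This is a plain-Python stand-in, not the API of any real robotics framework: the `ActionServer` class, the `Feedback` type, and the `grasp` executor with its reachable-object list are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Feedback:
    success: bool
    message: str

class ActionServer:
    """Routes named orders to executors and always reports an outcome."""

    def __init__(self):
        self._executors = {}

    def register(self, order, executor):
        self._executors[order] = executor

    def handle(self, order, **params):
        executor = self._executors.get(order)
        if executor is None:
            return Feedback(False, f"no executor for order '{order}'")
        try:
            return executor(**params)
        except Exception as exc:  # report failures instead of crashing
            return Feedback(False, f"{order} failed: {exc}")

REACHABLE = {"wrench", "screwdriver"}  # assumed objects within arm's reach

def grasp(target):
    """Hypothetical executor: pretend to pick something up."""
    if target not in REACHABLE:
        raise ValueError(f"{target} is out of reach")
    return Feedback(True, f"grasped {target}")

server = ActionServer()
server.register("grasp", grasp)

print(server.handle("grasp", target="wrench"))
print(server.handle("grasp", target="anvil"))
print(server.handle("dance"))
```

Every path returns a `Feedback` object, so the voice layer always has something to say back, whether that’s “done” or “I can’t reach that.”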

Error Handling and Continuous Learning

Robots aren’t mind readers—yet—which means their voice command systems need serious backup when things go sideways. Error handling is the digital safety net that catches misheard commands before they turn into epic fail moments. When a robot mishears “turn left” as “burn light”, sophisticated algorithms kick in, prompting you to repeat or rephrase your instructions.

Continuous learning transforms these robotic assistants from clunky machines into adaptable companions. Machine learning techniques help them recognize your unique speech patterns, accent quirks, and communication style.

Think of it like training a really smart puppy—each interaction teaches the robot something new. Feedback loops let robots learn from past mistakes, constantly refining their understanding and execution of commands. The result? A smarter, more responsive robotic buddy that actually gets what you’re saying.
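Here is one way the repeat-or-rephrase loop and the learning step might fit together. It assumes the speech recognizer hands over a transcript plus a confidence score between 0 and 1; the command list, threshold, and `CommandInterpreter` class are invented for the example, and real systems adapt their underlying models rather than keeping a lookup table.

```python
KNOWN_COMMANDS = {"turn left", "turn right", "move forward", "stop"}

class CommandInterpreter:
    """Decides whether to execute, ask for a repeat, or ask for a rephrase."""

    def __init__(self, min_confidence=0.6):
        self.min_confidence = min_confidence
        self.corrections = {}  # learned: misheard transcript -> intended command

    def interpret(self, transcript, confidence):
        text = transcript.strip().lower()
        if confidence < self.min_confidence:
            return ("repeat", None)  # audio too unclear to trust
        if text in KNOWN_COMMANDS:
            return ("execute", text)
        if text in self.corrections:
            return ("execute", self.corrections[text])
        return ("rephrase", None)  # heard clearly, but not a known command

    def learn(self, transcript, intended):
        """Remember what the user meant after they clarify."""
        if intended not in KNOWN_COMMANDS:
            raise ValueError(f"unknown command: {intended}")
        self.corrections[transcript.strip().lower()] = intended

robot = CommandInterpreter()
print(robot.interpret("turn left", 0.3))   # too quiet or noisy
print(robot.interpret("burn light", 0.9))  # misheard
robot.learn("burn light", "turn left")     # user clarifies once
print(robot.interpret("burn light", 0.9))  # now understood
```

The first time the robot hears “burn light” it asks you to rephrase. After one correction, the same mishearing maps straight to “turn left.”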

People Also Ask About Robots

How Does a Robot Voice Work?

A voice-controlled robot captures sound through a microphone, converts it to digital signals, processes the speech using recognition software, translates the spoken words into text commands, and then executes precise movements based on your instruction.

What Is the Use of Voice Control in a Robot?

You’ll find voice control empowers robots to understand and execute your commands seamlessly. It allows you to navigate, manipulate objects, and retrieve information hands-free, making complex robotic interactions intuitive and accessible for anyone.

How to Make a Robot With Voice Control?

You’ll need a microcontroller, voice recognition module, and programming language. Connect the module, train voice commands, and configure your robot’s responses. Test and refine the system until it accurately understands and executes your spoken instructions.

How Do Talking Robots Work?

Talking robots convert your speech to text through acoustic analysis, then process commands via neural networks, interpreting phonemes and translating vocal instructions into precise robotic movements.

Why This Matters in Robotics

Voice commands aren’t just sci-fi fantasy anymore—they’re how robots actually understand and execute complex tasks. Sure, you might worry robots will misinterpret everything. But advanced AI is breaking those barriers, translating human speech into precise mechanical actions with remarkable accuracy. The future isn’t about perfect robots, it’s about robots that learn, adapt, and collaborate with us in increasingly intelligent ways.