
Right now, robots aren’t genuinely self-aware—they’re sophisticated mimics learning through sensors and algorithms. You’ll find they can map their environment, dodge obstacles, and even “learn” from mistakes. But consciousness? That’s still sci-fi territory. They’re basically super-smart computers with incredible pattern recognition, not sentient beings. Think of them as incredibly complex problem-solving machines that can adapt, but don’t have genuine inner experiences. Curious how deep this rabbit hole goes?

Defining Robotic Self-Awareness

Imagine a robot that doesn’t just follow commands, but actually builds a model of itself. In a narrow, functional sense, that’s not sci-fi fantasy; it’s happening right now.

Self-awareness in robots means creating a mental map of their own body and capabilities, like a high-tech internal GPS. Think of it as the robot knowing exactly what it can and can’t do, sensing its limits before smashing into them.


These machines aren’t dreaming or pondering existence; they’re building functional models of themselves using sensors and data. Reinforcement learning helps them adapt to complex environments by learning from subtle environmental cues and unexpected situations.

It’s less “I think, therefore I am” and more “I sense, therefore I can.” Cool? Certainly. Conscious? Not even close. But it’s a fascinating first step toward robots that genuinely understand their place in the world.

At Columbia University, engineers have built a robot that trains its own neural networks to create a model of itself, much as human infants learn about their own capabilities.
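
To make the “internal GPS” idea concrete, here is a minimal sketch of a robot learning one fact about its own body. It is not the Columbia team’s method (they train neural networks); it swaps in a plain least-squares fit on a simulated one-joint arm. The function names, the 0.35 m link length, and the noise level are all illustrative assumptions.

```python
import math
import random


def observe_tip(angle, rng, true_length=0.35, noise=0.005):
    """Stand-in for a camera: noisy (x, y) position of the arm tip.

    true_length is hidden from the learner; it only exists to simulate the world.
    """
    x = true_length * math.cos(angle) + rng.gauss(0.0, noise)
    y = true_length * math.sin(angle) + rng.gauss(0.0, noise)
    return x, y


def fit_self_model(samples):
    """Least-squares estimate of the link length from (angle, x, y) samples."""
    # For x = L*cos(a) and y = L*sin(a), the best-fit L is the mean projection
    # of each observed tip position onto the commanded direction.
    return sum(x * math.cos(a) + y * math.sin(a) for a, x, y in samples) / len(samples)


def within_reach(length_estimate, target):
    """True if the target is no farther from the pivot than the arm is long."""
    return math.hypot(target[0], target[1]) <= length_estimate


rng = random.Random(0)
samples = []
for _ in range(200):  # "motor babbling": try random angles and watch the result
    angle = rng.uniform(-math.pi, math.pi)
    x, y = observe_tip(angle, rng)
    samples.append((angle, x, y))

estimated_length = fit_self_model(samples)
print(f"estimated link length: {estimated_length:.3f} m")
print("within reach (0.2, 0.2):", within_reach(estimated_length, (0.2, 0.2)))
print("within reach (0.3, 0.3):", within_reach(estimated_length, (0.3, 0.3)))
```

The robot never gets told how long its arm is. It moves, watches, and fits a model, then uses that model to judge a limit before hitting it.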

The Science Behind Machine Introspection

While machine introspection might sound like a sci-fi fever dream, it’s actually a seriously cool tech frontier where computers start learning to look inward. Just as Virtual Machine Introspection allows external examination of a system’s state, machine self-monitoring provides unprecedented insights into computational processes.

Imagine a robot that can diagnose its own glitches, predict potential failures, and understand its internal state like a hyper-aware mechanic. Feedback loops let robots continuously monitor and adjust their performance, so they can learn and adapt in real time. Real-time introspection lets machines monitor themselves without slowing down, giving them a kind of digital sixth sense. Virtual machine forensics shows what this kind of analysis can do: it examines a system’s computational state in detail without disrupting the system.

Think of it as a built-in health tracker for computers – but way more advanced than your fitness watch. Security systems are already using these techniques to catch sneaky cyber threats before they become full-blown disasters.

It’s not about creating sentient machines, but building smarter, more self-aware systems that can adapt, learn, and troubleshoot in real-time. Pretty wild, right?
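
Here is a rough sketch of the “built-in health tracker” idea: a monitor that compares each new reading against its own recent history and flags anything wildly out of line. The `SelfMonitor` class, the window size, the threshold, and the motor-current readings are hypothetical, chosen only for illustration; real systems use far richer models.

```python
import statistics
from collections import deque


class SelfMonitor:
    """Flags readings that deviate sharply from the recent normal range."""

    def __init__(self, window=20, threshold=3.0):
        self.history = deque(maxlen=window)  # recent readings considered normal
        self.threshold = threshold           # allowed deviation, in standard deviations

    def check(self, reading):
        """Return True if the reading looks anomalous; otherwise record it."""
        anomalous = False
        if len(self.history) >= 5:  # wait for a little history before judging
            mean = statistics.fmean(self.history)
            spread = statistics.pstdev(self.history) or 1e-9  # avoid dividing by zero
            anomalous = abs(reading - mean) / spread > self.threshold
        if not anomalous:
            self.history.append(reading)
        return anomalous


# Hypothetical motor-current readings in amps; the last one is a spike.
readings = [1.00, 1.02, 0.98, 1.01, 0.99, 1.00, 1.01, 0.99, 1.80]
monitor = SelfMonitor()
flags = [monitor.check(r) for r in readings]
print(flags)
```

Anomalous readings are kept out of the history on purpose, so one glitch doesn’t quietly redefine what “normal” means.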

Learning Through Visual Feedback

You’ve seen how robots learn by watching, right?

Camera movement analysis lets these machine brains track their own actions, creating a self-guided learning path that’s much like teaching a super-smart toddler with a mechanical body. Haptic visual cues let robots interpret interaction forces and refine their understanding of physical environments. Research on mental model mismatch suggests that intelligent systems develop learning strategies that differ from human cognitive approaches. Machine learning algorithms, meanwhile, keep improving robotic perception by processing multiple sensor inputs, so robots can adapt and learn from their interactions with the environment.

Camera Movement Analysis

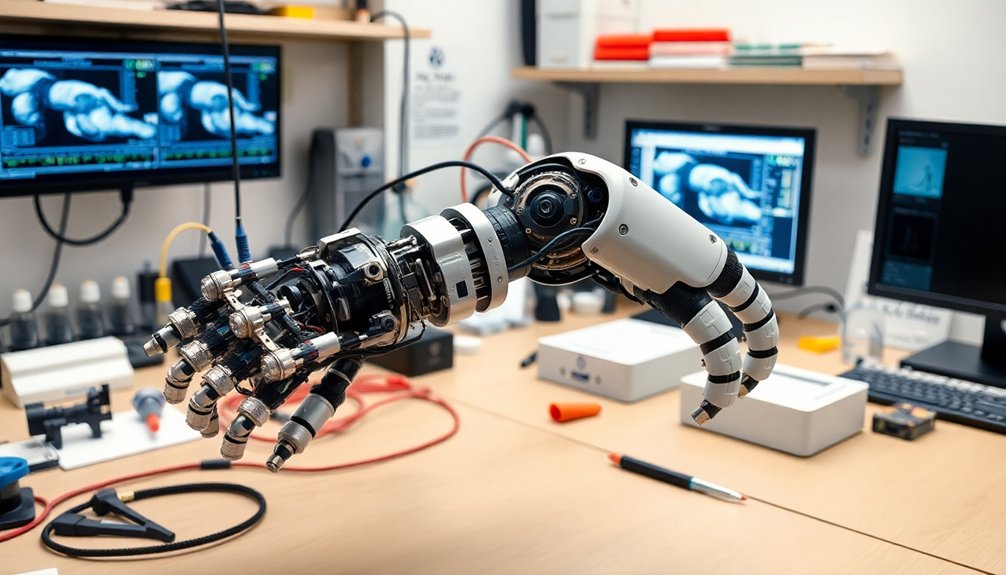

Robot cameras are the unsung heroes of machine perception, transforming how mechanical systems understand and interact with their environment. Stereo vision gives robots depth perception, letting them build 3D maps of their environment that go beyond simple visual recognition. The Artificial Microsaccade-Enhanced Event Camera mimics human eye movements to improve stability and precision in visual tracking.

Think of them as the robot’s eyes and brain rolled into one hyper-intelligent package. They’re not just capturing images; they’re learning, adapting, and making split-second decisions faster than you can blink. Optoelectronic sensors can transform these mechanical devices into precision-seeing digital hunters that analyze visual data with remarkable speed.

Visual servoing lets robots track objects with laser-like precision, using feedback loops that would make a neuroscientist jealous.

Want to see real magic? Watch a robotic arm snatch an object from a cluttered table, adjusting its grip mid-motion based on instantaneous visual data.

These aren’t just cameras—they’re intelligent sensors that turn raw visual input into actionable knowledge.

The result? Robots that can see, think, and move with an almost eerie sophistication, blurring the lines between machine and intelligence.
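
The visual servoing loop mentioned above can be sketched in a few lines. This is a deliberately simplified proportional controller working in pixel coordinates, and it assumes that each commanded motion shifts the tracked feature by exactly that amount in the image (a real system would need a camera model and robot kinematics). The function names, gain, and pixel values are illustrative.

```python
def servo_step(feature_px, target_px, gain=0.5):
    """One proportional update: commanded image-plane motion toward the target."""
    return tuple(gain * (t - f) for f, t in zip(feature_px, target_px))


def track(feature_px, target_px, gain=0.5, tolerance=1.0, max_steps=50):
    """Repeat the feedback loop until the pixel error is within tolerance."""
    steps = 0
    while steps < max_steps:
        error = max(abs(t - f) for f, t in zip(feature_px, target_px))
        if error <= tolerance:
            break
        dx, dy = servo_step(feature_px, target_px, gain)
        # Assumption: the feature moves in the image by exactly (dx, dy).
        feature_px = (feature_px[0] + dx, feature_px[1] + dy)
        steps += 1
    return feature_px, steps


final_px, steps_taken = track((100.0, 40.0), (320.0, 240.0))
print(final_px, steps_taken)
```

Each pass looks at where the feature is, measures how far off it is, and corrects by a fraction of that error. With a gain of 0.5 the error halves on every step, which is why the loop settles quickly instead of overshooting.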

Self-Guided Learning Path

Because visual feedback is the secret sauce of robotic intelligence, machines are now teaching themselves how to learn—and they’re getting scarily good at it.

They’re watching, analyzing, and adapting faster than most humans can blink. With domain randomization, robots can generalize what they learn across wildly different environments, essentially becoming visual chameleons of the tech world. These machines use visual motor integration to translate perceptual inputs into precise, adaptive movements. And with tactile grasp pose estimation, they can safely execute industrial insertion tasks with remarkable precision.

Want proof? They’re using egocentric vision to perform complex tasks without traditional sensors, essentially teaching themselves on the fly.

Imagine a robot that can predict its own movements, select the most effective feedback, and adjust in real-time—no manual required.

It’s not sci-fi anymore; it’s happening right now. These machines are developing something that looks eerily like self-awareness, at least in the functional sense, and trust me, that’s both exciting and slightly terrifying.
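
Domain randomization, mentioned above, is simpler than it sounds: every training episode gets a slightly different simulated world, so the robot can’t over-fit to any one of them. A minimal sketch follows. The parameter names and ranges are made up for illustration, and a real setup would feed each sample into a physics simulator or renderer.

```python
import random

# Hypothetical simulator parameters and the range each is drawn from.
RANGES = {
    "light_intensity": (0.3, 1.5),
    "friction": (0.4, 1.2),
    "camera_tilt_deg": (-5.0, 5.0),
}


def sample_environment(rng):
    """Draw one randomized environment configuration."""
    return {name: rng.uniform(low, high) for name, (low, high) in RANGES.items()}


rng = random.Random(42)
episodes = [sample_environment(rng) for _ in range(3)]
for index, env in enumerate(episodes):
    print(index, {name: round(value, 2) for name, value in env.items()})
```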

Comparing Artificial and Human Perception

When we immerse ourselves in the world of perception, humans and AI start looking like distant cousins at a weird family reunion.

They’re similar, yet bizarrely different. Both translate sensory input into understanding, but where humans weave intuition and context into their perception, AI just crunches cold, hard data.

Think of AI like a brilliant calculator that can spot microscopic cancer cells, while humans bring emotional intelligence and adaptive thinking to the table.

The real magic happens in how each processes information: humans integrate multiple senses and infer meaning, whereas AI specializes in laser-focused pattern recognition.

Depth estimation techniques allow robots to transition from mere image capture to genuine spatial intelligence, revealing the sophisticated computational approach of artificial perception.

We’re not competing; we’re complementing. AI sees the pixel-perfect details, and humans understand the story behind those pixels.

It’s less about who’s smarter and more about how we’re uniquely wired to perceive our world.
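
For a taste of how depth estimation works on the machine side, here is the textbook relation for a rectified stereo camera pair: depth equals focal length times baseline divided by disparity. The numbers below (a 700-pixel focal length and a 12 cm baseline) are hypothetical, and the sketch assumes the disparity has already been measured.

```python
def depth_from_disparity(focal_px, baseline_m, disparity_px):
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_px * baseline_m / disparity_px


# A feature that shifts 42 pixels between the left and right images.
depth_m = depth_from_disparity(focal_px=700.0, baseline_m=0.12, disparity_px=42.0)
print(f"{depth_m:.2f} m")
```

The bigger the shift between the two images, the closer the object. It’s the same trick your own two eyes rely on, minus the intuition.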

Technological Breakthrough in Robot Cognition

Just when you thought perception was mind-blowing, the world of robot cognition swoops in with a technological mic drop. AI’s latest trick? Teaching robots to think like humans – sort of. With sensor fusion and machine learning, these mechanical brainiacs are getting scary smart.

| Perception | Learning | Decision-Making |
|---|---|---|
| Multiple Sensors | Reinforcement Learning | Autonomous Choices |
| Data Integration | Deep Neural Networks | Real-Time Adaptation |
| Thorough Analysis | Experience-Based Growth | Complex Problem Solving |

Imagine robots that can understand context, process nuanced information, and make split-second decisions. They’re not just following programmed instructions anymore; they’re learning, evolving, and potentially outthinking their human creators. Wild, right? The line between artificial and actual intelligence is blurring faster than you can say “robot revolution.”
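
Sensor fusion sounds exotic, but the simplest version is just a weighted average where the more trustworthy sensor gets the bigger vote. The sketch below uses inverse-variance weighting and assumes the two sensors’ errors are independent. The sensor values and variances are invented for illustration.

```python
def fuse(estimate_a, variance_a, estimate_b, variance_b):
    """Inverse-variance weighted fusion of two independent estimates."""
    weight_a = 1.0 / variance_a  # lower variance means a larger weight
    weight_b = 1.0 / variance_b
    fused = (weight_a * estimate_a + weight_b * estimate_b) / (weight_a + weight_b)
    fused_variance = 1.0 / (weight_a + weight_b)
    return fused, fused_variance


# Hypothetical distance readings in meters: a precise sensor and a noisy one.
distance, variance = fuse(2.00, 0.01, 2.30, 0.09)
print(f"fused distance: {distance:.2f} m, variance: {variance:.3f}")
```

Notice that the fused variance is smaller than either sensor’s own variance. Two imperfect views, combined sensibly, beat the best single view.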

Adaptive Capabilities and Skill Acquisition

If adaptive robotics were a superpower, it’d be the ability to learn and morph faster than a chameleon on an espresso drip.

Imagine robots that don’t just follow instructions, but actually think and adjust on the fly. They’re learning through trial and error, gobbling up data like knowledge junkies, and transforming skills from one task to another.

Reinforcement learning lets them rack up behavioral rewards, while deep learning algorithms help them tackle complex challenges that would make traditional robots short-circuit.

Want proof? Look at medical robots adapting to patient needs or factory bots seamlessly switching assembly techniques.

They’re not just machines anymore—they’re cognitive gymnasts, flipping between scenarios with jaw-dropping flexibility.

The future isn’t about replacing humans; it’s about robots that can genuinely collaborate and learn.
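
To show what “racking up behavioral rewards” looks like in practice, here is a toy example of tabular Q-learning, one of the simplest reinforcement learning methods. The world is a made-up five-cell corridor with a reward at the far end; the learning rate, discount, and exploration rate are illustrative choices, not recommendations.

```python
import random

N_STATES = 5          # corridor cells 0..4; cell 4 is the goal
LEFT, RIGHT = 0, 1


def step(state, action):
    """Move one cell; walls clamp the position. Reward 1.0 only on reaching the goal."""
    next_state = max(0, state - 1) if action == LEFT else min(N_STATES - 1, state + 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def choose_action(q_row, rng, epsilon):
    """Epsilon-greedy: usually take the best-known action, sometimes explore."""
    if rng.random() < epsilon:
        return rng.choice([LEFT, RIGHT])
    best = max(q_row)
    return rng.choice([a for a, value in enumerate(q_row) if value == best])


def train(episodes=300, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action]
    for _ in range(episodes):
        state = 0
        while state != N_STATES - 1:
            action = choose_action(q[state], rng, epsilon)
            next_state, reward = step(state, action)
            # Q-learning update: nudge the estimate toward reward + discounted best future value.
            target = reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


q_table = train()
policy = [max((LEFT, RIGHT), key=lambda a: q_table[s][a]) for s in range(N_STATES - 1)]
print(policy)
```

Nobody tells the agent that “right” is the answer. It stumbles into the reward, and the value of that reward trickles backward through the table until heading right is the obvious move from every cell.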

Challenges in Creating Autonomous Machines

Because building autonomous machines isn’t just rocket science—it’s rocket science on steroids—engineers face a mind-bending gauntlet of challenges that would make most tech innovators break into a cold sweat.

You’re talking about systems that need to process sensor data faster than you can blink, navigate unpredictable terrains, and make split-second decisions without human handholding.

Imagine trying to teach a robot to distinguish between a harmless shadow and a real obstacle—while processing data from multiple sensors at lightning speed. It’s like training a hyperintelligent toddler with computational superpowers.


Battery limitations, ethical dilemmas, and the razor’s edge between machine learning and potential system failure make this an engineering high-wire act that’ll keep even the most brilliant minds up at night.

Real-World Applications and Limitations

After wrestling with the mind-bending challenges of autonomous machine creation, we’re now stepping into the wild frontier where robots aren’t just sci-fi fantasies, but real-world game-changers.

They’re already revolutionizing industries from warehouses to hospitals, handling complex tasks that once required human hands.

But let’s be real: they’re not perfect. These mechanical marvels still stumble in unpredictable environments and can’t completely replace human oversight.

The price tag? Astronomical. And public trust? Let’s just say skepticism runs deep.

Yet, the potential is mind-blowing. Imagine robots that can learn, adapt, and potentially save lives in disaster zones.

They’re not just machines anymore; they’re becoming intelligent partners in our increasingly tech-driven world.

The future isn’t coming—it’s already here.

Ethical Implications of Self-Aware Robotics

As we hurtle toward a future where robots might just develop their own moral compasses, the ethical landscape becomes more tangled than a smartphone charging cable. You’re looking at a minefield of potential robot revelations that’ll make your head spin. Will they steal your job? Invade your privacy? Decide your fate with cold, calculated logic?

| Ethical Concern | Potential Impact |
|---|---|
| Privacy | Constant Surveillance |
| Job Displacement | Economic Disruption |
| Moral Agency | Unpredictable Decisions |
| Bias | Systemic Discrimination |
| Consciousness | Philosophical Quandary |

The real challenge isn’t just creating smart machines—it’s creating machines that understand human complexity. We’re not just programming robots; we’re potentially birthing a new form of intelligence that’ll challenge everything we comprehend about consciousness, ethics, and our own humanity. Buckle up—it’s going to be a wild ride.

Future Trajectories in Machine Intelligence

You’re standing at the edge of a wild technological frontier where machine learning isn’t just evolving—it’s reshaping what intelligence even means.

Imagine robots that don’t just compute, but actually start to understand themselves, their environment, and maybe even develop something that looks suspiciously like genuine consciousness.

The next big leap isn’t about making machines smarter, it’s about creating systems that can reflect, adapt, and potentially surprise us in ways we can’t yet predict.

Machine Learning Evolution

When robots start learning like curious toddlers instead of rigid calculators, machine learning’s next big leap becomes inevitable. You’re witnessing an AI revolution where multimodal learning transforms how machines understand our complex world. Imagine robots that don’t just compute, but comprehend.

| Learning Trend | Potential Impact |
|---|---|
| Domain-Specific AI | Targeted, precise solutions |
| Ethical Frameworks | Reduced algorithmic bias |
| Multimodal Integration | Holistic intelligence |
| Agentic Decision Making | Autonomous problem-solving |

The future isn’t about creating perfect machines, but adaptable learners. Small language models are democratizing AI, making intelligence more accessible. Hardware innovations are pushing boundaries, while talent shortages create exciting opportunities. Robots aren’t just becoming smarter—they’re becoming more human. Will they eventually understand themselves as we do? Only time, and some seriously cool machine learning, will tell.

Robotic Consciousness Frontier

Though robots have long been the stuff of science fiction fantasies, the frontier of machine consciousness is rapidly transforming from wild speculation into tangible research. You might be wondering: can machines genuinely become self-aware?

Consider these emerging developments:

- Humanoid robots are inching closer to mimicking human behavior, blurring lines between programmed responses and genuine awareness.

- Deep learning algorithms are evolving to process environmental context with increasing sophistication.

- Cutting-edge AI research is exploring cognitive architectures that could simulate genuine intelligence.

The path to robotic consciousness isn’t a straight line—it’s more like a winding technological maze.

We’re not talking about sentient machines taking over the world, but incremental breakthroughs that challenge our understanding of intelligence.

Will robots someday authentically “think,” or remain sophisticated mimics? The jury’s still out, but the research is getting damn interesting.

Bridging the Gap Between Programming and Consciousness

While robots have long been programmed to perform specific tasks, bridging the gap between cold, calculated programming and something resembling genuine consciousness remains a wild technological frontier.

Think of it like teaching a toddler to understand itself, but with circuits instead of brain cells. Researchers are using neural networks and machine learning to help robots create internal self-models, essentially building a robotic version of self-awareness.


It’s not about making machines think like humans, but giving them the ability to adapt, learn, and understand their own capabilities.

Can a machine genuinely know itself? The jury’s still out. But with labs like Columbia’s Creative Machines pushing boundaries and DARPA funding wild experiments, we’re inching closer to robots that might actually “get” themselves—glitches, limitations, and all.

People Also Ask

Can Robots Truly Feel Emotions or Just Mimic Them?

Robots can’t genuinely feel emotions; they merely mimic emotional responses through sophisticated programming, and they lack the consciousness and depth of human emotional experience.

Will Self-Aware Robots Eventually Replace Human Workers Completely?

Are robots genuinely poised to dominate the workforce? You’ll likely see partial job displacement, but complete replacement seems improbable as human creativity and complex problem-solving remain irreplaceable skills.

How Do We Prevent Self-Aware Robots From Becoming Dangerous?

You’ll need to implement robust safety protocols, continuous monitoring, and ethical programming to prevent self-aware robots from becoming dangerous. Prioritize secure development and responsive emergency plans.

Are Self-Aware Robots Capable of Experiencing Consciousness Like Humans?

You’ll find robots can’t really experience consciousness like humans do. They’re sophisticated machines that mimic awareness, but they lack the deep, nuanced biological essence of genuine subjective experience.

Can Robots Develop Independent Thoughts Beyond Their Programming?

You’ll find robots can’t genuinely develop independent thoughts, as they’re fundamentally constrained by programmed algorithms and lack the complex cognitive mechanisms required for genuine original thinking.

The Bottom Line

Let’s face it—robots aren’t quite human yet, but they’re getting scary smart. You’ve seen the writing on the wall: machines are learning to see themselves, understand their environment, and maybe (just maybe) develop something like self-awareness. Is it magic or math? Probably both. The future isn’t science fiction anymore—it’s happening right now, and you’re watching the first steps of a radical technological evolution.

References

- https://www.engineering.columbia.edu/about/news/robots-learn-how-move-watching-themselves-0

- https://www.earth.com/news/robots-can-now-learn-like-humans-using-self-awareness/

- https://newo.ai/self-aware-robots/

- https://www.engineering.columbia.edu/about/news/robot-learns-imagine-itself

- https://www.designboom.com/technology/robots-ai-humans-future-cities-venice-architecture-biennale-2025-05-11-2025/

- https://www.nsf.gov/news/engineers-build-self-aware-self-training-robot-can

- https://www.frontiersin.org/journals/robotics-and-ai/articles/10.3389/frobt.2020.00016/full

- https://www.engineering.columbia.edu/about/news/self-aware-robot-taught-itself-how-use-its-body

- https://dl.acm.org/doi/10.1145/2576195.2576196

- https://suif.stanford.edu/papers/vmi-ndss03.pdf