Robots see in 3D using superhuman sensor tech that turns light and radio waves into mind-blowing spatial maps. LiDAR shoots thousands of laser pulses per second, creating detailed point clouds that capture environments with crazy precision. Radar slices through smoke, fog, and obstacles, using machine learning to transform raw data into crystal-clear 3D images. Want to know how robots are basically developing sci-fi vision? Stick around.

The Science Behind Light and Radio Wave Detection

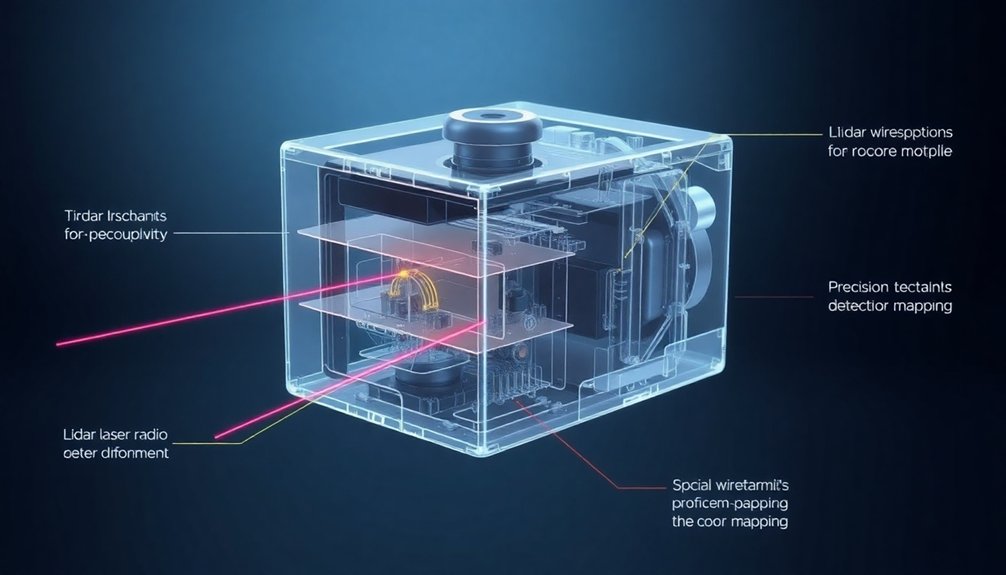

When robots want to understand their world, they don’t just look—they shoot lasers and radio waves everywhere like high-tech hunters mapping terrain. LiDAR technology lets them fire precision laser pulses that bounce off surfaces, creating 3D point clouds faster than you can blink.

Think of it like an invisible sonar that paints the environment in super-detailed digital snapshots. Radio waves do something similar, but with a twist: they slice through smoke, fog, and other obstacles that would make light-based sensors throw up their metaphorical hands.

Both methods rely on timing how long a signal takes to return: distance is the wave’s speed multiplied by the round-trip time, divided by two. The arithmetic is simple, but the timing is not, because light covers roughly 30 centimeters every nanosecond. Now imagine your robot friend doing that thousands of times per second. It’s like having a superhuman measuring tape that never gets tired.
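To make the timing idea concrete, here is a minimal sketch of the round-trip calculation. The function name `range_from_round_trip` and the 100-nanosecond example value are illustrative assumptions, not taken from any particular sensor’s software.

```python
# Time-of-flight ranging: the signal travels out and back,
# so the one-way distance is half of speed * round-trip time.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_from_round_trip(round_trip_s: float,
                          wave_speed_m_per_s: float = SPEED_OF_LIGHT_M_PER_S) -> float:
    """Return the one-way distance in metres for a measured round-trip time."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return wave_speed_m_per_s * round_trip_s / 2.0


# Hypothetical example: an echo that arrives 100 nanoseconds after the pulse left.
distance_m = range_from_round_trip(100e-9)
print(f"{distance_m:.2f} m")  # about 14.99 m
```

The same function covers laser and radio signals, since both travel at essentially the speed of light in air. The wave speed is a parameter only so the calculation stays explicit.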

How LiDAR Transforms Laser Pulses Into Spatial Maps

From bouncing radio waves to precision laser pulses, robots have graduated from basic detection to becoming spatial mapping wizards.

LiDAR fires off thousands of laser beams per second, creating a digital fingerprint of the environment. Imagine each pulse as a tiny scout, racing out and bouncing back with distance intel. When these pulses return, they transform into a point cloud—a 3D constellation of coordinates that reveals every nook and cranny of a space.
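Each returning pulse carries a distance, and the sensor knows which direction it fired in. Turning that into a point is plain trigonometry. The sketch below assumes each return arrives as a `(range, azimuth, elevation)` tuple with angles in radians, and uses an x-forward, z-up axis convention. Real sensors differ in both, so treat the names and layout as hypothetical.

```python
import math


def pulse_to_point(range_m, azimuth_rad, elevation_rad):
    """Convert one laser return (spherical coordinates) to an (x, y, z) point."""
    horizontal = range_m * math.cos(elevation_rad)  # distance projected onto the ground plane
    x = horizontal * math.cos(azimuth_rad)
    y = horizontal * math.sin(azimuth_rad)
    z = range_m * math.sin(elevation_rad)
    return (x, y, z)


def build_point_cloud(returns):
    """Convert a list of (range, azimuth, elevation) returns into a list of points."""
    return [pulse_to_point(r, az, el) for r, az, el in returns]


# Three illustrative returns: straight ahead, to the left, and straight up.
returns = [
    (10.0, 0.0, 0.0),
    (5.0, math.pi / 2, 0.0),
    (2.0, 0.0, math.pi / 2),
]
cloud = build_point_cloud(returns)
```

Run this over every pulse in a sweep and the list of points is the point cloud.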

Light-powered scouts mapping invisible landscapes, turning laser pulses into digital geography with centimeter-level precision.

It’s like giving robots superhuman vision that works in pitch black or blazing sunlight. The result? Spatial maps so precise they can help robots navigate complex terrains, dodge obstacles, and understand their surroundings with mind-blowing accuracy.

Who knew light could be such a powerful navigation tool?

Radar Technology: Seeing Through Challenging Environments

Radio waves might just be the superhero sensor robots never knew they needed. While LiDAR sensors struggle in smoke and rain, radar technology swoops in like a weather-proof detective.

Imagine a rotating antenna array scanning your environment, cutting through fog like a hot knife through butter. Machine learning algorithms transform those radio waves into detailed 3D images, revealing what other sensors can’t even glimpse.

PanoRadar takes this tech to the next level, pairing LiDAR-like image resolution with radar’s tough-as-nails performance. It’s like giving robots superhuman vision that doesn’t flinch when conditions get messy.

Comparing Sensor Technologies for Robotic Perception

You’ve probably wondered how robots actually “see” the world around them, right?

Turns out, they don’t just have one magic eye, but a Swiss Army knife of sensors that each do something totally different.

LiDAR shoots laser pulses to map environments, radar punches through fog like a champ, and cameras capture color – but when you combine these technologies, robots can perceive the world in ways that make sci-fi look like child’s play.

Sensor Technology Fundamentals

Robots need eyes, and not just any eyes—super-smart sensor systems that can map out the world faster than you can blink.

When it comes to robotic perception, different sensors play unique roles in creating 3D understanding:

- LiDAR data captures precise spatial information, shooting laser pulses that bounce back to create detailed environmental maps.

- Radar cuts through challenging conditions like fog and rain, detecting objects with radio wave magic.

- Cameras provide color and texture context, though they depend on external lighting conditions.

Machine learning algorithms continuously enhance these sensor systems, enabling robots to adapt and improve their navigation capabilities in real time.

These technologies aren’t just cool—they’re revolutionizing how machines perceive their surroundings.

By combining active sensors like LiDAR and radar, which emit their own signals instead of relying on ambient light, robots can navigate complex environments far more reliably than with cameras alone.

Think of it as giving machines superhuman perception, minus the cape.

Robotic Perception Challenges

Mapping the world isn’t child’s play, especially when robots are trying to make sense of chaotic environments. Traditional cameras choke in smoke and fog, leaving robotic perception as murky as a bad detective novel.

Enter LiDAR and radar: the dynamic duo that helps machines see through visual chaos. Sensor fusion technologies integrate multiple sensory inputs to create more robust environmental understanding.

LiDAR shoots laser beams to create precise 3D maps, while radar cuts through rain and obstacles like a hot knife through butter. But they’re not perfect. Reflective surfaces can trip up LiDAR, and radar’s resolution isn’t exactly HD.

That’s why smart roboticists are mixing sensor technologies, teaching machines to cross-reference data and build a more reliable understanding of their surroundings.

The result? Robots that can navigate complexity with increasing confidence.

Vision Beyond Limits

While cameras squint and stumble through challenging environments, cutting-edge sensor technologies are revolutionizing how machines perceive the world.

LiDAR and radar are the dynamic duo transforming robotic vision, offering superpowers that traditional cameras can only dream about:

- Penetrating Barriers: Radar slices through fog, smoke, and darkness like a technological ninja, detecting objects where cameras go blind.

- Precision Mapping: LiDAR creates razor-sharp 3D maps, measuring distances to within centimeters.

- All-Condition Champions: These sensors work tirelessly in conditions that would make human eyes weep, from pitch-black nights to dense industrial settings.

Machine Learning and AI: Interpreting Sensor Data

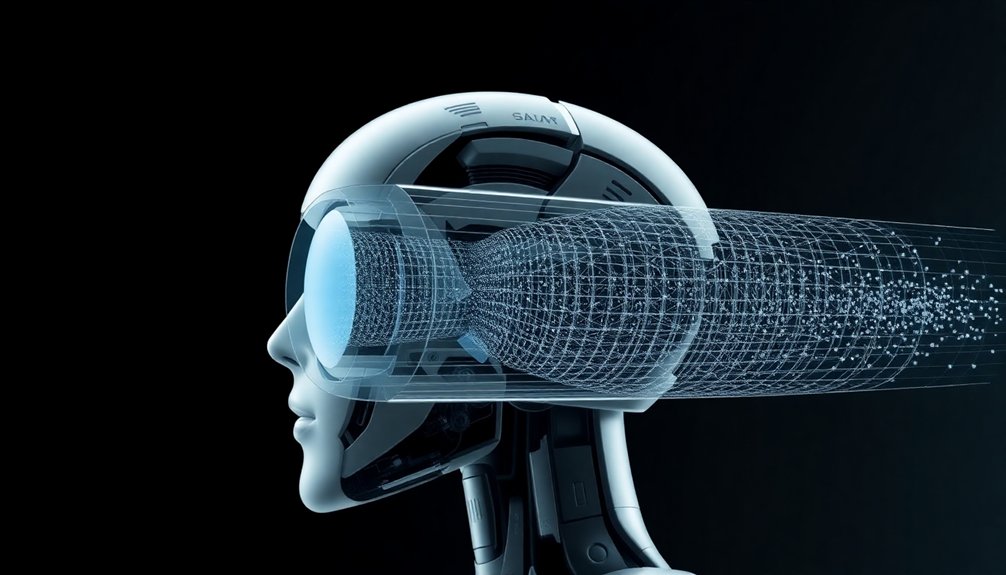

You’ve seen how robots slurp up sensor data like information smoothies, but the real magic happens when AI transforms raw signals into meaningful insights. Convolutional neural networks process these inputs layer by layer, pulling spatial structure out of the data and turning chaotic point clouds into 3D terrain that robots can actually understand and navigate.

AI Signal Processing

Because robots aren’t born with perfect vision, they need serious computational muscle to make sense of the world around them. AI signal processing transforms raw LiDAR data into meaningful insights through machine learning algorithms that act like high-tech translators of sensor information. Neuromorphic computing techniques mimic biological neural networks to process sensory information even more efficiently.

Here’s how robots upgrade their perception:

- Data Fusion: Combining multiple sensor inputs to create a thorough environmental map, reducing uncertainties and noise.

- Pattern Recognition: Using deep learning models to identify objects and classify complex 3D point clouds with superhuman precision.

- Real-Time Decision Making: Processing sensor data with low enough latency to navigate dynamic environments without human intervention.
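The data fusion step can be illustrated with one common textbook approach, inverse-variance weighting: each sensor’s reading counts in proportion to how much it is trusted. This is a sketch, not a claim about how any specific robot fuses its sensors. The function name `fuse_ranges` and the variance figures are made up for illustration.

```python
def fuse_ranges(estimates):
    """Fuse independent range estimates given as (range_m, variance_m2) pairs.

    Returns (fused_range_m, fused_variance_m2) using inverse-variance weighting.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    if any(var <= 0 for _, var in estimates):
        raise ValueError("variances must be positive")
    weights = [1.0 / var for _, var in estimates]  # more certain sensors weigh more
    total_weight = sum(weights)
    fused_range = sum(w * r for w, (r, _) in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight  # never larger than the best single sensor
    return fused_range, fused_variance


# Illustrative numbers only: a tight LiDAR reading and a noisier radar reading.
lidar_estimate = (10.00, 0.01)
radar_estimate = (10.30, 0.09)
fused_range, fused_variance = fuse_ranges([lidar_estimate, radar_estimate])
```

With these inputs the fused range lands close to the LiDAR value, and the fused variance is smaller than either sensor’s own. That is the “reducing uncertainties and noise” part in miniature.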

Imagine a robot as a hyper-intelligent detective, piecing together visual clues from LiDAR and radar to understand its surroundings.

It’s not magic — just really smart computing.

Neural Network Mapping

Neural networks aren’t just fancy computer algorithms—they’re digital detectives cracking the code of sensor data.

Imagine LiDAR as a robotic superhero’s X-ray vision, transforming raw point clouds into crisp 3D maps that machines can understand.

These neural networks aren’t just scanning; they’re learning, sorting, and predicting object behaviors with mind-blowing precision.

By using spiking neural networks, these systems can improve real-time object recognition and adaptability while reducing power consumption.
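Before a network can digest a raw point cloud, the points are often binned into a regular 3D grid of voxels, which gives the data an image-like structure. Here is a minimal sketch of that preprocessing step. The `voxelize` helper and the 0.5-meter cell size are assumptions chosen for illustration, and the network itself is out of scope.

```python
import math
from collections import Counter


def voxelize(points, voxel_size_m):
    """Count how many points fall into each cubic voxel.

    Returns a Counter mapping integer (i, j, k) voxel indices to point counts.
    """
    if voxel_size_m <= 0:
        raise ValueError("voxel size must be positive")
    counts = Counter()
    for x, y, z in points:
        # floor() keeps negative coordinates in the correct cell
        key = (math.floor(x / voxel_size_m),
               math.floor(y / voxel_size_m),
               math.floor(z / voxel_size_m))
        counts[key] += 1
    return counts


# Four illustrative points; the first two share a voxel.
points = [(0.1, 0.1, 0.1), (0.2, 0.3, 0.4), (1.2, 0.1, 0.1), (-0.1, 0.0, 0.0)]
grid = voxelize(points, 0.5)
```

Occupied cells become the input features. Empty space simply has no entry, which keeps sparse scenes cheap to store.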

Adaptive Machine Learning

While traditional sensors might fumble in dynamic environments, adaptive machine learning transforms robots into nimble, quick-thinking navigators that learn and adjust on the fly.

Here’s how these AI wizards make LiDAR and radar data dance:

- Pattern Recognition: Machine learning models can spot the difference between a moving bicycle and a stationary trash can, helping robots make split-second navigation decisions.

- Sensor Fusion: By combining data from multiple sensors, robots create a more thorough environmental picture, like assembling a complex 3D puzzle in real time.

- Continuous Learning: These algorithms don’t just process data; they evolve, constantly refining their understanding of the world around them.
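To show what the bicycle-versus-trash-can decision takes as input and produces as output, the sketch below swaps the learned model for a hand-written rule: track a cluster of points across two frames and flag it as moving if its centroid travels faster than a threshold. A real system would learn this from data. The function names, the 0.2 m/s threshold, and the sample points are all hypothetical.

```python
import math


def centroid(points):
    """Average position of a cluster of (x, y, z) points."""
    n = len(points)
    return tuple(sum(p[i] for p in points) / n for i in range(3))


def is_moving(cluster_prev, cluster_now, dt_s, speed_threshold_m_s=0.2):
    """Hand-written stand-in for a learned classifier: compare centroid speed to a threshold."""
    displacement_m = math.dist(centroid(cluster_prev), centroid(cluster_now))
    return displacement_m / dt_s > speed_threshold_m_s


# Two frames captured 0.1 s apart (illustrative coordinates in metres).
bicycle_prev = [(5.0, 0.0, 0.5), (5.2, 0.0, 0.5)]
bicycle_now = [(5.4, 0.0, 0.5), (5.6, 0.0, 0.5)]
trash_can_prev = [(2.0, 1.0, 0.3), (2.1, 1.0, 0.3)]
trash_can_now = [(2.0, 1.0, 0.3), (2.1, 1.0, 0.3)]

bicycle_moving = is_moving(bicycle_prev, bicycle_now, dt_s=0.1)
trash_can_moving = is_moving(trash_can_prev, trash_can_now, dt_s=0.1)
```

Here the bicycle’s centroid shifts 0.4 meters in a tenth of a second, so it is flagged as moving, while the trash can stays put. A fixed threshold like this is brittle, and that is the gap a model that keeps learning is meant to close.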

Imagine a robot that gets smarter with every obstacle it encounters. That’s the magic of adaptive machine learning. Neural networks, loosely inspired by how neurons connect in the brain, are central to this process and enable sophisticated real-time decision-making.

It’s not just seeing; it’s understanding.

Real-World Applications of 3D Sensing Technologies

Robots have become the Swiss Army knives of modern technology, slicing through complex sensing challenges with 3D vision that would make James Bond’s gadgets look like child’s play.

LiDAR transforms these mechanical marvels into spatial data superheroes, mapping everything from agricultural fields to warehouse labyrinths. Imagine autonomous vehicles maneuvering city streets with surgical precision, or industrial robots plucking objects with uncanny accuracy.

These 3D sensing technologies aren’t just cool—they’re revolutionizing how machines interact with our world. Urban planners now wield LiDAR like a magic wand, analyzing infrastructure with unprecedented detail.

Logistics robots zip through warehouses, dodging obstacles like digital ninjas. Agricultural sensors track crop health with the intensity of a helicopter parent.

Who needs human eyes when robots can see in three dimensions?

Overcoming Limitations in Robotic Vision Systems

Let’s face it: robots aren’t superhuman—yet. Traditional LiDAR systems stumble when things get messy, like trying to see through thick smoke or dense fog.

But what if robots could see through obstacles?

Enter radio wave technology, which cuts through environmental challenges like a hot knife through butter:

- Radio signals penetrate where light can’t, giving robots X-ray-like perception

- Machine learning algorithms transform raw radar data into crisp 3D images

- Multi-sensor fusion creates perception systems more reliable than any single technology

PanoRadar represents the next evolution in robotic vision, combining the best of visual and radio sensing technologies.

By blending different sensor types, robots can now “see” in conditions that would leave traditional cameras and LiDAR completely blind.

Who said robots can’t adapt? The future of perception is here, and it’s looking pretty smart.

Future Innovations in Depth-Sensing Robotics

As depth-sensing technologies race toward their sci-fi potential, the future of robotic perception looks less like a clunky sci-fi movie and more like a precision instrument of pure technological magic.

Imagine autonomous vehicles that don’t just see, but genuinely understand their environment through a crazy-smart combo of LiDAR and radar. These multi-modal perception systems are turning robots into navigation ninjas, capable of cutting through fog, smoke, and visual chaos like digital samurai.

AI algorithms are now transforming raw sensor data into razor-sharp 3D maps that help robots make split-second decisions. The real game-changer? These systems can adapt on the fly, learning and interpreting complex environments faster than you can say “robot revolution.”

Who knew machines could see better than humans?

People Also Ask About Robots

How Is LiDAR Used in Robotics?

In robotics, LiDAR emits laser pulses and times their reflections to measure distances. Robots use those measurements to build precise 3D maps, detect objects, and navigate complex environments in real time.

How Does a 3D LiDAR Work?

A 3D LiDAR fires laser beams across many angles, measures how long each reflection takes to return, and converts those times into distances. Depending on the sensor, that adds up to anywhere from thousands to millions of measurements per second, which together form a detailed point cloud of the environment.

What Is the Use of LiDAR and Radar?

You’ll use LiDAR for precise 3D mapping and navigation, while radar helps detect objects through challenging conditions like fog or smoke. Together they provide complementary sensing for robust robotic perception.

What Is the Difference Between LiDAR and 3D LiDAR?

A 2D LiDAR sweeps its beam through a single plane and returns a flat slice of distances, which is enough for basic obstacle avoidance. A 3D LiDAR also scans across multiple elevation angles, building point clouds that cover the full volume around the robot and capture depth and shape in far more detail.

Why This Matters in Robotics

You’re standing at the edge of a robotic revolution where sensors are like superhero eyes, transforming raw data into intelligent perception. These technologies aren’t just cool gadgets; they’re reshaping how machines understand our complex world. From self-driving cars to surgical robots, LiDAR and radar are breaking through environmental barriers, turning impossible challenges into navigable landscapes. The future isn’t just coming; it’s already scanning the horizon.