Robots navigate like super-smart treasure hunters, using GPS signals, laser scanners (LiDAR), and clever algorithms to build digital maps of their world. They’re not wandering blindly: they stitch together data from satellites, cameras, and motion sensors to work out where they are. Think of it like having a real-time GPS and autopilot system that constantly updates, helping robots dodge obstacles and explore spaces humans can’t reach. Curious about their navigation tricks?

The Global Positioning System: Robots’ Satellite Navigation

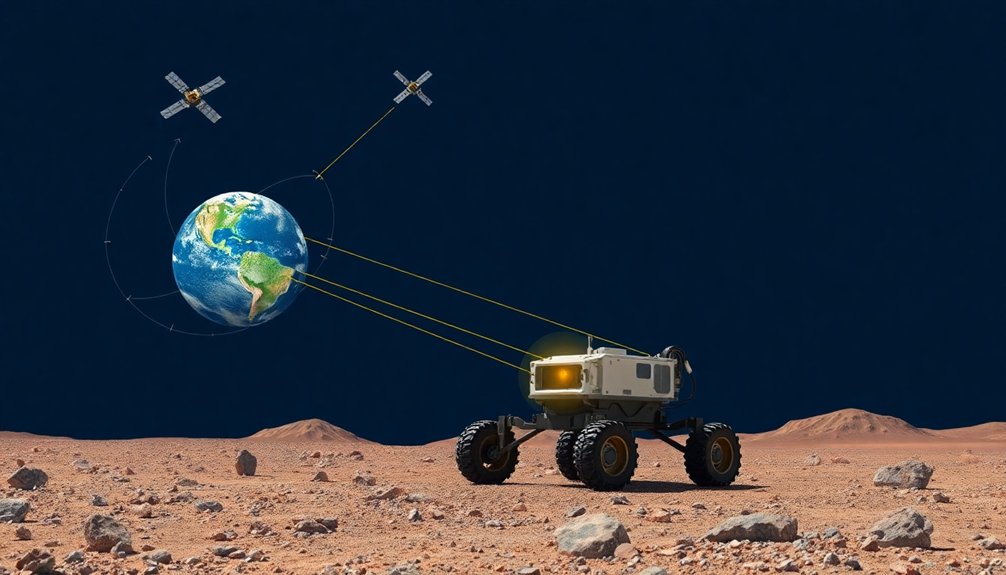

When it comes to helping robots navigate our wild, complex world, the Global Positioning System (GPS) is like that reliable but slightly outdated smartphone GPS app you’ve got. It works great outdoors. A constellation of at least 24 satellites broadcasts timing signals, and a receiver uses those signals to measure its distance to several satellites and calculate its position.
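GPS receivers work out their position from ranges to satellites whose positions are known. Real receivers solve the problem in three dimensions and must also estimate their own clock error, which is why they need at least four satellites. The sketch below drops both complications. It is a hypothetical 2D case with three anchors at known positions and exact, noise-free ranges. Subtracting one circle equation from the others turns the problem into a small linear system.

```python
import math


def trilaterate_2d(anchors, ranges):
    """Estimate (x, y) from three known anchor positions and measured ranges.

    Simplified illustration: 2D, noise-free ranges, no receiver clock bias.
    """
    (x0, y0), (x1, y1), (x2, y2) = anchors
    r0, r1, r2 = ranges
    # Subtracting the first circle equation (x - xi)^2 + (y - yi)^2 = ri^2
    # from the others cancels x^2 and y^2, leaving a*x + b*y = c.
    a1, b1 = 2 * (x1 - x0), 2 * (y1 - y0)
    c1 = r0**2 - r1**2 + x1**2 - x0**2 + y1**2 - y0**2
    a2, b2 = 2 * (x2 - x0), 2 * (y2 - y0)
    c2 = r0**2 - r2**2 + x2**2 - x0**2 + y2**2 - y0**2
    det = a1 * b2 - a2 * b1
    if abs(det) < 1e-12:
        raise ValueError("anchors are collinear; the position is ambiguous")
    # Cramer's rule for the 2x2 system.
    return (c1 * b2 - c2 * b1) / det, (a1 * c2 - a2 * c1) / det


anchors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
ranges = [math.dist((3.0, 4.0), a) for a in anchors]  # simulate a receiver at (3, 4)
print(trilaterate_2d(anchors, ranges))  # approximately (3.0, 4.0)
```

With noisy ranges and more than the minimum number of anchors, the same linear equations would normally be solved in a least-squares sense instead.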

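But here’s the catch: GPS isn’t superhero-level accurate. Standard positioning is typically accurate only to within roughly one to twenty meters, depending on conditions. That is far too coarse for robot localization in tight spaces, and indoors the satellite signals often don’t reach the receiver at all.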

Want pinpoint navigation? You’ll need backup. Real-Time Kinematic (RTK) positioning can boost accuracy to the centimeter level. The catch is extra infrastructure: a nearby base station or correction network has to stream corrections to the robot.

Smart robots combine GPS with other sensors such as inertial measurement units (IMUs) and wheel odometry. They use estimators like the Extended Kalman Filter (EKF) to patch each sensor’s weaknesses and navigate more precisely.
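At its core, a Kalman filter alternates two steps:

- **Predict:** move the estimate using odometry, letting its uncertainty grow.
- **Update:** pull the estimate toward a measurement, in proportion to how much that measurement is trusted.

The EKF runs the same loop on nonlinear motion and sensor models by linearizing them. Below is a minimal sketch of the simpler linear, one-dimensional case. It assumes a robot driving along a straight path, with odometry reporting displacements and GPS reporting absolute positions. The class name `Kalman1D` and the noise variances `q` and `r` are illustrative, not values from any real system.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Linear Kalman filter for a single position coordinate (illustrative)."""

    x: float  # position estimate (m)
    p: float  # variance of that estimate (m^2)

    def predict(self, odom_delta: float, q: float) -> None:
        """Dead-reckon with an odometry displacement; uncertainty grows by q."""
        self.x += odom_delta
        self.p += q

    def update(self, gps_pos: float, r: float) -> None:
        """Fuse a GPS position fix whose variance is r."""
        k = self.p / (self.p + r)         # Kalman gain: 0 = ignore fix, 1 = trust it fully
        self.x += k * (gps_pos - self.x)  # move toward the fix by the gain
        self.p *= 1.0 - k                 # the fused estimate is more certain


kf = Kalman1D(x=0.0, p=1.0)
kf.predict(odom_delta=1.0, q=0.1)  # wheels report 1 m of travel
kf.update(gps_pos=1.5, r=4.0)      # GPS says 1.5 m, with about 2 m standard deviation
print(round(kf.x, 3), round(kf.p, 3))  # prints 1.108 0.863
```

Because the GPS fix is noisy compared with the odometry-based prediction, the filter moves only about a fifth of the way toward it.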

Mapping the Environment: Creating Digital Blueprints

Since robots can’t exactly pull out a street map and ask for directions, they’ve got to get smart about understanding their environment. Mapping becomes their superpower, turning spaces into digital blueprints they can actually comprehend.

Through Simultaneous Localization and Mapping (SLAM), robots build a map of an unknown space while tracking their own position within it, constantly updating their internal “world view” as they move. Think of it like a robot drawing its own treasure map, except the treasure is knowing exactly where it’s going.

SLAM turns robots into cartographers of their own digital universe, charting unknown territories one millisecond at a time.

LiDAR and computer vision are the robot’s eyes, scanning rooms and detecting obstacles with crazy precision. They’re not just seeing—they’re remembering.

Each sweep builds a more accurate representation of the environment, allowing autonomous machines to dodge chairs, navigate hallways, and plot the most efficient paths without bumping into stuff. Pretty slick, right?
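One common way to turn repeated sweeps into a map is an occupancy grid. The floor is divided into cells. Each LiDAR beam lowers the "occupied" evidence for the cells it passes through and raises it for the cell where it hits something.

The sketch below makes a few simplifying choices:

- Evidence is stored as log-odds in a dictionary keyed by integer (x, y) cell coordinates.
- Beams are traced with Bresenham's line algorithm.
- The increments `L_OCC` and `L_FREE` are assumed values, not calibrated for any real sensor.

```python
import math

L_OCC = 0.85   # log-odds added where a beam ends (assumed sensor model)
L_FREE = -0.4  # log-odds added where a beam passes through (assumed)


def bresenham(x0, y0, x1, y1):
    """Integer grid cells on the line from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            return cells
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy


def integrate_beam(grid, robot_cell, hit_cell):
    """Update a dict-based log-odds grid with one LiDAR beam."""
    ray = bresenham(*robot_cell, *hit_cell)
    for cell in ray[:-1]:  # cells the beam travelled through look free
        grid[cell] = grid.get(cell, 0.0) + L_FREE
    grid[hit_cell] = grid.get(hit_cell, 0.0) + L_OCC  # the beam stopped here


def occupancy_probability(grid, cell):
    """Convert log-odds back to a probability (0.5 means unknown)."""
    return 1.0 - 1.0 / (1.0 + math.exp(grid.get(cell, 0.0)))


grid = {}
for _ in range(3):  # three sweeps all see a wall four cells ahead
    integrate_beam(grid, (0, 0), (4, 0))
print(occupancy_probability(grid, (4, 0)))  # wall cell: high probability
print(occupancy_probability(grid, (2, 0)))  # open floor: low probability
```

Working in log-odds turns each Bayesian update into a simple addition, which is why repeated sweeps steadily sharpen the map.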

Machine learning can help robots adapt and refine their navigation over time, for example by learning to recognize objects and places, making their spatial understanding richer and more dynamic.

Sensor Fusion: Combining Data for Precise Localization

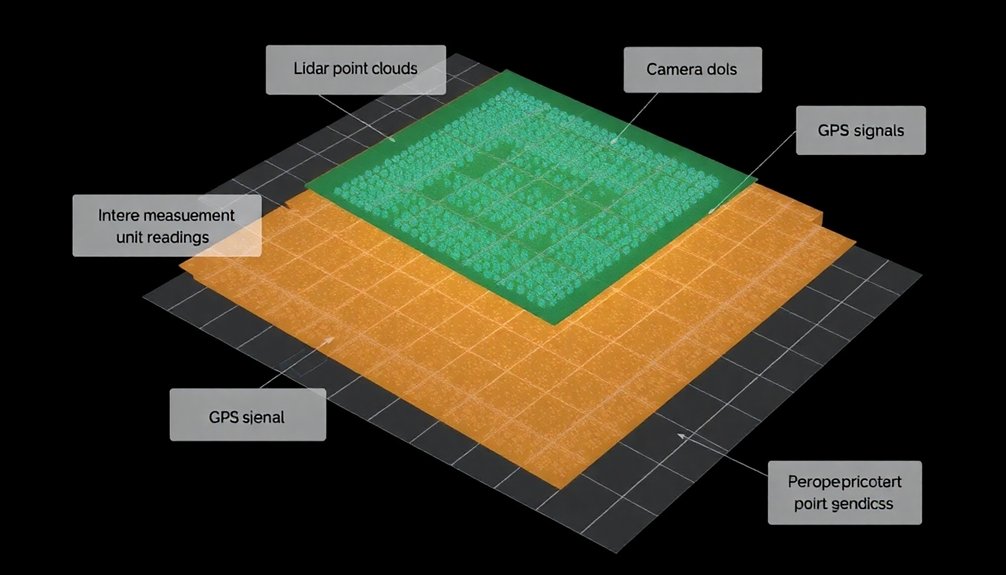

Because robots aren’t superhuman (yet), they need a clever way to understand exactly where they are and what’s around them. Enter sensor fusion: the tech that turns robots into location-savvy navigators. By combining data from GPS, wheel encoders, and LiDAR, mobile robots can pinpoint their position with crazy accuracy.

Think of it like having multiple backup dancers helping a performer nail their choreography—each sensor adds its own unique information to create a near-perfect performance.
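The backup-dancer idea has a precise, simple form. Suppose several sensors give independent estimates of the same quantity, and you know how noisy each one is (its variance). You can then weight each estimate by the inverse of its variance. The minimal sketch below uses hypothetical readings and noise levels.

```python
def fuse_estimates(estimates):
    """Fuse independent (value, variance) pairs by inverse-variance weighting.

    Returns the fused value and its variance, which is never larger than the
    smallest input variance.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / var for _, var in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total


# Hypothetical x-position readings: (meters, variance in m^2)
readings = [
    (12.0, 9.0),   # GPS: coarse
    (10.4, 0.25),  # wheel odometry dead-reckoned from the last known position
    (10.6, 0.04),  # LiDAR scan matched against a map
]
x, var = fuse_estimates(readings)
print(f"fused x = {x:.3f} m, variance = {var:.4f} m^2")
```

The result lands between the odometry and LiDAR values, closest to the precise LiDAR reading, and its variance is smaller than any single sensor's. The scalar Kalman filter update applies this same weighting, while also tracking how the estimate evolves between measurements.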

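The Extended Kalman Filter (EKF) is a popular secret sauce for processing these complex data streams. It predicts the robot’s motion from odometry and inertial data, then corrects that prediction whenever a new measurement, such as a GPS fix, arrives. That makes it especially handy when GPS signals drop out. The filter keeps estimating from the remaining sensors, and its uncertainty grows until the next fix arrives.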
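Here is a minimal EKF sketch for a wheeled robot on a flat floor. It rests on several assumptions:

- **Motion model:** a unicycle model driven by odometry, with forward speed `v` and turn rate `omega`.
- **GPS:** fixes that measure only x and y.
- **Illustrative choices:** the class name `PlanarEKF`, the noise values `q` and `r`, and the diagonal process-noise model are not tuned values.

When no fix arrives, only `predict()` runs, so the covariance `P` keeps growing. In other words, the filter tracks that it is becoming less sure.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


class PlanarEKF:
    """EKF over the state [x, y, heading] (hypothetical minimal example)."""

    def __init__(self, x, y, heading, p0=0.1):
        self.s = [x, y, heading]
        self.P = [[p0 if i == j else 0.0 for j in range(3)] for i in range(3)]

    def predict(self, v, omega, dt, q=0.01):
        """Propagate the state with odometry; uncertainty grows."""
        x, y, th = self.s
        self.s = [x + v * dt * math.cos(th), y + v * dt * math.sin(th), th + omega * dt]
        # Jacobian of the nonlinear motion model, evaluated at the old heading.
        F = [[1.0, 0.0, -v * dt * math.sin(th)],
             [0.0, 1.0, v * dt * math.cos(th)],
             [0.0, 0.0, 1.0]]
        self.P = matmul(matmul(F, self.P), transpose(F))
        for i in range(3):
            self.P[i][i] += q  # assumed diagonal process noise

    def update_gps(self, gx, gy, r=4.0):
        """Correct the state with a GPS fix (gx, gy) of variance r in m^2."""
        P = self.P
        # Innovation covariance S = H P H^T + R, where H picks out x and y.
        s00, s01 = P[0][0] + r, P[0][1]
        s10, s11 = P[1][0], P[1][1] + r
        det = s00 * s11 - s01 * s10
        s_inv = [[s11 / det, -s01 / det], [-s10 / det, s00 / det]]
        # Kalman gain K = P H^T S^-1; P H^T is the first two columns of P.
        K = matmul([[P[i][0], P[i][1]] for i in range(3)], s_inv)
        innov = [gx - self.s[0], gy - self.s[1]]
        self.s = [self.s[i] + K[i][0] * innov[0] + K[i][1] * innov[1] for i in range(3)]
        # Covariance update P = (I - K H) P.
        i_kh = [[(1.0 if i == j else 0.0) - (K[i][j] if j < 2 else 0.0) for j in range(3)]
                for i in range(3)]
        self.P = matmul(i_kh, P)


ekf = PlanarEKF(0.0, 0.0, 0.0)
for step in range(20):
    ekf.predict(v=1.0, omega=0.0, dt=0.1)
    if step < 10:  # GPS available for the first second, then it drops out
        ekf.update_gps(0.1 * (step + 1), 0.0)
    print(step, round(ekf.P[0][0] + ekf.P[1][1], 3))  # position uncertainty
```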

Localization using multiple sensors means robots can confidently explore everything from warehouse floors to unpredictable outdoor terrain.

Indoor Navigation Challenges and Solutions

When you’re a robot trying to navigate indoors, you’ve got serious problems: GPS signals vanish faster than free donuts in an office break room, leaving you blind and confused.

Sensor fusion becomes your superhero strategy, combining LiDAR, computer vision, and inertial data to help you map out complex spaces that would make a human navigator throw up their hands in defeat.

You’ll need clever tricks to understand unmapped hallways and hidden obstacles, turning indoor navigation from a nightmare into a precise dance of technological problem-solving.

GPS Signals Blocked

Once robots roam indoors, GPS signals often go dark, like trying to use Google Maps in a basement.

Without satellite guidance, robots become blind navigators in a complex indoor maze. LIDAR swoops in as the hero, shooting laser beams to create precise indoor positioning maps that help machines understand their surroundings.
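Turning a 2D LiDAR sweep into map points is mostly trigonometry. Each beam has a known angle alpha relative to the robot. A range reading r from a robot at (x, y) with heading theta therefore lands at (x + r cos(theta + alpha), y + r sin(theta + alpha)).

The sketch below assumes:

- Beams are evenly spaced.
- The robot's pose is already known, for example from a localization filter.
- `max_range` is a hypothetical cutoff for discarding useless returns.

```python
import math


def scan_to_points(pose, ranges, angle_min, angle_step, max_range=30.0):
    """Project a planar LiDAR scan into world-frame (x, y) points.

    pose: robot (x, y, heading) in the map frame, heading in radians.
    ranges: distances in meters, one per beam, starting at angle_min.
    Returns that are non-finite, non-positive, or beyond max_range are skipped.
    """
    x, y, heading = pose
    points = []
    for i, r in enumerate(ranges):
        if not math.isfinite(r) or r <= 0.0 or r > max_range:
            continue
        beam = heading + angle_min + i * angle_step  # beam direction in the map frame
        points.append((x + r * math.cos(beam), y + r * math.sin(beam)))
    return points


# Robot at (1, 2) facing +y; three beams 90 degrees apart; the middle one saw nothing.
print(scan_to_points((1.0, 2.0, math.pi / 2), [1.0, float("inf"), 2.0], 0.0, math.pi / 2))
```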

Other navigation systems like computer vision and inertial tracking pick up the slack, helping robots dodge chairs, climb stairs, and weave through hallways without crashing.
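Inertial tracking has a catch that is easiest to see in numbers. Position comes from integrating acceleration twice. Even a tiny constant accelerometer bias b therefore becomes a position error of roughly 0.5 * b * t^2, growing with the square of elapsed time. The sketch below assumes a stationary robot, a single accelerometer axis, 100 Hz sampling, and a bias of 0.01 m/s^2 (an illustrative value).

```python
def dead_reckon_1d(accels, dt):
    """Integrate accelerometer samples twice (semi-implicit Euler) into positions."""
    velocity, position = 0.0, 0.0
    track = []
    for a in accels:
        velocity += a * dt         # acceleration -> velocity
        position += velocity * dt  # velocity -> position
        track.append(position)
    return track


# A robot standing still whose accelerometer reports a small constant bias.
BIAS = 0.01  # m/s^2 (assumed)
DT = 0.01    # 100 Hz sampling (assumed)
for seconds in (1, 10, 60):
    drift = dead_reckon_1d([BIAS] * round(seconds / DT), DT)[-1]
    print(f"after {seconds} s: {drift:.3f} m of phantom motion")
```

With these numbers, the motionless robot "moves" about 0.5 m in 10 seconds and about 18 m in a minute. That is why inertial data is fused with LiDAR or camera fixes rather than trusted on its own.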

Think it’s easy? Imagine a robot trying to read an old, crumpled blueprint while simultaneously tracking its location and avoiding obstacles.

These smart machines must constantly adapt, interpreting architectural challenges with split-second decisions. Indoor navigation isn’t just about moving—it’s about understanding space in real-time.

Machine learning algorithms continuously refine robots’ ability to process sensor information and improve their environmental perception and navigation accuracy.

Sensor Fusion Strategies

If indoor navigation were like a chess match, sensor fusion would be the grandmaster strategy that transforms robots from bumbling rookies to precision navigators. By combining data from GPS, IMUs, wheel odometry, and other sensors, robots can estimate their position far more reliably than any single sensor would allow.

Think of it like having multiple teammates constantly whispering location hints in your ear.

When GPS signals vanish indoors, these sensor fusion strategies don’t miss a beat. LiDAR scans create detailed maps, cameras track visual landmarks, and inertial systems maintain momentum estimates.

It’s like giving robots a superhuman sense of spatial awareness. The result? Robots that can navigate complex indoor environments with surgical precision, dodging obstacles and finding their way without breaking a sweat—or a circuit.
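The complementary filter is a classic, lightweight fusion strategy for inertial data. The two sensors fail in opposite ways:

- **Gyroscope:** gives smooth short-term rotation estimates but drifts when integrated.
- **Accelerometer:** gives a noisy but drift-free tilt angle from the direction of gravity.

Blending the two with a weight alpha keeps the best of both. The sketch below tracks a single tilt (pitch) angle. It assumes the accelerometer senses only gravity (no other acceleration) and uses an illustrative alpha of 0.98.

```python
import math


def complementary_filter(gyro_rates, accels, dt, alpha=0.98, angle0=0.0):
    """Estimate pitch (rad) by blending integrated gyro rate with accelerometer tilt.

    gyro_rates: angular rate samples (rad/s); accels: (ax, az) samples (m/s^2).
    An alpha close to 1 trusts the gyro over short horizons, while the small
    (1 - alpha) share of accelerometer tilt slowly pulls out gyro drift.
    """
    angle = angle0
    history = []
    for rate, (ax, az) in zip(gyro_rates, accels):
        accel_angle = math.atan2(ax, az)  # tilt implied by the gravity direction
        angle = alpha * (angle + rate * dt) + (1.0 - alpha) * accel_angle
        history.append(angle)
    return history


# Stationary, level IMU sampled at 100 Hz whose gyro has a 0.01 rad/s bias.
n, dt = 1000, 0.01
estimates = complementary_filter([0.01] * n, [(0.0, 9.81)] * n, dt)
print(estimates[-1])
```

Integrating the biased gyro alone would leave the estimate 0.1 rad off after these 10 seconds. The filtered estimate instead settles at about 0.005 rad.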

Mapping Complex Spaces

Maneuvering through indoor spaces isn’t just about avoiding walls; it’s a robotic puzzle that would make even the most seasoned GPS system throw up its digital hands in defeat.

Robots face a wild challenge when mapping complex indoor environments where traditional navigation breaks down. Their secret weapons? A mix of cutting-edge technologies that transform seemingly chaotic spaces into navigable landscapes.

- LiDAR scans create digital blueprints where none existed before

- Inertial navigation systems track the robot’s motion between other sensor updates, though their estimates drift over time

- Computer vision algorithms interpret spatial relationships in real-time

- Multi-sensor fusion compensates for individual technology limitations

Indoor navigation demands more than just avoiding obstacles—it’s about understanding context, predicting movement patterns, and creating intelligent mapping strategies that turn blind spaces into readable digital terrain.

Robots aren’t just moving; they’re decoding architectural mysteries one sensor sweep at a time. Depth estimation techniques allow robots to build dynamic, real-time mental maps of complex indoor environments, transforming raw sensor data into intelligent spatial understanding.
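Stereo vision is one common depth estimation technique. Two rectified cameras a known baseline B apart see the same point shifted by a disparity of d pixels. Depth then follows from similar triangles as Z = f * B / d, where f is the focal length in pixels. Differentiating that formula shows why far objects are harder: a one-pixel disparity error costs roughly Z^2 / (f * B) meters. The camera numbers below are hypothetical.

```python
def stereo_depth(disparity_px, focal_px, baseline_m):
    """Depth (m) of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means infinitely far)")
    return focal_px * baseline_m / disparity_px


def depth_error(depth_m, focal_px, baseline_m, disparity_err_px=1.0):
    """First-order depth uncertainty from a disparity error: dZ ~ Z^2 * dd / (f * B)."""
    return depth_m ** 2 * disparity_err_px / (focal_px * baseline_m)


f, B = 700.0, 0.12  # hypothetical focal length (px) and baseline (m)
z = stereo_depth(42.0, f, B)
print(z, depth_error(z, f, B), depth_error(10.0, f, B))
```

With these numbers, a point 2 m away is located to within about 5 cm per pixel of disparity error. At 10 m, the same pixel costs over a meter.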

Simultaneous Localization and Mapping (SLAM) Techniques

You’re probably wondering how robots figure out where they’re going without crashing into everything like a drunk bumper car.

SLAM techniques let robots simultaneously map unknown spaces and track their exact position by gobbling up sensor data from cameras, LIDAR, and motion sensors faster than you can say “technological wizardry”.

Think of it like giving a robot a super-smart internal GPS that constantly updates its understanding of the world around it, turning potential navigational chaos into a precise, real-time positioning dance.
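Many SLAM systems are graph-based. Each robot pose is a node, and each odometry step or loop closure (recognizing a previously visited place) is a constraint between two nodes. The map is then "solved" by finding the poses that best satisfy all constraints in a least-squares sense.

Below is a toy 1D version, assuming linear constraints with known weights (inverse variances). The function names and numbers are hypothetical. Real systems optimize 2D or 3D poses with iterative nonlinear solvers.

```python
def solve_linear(a, b):
    """Solve a x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x


def optimize_pose_graph_1d(num_poses, constraints, prior_weight=1e6):
    """Least-squares 1D pose graph.

    constraints: (i, j, measured x_j - x_i, weight) tuples, e.g. odometry
    steps and loop closures. Pose 0 is pinned near 0 by a strong prior.
    """
    H = [[0.0] * num_poses for _ in range(num_poses)]
    rhs = [0.0] * num_poses
    H[0][0] += prior_weight  # gauge fix: otherwise the whole graph could slide
    for i, j, z, w in constraints:
        # Residual x_j - x_i - z has Jacobian -1 at i and +1 at j;
        # accumulate the normal equations J^T W J x = J^T W z.
        H[i][i] += w
        H[j][j] += w
        H[i][j] -= w
        H[j][i] -= w
        rhs[i] -= w * z
        rhs[j] += w * z
    return solve_linear(H, rhs)


odometry = [(0, 1, 1.1, 1.0), (1, 2, 1.1, 1.0), (2, 3, 1.1, 1.0)]  # steps read slightly long
loop_closure = [(0, 3, 3.0, 100.0)]  # confident re-observation relative to the start
poses = optimize_pose_graph_1d(4, odometry + loop_closure)
print(poses)
```

The confident loop closure pulls the last pose to about 3.0 m. The optimizer also spreads the correction evenly across the three odometry steps instead of dumping it all on the final one.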

Sensor Data Fusion

Since robots can’t exactly ask for directions, they’ve had to get creative about maneuvering through unknown spaces. Sensor data fusion is their secret sauce in SLAM, allowing them to navigate indoor environments like seasoned explorers. By blending inputs from multiple sensors, robots create a thorough understanding of their surroundings.

Key strategies for sensor data fusion include:

- Combining LIDAR, camera, and IMU data for precise location tracking

- Using Extended Kalman Filters to estimate robot position

- Integrating visual and inertial information for enhanced navigation

- Enabling collaborative mapping between multiple robotic systems
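Collaborative mapping can be as simple as pooling evidence, provided the hard part is already done: both robots' occupancy grids must be expressed in the same coordinate frame. This sketch assumes that alignment has been solved. It also treats the two robots' observations as independent, with an unknown (0.5 probability) prior. Under those assumptions, merging log-odds grids is a per-cell sum. The dictionary grids here are hypothetical.

```python
import math


def merge_log_odds(grid_a, grid_b):
    """Merge two aligned log-odds occupancy grids by summing per-cell evidence.

    Both grids map (x, y) cells -> log-odds and are assumed to share one frame;
    cells missing from a grid count as unknown (log-odds 0).
    """
    merged = dict(grid_a)
    for cell, value in grid_b.items():
        merged[cell] = merged.get(cell, 0.0) + value
    return merged


def to_probability(log_odds):
    """Convert log-odds to an occupancy probability."""
    return 1.0 / (1.0 + math.exp(-log_odds))


robot_a = {(0, 0): 1.0, (1, 0): -0.5}
robot_b = {(1, 0): -0.5, (2, 0): 2.0}
shared = merge_log_odds(robot_a, robot_b)
print({cell: round(to_probability(v), 2) for cell, v in shared.items()})
```

Cells that both robots saw as free, such as (1, 0), become more confidently free than either robot could establish alone.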

This technological magic transforms raw sensor data into a coherent map, letting robots understand their environment with remarkable accuracy.

Who knew machines could be such clever navigators, piecing together spatial puzzles without ever stopping to ask a human for help?

Mapping Unknown Spaces

While robots might not have an innate sense of direction, Simultaneous Localization and Mapping (SLAM) is their GPS-like superpower for exploring uncharted territories.

Think of SLAM as a robot’s internal compass and cartographer, helping machines create real-time maps while simultaneously tracking their precise location. In indoor navigation scenarios where satellite signals are useless, SLAM becomes the robot’s brain, blending data from cameras, LIDAR, and motion sensors to understand complex environments.

Machine learning has turbocharged these mapping techniques, allowing robots to not just map spaces, but comprehend them semantically.

Is a chair an obstacle or a potential resting spot? SLAM algorithms can now make those nuanced distinctions, transforming robots from blind wanderers into intelligent explorers that can adapt and navigate with unprecedented precision.

Real-Time Robot Positioning

Whenever robots venture into unknown territories, they’re basically playing an epic real-time game of “Where Am I?” and “What’s Around Me?” That’s where Simultaneous Localization and Mapping (SLAM) swoops in like a digital superhero.

This nifty technology helps mobile robots nail their navigation by tracking position and orientation with crazy precision. SLAM isn’t just mapping: it’s like giving robots a supercharged internal GPS that adapts on the fly.

- Combines sensor data from LIDAR and cameras

- Handles complex, non-linear movements

- Adjusts for environmental changes in real-time

- Provides reliable motion estimation

Think of SLAM as a robot’s brain constantly solving a complex puzzle, figuring out its exact location while simultaneously creating a dynamic map of its surroundings. Pretty wild, right?

Advanced Sensors: LiDAR, Cameras, and Inertial Measurement Units

Because robots aren’t magical beings that navigate by wishful thinking, they rely on a trio of superhero sensors to make sense of the world around them:

- **LiDAR** shoots laser beams like a precision mapping wizard, creating high-resolution environmental snapshots.
- **Cameras**, powered by computer vision algorithms, transform visual chaos into meaningful scenes.
- **Inertial Measurement Units (IMUs)** track the robot’s own movement by measuring acceleration and rotation. Unlike GPS, they give no absolute position, and their estimates drift over time.

| Sensor / Technique | Primary Function | Cool Factor |
|---|---|---|
| LiDAR | Distance Mapping | 🚀 High |
| Cameras | Visual Interpretation | 🔍 Medium |
| IMUs | Movement Tracking | 🌐 High |
| Sensor Fusion | Data Integration | 🧠 Very High |
| Computer Vision | Object Recognition | 👀 High |

The Future of Robotic Navigation and Positioning Technologies

As robotic navigation evolves from clunky trial-and-error to sci-fi-level precision, the future looks less like a random walk and more like a strategic dance. Closed-loop feedback systems let robots continuously measure and correct errors in their position and trajectory, turning navigation from open-loop guesswork into constant self-correction.
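The most common form of closed-loop feedback is a PID controller. It measures the error between where the robot should be pointing and where it is pointing. It then commands a correction built from three terms:

- **P:** the error itself.
- **I:** the error's accumulated history.
- **D:** the error's rate of change.

The sketch below steers a toy robot's heading toward 90 degrees. An assumed constant drift (say, from uneven wheels) pushes it off course. The gains and the drift value are illustrative, not tuned for real hardware.

```python
import math


class PID:
    """Textbook PID controller (illustrative gains, not tuned for any real robot)."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, error, dt):
        """Return a control command computed from the current error."""
        self.integral += error * dt
        if self.prev_error is None:
            derivative = 0.0
        else:
            derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def wrap_angle(a):
    """Wrap an angle to [-pi, pi) so the robot always turns the short way."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def simulate_heading(pid, target, drift=-0.3, dt=0.05, steps=400):
    """Toy robot: turn rate = command + constant drift. Returns the final error (rad)."""
    heading = 0.0
    for _ in range(steps):
        command = pid.step(wrap_angle(target - heading), dt)
        heading += (command + drift) * dt
    return wrap_angle(target - heading)


target = math.radians(90)
print(simulate_heading(PID(2.0, 1.0, 0.05), target))  # integral term cancels the drift
print(simulate_heading(PID(2.0, 0.0, 0.0), target))   # proportional-only settles short
```

The integral term is what cancels the constant drift. The proportional-only controller settles about 0.15 rad short of the target, where its correction exactly balances the drift.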

Mobile robots are getting smarter, turning indoor spaces into playgrounds of possibility through cutting-edge artificial intelligence. You’ll see navigation technologies that make today’s robots look like bumbling toddlers.

Robotic intelligence transforms indoor spaces into smart, dynamic landscapes of technological wonder.

Key breakthroughs include:

- Visual-Inertial Odometry merging camera and IMU data for highly accurate motion tracking

- Semantic SLAM transforming robots from blind wanderers to context-aware explorers

- Machine learning algorithms that help robots learn new environments quickly

- Collaborative mapping letting robots share intel like high-tech secret agents

These innovations mean robots won’t just move—they’ll understand.

They’ll read spaces like a book, predicting obstacles and charting ideal paths with uncanny intelligence.

People Also Ask About Robots

How Does the Robot Know Where It Is?

A robot determines its location by combining sensor data from sources like GPS, LiDAR, and computer vision. When GPS fails indoors, it relies on techniques like SLAM and Kalman filters to keep tracking its position as it moves.

How Does Robot Mapping Work?

A mapping robot works like a cartographer with mechanical limbs, piecing together environmental puzzles. It uses sensors and algorithms to build a spatial representation of its surroundings, tracking its own position while simultaneously updating that map in real time.

What Is the Algorithm for Robot Navigation Search?

Robots commonly use path-planning algorithms like A* to search for the shortest or lowest-cost route through a map. Meanwhile, sensor fusion of GPS, IMU, and LiDAR data keeps the position estimate current, so the route can be recalculated around obstacles in real time.
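A* explores a map by always expanding the cell with the lowest f = g + h. Here g is the cost travelled so far, and h is a heuristic estimate of the remaining cost. Below is a compact sketch on a hypothetical 4-connected warehouse grid (0 = free, 1 = shelf), using Manhattan distance as the heuristic.

```python
import heapq


def astar(grid, start, goal):
    """A* on a 4-connected occupancy grid; grid[r][c] == 1 marks an obstacle.

    Returns the path as a list of (row, col) cells, or None if unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: never overestimates unit-cost 4-way moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f, g, cell)
    came_from = {start: None}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:  # walk the parent links back to the start
            path = []
            while cell is not None:
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if g > g_cost[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None


warehouse = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],  # a row of shelves with a gap on the right
    [0, 0, 0, 0],
]
print(astar(warehouse, (0, 0), (2, 0)))  # routes around the shelves via column 3
```

Manhattan distance never overestimates the remaining cost of unit 4-way moves, so the first time the goal leaves the queue, its path is a shortest one.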

How Do the Robots Get Directions for Navigating Around the Warehouse?

Warehouse robots typically rely on pre-mapped digital layouts and sensor fusion. LiDAR and inertial sensors let them track their paths, detect obstacles, and adjust their routes in real time through the warehouse’s complex environment. GPS, which struggles under a roof, plays little part indoors.

Why This Matters in Robotics

Robots are evolving from clunky GPS followers to spatial ninjas that can navigate anywhere. Like Jason Bourne mapping escape routes, they’re learning to read environments in real-time. You’ll soon see machines that understand space as intuitively as you do, weaving through complex terrains with machine-like precision and human-like adaptability. The future isn’t just about location—it’s about intelligent, context-aware movement that blurs the line between programmed and intuitive navigation.