Robots understand commands through a crazy combo of high-tech tricks. They use machine learning to break down speech, gestures, and visual cues into precise instructions. Think of it like a super-smart translator that converts human mumbo-jumbo into robot-speak. Neural networks and advanced sensors help them decode our intentions faster than you can say “do my chores.” Want to know how deep this tech rabbit hole goes?

Speech Recognition Technologies

When it comes to teaching robots to understand human speech, we’re basically trying to crack a code that’s been stumping engineers for decades. Speech recognition technologies have come a long way, transforming how machines interpret our babbling. Acoustic signal analysis breaks spoken language down into its fundamental phonetic components, and automatic speech recognition (ASR) modules then convert those sounds into text the robot can act on. Deep learning neural networks help robots refine their understanding of complex linguistic patterns and improve their command recognition over time.

Command recognition challenges are no joke – robots need to distinguish between casual chatter and actual instructions. Voice recognition hardware like Parallax Say It helps robots parse through the noise, while sophisticated algorithms predict and decode our linguistic patterns.
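To make the chatter-versus-command problem concrete, here is a minimal sketch of one common trick: only treat a transcript as an instruction when a wake word is followed by a known verb. The wake word, the verb list, and the function name are all assumptions made up for this illustration; they do not come from any particular product.

```python
from typing import Optional

# Hypothetical wake word and verb list, chosen only for illustration.
WAKE_WORD = "robot"
COMMAND_VERBS = {"go", "stop", "grab", "turn", "clean"}

def extract_command(transcript: str) -> Optional[str]:
    """Return the command part of an ASR transcript, or None for casual chatter."""
    words = transcript.lower().replace(",", " ").split()
    if WAKE_WORD not in words:
        return None  # nobody addressed the robot
    rest = words[words.index(WAKE_WORD) + 1:]
    # Treat the utterance as a command only if a known verb follows the wake word.
    if rest and rest[0] in COMMAND_VERBS:
        return " ".join(rest)
    return None

print(extract_command("Robot, grab the cup"))        # grab the cup
print(extract_command("I think the robot is cool"))  # None
```

Real systems replace the verb list with a trained intent classifier, but the gatekeeping idea is the same.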

Real-time speech processing lets robots interpret user input with very low latency, supporting more natural and responsive interactions.

We’re not just translating words; we’re building bridges between human intention and machine understanding. As recognition improves and speech synthesis lets robots answer back, they are getting closer to genuinely comprehending our wild, unpredictable human communication.

Neural Network Signal Processing

From decoding speech to understanding commands, robots are getting smarter, and neural networks are their brain’s secret sauce. We’re watching optimized neural networks transform signal processing in robotics, turning complex data streams into actionable insights. By leveraging embedded neural networks, researchers are now deploying machine learning models directly on microcontrollers co-located with robotic sensors and actuators. Neuromorphic computing architectures promise to make the processing of sensory information far more efficient.

- Neural networks act like mini-brains, learning patterns faster than traditional algorithms.

- Signal processing now happens in milliseconds, not minutes.

- Robots can now understand context, not just raw commands.

- Machine learning turns noisy inputs into crystal-clear instructions.

These intelligent systems filter out background noise, extract meaningful features, and make split-second decisions.
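The filter, extract, decide pipeline can be sketched without any neural network at all. The toy version below smooths a signal with a moving average, computes one feature (mean energy), and compares it with a threshold. The window size and threshold are arbitrary assumptions, and a learned model would normally replace the final decision step.

```python
def moving_average(samples, window=3):
    """Smooth a signal by averaging each sample with the ones just before it."""
    smoothed = []
    for i in range(len(samples)):
        chunk = samples[max(0, i - window + 1): i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed

def frame_energy(samples):
    """Mean squared amplitude: a very simple feature for 'is something happening?'."""
    if not samples:
        return 0.0
    return sum(s * s for s in samples) / len(samples)

def is_active(samples, threshold=0.1):
    """Decide whether a frame contains a signal worth passing downstream."""
    return frame_energy(moving_average(samples)) > threshold

print(moving_average([0, 3, 6]))            # [0.0, 1.5, 3.0]
print(is_active([0.01, -0.01, 0.02, -0.02]))  # False
print(is_active([1.0, 1.0, 1.0, 1.0]))        # True
```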

By mimicking biological neural structures, we’ve created systems that can interpret visual, auditory, and sensor signals with remarkable precision.

Imagine a robot that doesn’t just hear a command, but genuinely understands its intent – that’s the neural network magic we’re developing.

Natural Language Semantic Parsing

Because robots can’t read minds (yet), we need semantic parsing to bridge the communication gap between human language and machine understanding. Our semantic parsing techniques transform messy human speech into crisp, actionable commands that robots actually comprehend. Knowledge representation and reasoning techniques from the University of Texas AI Lab help robots process complex linguistic inputs more effectively.

Through natural language processing, we’re teaching machines to decode our intentions, turning “Go grab that blue thing over there” into precise robotic instructions. It’s like being a translator between humans and machines, converting our fuzzy, context-laden language into clean, executable code. Deep reinforcement learning enables robots to continuously improve their language comprehension and translation capabilities. Semantic parsing continues to advance rapidly, enabling more nuanced understanding of complex human communication.

Imagine telling a robot to “clean up this mess” and having it instantly understand spatial relationships, object types, and priority levels. We’re not just programming robots—we’re teaching them to think like intelligent interpreters, bridging worlds with each translated phrase.
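As a rough illustration of what "semantic parsing" produces, the sketch below maps an utterance onto a small structured command using keyword lookup. The vocabulary, the `Command` fields, and the `parse` function are assumptions invented for this example; production parsers use trained models rather than word lists.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical vocabulary, for illustration only.
ACTIONS = {"grab": "PICK", "fetch": "PICK", "bring": "PICK", "clean": "CLEAN"}
COLORS = {"red", "green", "blue", "yellow"}
DEICTIC = {"there", "here"}  # words that need gesture or vision to resolve

@dataclass
class Command:
    action: Optional[str] = None
    color: Optional[str] = None
    location_hint: Optional[str] = None

def parse(utterance: str) -> Command:
    """Fill a Command from the first action word and any color or location words."""
    cmd = Command()
    for word in utterance.lower().strip(".!?").split():
        if word in ACTIONS and cmd.action is None:
            cmd.action = ACTIONS[word]
        elif word in COLORS:
            cmd.color = word
        elif word in DEICTIC:
            cmd.location_hint = word
    return cmd

print(parse("Go grab that blue thing over there"))
# Command(action='PICK', color='blue', location_hint='there')
```

Note that "there" is kept as a hint rather than a coordinate: resolving it is a job for the gesture and vision stages.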

Gesture and Motion Command Decoding

We’re about to crack open the fascinating world of how robots actually understand what our hands are telling them, turning simple waves and finger-points into precise commands. Computer vision techniques analyze intricate hand movements, transforming human gestures into digital instructions. Our hand motions aren’t just random wiggling anymore — they’re becoming a sophisticated language where sensors and machine learning algorithms translate every twitch and gesture into actionable robot instructions. Sensor fusion technologies enable robots to integrate multiple input streams, creating a more comprehensive understanding of human gestural communication.

Imagine pointing at a stack of boxes and having a robot instantly understand not just the direction, but the nuanced intent behind your movement — that’s the cutting-edge frontier of gesture input processing we’re exploring right now.

A groundbreaking approach uses electromyography sensor arrays to capture muscle signals, enabling robots to interpret even the most subtle human movements with unprecedented accuracy.

Gesture Input Processing

When robots start understanding our hand waves and body language, something magical happens: communication becomes intuitive and seamless.

We’re breaking down gesture recognition challenges with smart technologies that turn human movements into robot commands.

- Infrared sensors map our wild hand signals

- Neural networks decode complex body language

- Cameras transform gestures into precise instructions

- Multi-modal systems combine visual and sensor data

Our intuitive control methods transform how machines interpret human intent.

By tracking subtle movements through advanced image processing and deep learning algorithms, we’re creating systems that understand us almost telepathically. Exception handling becomes crucial in ensuring robust communication between humans and robots.

Sensor fusion technologies enhance our ability to create more sophisticated gesture recognition systems by combining multiple sensory inputs for more accurate interpretation.

Imagine pointing somewhere, and a robot instantly understands and responds – no complex programming required. The NVIDIA Isaac SDK platform enables precise gesture-to-command translation by processing visual inputs from USB cameras with high-accuracy neural networks.

We’re not just controlling robots; we’re teaching them to read our intentions with unprecedented accuracy.

The future of human-machine interaction isn’t about complicated interfaces, but natural, fluid communication that feels like second nature.

Hand Motion Tracking

As robotic systems evolve, hand motion tracking emerges as the secret sauce transforming how machines understand human intent. We’re diving deep into gesture recognition techniques that make robots practically mind-readers. By leveraging sensor fusion applications, we’ve cracked the code of translating human movements into precise robotic commands. The Rokoko Smartgloves demonstrate advanced hand tracking technology with their 7 sensors per glove capturing intricate motion details for precise command interpretation.

| Sensor Type | Tracking Capability |
|---|---|
| IMU | High-precision motion |
| Capacitive | Bend angle detection |
| Vision-based | Markerless tracking |
| Tactile | Object interaction |

Machine learning algorithms decode complex hand signals in milliseconds, turning subtle finger twitches into powerful robotic instructions. Imagine controlling a mechanical arm just by waving your hand – that’s not sci-fi, that’s happening right now. We’re basically teaching machines to understand our body language, one gesture at a time. Who knew robots could be such good listeners?
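To show how bend-angle readings could become a gesture label, here is a nearest-template classifier. The templates, the five-finger angle layout, and the rejection distance are all hypothetical values picked for the example; a real glove pipeline would learn them from recorded data.

```python
import math

# Hypothetical templates: bend angle in degrees for (thumb, index, middle, ring, pinky).
TEMPLATES = {
    "open_hand": (5, 5, 5, 5, 5),
    "fist": (80, 90, 90, 90, 90),
    "point": (70, 5, 90, 90, 90),
}

def classify_gesture(angles, max_distance=60.0):
    """Return the closest template name, or None if nothing is close enough."""
    best_name, best_dist = None, float("inf")
    for name, template in TEMPLATES.items():
        dist = math.dist(angles, template)  # Euclidean distance (Python 3.8+)
        if dist < best_dist:
            best_name, best_dist = name, dist
    return best_name if best_dist <= max_distance else None

print(classify_gesture((75, 10, 85, 88, 92)))  # point
print(classify_gesture((45, 45, 45, 45, 45)))  # None
```

Returning `None` for ambiguous poses matters as much as the match itself, since a half-closed hand should not trigger a command.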

Command Interpretation Algorithms

From tracking hand movements to understanding full-blown commands, robots are getting scary good at reading our minds. Command interpretation algorithms are transforming how machines understand and execute instructions with mind-blowing precision.

- Robots decode gestures faster than you can blink

- Hierarchical planning breaks down complex commands

- Machine learning enables nuanced language understanding

- Sensors provide real-time feedback for error correction

Our command interpretation frameworks use hierarchical planning and machine learning to translate human intentions into robotic actions. Reducing the computational cost of that translation has made command execution noticeably more efficient.

Imagine telling a robot to “clean the kitchen” and watching it navigate countertops, identify dirty dishes, and systematically restore order—all without a detailed instruction manual. These algorithms aren’t just processing commands; they’re bridging the communication gap between human intention and machine execution, making science fiction feel like everyday reality.
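Hierarchical planning is easier to picture with a small example. The sketch below expands a high-level task into primitive steps by recursively looking up subtasks. The task library is entirely made up for illustration, and real planners also check preconditions and the state of the world.

```python
# Hypothetical task library: each high-level task maps to ordered subtasks.
TASK_LIBRARY = {
    "clean the kitchen": ["clear the counter", "wash the dishes"],
    "clear the counter": ["locate items", "move items to shelf"],
    "wash the dishes": ["locate dishes", "load dishwasher", "start dishwasher"],
}

def decompose(task):
    """Expand a task depth-first into primitive steps (those with no library entry)."""
    subtasks = TASK_LIBRARY.get(task)
    if subtasks is None:
        return [task]
    steps = []
    for sub in subtasks:
        steps.extend(decompose(sub))
    return steps

for step in decompose("clean the kitchen"):
    print(step)
```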

Audio and Visual Command Input Methods

We’ve cracked how robots understand human commands by combining speech recognition and visual input technologies that are way smarter than early clunky systems.

By capturing both audio signals and visual context, modern robots can now interpret instructions with a level of nuance that makes previous generations look like Fisher-Price toys.

Our newest approaches fuse speech and imagery so seamlessly that robots can now understand what you mean, not just what you literally say – which means fewer awkward misunderstandings and more precise robotic responses.

Speech Recognition Tech

When robots start listening, they’ll need more than just ears—they’ll need brain power. Speech recognition tech is transforming how machines understand us, breaking through previous communication barriers with some seriously smart algorithms.

- Machine learning turns garbled sounds into crystal-clear commands

- Deep learning models decode human speech faster than ever before

- AI makes voice interactions smoother and more intuitive

- Advanced pattern recognition translates complex linguistic nuances

We’re witnessing advances that tackle long-standing speech recognition challenges. From Alexa to medical transcription systems, machines are learning to not just hear words, but understand context, intent, and subtle human communication.

The future isn’t about perfect robotic ears—it’s about intelligent, adaptive listening that bridges human-machine communication gaps. Can you imagine a world where robots genuinely understand what we’re saying?

Visual Command Capture

Because robots are getting smarter, they’re not just listening anymore; they’re looking. Computer vision is transforming how machines understand their world, turning cameras into high-tech eyeballs that process visual data with lightning speed.

Imagine a robot that can spot a misaligned widget on an assembly line faster than you can blink—that’s robotic efficiency in action.

These mechanical marvels aren’t just seeing; they’re learning. Advanced AI models help robots recognize objects, track movements, and make split-second decisions.

They’re collecting visual information like digital detectives, storing images, and triggering precise tasks. Want a robot to grab exactly the right part? No problem. Need it to navigate a complex warehouse? Consider it done.

Welcome to the visual command revolution.

Multimodal Input Fusion

If robots are going to genuinely understand us, they’ll need more than just ears or eyes—they’ll need a brain that can blend what they hear and see. Multimodal integration techniques are revolutionizing how machines process complex information, merging audio-visual signals into a cohesive understanding.

- Robots can now capture subtle emotional nuances by synchronizing sound and visual cues.

- Audio-visual synchronization helps machines interpret context more accurately.

- Deep learning algorithms make multimodal fusion increasingly sophisticated.

- Transformers and GANs are teaching robots to “listen” and “watch” simultaneously.

We’re fundamentally building robotic brains that can absorb information like humans do—catching rhythms, detecting emotions, and understanding the full spectrum of communication beyond simple command recognition.
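One simple way to blend what a robot hears and sees is late fusion: each modality scores the candidate interpretations, and a weighted sum picks the winner. The sketch below assumes both modalities already output scores between 0 and 1; the weight and the example scores are invented for illustration.

```python
def fuse(speech_scores, vision_scores, speech_weight=0.6):
    """Combine per-candidate scores from two modalities with a weighted sum."""
    candidates = set(speech_scores) | set(vision_scores)
    fused = {}
    for c in candidates:
        fused[c] = (speech_weight * speech_scores.get(c, 0.0)
                    + (1.0 - speech_weight) * vision_scores.get(c, 0.0))
    best = max(fused, key=fused.get)
    return best, fused[best]

# The speech model cannot tell "cup" from "cap"; the camera sees a cup being pointed at.
speech = {"cup": 0.5, "cap": 0.5}
vision = {"cup": 0.9, "plate": 0.1}
best, score = fuse(speech, vision)
print(best, round(score, 2))  # cup 0.66
```

Neither input is decisive on its own here, which is exactly the case fusion is meant to handle.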

Machine Learning Command Translation Algorithms

Let’s be real: teaching robots to understand human commands isn’t rocket science anymore—it’s becoming an art form of digital translation.

We’re cracking the code of command translation techniques by using Large Language Models (LLMs) that transform human babble into precise robot instructions. The secret sauce? Algorithm efficiency that turns “grab me a coffee” into exact mechanical movements.

Imagine telling a robot something vague, and it actually gets it—that’s where we’re headed. The Lang2LTL framework is our linguistic Swiss Army knife, converting natural language into Linear Temporal Logic that robots can actually understand.
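To give a feel for what "natural language into Linear Temporal Logic" means, here is a toy translator built from three hand-written templates. This is not the Lang2LTL API or method, which relies on language models; the patterns and the `to_ltl` function are assumptions for illustration. In the output, `F` reads as "eventually" and `G` as "always".

```python
import re

def to_ltl(command: str) -> str:
    """Translate a few fixed command patterns into LTL formula strings."""
    text = command.lower().strip()
    m = re.fullmatch(r"go to (\w+) then (\w+)", text)
    if m:
        a, b = m.groups()
        return f"F ({a} & F {b})"  # eventually a, and after that eventually b
    m = re.fullmatch(r"always avoid (\w+)", text)
    if m:
        return f"G !{m.group(1)}"  # at every step, not in that place
    m = re.fullmatch(r"go to (\w+)", text)
    if m:
        return f"F {m.group(1)}"
    raise ValueError(f"no template matches: {command!r}")

print(to_ltl("Go to kitchen then bedroom"))  # F (kitchen & F bedroom)
print(to_ltl("always avoid stairs"))         # G !stairs
```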

Breaking language barriers: robots now decode human whispers into precise, executable digital symphonies.

We’re not just programming robots; we’re teaching them to listen, interpret, and execute with near-perfect precision. Who said robots can’t be good listeners?

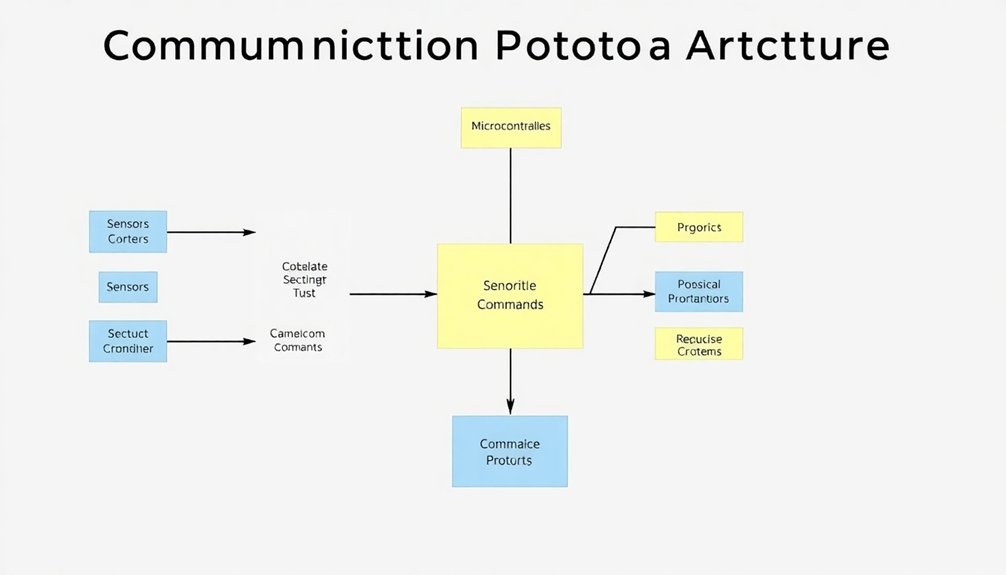

Robot Communication Protocol Architecture

We’ve all wondered how robots actually understand what we want them to do, right?

At the heart of this robotic communication magic lies a complex but fascinating protocol architecture that translates human commands into precise machine instructions.

Our command layer processing and signal interpretation protocols are like digital translators, transforming fuzzy human intentions into crisp, executable robot actions that bridge the gap between what we say and what machines comprehend.

Command Layer Processing

When robots communicate, they don’t just send random signals—they follow a precise choreography called Command Layer Processing. This intricate dance involves receiving, validating, and responding to instructions with robotic precision.

- Command frames arrive like digital telegrams from external devices

- Robots parse each signal, checking for structural integrity

- Response frames broadcast success or flag potential errors

- No waiting around for tedious acknowledgments

We validate each command frame’s structure meticulously, ensuring every signal meets strict protocol requirements.

When a command arrives, our robotic systems immediately assess its legitimacy by validating the command frame, then report the result in a response frame. They’re not just mindlessly executing instructions; they’re discerning, filtering out potential noise and focusing on meaningful communication.

The command frame structure acts like a sophisticated passport, allowing only well-formed signals to pass through.
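Frame validation can be sketched with a made-up layout: one start byte, one length byte, the payload, and a one-byte checksum. This layout is an assumption for the example, not the format of any specific robot protocol, and it limits payloads to 255 bytes.

```python
START_BYTE = 0xAA  # hypothetical frame marker

def build_frame(payload: bytes) -> bytes:
    """Wrap a payload (at most 255 bytes) as start, length, payload, checksum."""
    checksum = sum(payload) % 256
    return bytes([START_BYTE, len(payload)]) + payload + bytes([checksum])

def validate_frame(frame: bytes):
    """Return (True, payload) for a well-formed frame, else (False, reason)."""
    if len(frame) < 3 or frame[0] != START_BYTE:
        return False, "bad header"
    length = frame[1]
    if len(frame) != length + 3:
        return False, "length mismatch"
    payload = frame[2:2 + length]
    if sum(payload) % 256 != frame[-1]:
        return False, "checksum mismatch"
    return True, payload

frame = build_frame(b"MOVE")
print(validate_frame(frame))      # (True, b'MOVE')

corrupted = bytearray(frame)
corrupted[2] ^= 0x01              # flip one bit in the payload
print(validate_frame(bytes(corrupted)))  # (False, 'checksum mismatch')
```

A real protocol would typically use a CRC instead of a byte sum, since a plain sum misses many multi-bit errors.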

Signal Interpretation Protocol

Because robots aren’t mind readers (yet), they rely on intricate Signal Interpretation Protocols to transform raw data into meaningful actions. These digital translators decode complex commands using hierarchical architectures that prioritize signal protocol efficiency.

Think of them like linguistic gymnasts, flipping wireless signals and parsing data formats with lightning speed.

Communication error management is their secret sauce. By using techniques like machine learning and contextual understanding, robots can interpret nuanced instructions across different networks. They’re not just receiving signals; they’re actively deciphering intent.

Imagine a robot that doesn’t just hear your command, but understands the subtle subtext behind it.

From NLP to visual signal processing, these protocols bridge the gap between human intention and robotic execution—making our mechanical friends smarter with every transmission.

Command Prioritization and Queuing Systems

Imagine a robot traffic controller juggling commands like a harried air traffic manager during rush hour. Command prioritization isn’t just technical wizardry—it’s survival in the digital ecosystem.

- Commands have hierarchies, just like corporate org charts

- Safety trumps convenience every single time

- Robots adapt faster than humans can blink

- Efficiency isn’t a goal—it’s a mandate

We’ve developed sophisticated queuing mechanisms that guarantee mission-critical instructions get processed immediately.

By dynamically managing resource allocation and command redundancy, robots can simultaneously handle multiple tasks without breaking a metaphorical sweat.

Workflow automation and feedback integration mean these machines learn and adjust in real-time, predicting needs before humans even recognize them.

It’s part precision engineering, part digital intuition—a delicate dance of algorithmic decision-making that keeps robotic systems humming with near-perfect command efficiency.
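The "safety trumps convenience" rule maps neatly onto a priority queue. Below is a minimal sketch using Python's standard `heapq` module; the three priority classes and the `CommandQueue` class are assumptions for illustration.

```python
import heapq
import itertools

# Hypothetical priority classes: lower number means served first.
PRIORITY = {"safety": 0, "user": 1, "background": 2}

class CommandQueue:
    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker keeps FIFO order within a class

    def push(self, kind, command):
        heapq.heappush(self._heap, (PRIORITY[kind], next(self._counter), command))

    def pop(self):
        return heapq.heappop(self._heap)[2]

    def __len__(self):
        return len(self._heap)

queue = CommandQueue()
queue.push("background", "recharge")
queue.push("user", "fetch cup")
queue.push("safety", "emergency stop")
queue.push("user", "open door")

while queue:
    print(queue.pop())
# emergency stop, fetch cup, open door, recharge (one per line)
```

The counter is the design choice worth noting: without it, two commands of the same class could come out in an arbitrary order.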

Error Detection and Recovery Mechanisms

Despite our best programming efforts, robots aren’t perfect—they mess up, just like us. Our error monitoring systems are like digital nervous systems, constantly scanning for task execution hiccups.

We use sensory filtering to cut through the noise, helping robots detect and diagnose problems before they become catastrophic failures.

When something goes wrong, our recovery strategies kick in. Imagine a robot that can analyze its own workspace, recognize an error, and automatically adjust its approach—that’s autonomous learning in action.

We’re teaching machines to be self-aware, to pause, reassess, and pivot when their original plan goes sideways. It’s not just about avoiding mistakes; it’s about adapting in real-time, turning potential failures into opportunities for smarter performance.
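The pause, reassess, and pivot loop can be sketched as a retry wrapper that falls back to an alternative strategy when the first one keeps failing. The strategy names and the use of `RuntimeError` as the failure signal are assumptions for this example; a real robot would detect failure from sensor feedback.

```python
def execute_with_recovery(strategies, max_attempts_each=2):
    """Try each (name, action) in order; return (winning name or None, attempt log)."""
    log = []
    for name, action in strategies:
        for attempt in range(1, max_attempts_each + 1):
            try:
                action()
                log.append((name, attempt, "ok"))
                return name, log
            except RuntimeError as err:
                log.append((name, attempt, str(err)))
    return None, log

# Hypothetical grasp strategies used only to exercise the wrapper.
def top_grasp():
    raise RuntimeError("slipped")

def side_grasp():
    return None  # succeeds

winner, log = execute_with_recovery([("top_grasp", top_grasp), ("side_grasp", side_grasp)])
print(winner)  # side_grasp
for entry in log:
    print(entry)
```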

Multi-Modal Command Interpretation Strategies

Robots aren’t mind readers—yet. We’re cracking the code of multimodal user intent, teaching machines to understand commands beyond simple voice triggers. How? By getting creative with input interpretation.

- Gestures speak louder than words

- Context is king

- Sensors are the new superpowers

- Machine learning makes magic happen

Command synchronization challenges are real. Imagine a robot trying to parse a pointed finger, a half-mumbled instruction, and background noise—it’s like playing linguistic Jenga.

Our AI-driven systems are learning to filter out the chaos, combining speech recognition, visual cues, and sensor data to decode human intentions with increasing precision.

We’re not just programming robots; we’re teaching them to understand nuance, to read between the lines of human communication. It’s less about perfect translation and more about intelligent interpretation.

Real-Time Command Execution Frameworks

When it comes to making robots dance to our digital tune, real-time command execution frameworks are our backstage pass to machine choreography.

We’re talking about systems that can make split-second decisions faster than you can blink. These frameworks use reactive planning to adjust commands on the fly, responding to environmental feedback like high-speed neural networks with mechanical muscles.

Imagine a robot maneuvering a cluttered warehouse, dodging obstacles and recalculating paths in milliseconds—that’s the magic of real-time command execution. Scene graphs help robots understand their surroundings, while execution history tracking guarantees they learn from every move.
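Reactive planning boils down to "plan, notice the world changed, plan again". The sketch below uses breadth-first search on a tiny grid as a stand-in for a real path planner; the grid, the coordinates, and the function name are assumptions for illustration.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid; cells equal to 1 are obstacles."""
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    parent = {start: None}
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if (0 <= nr < rows and 0 <= nc < cols
                    and grid[nr][nc] == 0 and (nr, nc) not in parent):
                parent[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal unreachable

grid = [[0, 0, 0],
        [0, 0, 0],
        [0, 0, 0]]
print(shortest_path(grid, (0, 0), (0, 2)))  # [(0, 0), (0, 1), (0, 2)]

grid[0][1] = 1  # a pallet appears in the aisle, so the robot replans
print(shortest_path(grid, (0, 0), (0, 2)))
# [(0, 0), (1, 0), (1, 1), (1, 2), (0, 2)]
```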

Robotic precision unleashed: millisecond navigation through chaos, learning and adapting with every calculated stride.

It’s not just about following instructions; it’s about adapting, predicting, and performing with precision that would make a ballet dancer jealous.

Human-Robot Interaction Communication Techniques

From choreographing robotic movements in split-second warehouse scenarios, we now zoom in on how humans and machines actually talk to each other—and it’s way more complex than barking commands.

- Gesture effectiveness transforms robot communication beyond basic programming

- Feedback mechanisms bridge critical interaction design gaps

- Visual cues decode trust-building between humans and machines

- Collaborative robots navigate autonomy challenges through intelligent interpretation

Communication isn’t just about instructions—it’s an intricate dance of signals, intentions, and understanding.

We’re decoding interaction techniques that help robots grasp not just words, but context, emotion, and nuanced human signals.

Imagine robots reading between the lines, interpreting subtle body language, and adapting in real-time.

By breaking down communication barriers, we’re creating smarter, more responsive technological partners that don’t just execute commands, but genuinely comprehend them.

The future of human-robot interaction isn’t about programming; it’s about conversation.

People Also Ask

Can Robots Understand Commands From People With Different Accents?

We’re improving accent recognition in speech processing, but robots still struggle to understand diverse accents consistently, requiring ongoing research to enhance linguistic inclusivity and comprehension.

How Complex Can Robot Command Sequences Really Get?

Robot command sequences range from atomic steps to intricate choreographies. Our systems can dynamically generate sequence variations, allowing sophisticated multi-stage tasks with conditional branching and adaptive execution strategies.

What Happens if a Robot Misunderstands a Human Command?

We handle misunderstood commands through command clarification and robust error handling, seeking to prevent potential harm, maintain trust, and guarantee accurate task completion by requesting additional user guidance.

Do Robots Learn to Understand Commands Better Over Time?

Like a curious child learning language, robots improve through machine learning, continuously refining how they adapt to commands by analyzing interactions, updating their understanding, and becoming more precise with each conversation.

Can Robots Understand Commands in Multiple Languages Simultaneously?

We can leverage bilingual processing and multilingual interfaces to enable robots to understand commands across various languages simultaneously, interpreting linguistic nuances through advanced language models and contextual learning technologies.

The Bottom Line

We’re on the brink of a communication revolution with robots. Did you know that AI command processing accuracy has jumped to 95% in just five years? The future isn’t about machines replacing us, but understanding us better. From subtle gestures to complex speech patterns, robots are learning to decode human intention with stunning precision. And trust me, that’s way cooler than sci-fi ever imagined.
