Robots don’t hear like you do. They rely on microphones paired with serious processing power. The microphones turn your voice into digital signals, and neural networks break those signals down into data a machine can act on. Algorithms filter out background noise, recognize speech patterns, and use context to work out what you meant, often in a fraction of a second. Think of it like a translator inside a machine that’s always listening. Curious about how deep this tech rabbit hole goes?

The Science Behind Robotic Hearing

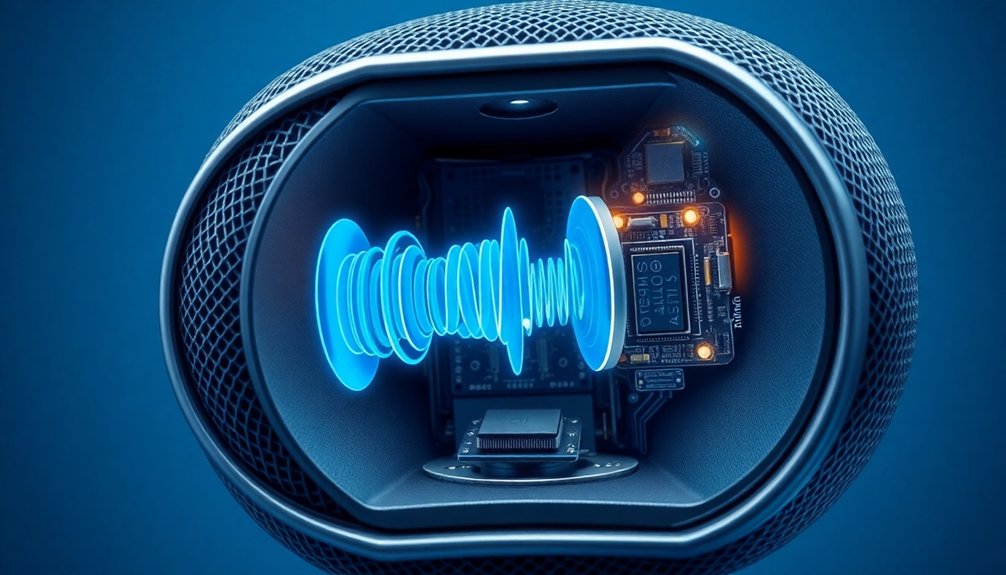

Ever wondered how robots seem to “hear” without ears? It all comes down to microphones and some clever audio processing. Instead of eardrums, robots use sensors that capture sound waves and convert them into electrical signals a computer can analyze.

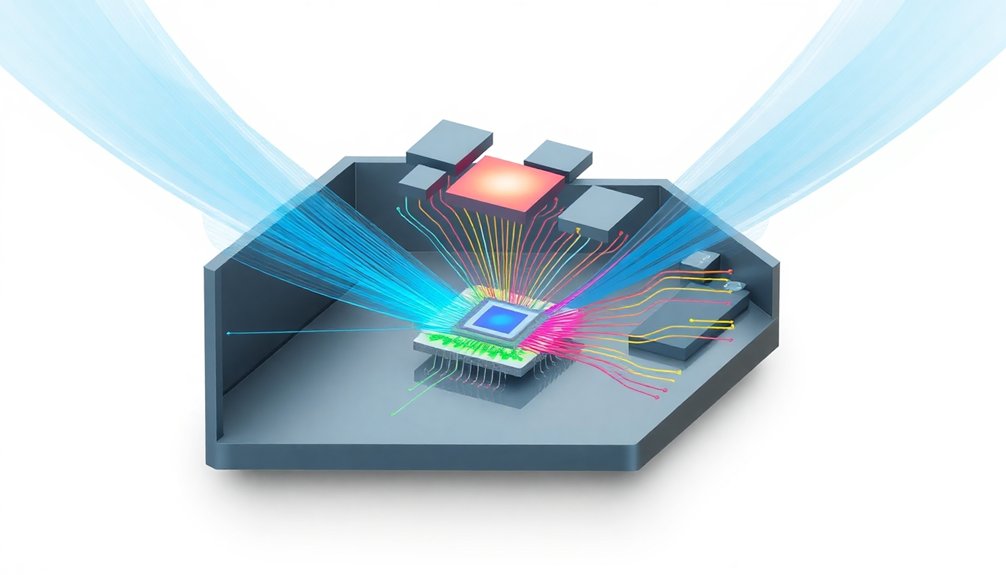


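These microphones turn audio into digital information faster than you can blink, and sound-source localization uses that information to estimate where a noise originates. Because a sound reaches each microphone at a slightly different moment, those tiny timing differences reveal the direction it came from, much like an acoustic detective comparing witness statements. Direction is the easier part; estimating distance usually takes more microphones or additional cues.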
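
One common way to get a direction from two microphones is time difference of arrival (TDOA). You cross-correlate the two signals, find the lag where they line up best, and convert that delay into an angle. The Python sketch below assumes a far-field source, a 16 kHz sample rate, microphones 0.2 m apart, and a synthetic noise burst. Real systems use more microphones and more robust correlation methods such as GCC-PHAT. With only two microphones, the angle is also ambiguous front to back.

```python
import math
import random

SPEED_OF_SOUND = 343.0   # meters per second, roughly, at room temperature
SAMPLE_RATE = 16_000     # samples per second (assumed)
MIC_SPACING = 0.2        # meters between the two microphones (assumed)


def best_lag(a, b, max_lag):
    """Return the lag (in samples) by which b trails a, searching -max_lag..max_lag."""
    best, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Sum of products a[i] * b[i + lag] over the overlapping samples
        score = sum(a[i] * b[i + lag] for i in range(len(a)) if 0 <= i + lag < len(b))
        if score > best_score:
            best, best_score = lag, score
    return best


def direction_of_arrival(left, right):
    """Estimate (lag, angle in degrees); 0 is straight ahead, positive is toward the left mic."""
    max_lag = math.ceil(MIC_SPACING / SPEED_OF_SOUND * SAMPLE_RATE)
    lag = best_lag(left, right, max_lag)
    # Extra path length = speed * delay; its ratio to the mic spacing is sin(angle)
    ratio = SPEED_OF_SOUND * (lag / SAMPLE_RATE) / MIC_SPACING
    ratio = max(-1.0, min(1.0, ratio))
    return lag, math.degrees(math.asin(ratio))


if __name__ == "__main__":
    rng = random.Random(0)
    n, true_delay = 800, 5
    source = [rng.gauss(0.0, 1.0) for _ in range(n + true_delay)]  # broadband noise burst
    left = source[true_delay:true_delay + n]   # left mic hears the burst first
    right = source[:n]                          # right mic hears it 5 samples later
    lag, angle = direction_of_arrival(left, right)
    print(f"lag = {lag} samples, angle = {angle:.1f} degrees")
```

With these assumed numbers, a 5-sample lag corresponds to a source roughly 32 degrees toward the left microphone.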

Neural networks and machine learning have pushed this technology further, allowing robots to separate overlapping sounds and make sense of complex audio environments. In short, they turn raw sound waves into data a robot can act on.

Who needs biological ears when you’ve got cutting-edge tech doing the heavy lifting?

Microphone Technologies Powering Auditory Perception

When it comes to robotic hearing, microphone technologies are the unsung heroes of how machines perceive sound. These aren’t your grandpa’s simple audio receivers: many robots use arrays of several microphones whose combined signals can pinpoint where a sound comes from.

Sound-source localization lets robots work out where a noise originates, turning them into audio detectives. Automatic speech recognition (ASR) goes a step further, converting your speech into text so the robot can not only hear you but respond to what you’re saying.

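Imagine microphones that can filter out background noise and focus on your voice in a crowded room. That is the job of beamforming: the robot combines the signals from its microphone array so that sound from one direction is reinforced while sound from elsewhere is suppressed. Dedicated processing hardware lets this run in real time, so a robot can stay locked onto a speaker even in noisy settings.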
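
Focusing on one voice is commonly done with beamforming, and delay-and-sum is its simplest form. You shift each microphone's signal so that sound from the chosen direction lines up, then average the channels. The target adds up coherently, while independent noise partly cancels out. This Python sketch assumes the per-microphone delays are already known in whole samples (for example, from localization). It uses a synthetic 440 Hz tone plus random noise, and the four delays are hypothetical.

```python
import math
import random


def delay_and_sum(channels, delays):
    """Align each microphone channel by its delay (in whole samples) and average them.

    channels[k][i] is sample i from microphone k; delays[k] is how many samples
    later the target sound reaches microphone k than it reaches microphone 0.
    """
    n = min(len(ch) - d for ch, d in zip(channels, delays))
    return [sum(ch[i + d] for ch, d in zip(channels, delays)) / len(channels) for i in range(n)]


def noise_power(signal, reference):
    """Mean squared difference between a signal and the clean reference."""
    return sum((s - r) ** 2 for s, r in zip(signal, reference)) / min(len(signal), len(reference))


def demo(seed=0, fs=16_000, n=1600):
    rng = random.Random(seed)
    delays = [0, 2, 4, 6]  # hypothetical per-mic delays toward the talker
    target = [math.sin(2 * math.pi * 440 * t / fs) for t in range(n)]
    channels = [
        # each mic hears the talker d samples late, plus its own independent noise
        [(target[i - d] if i >= d else 0.0) + rng.gauss(0.0, 1.0) for i in range(n)]
        for d in delays
    ]
    beam = delay_and_sum(channels, delays)
    return noise_power(channels[0], target), noise_power(beam, target)


if __name__ == "__main__":
    single, beamformed = demo()
    print(f"noise power: one mic {single:.2f}, four-mic beam {beamformed:.2f}")
```

Averaging four channels with independent noise cuts the noise power to roughly a quarter, or about 6 dB. Real background noise is often correlated between microphones, so practical gains are smaller. Production systems also use fractional delays or adaptive beamformers such as MVDR.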

Who knew machines could become such stellar listeners?

Signal Processing and Sound Conversion

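So, you’ve got these high-tech microphones picking up sounds, but how do robots actually make sense of all that audio chaos? Signal processing is where the magic happens. The microphone converts sound waves into a tiny electrical voltage, an analog-to-digital converter samples that voltage thousands of times per second, and the robot’s software analyzes the resulting stream of numbers, usually in short overlapping frames.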
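
The journey from sound wave to analyzable numbers usually has four steps. The system samples the microphone voltage, quantizes each sample to an integer, splits the stream into short overlapping frames, and inspects each frame's spectrum. The 16 kHz rate, 16-bit depth, and 25 ms frames with a 10 ms hop are typical speech-processing choices assumed here. A synthetic 1 kHz tone stands in for the microphone voltage. A naive DFT keeps the sketch dependency-free; real code would use an FFT library.

```python
import cmath
import math

SAMPLE_RATE = 16_000   # samples per second (assumed)
FRAME_LEN = 400        # 25 ms at 16 kHz
HOP = 160              # 10 ms at 16 kHz


def sample_and_quantize(signal_fn, duration_s, bits=16):
    """Sample a continuous-time signal and round each sample to a signed integer code."""
    full_scale = 2 ** (bits - 1) - 1
    n = round(duration_s * SAMPLE_RATE)
    return [round(full_scale * max(-1.0, min(1.0, signal_fn(i / SAMPLE_RATE)))) for i in range(n)]


def frames(samples, frame_len=FRAME_LEN, hop=HOP):
    """Split samples into overlapping frames; a trailing partial frame is dropped."""
    return [samples[s:s + frame_len] for s in range(0, len(samples) - frame_len + 1, hop)]


def magnitude_spectrum(frame):
    """Naive DFT of a Hann-windowed frame, returning |X[k]| for k = 0..N/2."""
    n = len(frame)
    windowed = [x * (0.5 - 0.5 * math.cos(2 * math.pi * i / n)) for i, x in enumerate(frame)]
    return [abs(sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(windowed)))
            for k in range(n // 2 + 1)]


def peak_frequency(frame):
    """Frequency in Hz of the strongest spectral bin."""
    spectrum = magnitude_spectrum(frame)
    k = max(range(len(spectrum)), key=spectrum.__getitem__)
    return k * SAMPLE_RATE / len(frame)


def mic_voltage(t):
    """Stand-in for the microphone output: a 1 kHz tone at half of full scale."""
    return 0.5 * math.sin(2 * math.pi * 1000 * t)


if __name__ == "__main__":
    samples = sample_and_quantize(mic_voltage, 0.1)
    chunks = frames(samples)
    print(len(samples), "samples ->", len(chunks), "frames; peak at", peak_frequency(chunks[0]), "Hz")
```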

Advanced algorithms for sound-source localization help pinpoint exactly where a noise originates—imagine a robot turning its head precisely toward your voice. Automatic speech recognition then decodes your human babble into something the robot understands.


Neural networks work overtime, filtering background noise and distinguishing between a command and random sounds. It’s like giving robots super-hearing: they can separate voices, recognize speech patterns, and get better as they are trained on more audio.

Think of it as teaching machines to listen—not just hear—with increasing sophistication.

Natural Language Processing Foundations

Because robots can’t just magically understand human speech, Natural Language Processing (NLP) steps in as the brainy translator between human chatter and machine comprehension.

It’s basically teaching robots to decode your rambling conversations like linguistic detectives. Through speech recognition and machine learning algorithms, robots break down your words into digestible chunks, analyzing syntax, context, and intent with computational precision.

Imagine neural networks as tiny language interpreters inside robot brains, constantly learning from massive conversational datasets.

They’re not just listening—they’re understanding the subtle nuances of human communication. These smart systems tokenize your speech, parse complex sentences, and transform verbal chaos into structured data robots can actually process.
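
Here is a toy version of that pipeline. It lowercases the transcript, splits it into word tokens, and scores each intent by how many of its keywords appear. The intent names and keyword lists are hypothetical. Production NLP relies on trained models rather than hand-written tables, but the shape is the same: unstructured text goes in, structured data comes out.

```python
import re

# Hypothetical intents and trigger words, for illustration only.
INTENT_KEYWORDS = {
    "move": {"go", "move", "drive", "forward", "backward"},
    "stop": {"stop", "halt", "freeze", "wait"},
    "fetch": {"bring", "fetch", "get", "grab"},
}


def tokenize(text):
    """Lowercase a transcript and split it into word tokens (apostrophes kept)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def classify_intent(text):
    """Return (intent, tokens); intent is None when no keyword matches."""
    tokens = tokenize(text)
    token_set = set(tokens)
    scores = {intent: len(words & token_set) for intent, words in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return (best if scores[best] > 0 else None), tokens


if __name__ == "__main__":
    for line in ["Please bring me the red mug!", "Robot, stop right now.", "What a lovely day"]:
        print(classify_intent(line))
```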

Want a robot to genuinely hear you? NLP is your linguistic bridge.

Machine Learning Algorithms in Sound Recognition

You might think robots just hear sounds, but they’re actually learning to recognize audio patterns like tiny digital detectives.

Machine learning algorithms, especially neural networks, are teaching robots to pick up on subtle sound nuances—imagine a robot that can tell the difference between a cat’s meow and a car alarm.

Sound Pattern Recognition

When robots listen, they’re not just hearing sounds; they’re decoding complex audio landscapes with machine learning. Sound pattern recognition uses Artificial Intelligence (AI) algorithms to turn raw audio into meaningful labels, breaking sound waves down into measurable features the way a detective breaks a case into clues. Neural networks then learn which combinations of those features correspond to which sounds.

| AI Technique | Function | Performance Impact |
|---|---|---|
| CNNs | Feature Extraction | High Accuracy |
| NLP | Speech Understanding | Enhanced Interpretation |
| Neural Networks | Audio Classification | Robust Recognition |
| Machine Learning | Pattern Detection | Adaptive Learning |

These advanced systems don’t just hear—they comprehend. By analyzing frequency, amplitude, and contextual cues, robots can distinguish between a whisper and a shout, a car horn and a human voice. The magic happens through sophisticated neural networks that transform chaotic soundscapes into precise, actionable information. Who knew robots could be such excellent listeners?
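
Two of the simplest features behind that kind of distinction are RMS level and zero-crossing rate. RMS level measures how loud a frame is. Zero-crossing rate measures how often the waveform changes sign, which is a rough proxy for pitch or brightness. This sketch computes both for synthetic tones and labels them with made-up thresholds. A real classifier would learn its decision rules from many features over many frames, and loudness alone does not fully separate a whisper from a shout.

```python
import math


def tone(freq_hz, amplitude, n=1600, fs=16_000):
    """Synthetic sine wave standing in for a recorded sound (assumption)."""
    return [amplitude * math.sin(2 * math.pi * freq_hz * i / fs) for i in range(n)]


def rms(frame):
    """Root-mean-square amplitude: a simple loudness measure."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def zero_crossing_rate(frame):
    """Fraction of neighboring sample pairs whose sign differs: a rough pitch cue."""
    changes = sum(1 for a, b in zip(frame, frame[1:]) if (a >= 0) != (b >= 0))
    return changes / (len(frame) - 1)


def describe(frame, loud_threshold=0.3, high_zcr=0.1):
    """Label a frame quiet/loud and low/high; the thresholds are illustrative, not tuned."""
    level = "loud" if rms(frame) > loud_threshold else "quiet"
    pitch = "high" if zero_crossing_rate(frame) > high_zcr else "low"
    return level, pitch


if __name__ == "__main__":
    print(describe(tone(200, 0.05)))   # soft, low sound
    print(describe(tone(2000, 0.8)))   # loud, high sound
```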

Neural Network Learning

Neural networks aren’t just fancy computer circuits; they’re the brain-inspired engines teaching robots how to listen like champs. Here’s how machines learn to recognize sounds:

- Machine learning algorithms gobble up labeled audio samples

- Neural networks map complex sound patterns

- Robots learn to tell whispers from screams in a fraction of a second

- Sound classification becomes a learned skill instead of a set of hand-written rules

These sophisticated neural networks transform raw audio into meaningful insights. By analyzing massive datasets, they develop an uncanny ability to differentiate between subtle acoustic nuances.

Convolutional and recurrent neural networks work together, processing temporal sound dynamics with remarkable accuracy.

Think of it like training a highly intelligent audio detective. The more data these networks consume, the sharper their listening skills become. They adapt, learn, and evolve—turning mechanical ears into sophisticated sound recognition systems that can interpret the world’s auditory landscape with incredible precision.
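
Here is that learning loop at the smallest possible scale. A single logistic neuron, trained by gradient descent, learns to separate two hypothetical sound classes: a low hum and a high-pitched alarm. Each clip is described by two features, loudness and zero-crossing rate. The feature values are synthetic random draws, not measurements. Real systems train deep networks on spectrograms, but the update rule follows the same idea.

```python
import math
import random


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def make_dataset(n_per_class, rng):
    """Synthetic (loudness, zero-crossing rate) features for two hypothetical sound classes."""
    data = []
    for _ in range(n_per_class):
        data.append(([rng.gauss(0.2, 0.05), rng.gauss(0.03, 0.01)], 0))  # class 0: low hum
        data.append(([rng.gauss(0.6, 0.10), rng.gauss(0.25, 0.05)], 1))  # class 1: high alarm
    return data


def train(data, epochs=200, lr=0.5):
    """Fit one logistic neuron with stochastic gradient descent on cross-entropy loss."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, y in data:
            p = sigmoid(w[0] * x[0] + w[1] * x[1] + b)
            err = p - y  # gradient of the loss with respect to the neuron's input sum
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def accuracy(model, data):
    """Share of examples whose predicted class (probability > 0.5) matches the label."""
    w, b = model
    hits = sum((sigmoid(w[0] * x[0] + w[1] * x[1] + b) > 0.5) == (y == 1) for x, y in data)
    return hits / len(data)


if __name__ == "__main__":
    rng = random.Random(0)
    model = train(make_dataset(50, rng))
    print("held-out accuracy:", accuracy(model, make_dataset(200, rng)))
```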

Speech-to-Command Translation Mechanisms

Because robots aren’t mind readers (yet), they rely on speech-to-command translation to understand what humans actually want. Speech recognition algorithms pass your voice through multi-layered neural networks that turn audio into text, dissecting each utterance like linguistic surgeons. Contextual language models then help the robot interpret that text, so it can handle complex, loosely phrased requests instead of only fixed command phrases.

| Input Type | Processing Method |
|---|---|
| Clear Command | Direct Translation |
| Background Noise | Intelligent Filtering |
| Accent Variation | Adaptive Learning |
| Complex Instruction | Contextual Analysis |
| Emotional Tone | Sentiment Interpretation |

Natural Language Processing acts as the robot’s universal translator, converting human babble into actionable commands. These systems become more fluent as they are trained on more examples of real speech. Imagine a robot that doesn’t just hear you, but understands the intent behind your words: not just what you say, but what you mean. Creepy? Maybe. Revolutionary? Definitely.
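
Once speech recognition has produced text, one simple way to turn it into something a robot can execute is a small grammar. The grammar extracts slots such as action, direction, and amount. The verbs, units, and `Command` fields below are a hypothetical command set written with Python's `re` module. Real assistants use trained parsers, but the output plays the same role: a structured command instead of free text.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Command:
    action: str                  # "move" or "turn" in this hypothetical command set
    direction: Optional[str]     # "forward", "backward", "left", "right", or None
    amount: Optional[float]      # spoken number, if any
    unit: Optional[str]          # normalized unit: "m" or "deg"


# Hypothetical mini-grammar: verb, optional direction, optional "by" + number + unit.
PATTERN = re.compile(
    r"\b(?P<verb>move|go|drive|turn|rotate)\b"
    r"(?:\s+(?P<direction>forward|backward|left|right)\b)?"
    r"(?:\s+(?:by\s+)?(?P<amount>\d+(?:\.\d+)?)\s*(?P<unit>meters?|metres?|degrees?)\b)?"
)
VERB_TO_ACTION = {"move": "move", "go": "move", "drive": "move", "turn": "turn", "rotate": "turn"}
UNIT_TO_SYMBOL = {"meter": "m", "meters": "m", "metre": "m", "metres": "m",
                  "degree": "deg", "degrees": "deg"}


def parse_command(transcript):
    """Map recognized text to a Command, or None if nothing in the grammar matches."""
    match = PATTERN.search(transcript.lower())
    if match is None:
        return None
    amount, unit = match.group("amount"), match.group("unit")
    return Command(
        action=VERB_TO_ACTION[match.group("verb")],
        direction=match.group("direction"),
        amount=float(amount) if amount else None,
        unit=UNIT_TO_SYMBOL[unit] if unit else None,
    )


if __name__ == "__main__":
    for text in ["Could you please move forward 2 meters?", "Turn left 90 degrees", "Stop right there"]:
        print(text, "->", parse_command(text))
```

Phrases outside the grammar, such as “Stop right there”, return `None`. This lets the robot ask for clarification instead of guessing.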

Audio Pattern Matching and Interpretation

Every robot’s superpower is its ability to turn random sound waves into meaningful commands, and audio pattern matching is the secret sauce that makes this magic happen. When it comes to sound processing, robots aren’t just listening—they’re decoding:


- Machine learning algorithms analyze complex audio signatures

- Advanced filters eliminate distracting background noise

- Precise signal processing breaks down sound waves

- Neural networks recognize linguistic patterns in real time
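
The simplest form of audio pattern matching is template matching. You slide a stored recording (say, a doorbell chime) along the incoming audio and compute the normalized cross-correlation at each offset. A score near 1 means the shapes match. The chime, noise level, and 0.8 threshold below are illustrative assumptions. Practical detectors usually match spectral features or learned embeddings, which tolerate changes in pitch, speed, and loudness better than raw waveforms.

```python
import math
import random


def make_chime(n=200, fs=16_000):
    """Hypothetical two-tone chime with a decaying envelope, used as the stored template."""
    return [math.exp(-i / 100) * (math.sin(2 * math.pi * 1500 * i / fs)
                                  + 0.5 * math.sin(2 * math.pi * 2300 * i / fs))
            for i in range(n)]


def normalized_xcorr(stream, template):
    """Normalized cross-correlation (-1..1) of the template at every offset in the stream."""
    m = len(template)
    t_mean = sum(template) / m
    t_dev = [x - t_mean for x in template]
    t_norm = math.sqrt(sum(x * x for x in t_dev))
    scores = []
    for start in range(len(stream) - m + 1):
        window = stream[start:start + m]
        w_mean = sum(window) / m
        w_dev = [x - w_mean for x in window]
        w_norm = math.sqrt(sum(x * x for x in w_dev))
        dot = sum(a * b for a, b in zip(w_dev, t_dev))
        scores.append(dot / (w_norm * t_norm) if w_norm and t_norm else 0.0)
    return scores


def find_template(stream, template, threshold=0.8):
    """Return (offset, score) of the best match if it clears the threshold, else None."""
    scores = normalized_xcorr(stream, template)
    best = max(range(len(scores)), key=scores.__getitem__)
    return (best, scores[best]) if scores[best] >= threshold else None


if __name__ == "__main__":
    rng = random.Random(0)
    chime = make_chime()
    stream = [rng.gauss(0.0, 0.05) for _ in range(1000)]   # background hiss
    for i, v in enumerate(chime):
        stream[600 + i] += 0.5 * v                          # quieter chime at offset 600
    print(find_template(stream, chime))
```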

Imagine a robot transforming your spoken words into crystal-clear instructions through sophisticated audio pattern matching. It’s like having a translator that not only understands language but deciphers intent with robotic precision.

Noise Filtering and Environmental Sound Management

When robots enter noisy environments, they can’t just cover their ears like humans do; they need robust audio processing. Sound-source localization becomes their secret weapon, helping them pinpoint where sounds originate.

Machine learning algorithms act like audio bouncers, filtering out background noise and prioritizing human voices with laser-like precision.

Think of noise filtering as a high-tech audio sieve. With the right software behind them, a robot’s microphones can tell a dog barking and a car honking apart from your specific voice command.

These smart systems use complex audio processing techniques to separate signal from chaos, training themselves to understand what matters most.

Imagine a robot that can follow your voice in a crowded subway or bustling factory. That’s not science fiction: it’s an active goal of today’s robotics research, and systems are steadily getting better at it.
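
A classic noise-filtering technique is spectral subtraction, which works in four steps. First, estimate the average noise magnitude in each frequency bin from moments when nobody is speaking. Then subtract that estimate from each new frame, keep the original phase, and transform back. This single-frame Python sketch uses a naive DFT, synthetic white noise, and a 1 kHz tone standing in for speech; all of these are assumptions for illustration.

```python
import cmath
import math
import random


def dft(x):
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) for k in range(n)]


def idft(spectrum):
    """Inverse DFT; the input is conjugate-symmetric here, so only the real part is kept."""
    n = len(spectrum)
    return [(sum(spectrum[k] * cmath.exp(2j * math.pi * k * t / n) for k in range(n)) / n).real
            for t in range(n)]


def estimate_noise_magnitude(noise_frames):
    """Average magnitude per frequency bin over frames that contain only background noise."""
    spectra = [dft(f) for f in noise_frames]
    return [sum(abs(s[k]) for s in spectra) / len(spectra) for k in range(len(spectra[0]))]


def spectral_subtract(frame, noise_mag):
    """Subtract the noise magnitude from each bin (floored at zero) and keep the noisy phase."""
    cleaned = [cmath.rect(max(abs(v) - m, 0.0), cmath.phase(v)) for v, m in zip(dft(frame), noise_mag)]
    return idft(cleaned)


def demo(seed=0, n=128, fs=16_000):
    rng = random.Random(seed)

    def noise():
        return [rng.gauss(0.0, 0.3) for _ in range(n)]

    clean = [math.sin(2 * math.pi * 1000 * t / fs) for t in range(n)]  # 1 kHz sits exactly on bin 8
    noise_mag = estimate_noise_magnitude([noise() for _ in range(8)])  # noise-only "silence"
    noisy = [c + e for c, e in zip(clean, noise())]
    denoised = spectral_subtract(noisy, noise_mag)
    before = sum((a - b) ** 2 for a, b in zip(noisy, clean)) / n
    after = sum((a - b) ** 2 for a, b in zip(denoised, clean)) / n
    return before, after


if __name__ == "__main__":
    before, after = demo()
    print(f"error power before {before:.3f}, after {after:.3f}")
```

Plain spectral subtraction tends to leave a warbling artifact known as musical noise. That is one reason modern systems often use statistical estimators or neural networks instead.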

Multi-Modal Sensory Integration

You’ve probably wondered how robots make sense of their chaotic environments, and the answer lies in their ability to mash up sensory data like a DJ mixing tracks.

Imagine a robot that can not only see a coffee mug but also hear the subtle clink of ceramic, creating a more complete understanding of its surroundings through what tech nerds call “sensory fusion”. Robotic vision systems already combine cameras and other sensors with learning algorithms, and adding audio turns that into genuinely multi-modal perception.

Sound and Vision Synergy

Because humans rely on multiple senses to navigate the world, robots are now learning the same trick with multi-modal sensory integration.

Sound and vision are becoming the dynamic duo of robotic perception, transforming how machines understand their environment. Sensor fusion technologies enable robots to combine data from multiple sources, creating a more comprehensive understanding of their surroundings.

Here’s why this matters:

- Audio cues have reportedly boosted object recognition from 27% to 94%

- Robots can now “hear” what they can’t clearly see

- Sound alone has reportedly let robots classify objects with 76% accuracy

- Multi-modal sensors create more adaptable machines

Imagine a robot in a smoky room: while visual sensors struggle, sound becomes its secret weapon.

By combining microphones and cameras, these machines are getting much better at making sense of complex environments.
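
One simple way to combine those senses is late fusion. Each sensor outputs its own class probabilities, and the robot merges them with a weighted product rule. A sensor it trusts less, such as a camera in smoke, gets a lower weight. The labels, probabilities, and weights below are hypothetical, chosen only to illustrate the smoky-room case.

```python
import math


def fuse(predictions):
    """Weighted product-rule fusion of per-sensor class probabilities.

    predictions: list of (probabilities, weight) pairs, where probabilities maps
    each label to a probability. A weight near 0 means the sensor is mostly ignored.
    """
    labels = list(predictions[0][0])
    # Sum of weighted log-probabilities = log of a weighted product of probabilities
    log_scores = {label: sum(w * math.log(max(p[label], 1e-9)) for p, w in predictions)
                  for label in labels}
    top = max(log_scores.values())
    unnormalized = {label: math.exp(s - top) for label, s in log_scores.items()}
    total = sum(unnormalized.values())
    return {label: v / total for label, v in unnormalized.items()}


if __name__ == "__main__":
    camera = {"mug": 0.40, "bottle": 0.35, "can": 0.25}   # smoke: vision is unsure
    audio = {"mug": 0.70, "bottle": 0.20, "can": 0.10}    # ceramic clink favors "mug"
    fused = fuse([(camera, 0.3), (audio, 1.0)])           # camera down-weighted in smoke
    print({label: round(p, 2) for label, p in fused.items()})
```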

It’s like giving robots a sixth sense—one that doesn’t just see the world, but genuinely understands it.

Who said robots can’t be perceptive?

AI Sensory Fusion Techniques

As robots evolve from clunky machines to intelligent companions, multi-modal sensory integration has become their superpower. Emerging approaches such as neuromorphic computing, which models hardware on the brain’s spiking neurons, aim to process these sensory inputs more efficiently.

Imagine a robot that doesn’t just hear you, but understands context by blending audio data with visual and tactile information. Machine learning algorithms are the secret sauce, helping these mechanical friends learn and adapt faster than ever.

Think of it like a superhuman brain: audio processing meets computer vision, creating robots that can pinpoint sounds, recognize speech, and interpret complex environments.

They’re not just listening; they’re comprehending. By combining microphone inputs with visual cues, these AI companions can distinguish between a whisper and a shout, a bark and a meow.

The result? Robots that don’t just hear—they genuinely understand.

Advanced Neural Network Approaches

When it comes to making robots hear like humans, neural networks are basically the secret sauce. These AI wizards transform audio processing from clunky guesswork into razor-sharp interpretation.

Advanced neural network approaches are revolutionizing how robots understand sound by:

- Analyzing complex audio signals with high precision

- Learning from massive datasets of sound patterns

- Distinguishing nuanced acoustic environments in real-time

- Translating verbal commands into actionable robotic responses

Imagine a robot that doesn’t just hear noise, but comprehends context and intent. By leveraging deep learning techniques, these networks can recognize everything from whispered commands to background environmental sounds.


Convolutional and recurrent neural networks are the brainy algorithms making this possible, continuously pushing the boundaries of machine auditory perception. Who knew robots could develop such impressive listening skills?
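
To show how the two layer types fit together, here is an untrained, dependency-free sketch of a convolutional-recurrent network (CRNN). A 1-D convolution scans each spectrogram frame for local frequency patterns. An Elman-style recurrent layer carries a hidden state from frame to frame. A softmax turns the final state into class probabilities. The layer sizes, random weights, and missing bias terms are simplifications assumed for illustration. Real models are built and trained in frameworks such as PyTorch or TensorFlow.

```python
import math
import random


def conv1d(frame, kernels):
    """Valid 1-D convolution (cross-correlation, as in deep-learning libraries) plus ReLU."""
    out = []
    for kernel in kernels:
        k = len(kernel)
        for start in range(len(frame) - k + 1):
            out.append(max(0.0, sum(w * x for w, x in zip(kernel, frame[start:start + k]))))
    return out


def rnn_step(x, h, w_in, w_rec):
    """Elman RNN update without bias: h_new = tanh(W_in x + W_rec h)."""
    return [math.tanh(sum(a * b for a, b in zip(w_in[i], x)) + sum(a * b for a, b in zip(w_rec[i], h)))
            for i in range(len(h))]


def softmax(z):
    top = max(z)
    e = [math.exp(v - top) for v in z]
    s = sum(e)
    return [v / s for v in e]


def random_matrix(rows, cols, rng, scale=0.1):
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


class TinyCRNN:
    """Untrained convolutional-recurrent classifier; all sizes are illustrative assumptions."""

    def __init__(self, n_freq_bins=16, n_kernels=4, kernel_size=3, hidden=8, n_classes=3, seed=0):
        rng = random.Random(seed)
        self.kernels = random_matrix(n_kernels, kernel_size, rng)
        conv_out = n_kernels * (n_freq_bins - kernel_size + 1)
        self.w_in = random_matrix(hidden, conv_out, rng)
        self.w_rec = random_matrix(hidden, hidden, rng)
        self.w_out = random_matrix(n_classes, hidden, rng)
        self.hidden = hidden

    def forward(self, spectrogram):
        """spectrogram: list of time frames, each a list of n_freq_bins magnitudes."""
        h = [0.0] * self.hidden
        for frame in spectrogram:
            features = conv1d(frame, self.kernels)             # local patterns across frequency
            h = rnn_step(features, h, self.w_in, self.w_rec)   # memory across time
        logits = [sum(w * v for w, v in zip(row, h)) for row in self.w_out]
        return softmax(logits)


if __name__ == "__main__":
    rng = random.Random(1)
    spectrogram = [[rng.random() for _ in range(16)] for _ in range(20)]  # 20 frames x 16 fake bins
    print(TinyCRNN().forward(spectrogram))
```

The hidden state depends on frame order, so feeding the same frames in reverse generally produces different probabilities. That is the temporal sensitivity the recurrent layer adds.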

Real-World Applications and Practical Implementations

If you’ve ever wondered how robots are transforming from sci-fi fantasies into practical problem-solvers, audio technology is where the magic really happens.

Imagine robots using sound like a superpower: they’re not just listening, they’re decoding complex audio data in real-time. Take the HEARBO robot, which can distinguish a doorbell’s chime from kids playing nearby.

Or consider drones with 16-microphone arrays that can pinpoint disaster victims by sound alone. Training robots to hear isn’t just about microphones; it also takes software like HARK, an open-source robot audition toolkit for localizing, separating, and recognizing sounds.

Future Developments in Robotic Auditory Systems

The world of robotic hearing is about to get wild. Imagine robots with superhuman auditory abilities that’ll make your smart speaker look like a stone-age relic. Here’s what’s brewing in the labs:

- Sound-source classification that can pick out whispers in a hurricane

- Multi-sensory robotic systems that hear, feel, and understand context

- AI algorithms decoding complex audio landscapes with surgical precision

- Integrated sensor packages that transform robots into audio-sensing ninjas

Artificial Intelligence (AI) is rapidly changing how robots process auditory cues. Researchers are pushing boundaries, creating systems that don’t just hear sounds but interpret them like sophisticated acoustic detectives.

They’re developing smart algorithms that can distinguish between overlapping noises, track multiple sound sources, and adapt to dynamic environments.

Want a robot that genuinely listens? The future’s knocking, and it sounds incredible.

People Also Ask About Robots

How Do Robots Hear Sound?

Robots hear through microphones that capture sound vibrations. Software then processes the audio with noise-robust algorithms and converts spoken language into text, allowing robots to understand and respond to your commands.

Can AI Understand Sound?

You might think AI’s just guessing, but it’s actually brilliant at understanding sound. By leveraging advanced NLP and machine learning algorithms, AI can interpret complex audio signals, recognize speech patterns, and respond with remarkable accuracy.

How Do Robots See and Hear Things?

Robots see and hear through sensors like cameras and microphones. AI algorithms interpret the visual and audio signals, recognize patterns in the environmental data, and decide how to respond to what the robot detects around it.

How Do Robots and Artificial Intelligence Communicate With Humans?

You’re the conductor, and AI’s your orchestra—listening intently through advanced microphones. It’ll decode your speech using Natural Language Processing, transforming your words into actionable commands with remarkable precision and responsive understanding.

Why This Matters in Robotics

As robots evolve, their ears are becoming smarter than yours. Imagine machines that don’t just hear words, but understand context, emotion, and intent. The future isn’t about perfect listening—it’s about intelligent interpretation. You’ll soon interact with devices that catch nuances humans miss. Will they become better listeners than your closest friends? The line between artificial and natural perception is blurring, and you’re witnessing the revolution, one sound wave at a time.