Robot vision turns machines into systems that can see, interpreting the world like super-smart cameras. You’ll discover how cameras, sensors, and crazy-smart algorithms help robots “see” by transforming visual data into actionable intelligence. Think of it like giving robots eyes that can analyze every pixel, detect objects, and navigate complex environments in milliseconds. Want to peek behind the curtain of how machines are learning to see? Stick around.

How Robotic Vision Works

While robots might seem like something straight out of a sci-fi movie, their ability to “see” is actually a mind-blowing technological dance of sensors, algorithms, and raw computational power.

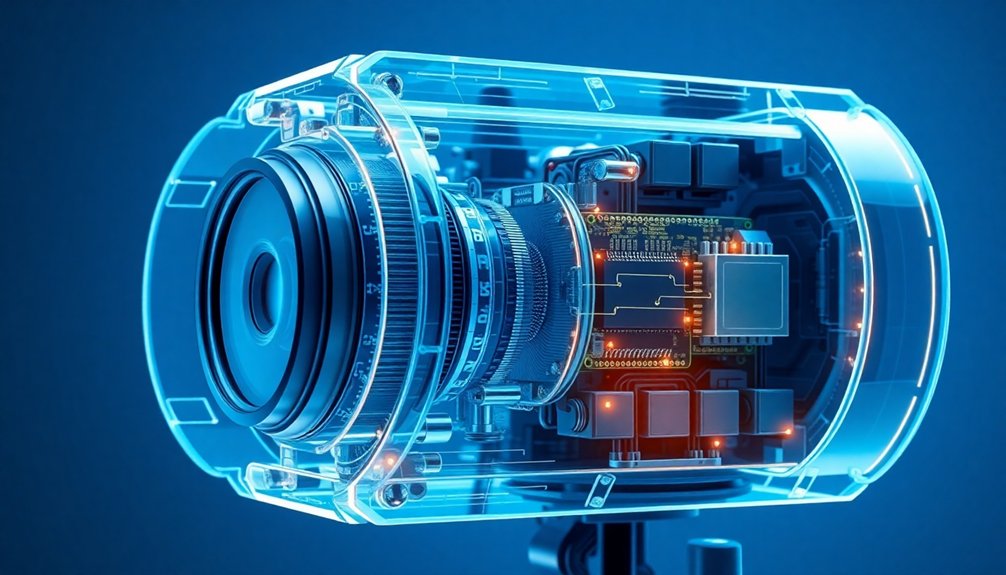

Think of robot vision like a supercharged camera system that doesn’t just snap pictures, but understands what it’s looking at. These mechanical eyes start by grabbing images through cameras or range data through LiDAR sensors, then run that raw data through a cleanup stage. Depth perception lets robots tell near objects from far ones, adding critical spatial awareness to their visual processing.

Robot vision: a smart lens that captures and comprehends, turning raw sensor data into intelligent environmental understanding.

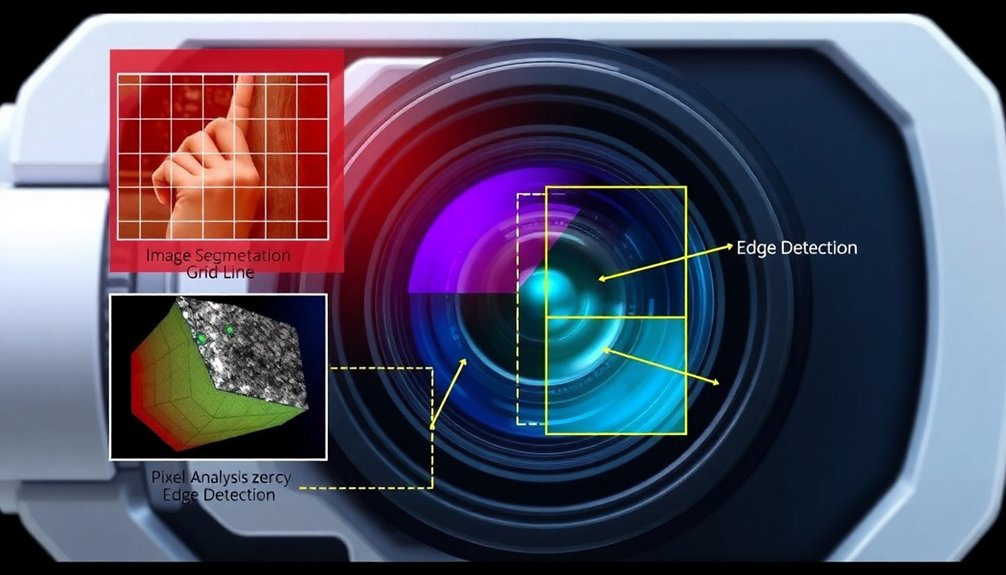

Preprocessing removes noise, sharpens details, and normalizes information. Next, feature extraction kicks in—hunting for edges, shapes, and key details that help the robot make sense of its world. Advanced image processing algorithms transform vision systems into sophisticated tools that compare manufactured products against precise reference images, enabling comprehensive quality control.
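To make the reference-image comparison concrete, here is a minimal Python sketch. It assumes grayscale images stored as nested lists of 0 to 255 values. The function names and the tolerance of 10 gray levels are illustrative assumptions, not taken from any particular vision product.

```python
def mean_abs_diff(image, reference):
    """Average per-pixel absolute difference between two same-sized grayscale images."""
    if len(image) != len(reference) or any(
        len(a) != len(b) for a, b in zip(image, reference)
    ):
        raise ValueError("images must have the same shape")
    total = sum(
        abs(p - r)
        for img_row, ref_row in zip(image, reference)
        for p, r in zip(img_row, ref_row)
    )
    count = sum(len(row) for row in reference)
    return total / count


def passes_inspection(image, reference, tolerance=10.0):
    """Hypothetical pass/fail rule: accept the part if it stays close to the reference."""
    return mean_abs_diff(image, reference) <= tolerance


# Tiny 3x3 example images (made-up values for illustration).
reference = [[0, 0, 255], [0, 255, 255], [255, 255, 255]]
good_part = [[2, 1, 250], [0, 252, 255], [254, 255, 251]]
bad_part = [[2, 1, 250], [0, 20, 255], [254, 255, 251]]  # dark blob in the centre

print(passes_inspection(good_part, reference))
print(passes_inspection(bad_part, reference))
```

Real inspection systems usually align the image to the reference first and compare local regions rather than a single global average, so treat this as the simplest possible version of the idea.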

Pattern recognition then takes over, transforming visual data into actionable insights. It’s like giving robots a brain to interpret their visual inputs, turning cold mechanical perception into intelligent environmental understanding.

Essential Components of Vision Systems

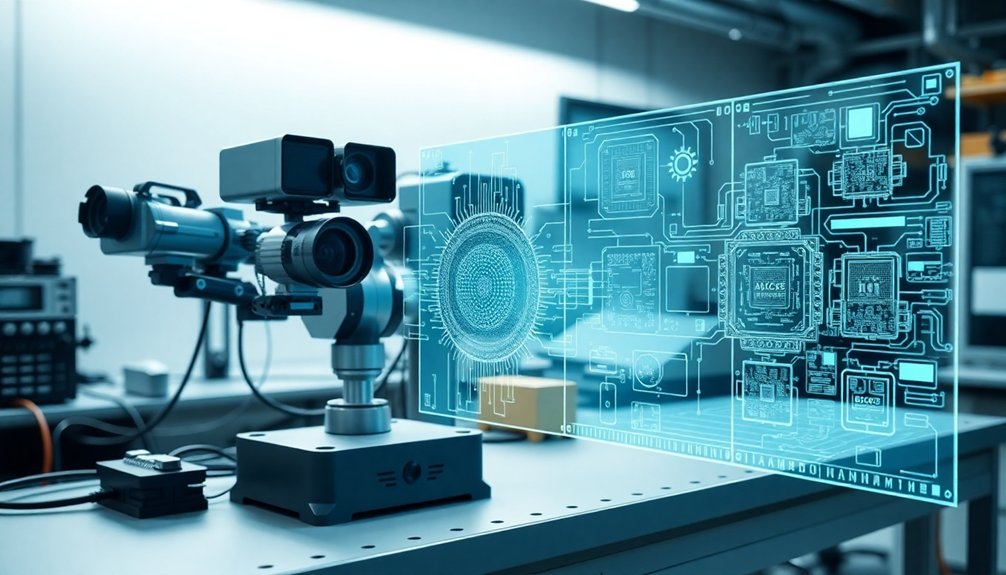

Robot vision isn’t just some magical black box—it’s a symphony of carefully engineered components working together like a precision Swiss watch. Each component plays a critical role in transforming raw visual data into actionable insights. The industrial automation landscape relies on these intricate systems to perform complex tasks with unprecedented accuracy. Understanding optoelectronic sensor principles empowers engineers to design vision systems that capture nuanced visual information with extraordinary precision. Stereo camera technology enables these systems to replicate human-like depth perception, creating a more sophisticated understanding of visual environments.

- Lighting Systems: The unsung heroes that illuminate objects, making hidden details pop like a spotlight on a secret agent.

- Optical Components: Lenses that capture images with surgical precision, turning light into digital gold.

- Image Sensors: Pixel-powered translators converting visual signals into computer-readable language.

- Vision Processing Systems: Algorithmic brains that analyze images faster than you can blink.

Want to know how robots actually “see”? It’s a complex dance of hardware and software, transforming light into intelligence with mind-bending speed and accuracy.

Camera Technologies for Robotics

You’ve heard cameras are just image-catchers, but in robotics, they’re way more complex; think of them as the robot’s eyeballs on steroids. Case studies in studio robotics show robotic camera systems growing increasingly sophisticated at adapting to dynamic production environments. Multi-spectral imaging, combined with depth sensing, now lets robots analyze not just visible-light images but also depth, color, and contextual information.

Different camera sensor types aren’t just about megapixels; they’re about how precisely a robot can interpret its world, from microscopic circuit boards to vast manufacturing floors. Depth perception technologies like stereo and 3D vision cameras enable robots to create comprehensive spatial maps of their environment, transforming visual input into actionable spatial intelligence.

When you’re selecting camera tech for a robot, you’ll need to understand resolution, performance characteristics, and lens selection—because one wrong choice could mean the difference between a robot that sees and a robot that stumbles.

Camera Sensor Types

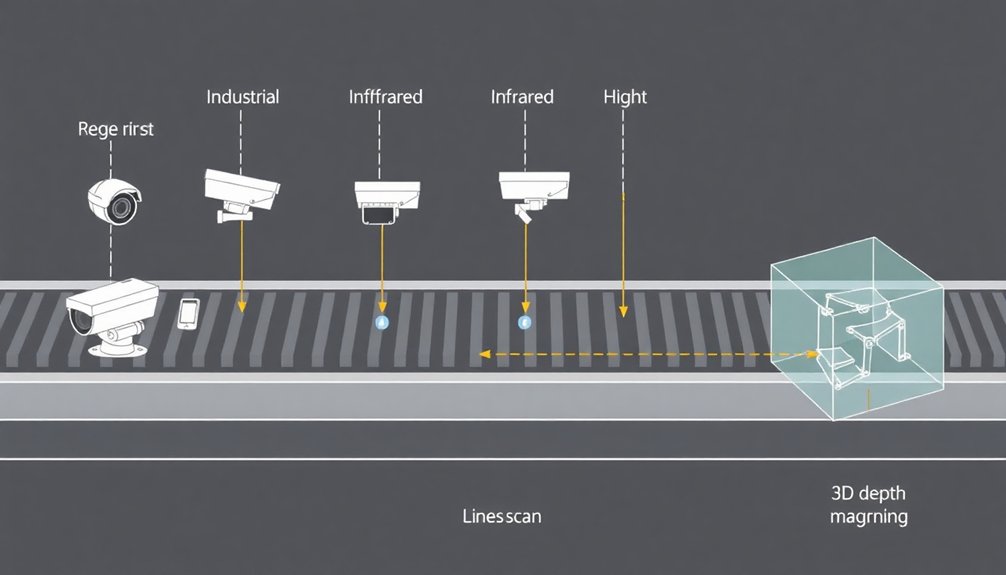

In the world of robot vision, not all cameras are created equal. Your robot’s visual perception depends on picking the right sensor type:

- CCD sensors: Old-school high-quality image capture, perfect for precision tasks

- CMOS sensors: Modern, energy-efficient, with integrated processing magic

- Infrared cameras: Seeing beyond visible light’s limitations

- 3D sensors: Depth perception that transforms robotic spatial awareness

Think of camera sensors like the eyes of your mechanical friend. CCD sensors deliver crisp images but gulp power like a thirsty robot. CMOS sensors are the lean, mean, seeing machines that can process images on-the-fly.

Want to navigate through smoke or darkness? Thermal infrared cameras become your robot’s secret weapon, often teamed with non-optical helpers like ultrasonic range sensors. Each sensor type isn’t just about capturing images; it’s about understanding the environment in ways human eyes can’t. Line scan cameras provide precise image capture by scanning one line at a time, enabling detailed inspection of continuous materials in manufacturing.

The right sensor turns a blind machine into a perceptive, adaptable companion. AI-enabled vision systems are revolutionizing how robots interpret and interact with complex visual environments, offering unprecedented levels of intelligent perception.

Resolution and Performance

Five milliseconds. That’s how fast some robot vision systems can now detect and classify objects, with near-perfect accuracy in controlled settings. Ever wondered how machines see the world? It’s not magic; it’s precision engineering. Pixel resolution directly impacts a robot’s ability to detect minute details and classify objects accurately.

Your robot’s camera isn’t just capturing images; it’s measuring every pixel like a microscopic ruler. Resolution isn’t just about megapixels—it’s about detecting the tiniest details with crazy accuracy. Adaptive machine learning enables vision systems to continuously improve object detection capabilities by processing complex sensor data and refining perception algorithms. Advanced vision systems can dramatically improve object detection through strategic calibration techniques, ensuring consistent performance across diverse manufacturing environments.

But here’s the catch: not all robot eyes are created equal. Some systems nail 99.9% object detection in controlled environments, while others struggle with low contrast or tricky lighting.

You’ll want a system that matches your specific needs—whether it’s factory inspection or traversing complex terrain. The right resolution means the difference between a robot that works and one that just looks cool.
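A quick way to reason about resolution is to work out how many millimetres each pixel covers. The sketch below is a back-of-the-envelope calculation. The three-pixel rule of thumb and all the numbers are assumptions for illustration, and real requirements depend on contrast, optics, and the detection algorithm.

```python
def mm_per_pixel(field_of_view_mm, pixels_across):
    """Spatial resolution: how much of the scene a single pixel covers."""
    return field_of_view_mm / pixels_across


def smallest_reliable_feature_mm(field_of_view_mm, pixels_across, min_pixels_on_feature=3):
    """Assumed rule of thumb: a feature should span a few pixels to be detected reliably."""
    return mm_per_pixel(field_of_view_mm, pixels_across) * min_pixels_on_feature


# Hypothetical setup: a 200 mm wide field of view imaged onto 2000 pixels.
print(mm_per_pixel(200, 2000))
print(smallest_reliable_feature_mm(200, 2000))
```

With those assumed numbers each pixel covers about 0.1 mm, so a defect would need to be roughly 0.3 mm wide to span three pixels.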

Lens Selection Basics

Picking the right camera lens for a robot isn’t rocket science—it’s more like matchmaking for machines. Your lens choice can make or break a robot’s visual performance, so pay attention:

- Sensor Size Matters: Match your lens to your sensor like a perfect puzzle piece.

- Focal Length is King: Control your robot’s visual reach with the right lens length.

- Working Distance Counts: Know how far your robot needs to “see.”

- Light is Everything: Aperture controls how much visual information floods in.

Think of a lens as your robot’s eyeball. It’s not just about capturing images—it’s about capturing the right images.

A mismatched lens is like giving your robot prescription glasses from the wrong eye doctor. Precision matters. The wrong lens can turn your high-tech vision system into an expensive paperweight.

Choose wisely, and your robot will thank you with crystal-clear perception.
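The sensor size, working distance, and field of view from the list above are tied together by a simple approximation. Here is a sketch using the thin-lens shortcut, which assumes the working distance is much larger than the focal length. The function name and the example numbers are illustrative assumptions.

```python
def focal_length_mm(sensor_width_mm, working_distance_mm, field_of_view_mm):
    """Approximate focal length needed to fit the field of view onto the sensor.

    Uses f ~ sensor_width * working_distance / field_of_view, which holds
    when the working distance is much larger than the focal length.
    """
    if field_of_view_mm <= 0:
        raise ValueError("field of view must be positive")
    return sensor_width_mm * working_distance_mm / field_of_view_mm


# Hypothetical setup: 6.4 mm wide sensor, part 500 mm away, 200 mm wide view needed.
print(focal_length_mm(6.4, 500, 200))
```

In this assumed setup the answer lands near 16 mm. In practice you would pick the closest stock focal length and then adjust the working distance to fine-tune the view.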

Understanding Image Capture Methods

When robots need to see the world, they rely on a dizzying array of image capture methods that would make your smartphone camera look like a stone-age painting tool.

From webcams grabbing single frames to depth cameras measuring distances, these visual systems are way more than just point-and-shoot.

Imagine stereo vision mimicking human eyes, with two cameras capturing slightly different perspectives to create 3D spatial awareness.
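For a calibrated, rectified stereo pair, depth follows from the pixel shift (disparity) between the two views. The sketch below applies the standard relation Z = f * B / d. The function name and the example camera values are assumptions for illustration.

```python
def depth_from_disparity(focal_length_px, baseline_m, disparity_px):
    """Depth of a point seen by a rectified stereo pair.

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centres, in metres
    disparity_px:    horizontal shift of the point between left and right images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero means the point is at infinity)")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, cameras 12 cm apart, 42 px disparity.
print(depth_from_disparity(700, 0.12, 42))
```

Because depth is inversely proportional to disparity, far objects produce tiny disparities, which is why stereo accuracy drops off with distance.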

Or think about structured light tricks that project patterns onto surfaces to map out depth like some kind of robotic X-ray vision.

Time-of-Flight sensors shoot light pulses and measure their return trip, essentially giving robots superhuman distance perception.
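The arithmetic behind a pulsed Time-of-Flight measurement is short enough to sketch. The light travels to the object and back, so the distance is half the round trip. The function name and the 20 ns example are assumptions for illustration.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by definition of the metre


def tof_distance_m(round_trip_time_s):
    """Distance to the target given the measured round-trip time of a light pulse."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2


# A hypothetical 20 nanosecond round trip corresponds to roughly 3 metres.
print(tof_distance_m(20e-9))
```

The tiny time scale is the engineering challenge here: a timing error of one nanosecond already shifts the result by about 15 cm.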

Want precision?

Machine learning algorithms will analyze those captured images faster than you can blink, identifying objects and plotting navigation routes with scary accuracy.

Optical Sensors and Their Functions

The mechanical eyeballs of robotics—optical sensors—are the unsung heroes transforming machines from blind metal creatures into precision-seeing digital hunters.

Imagine these sensors as your robot’s Swiss Army knife of sight:

- Thermal vision that spots heat signatures like a sci-fi superhero

- Depth perception that maps environments faster than you can blink

- Color recognition that distinguishes materials with laser-like precision

- Proximity alerts that prevent collision catastrophes

These tiny technological marvels do more than just look around—they interpret complex visual landscapes in milliseconds.

From manufacturing floors to surgical theaters, optical sensors turn raw data into actionable intelligence.

They’re the difference between a robot bumbling through tasks and one that navigates with surgical accuracy.

Want a machine that genuinely sees? Optical sensors are your ticket to the future.

Image Processing Fundamentals

From robotic optical sensors that scan environments, we now zoom into the digital brains behind machine vision: image processing. It’s where raw visual data transforms into meaningful insights robots can actually understand. Deep learning algorithms enable robots to progressively improve their visual understanding through continuous exposure to diverse images.

| Operation | Purpose | Complexity |
|---|---|---|
| Filtering | Noise Reduction | Low |
| Segmentation | Object Identification | Medium |
| Feature Extraction | Pattern Recognition | High |
| Color Transformation | Enhanced Analysis | Medium |

Think of image processing like teaching a robot to “see” — not just collect pixels, but interpret them. It’s about breaking down visual information into digestible chunks: detecting edges, recognizing shapes, and understanding spatial relationships. Algorithms convert chaotic visual noise into structured data. Imagine turning a blurry snapshot into a precise map of objects, textures, and potential interactions. The magic happens through mathematical transformations that simplify complex visual information, making robot perception possible. Who knew mathematics could give machines eyes?
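Two of the operations in the table, color transformation and segmentation, can be sketched in a few lines of plain Python. This example assumes an image stored as nested lists of (R, G, B) tuples, uses the widely used BT.601 luma weights for the grayscale conversion, and picks an arbitrary threshold of 128. The function names are illustrative.

```python
def to_grayscale(rgb_image):
    """Color transformation: collapse RGB to one brightness value per pixel (BT.601 weights)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
        for row in rgb_image
    ]


def threshold_mask(gray_image, threshold=128):
    """Simplest possible segmentation: 1 where the pixel is bright, 0 where it is dark."""
    return [[1 if v >= threshold else 0 for v in row] for row in gray_image]


# 2x2 example image: red, green, blue, and white pixels.
image = [
    [(255, 0, 0), (0, 255, 0)],
    [(0, 0, 255), (255, 255, 255)],
]
gray = to_grayscale(image)
mask = threshold_mask(gray)
print(gray)
print(mask)
```

Production systems lean on libraries such as OpenCV for this, and use adaptive thresholds or learned models when lighting varies, but the underlying transformation is the same kind of per-pixel math.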

Lighting Techniques for Clear Vision

You’ll want to nail lighting for robot vision like a pro photographer, but with way cooler tech.

Diffuse light distribution helps spread illumination evenly across surfaces, ensuring your robot can see every microscopic detail without harsh shadows or blind spots.

Diffuse Light Distribution

Imagine robot vision systems struggling to see clearly in harsh industrial environments—that’s where diffuse light distribution swoops in like a lighting superhero.

Why does this matter? Check out these lighting superpowers:

- Eliminates nasty shadows and glare that make machines squint

- Provides uniform illumination across complex surfaces

- Works magic on shiny, curved objects that typically confuse cameras

- Transforms tricky inspection tasks into crystal-clear visual experiences

Dome and flat diffuse lights aren’t just fancy accessories—they’re vision system game-changers. By flooding an object with soft, multi-angle light, these technologies solve visibility challenges that would make traditional cameras throw a digital tantrum.

Whether you’re inspecting tiny circuit boards or massive automotive parts, diffuse lighting guarantees your robot sees everything with surgical precision. No more guessing, no more missed defects—just pure, uninterrupted visual clarity.

Direct Surface Illumination

When robot vision systems need razor-sharp image clarity, direct surface illumination steps up like a lighting ninja ready to slice through visual noise.

It’s basically the superhero of machine vision, blasting light straight onto surfaces to reveal every microscopic detail. Want to catch that tiny scratch on a circuit board? This technique’s got your back.

By positioning lights strategically, often perpendicular to the surface, it exposes imperfections that would normally hide in shadows.

Think of it like forensic lighting for machines: high-contrast, no-nonsense illumination that turns vague shapes into precise data points.

Perfect for quality control, robotic assembly, and making sure every manufactured part meets specs. Who knew light could be such a precise detective?

Backlighting Object Details

Three key ingredients make backlighting a secret weapon in robot vision: pure contrast, precise silhouettes, and zero-nonsense detection. This lighting technique turns your robot’s vision into a superhero with X-ray-like powers:

- Instant object detection by creating stark black-and-white outlines

- Measuring external dimensions with surgical precision

- Identifying object presence or absence faster than you can blink

- Revealing internal structures of translucent objects through differential light penetration

Want to know the coolest part? Backlighting doesn’t care about surface details—it’s all about the big picture.

By positioning lights behind objects and capturing their silhouettes, you’re essentially giving your robot vision system a truth serum. It strips away complexity, leaving only pure, unadulterated shape and dimension.

Who needs fancy camera tricks when you can see right through things—literally?
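Here is a minimal sketch of how a backlit silhouette turns into a measurement. It assumes a single image row where the bright backlight reads near 255 and the object blocks the light. The threshold of 128, the 0.5 mm-per-pixel scale, and the function names are all assumptions for illustration.

```python
def silhouette_width_px(row, threshold=128):
    """Width of the dark silhouette in one image row, measured edge to edge in pixels."""
    dark = [i for i, value in enumerate(row) if value < threshold]
    if not dark:
        return 0  # nothing blocks the backlight in this row
    return dark[-1] - dark[0] + 1


def object_present(row, threshold=128):
    """Presence check: any dark pixel means something is in front of the backlight."""
    return silhouette_width_px(row, threshold) > 0


# One row of a backlit image: bright background, dark object in the middle.
row = [250, 248, 30, 25, 20, 28, 245, 251]
width_px = silhouette_width_px(row)
width_mm = width_px * 0.5  # assumed calibration: 0.5 mm per pixel
print(width_px, width_mm, object_present(row))
```

The high contrast is what makes this work with such a crude threshold. With front lighting, the same measurement would need far more careful edge detection.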

Interpreting Visual Data

How do robots actually “see” the world around them? It’s like giving machines a brain and eyeballs, but way cooler. They process visual data through complex algorithms that break down images into bite-sized information.

First, cameras and sensors grab raw visual inputs, then sophisticated software goes to work – filtering noise, detecting edges, and segmenting scenes faster than you can blink. Deep learning models recognize objects with crazy accuracy, turning pixels into meaningful insights.

Think of it like training a superhuman detective who never gets tired. The magic happens when these systems can identify a chair, measure its distance, understand its context, and decide what to do next – all in milliseconds.

Fundamentally, robot vision transforms meaningless visual data into actionable intelligence that helps machines navigate and interact with the world.

Software Algorithms in Robot Vision

Because robots don’t have eyeballs like we do, they rely on mind-blowing software algorithms to transform raw visual data into meaningful insights.

These digital vision systems turn pixels into powerful perceptions through clever computational tricks:

- Filtering out the noise: Gaussian and median filters scrub visual static, making images crystal clear.

- Finding boundaries: Edge detection algorithms trace object outlines like a robotic forensics expert.

- Learning from examples: Convolutional Neural Networks gobble up image datasets and recognize complex patterns faster than you can blink.

- Understanding context: Semantic segmentation labels every pixel, giving robots a detailed scene map.

Think of these algorithms as the robot’s brain-eyes—translating visual chaos into actionable intelligence.

They’re not just seeing; they’re interpreting, analyzing, and making split-second decisions that would make human perception look like a slow-motion replay.
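The first two bullets above, noise filtering and edge detection, can be sketched without any libraries. This example runs a 3x3 median filter and a horizontal Sobel kernel over a small grayscale image stored as nested lists. The function names and the test image are illustrative, and a real system would use an optimized library such as OpenCV instead.

```python
from statistics import median


def median_filter_3x3(img):
    """Replace each interior pixel with the median of its 3x3 neighbourhood; borders are copied."""
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            window = [img[y + dy][x + dx] for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = median(window)
    return out


SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]


def sobel_x(img):
    """Horizontal Sobel response for interior pixels; border values are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            out[y][x] = sum(
                SOBEL_X[dy + 1][dx + 1] * img[y + dy][x + dx]
                for dy in (-1, 0, 1)
                for dx in (-1, 0, 1)
            )
    return out


# Dark region on the left, bright region on the right, plus one noisy pixel.
noisy = [[0, 0, 100, 100, 100] for _ in range(5)]
noisy[2][1] = 255  # salt noise inside the dark region

cleaned = median_filter_3x3(noisy)
edges = sobel_x(cleaned)
print(cleaned[2])
print(edges[2])
```

After filtering, the noisy pixel is gone and the Sobel response is large only around the boundary between the dark and bright regions, which is exactly the outline an edge detector is supposed to trace.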

Integration With Robotic Control Systems

You’ve probably wondered how robots actually “see” and make sense of their world—and it’s all about killer communication protocols and lightning-fast vision processing.

When cameras and sensors start chatting with robotic control systems through high-speed networks, they’re basically creating a real-time nervous system that lets machines react faster than you can blink.

Think of it like giving your robot buddy a supercharged set of eyes that can instantly translate visual data into precise movements, turning what used to be sci-fi fantasy into today’s industrial reality. Sensor fusion technologies enable robots to integrate multiple data streams, creating a comprehensive understanding of their environment that goes beyond traditional visual perception.
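One simple way to fuse two sensors that measure the same quantity is inverse-variance weighting, where the more trustworthy reading gets the larger weight. The sketch below is only one of many fusion techniques, and it assumes independent measurements with known variances. The sensor values are made up for illustration.

```python
def fuse_estimates(value_a, variance_a, value_b, variance_b):
    """Inverse-variance weighted fusion of two independent measurements.

    Returns the fused value and its variance, which is never larger than
    the smaller of the two input variances.
    """
    if variance_a <= 0 or variance_b <= 0:
        raise ValueError("variances must be positive")
    weight_a = 1.0 / variance_a
    weight_b = 1.0 / variance_b
    fused_value = (weight_a * value_a + weight_b * value_b) / (weight_a + weight_b)
    fused_variance = 1.0 / (weight_a + weight_b)
    return fused_value, fused_variance


# Hypothetical readings of the same obstacle distance, in metres:
# a stereo camera (noisier) and a LiDAR (more precise).
distance, variance = fuse_estimates(2.0, 0.04, 2.1, 0.01)
print(distance, variance)
```

The fused distance sits closer to the LiDAR reading because its variance is smaller, and the combined variance is lower than either sensor achieves alone.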

Data Communication Protocols

When robots start talking to each other, they need a common language—and that’s where data communication protocols come into play.

These digital translators keep robot vision systems humming smoothly across industrial landscapes. Here’s why they matter:

- Protocols define how machines exchange information, from sensor data to precise movement commands.

- Different communication methods (Ethernet, serial, wireless) serve unique robotic needs.

- Real-time performance depends on choosing the right protocol.

- Compatibility determines whether your robot vision system works or becomes an expensive paperweight.

EtherNet/IP and EtherCAT reign supreme, enabling lightning-fast data transfers between vision systems and robot controllers.

Think of them as the universal translators of the automation world—helping machines chat seamlessly, avoiding miscommunication that could turn a precision task into a mechanical comedy of errors.
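Whatever protocol carries the data, both sides have to agree on the exact layout of each message. The sketch below packs a detection result into a fixed binary layout with Python's `struct` module. The message fields and layout are entirely hypothetical, and this is not the EtherNet/IP or EtherCAT wire format; it only illustrates the idea of an agreed-upon structure.

```python
import struct

# Hypothetical layout, network byte order, no padding:
# uint32 part id, three float32 values (x mm, y mm, angle deg), uint8 pass flag.
DETECTION_FORMAT = "!IfffB"


def pack_detection(part_id, x_mm, y_mm, angle_deg, passed):
    """Serialize one detection result into bytes for the robot controller."""
    return struct.pack(DETECTION_FORMAT, part_id, x_mm, y_mm, angle_deg, 1 if passed else 0)


def unpack_detection(payload):
    """Decode the bytes back into a dictionary on the receiving side."""
    part_id, x_mm, y_mm, angle_deg, flag = struct.unpack(DETECTION_FORMAT, payload)
    return {
        "part_id": part_id,
        "x_mm": x_mm,
        "y_mm": y_mm,
        "angle_deg": angle_deg,
        "passed": bool(flag),
    }


payload = pack_detection(42, 12.5, -3.25, 90.0, True)
print(len(payload), unpack_detection(payload))
```

A fixed-size message like this keeps parsing fast and predictable, which matters when the controller has to react within a single control cycle.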

Real-Time Vision Processing

Data communication protocols set the stage, but real-time vision processing is where robots genuinely come alive.

Imagine a robot that doesn’t just blindly follow instructions, but actually sees and understands its environment. These systems transform robotic perception from rigid programming to dynamic interaction.

High-speed cameras and sensors capture split-second details, converting physical world information into actionable data faster than you can blink. Visual servoing means robots can now adjust movements on the fly, without relying on pre-programmed coordinates.

They’re not just machines anymore; they’re adaptive entities that recognize objects, detect human presence, and respond with uncanny precision.

Think of it as giving robots a brain and eyes that work in perfect sync – no more mindless automation, but intelligent, context-aware machines ready to revolutionize how we interact with technology.
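The visual servoing idea mentioned above can be reduced to a toy control loop: measure where a feature appears in the image, compare it with where it should be, and move a fraction of the way there each cycle. This sketch assumes the robot motion shifts the feature in the image one-to-one, whereas a real system maps image error to joint or Cartesian velocities through a calibrated image Jacobian. The gain, tolerance, and pixel coordinates are illustrative.

```python
def servo_step(feature_px, target_px, gain=0.5):
    """Proportional control law: correction is a fixed fraction of the pixel error."""
    error_x = target_px[0] - feature_px[0]
    error_y = target_px[1] - feature_px[1]
    return gain * error_x, gain * error_y


def run_servo(feature_px, target_px, gain=0.5, tolerance_px=1.0, max_steps=50):
    """Iterate until the feature is within tolerance of the target (or steps run out)."""
    steps = 0
    while steps < max_steps:
        error_x = target_px[0] - feature_px[0]
        error_y = target_px[1] - feature_px[1]
        if (error_x ** 2 + error_y ** 2) ** 0.5 <= tolerance_px:
            break
        dx, dy = servo_step(feature_px, target_px, gain)
        # Assumption: the commanded motion moves the feature by exactly (dx, dy) pixels.
        feature_px = (feature_px[0] + dx, feature_px[1] + dy)
        steps += 1
    return feature_px, steps


# Feature starts in the upper-left area; the goal is the centre of a 640x480 image.
final_px, steps = run_servo((100.0, 40.0), (320.0, 240.0))
print(final_px, steps)
```

With a gain of 0.5 the error halves on every cycle, so the feature converges smoothly without needing any pre-programmed coordinates. A higher gain converges faster but risks overshoot once real-world delays enter the loop.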

Emerging Trends in Machine Vision Technology

As machine vision technology races forward like a sports car with no speed limit, the landscape of AI-driven visual perception is transforming faster than most industries can blink.

Ready to peek into the future? Check out these mind-blowing trends:

- Edge computing will bring real-time vision processing directly to your devices

- 3D reconstruction algorithms will create digital twins of physical spaces

- Specialized sensors will see beyond human visual limitations

- AI will generate synthetic training datasets, supercharging machine learning

Imagine robots that can perceive depth, analyze complex environments, and make split-second decisions without breaking a sweat.

We’re not just talking incremental improvements—this is a full-scale revolution in how machines understand visual information.

The future isn’t just coming; it’s already rewiring our technological landscape, one pixel at a time.

People Also Ask About Robots

How Much Does a Basic Robotic Vision System Typically Cost for Small Businesses?

You’ll find entry-level robotic vision systems cost between $1,000 and $3,000, perfect for small businesses seeking basic quality checks, barcode reading, and simple measurements without breaking the bank.

Can Robot Vision Systems Work Effectively in Low-Light or Challenging Environments?

Yes. Robot vision systems can work effectively in low light and other challenging environments by using advanced sensors, specialized cameras, and technologies that enhance image quality in dim conditions.

What Programming Skills Are Needed to Develop or Maintain Vision Systems?

You’ll need Python for versatility, C++ for performance, and OpenCV for vision processing. Master machine learning techniques, understand neural networks, and develop strong skills in ROS to effectively create and maintain robust robot vision systems.

How Accurate Are Robot Vision Systems Compared to Human Visual Perception?

Like a hawk spotting prey, robot vision systems can be incredibly precise, often achieving near-perfect accuracy in structured tasks. You’ll find they outperform humans in speed, consistency, and detecting minute defects across various industrial applications.

Do Different Robot Vision Systems Require Specialized Training for Operators?

Yes, you’ll need specialized training for different robot vision systems. They’ve got unique hardware, software, and applications. Certification programs and manufacturer-specific courses will help you master the specific skills and knowledge required for each system.

Why This Matters in Robotics

Robot vision isn’t just sci-fi anymore—it’s happening now. By 2025, the machine vision market will hit $14.4 billion, proving these smart eyes aren’t just a passing trend. You’re witnessing a technological revolution where machines can literally see and understand their environment. From manufacturing to healthcare, robot vision is transforming how we interact with technology. Want to stay ahead? Keep learning, stay curious, and embrace the visual intelligence revolution.
