Robots spot obstacles like high-tech ninjas using LiDAR, cameras, and ultrasonic sensors that map environments in milliseconds. They’re basically smart machines with superhuman reflexes, blasting laser pulses and processing depth data to dodge threats faster than you can blink. AI algorithms transform sensor inputs into lightning-quick navigation decisions, turning potential collisions into smooth, calculated moves. Want to know how these mechanical marvels pull off their dance with danger?

Understanding Obstacle Detection Technology

Because robots aren’t exactly known for their ninja-like reflexes, they need some seriously smart tech to navigate without constantly crashing into things.

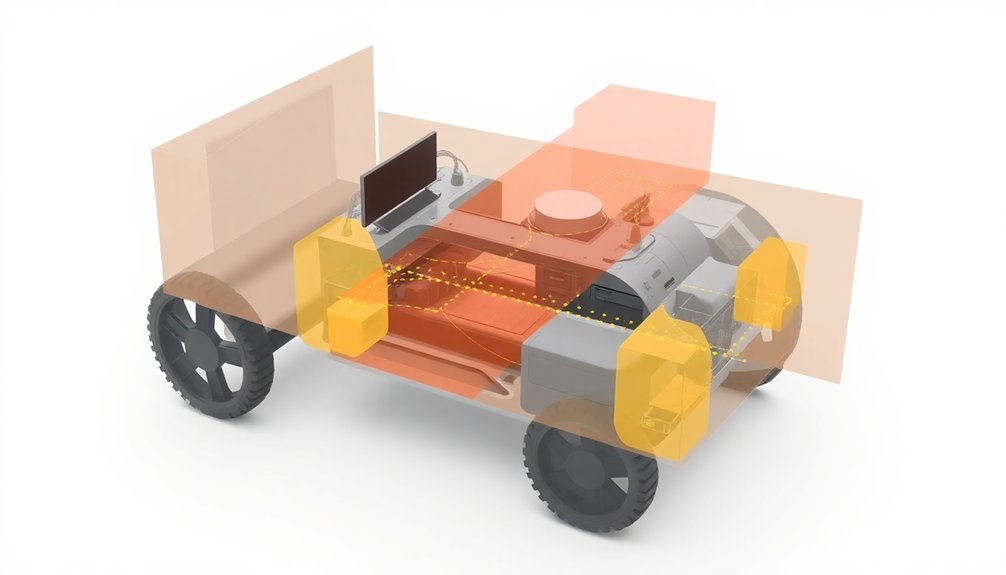

LiDAR, ultrasonic sensors, and camera systems are their secret weapons for real-time obstacle detection. LiDAR, for instance, builds 3D environmental maps by firing laser pulses and timing how long each one takes to bounce back.
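The laser-pulse timing described above boils down to one line of arithmetic: distance is the speed of light times the round-trip time, divided by two. Here is a minimal sketch of that idea. The function names `tof_distance_m` and `polar_to_xy` are made up for illustration, and the code assumes a single clean return per pulse, which real LiDAR units don't always get.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # speed of light in vacuum


def tof_distance_m(round_trip_s: float) -> float:
    """Convert a laser pulse's round-trip time into a one-way distance."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The pulse travels out and back, so halve the total path length.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def polar_to_xy(range_m: float, bearing_rad: float) -> tuple[float, float]:
    """Place one range reading on a 2D map, with the sensor at the origin."""
    return (range_m * math.cos(bearing_rad), range_m * math.sin(bearing_rad))


# A return that arrives after about 66.7 nanoseconds is roughly 10 m away.
distance = tof_distance_m(66.7e-9)
point = polar_to_xy(distance, math.radians(90))
```

Sweep the bearing across a full rotation, repeat for every pulse, and those points start to look like a map.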

These sensors work together through advanced sensor fusion, combining data from multiple sources to boost accuracy. Think of it like having multiple eyes scanning the environment simultaneously.
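One simple way to combine two sensors looking at the same obstacle is inverse-variance weighting: trust each reading in proportion to how precise it is. This is only one of many fusion techniques and not necessarily what any particular robot uses. The function name `fuse_ranges` and the sample readings below are assumptions for illustration.

```python
def fuse_ranges(readings):
    """Fuse (range_m, variance_m2) pairs by inverse-variance weighting."""
    if not readings:
        raise ValueError("need at least one reading")
    # A smaller variance means a more trustworthy sensor and a larger weight.
    weights = [1.0 / variance for _, variance in readings]
    total_weight = sum(weights)
    fused_range = sum(w * r for w, (r, _) in zip(weights, readings)) / total_weight
    # The fused estimate is never less certain than the best single sensor.
    fused_variance = 1.0 / total_weight
    return fused_range, fused_variance


# Hypothetical readings of the same wall: LiDAR (precise), ultrasonic (noisier).
lidar = (2.00, 0.01)
ultrasonic = (2.20, 0.04)
fused_range, fused_variance = fuse_ranges([lidar, ultrasonic])
```

The fused range lands closer to the LiDAR reading because LiDAR was given the smaller variance, and the combined variance is lower than either input.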


Want depth perception? Stereo and depth cameras process visual information, identifying potential hazards faster than you can blink. Ultrasonic sensors provide close-range detection, while sophisticated algorithms transform raw sensor data into intelligent obstacle avoidance strategies.
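Both ideas in that paragraph rest on short formulas. A stereo camera gets depth from how far a feature shifts between the left and right images (the disparity), and an ultrasonic sensor gets range from echo timing. The sketch below assumes an idealized, rectified stereo pair and room-temperature air. The function names and example numbers are illustrative, not taken from any specific sensor.

```python
SPEED_OF_SOUND_M_PER_S = 343.0  # dry air at about 20 degrees Celsius


def stereo_depth_m(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth from stereo disparity: Z = f * B / d for a rectified camera pair."""
    if disparity_px <= 0:
        # Zero disparity means the point is effectively at infinity.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


def ultrasonic_distance_m(echo_time_s: float) -> float:
    """Range from an ultrasonic echo; the sound travels out and back."""
    return SPEED_OF_SOUND_M_PER_S * echo_time_s / 2.0


# Hypothetical camera: 700 px focal length, lenses 10 cm apart.
far_depth = stereo_depth_m(disparity_px=35, focal_length_px=700, baseline_m=0.10)
near_range = ultrasonic_distance_m(echo_time_s=0.01)
```

Depth is inversely proportional to disparity, so stereo precision falls off with distance. That is one reason close-range ultrasonic sensors make a handy complement.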

Machine learning algorithms continuously improve robots’ ability to adapt and navigate complex environments with increasing precision.

Who said robots can’t be smart navigators?

Sensor Systems for Environmental Mapping

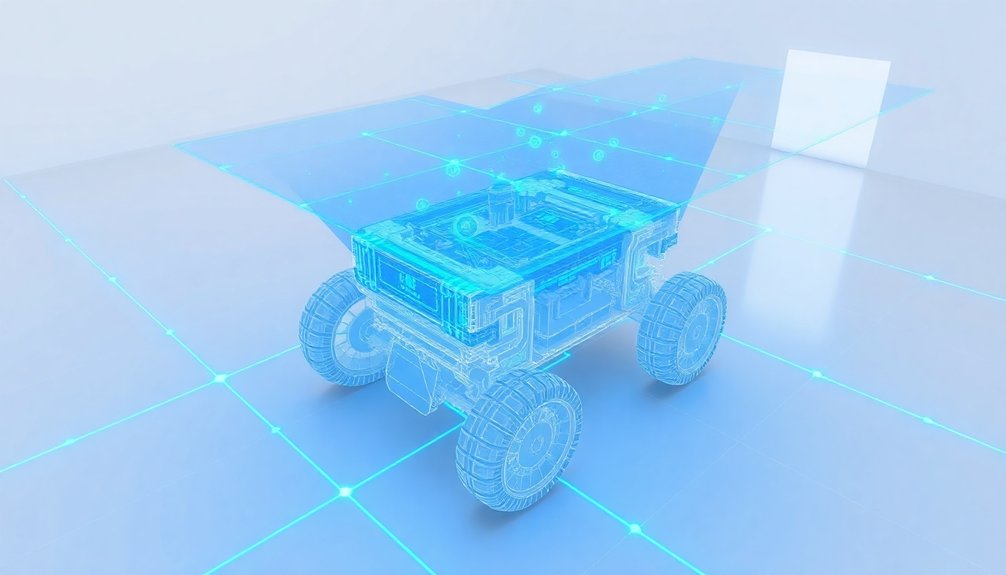

Robots aren’t just bumbling metal boxes anymore—they’re becoming navigation ninjas, and sensor systems are their secret weapon. Advanced sensors transform robotic obstacle avoidance from clumsy bumping to precision navigation. LiDAR systems shoot laser pulses creating 3D environmental maps with laser-sharp accuracy, while depth cameras capture rich visual data that helps robots understand their surroundings. Machine learning algorithms continuously enhance these sensor systems, allowing robots to adapt and improve their spatial intelligence in real-time.

| Sensor Type | Key Strengths |
|---|---|
| LiDAR | High-precision mapping |
| Ultrasonic | Close-range detection |
| Infrared | Low-light proximity sensing |
| Camera Systems | Rich visual interpretation |
| Depth Cameras | Real-time obstacle tracking |

These obstacle avoidance sensors aren’t just tech—they’re robot perception superpowers. By combining multiple sensor technologies, robots can now navigate complex environments with impressive accuracy, turning what once seemed like science fiction into everyday robotic reality.

Advanced Vision and Depth Perception Techniques

You’ve probably wondered how robots see the world without smacking into every wall and object like some mechanized drunk bumper car.

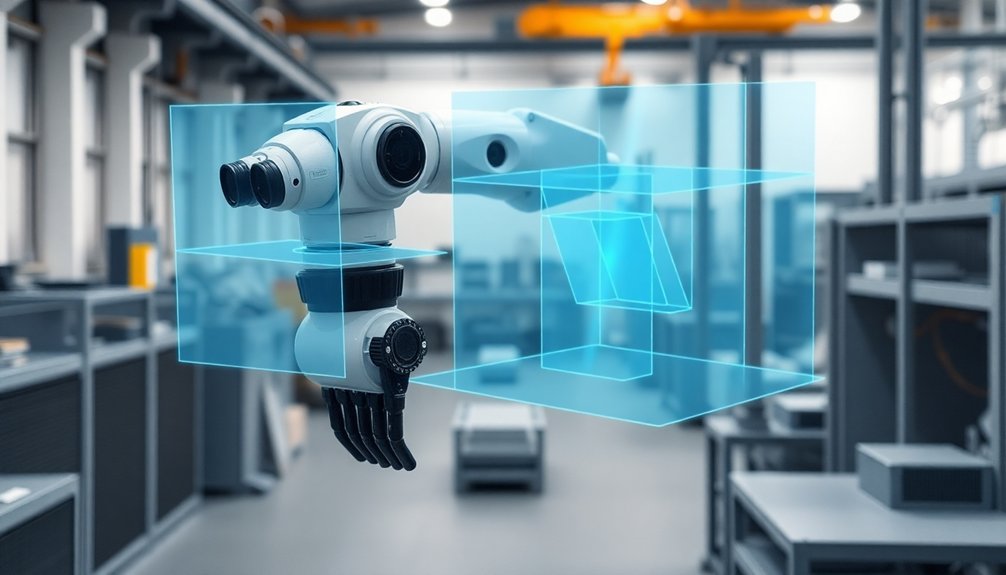

RGB-D cameras are the secret sauce, combining color and depth data to give machines a kind of visual superpower that lets them understand spatial relationships in real time.

Depth Camera Technologies

When it comes to robot navigation, depth cameras are like the superhero vision system that turns blind machines into seeing, thinking explorers.

These depth camera technologies power obstacle detection and real-time processing of environmental data. By capturing depth maps at resolutions such as 320 × 240 pixels, they help robots “see” distances and shapes in a way that ordinary 2D cameras can’t manage on their own.

Imagine a robot integrating RGB and depth information to make split-second decisions about avoiding obstacles—it’s like giving machines a sixth sense.
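To turn a depth image into something a robot can steer around, each pixel gets back-projected into a 3D point using the pinhole camera model. The sketch below is a simplified illustration: the intrinsics (`fx`, `fy`, `cx`, `cy`) are hypothetical values for a 320 × 240 sensor, the function names are invented, and the code treats a depth of 0 as "no reading", which is a common convention but not a universal one.

```python
def backproject(u, v, depth_m, fx, fy, cx, cy):
    """Map pixel (u, v) with a depth reading to a 3D point in the camera frame."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)


def nearest_obstacle(depth_image, fx, fy, cx, cy):
    """Return the closest valid 3D point in a depth image, or None if empty.

    depth_image is a list of rows of depths in meters; 0 marks an invalid pixel.
    """
    best = None
    for v, row in enumerate(depth_image):
        for u, depth_m in enumerate(row):
            if depth_m > 0 and (best is None or depth_m < best[2]):
                best = backproject(u, v, depth_m, fx, fy, cx, cy)
    return best


# Hypothetical intrinsics for a 320 x 240 depth sensor.
FX, FY, CX, CY = 200.0, 200.0, 160.0, 120.0

# A pixel 100 columns right of center, 2 m away, sits 1 m to the camera's right.
point = backproject(260, 120, 2.0, FX, FY, CX, CY)
```

Pairing each of those 3D points with the color at the same pixel is what gives an RGB-D system both "where" and "what" in a single frame.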

Active depth cameras bring their own infrared illumination, so they keep working in dim or patchy lighting that trips up ordinary cameras, although strong sunlight can still wash out some of them. Who needs perfect lighting when you’ve got multimodal sensor tech that transforms robotic decision-making from guesswork to precise navigation?

RGB-D Sensor Fusion

The visual cortex of robotic navigation, RGB-D sensor fusion, is like a Swiss Army knife for machine perception—slicing through environmental complexity with surgical precision.

By blending color and depth data, these advanced sensors transform robots from blind wanderers into sharp-eyed navigators. Imagine a robot using Sony IMX570 iToF technology to peer through tricky lighting conditions, capturing depth maps that reveal hidden obstacles faster than you can blink.

Real-time processing lets these machines make lightning-quick navigation decisions, turning potential collisions into smooth, calculated maneuvers.

The magic happens when multiple sensor inputs—depth, RGB, and amplitude maps—converge to create a detailed environmental snapshot. It’s like giving robots superhuman situational awareness, letting them dance through chaotic spaces with uncanny precision.

Depth estimation techniques enable robots to build dynamic, real-time 3D environmental maps that continuously update their understanding of spatial relationships.
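A common way to keep a map that "continuously updates" is an occupancy grid with log-odds updates: every sensor hit nudges a cell toward "occupied", every miss nudges it toward "free". The sketch below uses a flat 2D grid to stay short, where a real system might use 3D voxels. The class name `OccupancyGrid` and the hit/miss probabilities are assumptions chosen for illustration.

```python
import math


def log_odds(p: float) -> float:
    """Convert a probability into log-odds form."""
    return math.log(p / (1.0 - p))


def probability(l: float) -> float:
    """Convert log-odds back into a probability."""
    return 1.0 - 1.0 / (1.0 + math.exp(l))


class OccupancyGrid:
    """2D grid whose cells accumulate evidence of being occupied."""

    def __init__(self, width, height, p_hit=0.7, p_miss=0.3):
        # Log-odds of 0.0 means "unknown", i.e. a probability of 0.5.
        self.cells = [[0.0] * width for _ in range(height)]
        self.l_hit = log_odds(p_hit)
        self.l_miss = log_odds(p_miss)

    def update(self, x, y, occupied):
        """Fold one observation of cell (x, y) into the map."""
        self.cells[y][x] += self.l_hit if occupied else self.l_miss

    def occupancy(self, x, y):
        """Current estimated probability that cell (x, y) is occupied."""
        return probability(self.cells[y][x])


grid = OccupancyGrid(width=4, height=3)
grid.update(1, 1, occupied=True)
grid.update(1, 1, occupied=True)  # a second hit raises confidence further
```

Adding log-odds is cheap, which is why this style of update suits maps that have to refresh many times per second.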

Artificial Intelligence in Hazard Recognition

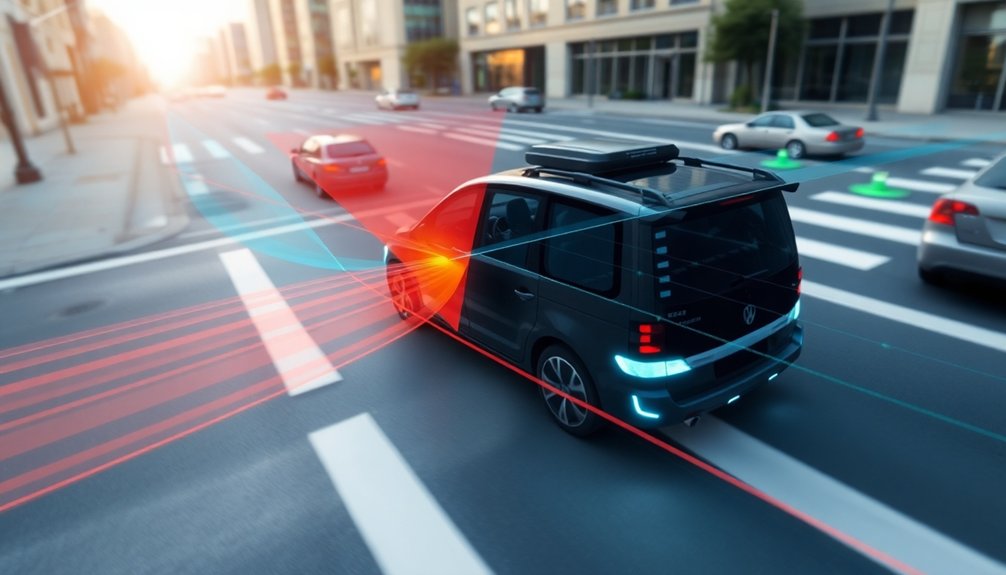

Because robots can’t exactly shout “Watch out!” when danger approaches, artificial intelligence has stepped in as their digital guardian angel.

AI-powered hazard recognition transforms robots from bumbling machines into sharp-eyed navigators. Through object detection and machine learning, robots now analyze environmental conditions in real-time, categorizing potential threats faster than you can blink.

Semantic segmentation helps them understand complex scenes, while reinforcement learning trains them to make split-second decisions about operational safety. They learn from past experiences, continuously refining their navigation skills.

Imagine a robot that doesn’t just detect an obstacle, but understands its context, potential danger, and the smartest way around it. It’s like having a hyper-intelligent, never-tired scout mapping out safe pathways through unpredictable terrain.

Neuromorphic computing, which relies on brain-inspired, event-driven hardware, aims to process sensory inputs with lower latency and less power, which could further sharpen obstacle avoidance.

Real-Time Navigation and Path Planning Algorithms

Steering a robot through a maze of obstacles isn’t just about brute-force movement—it’s an intricate dance of algorithms that’d make a chess grandmaster look like an amateur.

Real-time navigation transforms robots into agile problem-solvers using cutting-edge path planning techniques.

Key strategies include:

- Leveraging RRT algorithms to dynamically plot routes through complex environments

- Employing reactive techniques like Potential Field Methods to continuously adjust trajectories

- Integrating object recognition with sensor data for immediate hazard detection
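To make the first strategy in that list concrete, here is a bare-bones RRT (Rapidly-exploring Random Tree) in 2D. Treat it as a teaching sketch, not a production planner: obstacles are circles given as `(x, y, radius)`, the collision check only tests the new node and the midpoint of each branch (fine when the step is small relative to the obstacles), and every name and default value here is an assumption.

```python
import math
import random


def collides(point, obstacles):
    """True if the point lies inside any circular obstacle (x, y, radius)."""
    return any(math.hypot(point[0] - ox, point[1] - oy) <= r for ox, oy, r in obstacles)


def rrt(start, goal, obstacles, bounds=(0.0, 10.0), step=0.5,
        goal_tolerance=0.5, goal_bias=0.1, max_iterations=5000, seed=0):
    """Grow a random tree from start; return a path of points to goal, or None."""
    rng = random.Random(seed)
    nodes = [start]
    parents = {0: None}
    for _ in range(max_iterations):
        # Occasionally sample the goal itself to pull the tree toward it.
        if rng.random() < goal_bias:
            sample = goal
        else:
            sample = (rng.uniform(*bounds), rng.uniform(*bounds))
        nearest_index = min(range(len(nodes)), key=lambda i: math.dist(nodes[i], sample))
        nearest = nodes[nearest_index]
        distance = math.dist(nearest, sample)
        if distance == 0.0:
            continue
        # Step from the nearest node toward the sample, at most `step` long.
        scale = min(step, distance) / distance
        new = (nearest[0] + (sample[0] - nearest[0]) * scale,
               nearest[1] + (sample[1] - nearest[1]) * scale)
        midpoint = ((nearest[0] + new[0]) / 2.0, (nearest[1] + new[1]) / 2.0)
        if collides(new, obstacles) or collides(midpoint, obstacles):
            continue
        nodes.append(new)
        parents[len(nodes) - 1] = nearest_index
        if math.dist(new, goal) <= goal_tolerance:
            # Walk parent links back to the start to recover the path.
            path, index = [], len(nodes) - 1
            while index is not None:
                path.append(nodes[index])
                index = parents[index]
            return path[::-1]
    return None


# One round obstacle sits squarely between start and goal.
path = rrt(start=(1.0, 1.0), goal=(9.0, 9.0), obstacles=[(5.0, 5.0, 1.5)])
```

The path RRT returns is usually jagged, so real planners tend to smooth it afterward or use a variant such as RRT* that keeps improving the route.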

These sophisticated robot control systems blend depth perception, decision-making, and obstacle avoidance into seamless motion.

By analyzing real-time sensor inputs, robots can predict and dodge potential collisions faster than you can blink.
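The reactive Potential Field Method named in the list above treats the goal like a magnet and every obstacle like a repelling charge, then moves the robot along the combined force. The sketch below shows a single step of that idea in 2D. The gains, influence radius, and function name are illustrative assumptions, and the method is known to get stuck in local minima, so it is usually paired with a global planner.

```python
import math


def potential_field_step(pos, goal, obstacles, k_att=1.0, k_rep=0.5,
                         influence=1.5, step=0.1):
    """Move one fixed-length step along the combined attractive/repulsive force."""
    # Attractive force pulls straight toward the goal.
    fx = k_att * (goal[0] - pos[0])
    fy = k_att * (goal[1] - pos[1])
    for ox, oy in obstacles:
        dx, dy = pos[0] - ox, pos[1] - oy
        d = math.hypot(dx, dy)
        # Obstacles only push back once they are within the influence radius.
        if 0.0 < d < influence:
            magnitude = k_rep * (1.0 / d - 1.0 / influence) / (d * d)
            fx += magnitude * dx / d
            fy += magnitude * dy / d
    norm = math.hypot(fx, fy)
    if norm == 0.0:
        return pos  # forces cancel out: a local minimum
    return (pos[0] + step * fx / norm, pos[1] + step * fy / norm)


# With an obstacle slightly above the straight-line route, the step veers below it.
next_pos = potential_field_step((0.0, 0.0), (1.0, 0.0), obstacles=[(0.5, 0.2)])
```

Because each step only needs the current sensor snapshot, the robot can recompute it every frame, which is what makes the approach "reactive".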

It’s not magic—it’s just really smart engineering that makes machines move like they’ve got situational awareness. Closed-loop systems enable robots to continuously monitor and correct their performance, transforming sensor data into precise navigational decisions.
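A closed loop can be as simple as: measure the error, command a correction proportional to it, repeat. The sketch below steers a robot's heading toward a target with a proportional controller. The gain, rate limit, and the idealized "robot" that turns exactly as commanded are all assumptions for illustration; real platforms add noise, lag, and usually integral or derivative terms.

```python
import math


def wrap_angle(angle_rad: float) -> float:
    """Wrap an angle into the range [-pi, pi]."""
    return math.atan2(math.sin(angle_rad), math.cos(angle_rad))


def turn_rate_command(heading_rad, target_rad, gain=1.5, max_rate=1.0):
    """Proportional controller: turn rate scales with heading error, then clamps."""
    error = wrap_angle(target_rad - heading_rad)
    return max(-max_rate, min(max_rate, gain * error))


def simulate(heading_rad, target_rad, steps=100, dt=0.1):
    """Run the loop: measure, correct, repeat. Assumes a perfectly obedient robot."""
    for _ in range(steps):
        heading_rad += turn_rate_command(heading_rad, target_rad) * dt
    return heading_rad


# Start facing 0 rad and ask for a quarter turn to the left.
final_heading = simulate(0.0, math.pi / 2)
```

The correction shrinks as the error shrinks, so the heading eases into the target instead of overshooting wildly.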

Performance and Accuracy of Robotic Obstacle Avoidance

When precision meets silicon, robotic obstacle avoidance transforms from a theoretical concept into a jaw-dropping display of technological prowess. Your robot’s survival depends on killer sensor fusion and machine learning algorithms that turn environmental data into lightning-fast navigation decisions. Neural network algorithms analyze complex sensor inputs to dynamically optimize movement strategies in real-time.

| Sensor Type | Detection Precision | Speed |
|---|---|---|
| LiDAR | 99.5% | 15 fps |
| Depth Cameras | 95% | 30 fps |
| Sensor Fusion | 99.9% | 45 fps |

Real-time obstacle detection isn’t just about hardware—it’s about smart software that learns and adapts. Depth cameras and LiDAR work together like a cybernetic brain, processing real-time sensor data at breakneck speeds. The result? Robots that dodge obstacles with surgical precision, turning potential collisions into smooth, calculated maneuvers. Think of it as giving machines a sixth sense—minus the creepy movie vibes.
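Frame rate matters because a robot keeps moving between sensor updates. A rough worked example: stopping distance is the ground covered during one sensor frame plus the braking distance, v²/(2a). The sketch below plugs in the frame rates from the table above. The robot speed and braking deceleration are invented for illustration, and the model ignores compute and actuator latency.

```python
def stopping_distance_m(speed_m_per_s, sensor_fps, max_decel_m_per_s2):
    """Worst-case distance covered before stopping after an obstacle appears.

    Assumes the obstacle is noticed one full frame late and braking is constant.
    """
    reaction_time_s = 1.0 / sensor_fps
    reaction_distance = speed_m_per_s * reaction_time_s
    braking_distance = speed_m_per_s ** 2 / (2.0 * max_decel_m_per_s2)
    return reaction_distance + braking_distance


# Hypothetical robot: 1 m/s cruising speed, 2 m/s^2 braking.
at_15_fps = stopping_distance_m(1.0, sensor_fps=15, max_decel_m_per_s2=2.0)
at_45_fps = stopping_distance_m(1.0, sensor_fps=45, max_decel_m_per_s2=2.0)
```

Under these assumptions, going from 15 fps to 45 fps trims a few centimeters off the stopping distance. At higher speeds that same frame delay costs proportionally more ground.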

Future Innovations in Collision Prevention Systems

As artificial intelligence continues to push the boundaries of robotic navigation, collision prevention systems are about to get a serious upgrade.

You’ll soon see robots dodging obstacles like ninja warriors, thanks to mind-blowing technologies that make current systems look like clumsy toddlers.

Get ready for:


- Advanced AI algorithms that learn and adapt in milliseconds, transforming real-time decision-making from “maybe” to “definitely”

- Sensor fusion techniques combining LiDAR, cameras, and ultrasonic inputs to create superhuman environmental awareness

- Ultra-low latency processing that lets robots react faster than you can blink

3D vision systems will give robots laser-sharp depth perception, while enhanced communication protocols will let autonomous machines coordinate like a synchronized swimming team.

Tactile sensors will enable robots to detect and respond to physical contact with unprecedented precision, adding another layer of sophisticated obstacle avoidance.

Who said robots can’t be graceful?

People Also Ask About Robots

How Does the Obstacle Avoiding Robot Work?

An obstacle-avoiding robot uses sensors like LiDAR and ultrasonic rangefinders to continuously scan its surroundings. It processes the incoming data, calculates safe paths, and adjusts its movement on the fly to dodge obstacles in real time.

What Type of Sensors Are Used for Obstacle Avoidance in Robots?

You’ll be amazed how robots use ultrasonic, infrared, LiDAR, and camera sensors to detect obstacles! Ultrasonic, infrared, and LiDAR sensors emit sound or light and analyze the reflections, while cameras process visual data, enabling precise navigation and real-time collision prevention in dynamic environments.

How Does Obstacle Detection Work?

The robot first captures environmental data through sensors like LiDAR or cameras. Algorithms then process that data to identify object shapes, distances, and potential collision risks, which enables real-time path planning and obstacle avoidance.

What Can Robots Detect?

Think of the sensors as the robot’s eyes, scanning landscapes like a predator. Through LiDAR, ultrasonic, infrared, and camera sensors, robots detect moving objects, stationary barriers, heat signatures, depth variations, and potential collision risks with precision and split-second awareness.

Why This Matters in Robotics

You’re witnessing a tech revolution where robots are getting seriously smart about not crashing. Imagine robots dodging obstacles with 99.7% accuracy—that’s like a superhuman game of real-time pinball. We’ve moved from clunky machines to intelligent navigators that can predict and prevent collisions faster than you can blink. The future isn’t just about avoiding walls; it’s about robots understanding their environment like living, breathing creatures.