Robots navigate like tech-savvy explorers using advanced sensors and smart algorithms. They create real-time digital maps with LiDAR and cameras, processing environmental data faster than you can blink. Machine learning helps them adapt and predict obstacles, turning chaotic spaces into precise navigation playgrounds. Think of them as GPS ninjas that transform raw sensor inputs into intelligent movement strategies. Curious how deep this robotic rabbit hole goes?

The Science Behind Robot Navigation

While robots might seem like magical wandering machines, their navigation skills are actually rooted in cutting-edge science.

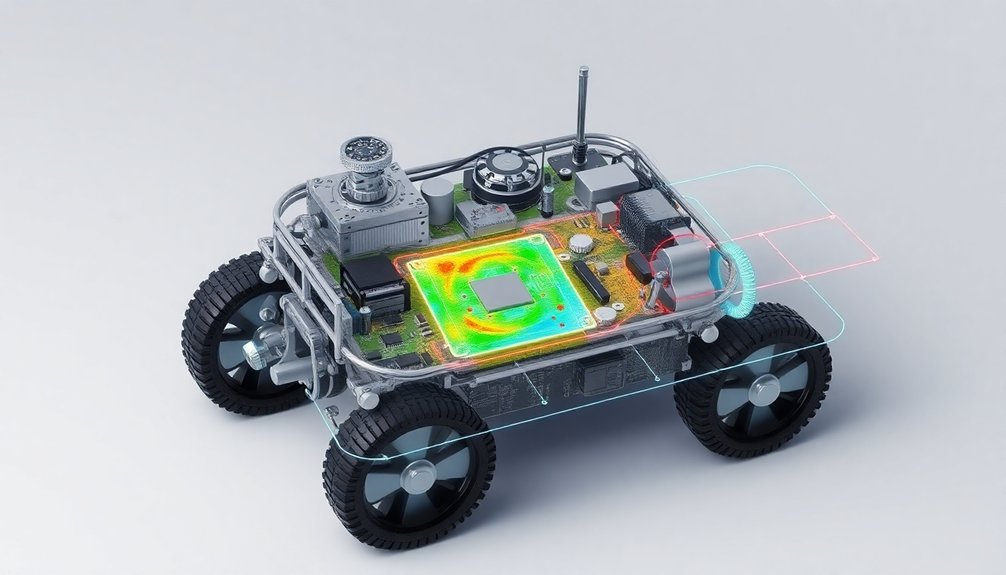

Navigation systems rely on sensing and mapping technologies like LiDAR and Simultaneous Localization and Mapping (SLAM) that turn raw sensor data into a map and a position estimate. These systems aren’t just fancy GPS: they work indoors, where satellite signals rarely reach, and they feed obstacle avoidance so robots can understand their environment in real time.

Imagine a robot scanning its surroundings with laser beams, creating a 360-degree map faster than you can blink. It’s like giving machines a superhuman sense of spatial awareness. Machine learning algorithms continuously refine these perception capabilities, enabling robots to adapt and improve their navigation skills in real-time.

Mapping Technologies: Creating Digital Floor Plans

LiDAR uses spinning lasers to create razor-sharp 360-degree maps, letting robot vacuum cleaners dance around your furniture like precision ballet dancers.
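To make the 360-degree sweep concrete, here is a minimal sketch of how one revolution of range readings could be turned into map points. The function name `scan_to_points`, the evenly spaced beams, and the 12-meter `max_range` are illustrative assumptions, not any particular vacuum's firmware.

```python
import math

def scan_to_points(ranges, max_range=12.0):
    """Convert one full sweep of range readings (meters) into (x, y) points
    in the robot's own frame. Beams are assumed to be evenly spaced over
    360 degrees, starting straight ahead and turning counter-clockwise."""
    if not ranges:
        return []
    angle_step = 2 * math.pi / len(ranges)
    points = []
    for i, r in enumerate(ranges):
        # Drop readings that are non-positive or beyond the assumed sensor limit.
        if not (0.0 < r <= max_range):
            continue
        theta = i * angle_step
        points.append((r * math.cos(theta), r * math.sin(theta)))
    return points

# Four beams: ahead, left, behind (no return), right.
points = scan_to_points([1.0, 2.0, 0.0, 3.0])
```

Each surviving point is a spot where a laser beam hit something, so plotting thousands of them per second traces out walls and furniture legs.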

Camera-based systems snap landmark images, though they struggle in dim lighting – not exactly night vision heroes.

Gyroscope and accelerometer sensors provide basic direction tracking, but their small errors pile up into drift over time, which makes them the awkward cousins of navigation tech when used alone.

Modern robot vacuums aren’t stumbling blind anymore.

They’re smart enough to combine multiple sensors, creating digital floor plans that remember every chair leg and carpet edge.

Depth estimation techniques allow robots to build increasingly precise spatial intelligence, enhancing their mapping capabilities.

Obstacle avoidance? Totally nailed it.

Want custom cleaning routes? There’s an app for that.

These little machines are turning your home into a mapped playground of algorithmic efficiency.

Sensors: The Eyes and Ears of Autonomous Robots

Because robots aren’t telepathic (yet), they rely on an arsenal of sensors to understand their world – think of these as their digital nervous system.

Your robot vacuum cleaner isn’t just blindly bumping around; it’s packed with sophisticated navigation systems that help it dodge obstacles like a tiny, determined ninja.

LiDAR and infrared sensors create detailed maps of your floor, while cliff sensors prevent tragic tumbles down stairs.

Want to know how they’re so precise? These robotic brainiacs constantly process data from multiple sensors, making split-second decisions about where to go and what to avoid.

Wall sensors help them hug edges perfectly, and wheel sensors track exact distances traveled.
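Here is a rough sketch of how wheel-sensor distances could become a position estimate on a two-wheeled robot (dead reckoning). The function `update_pose` and the `wheel_base` value are assumptions for illustration; real robots blend this with other sensors because wheel slip makes the estimate drift.

```python
import math

def update_pose(x, y, heading, d_left, d_right, wheel_base):
    """Dead-reckoning update for a differential-drive robot.

    d_left / d_right: distance each wheel rolled since the last update (meters).
    wheel_base: distance between the two wheels (meters).
    heading is in radians, counter-clockwise positive.
    """
    d_center = (d_left + d_right) / 2.0        # distance moved by the robot's center
    d_theta = (d_right - d_left) / wheel_base  # change in heading
    # Move along the average heading during this step (midpoint approximation).
    mid_heading = heading + d_theta / 2.0
    x += d_center * math.cos(mid_heading)
    y += d_center * math.sin(mid_heading)
    # Keep the heading wrapped into [-pi, pi).
    heading = (heading + d_theta + math.pi) % (2 * math.pi) - math.pi
    return x, y, heading

# Both wheels roll 1 m: the robot drives straight ahead.
pose = update_pose(0.0, 0.0, 0.0, 1.0, 1.0, wheel_base=0.3)
```

When the wheels roll equal distances the heading stays put, and when they roll in opposite directions the robot spins in place without changing position.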

It’s like giving a machine superhuman spatial awareness – without the cape.

Tactile sensors enable robots to detect subtle environmental changes and enhance their precision in navigation and object interaction.

SLAM: How Robots Understand Their Environment

Imagine a robot that can navigate a complex warehouse without bumping into shelves or getting lost—that’s the magic of SLAM, or simultaneous localization and mapping.

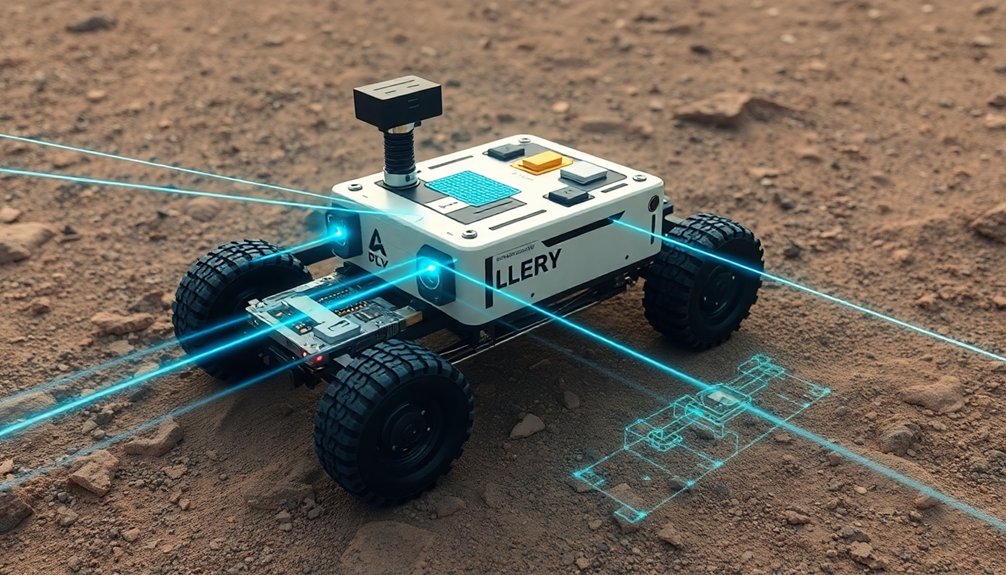

By combining sensors like cameras and LiDAR with AI algorithms, robots create real-time maps while tracking their precise location. Think of it as the robot’s internal GPS on steroids.

Semantic navigation takes this a step further, helping robots recognize and interact with specific objects in their environment. Whether it’s dodging obstacles in low-light conditions or understanding spatial relationships, SLAM turns clunky machines into smart navigators.
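The mapping half of SLAM can be sketched with an occupancy grid, a common textbook approach. This toy version assumes the robot's position is already known (real SLAM estimates it at the same time), and the 0.7 / 0.3 sensor confidences and the function names are illustrative assumptions.

```python
import math

L_OCC = math.log(0.7 / 0.3)   # evidence added to the cell where a beam ends
L_FREE = math.log(0.3 / 0.7)  # evidence added to cells a beam passes through

def cells_on_ray(x0, y0, x1, y1):
    """Bresenham line: grid cells from (x0, y0) to (x1, y1), inclusive."""
    cells = []
    dx, dy = abs(x1 - x0), abs(y1 - y0)
    sx = 1 if x1 >= x0 else -1
    sy = 1 if y1 >= y0 else -1
    err = dx - dy
    x, y = x0, y0
    while True:
        cells.append((x, y))
        if (x, y) == (x1, y1):
            return cells
        e2 = 2 * err
        if e2 > -dy:
            err -= dy
            x += sx
        if e2 < dx:
            err += dx
            y += sy

def integrate_beam(log_odds, robot_cell, hit_cell):
    """Update the map (a dict of cell -> log-odds) with one range beam."""
    ray = cells_on_ray(*robot_cell, *hit_cell)
    for cell in ray[:-1]:                       # everything before the hit looks free
        log_odds[cell] = log_odds.get(cell, 0.0) + L_FREE
    log_odds[hit_cell] = log_odds.get(hit_cell, 0.0) + L_OCC

def occupancy(log_odds, cell):
    """Probability that a cell is occupied; unseen cells stay at 0.5."""
    return 1.0 - 1.0 / (1.0 + math.exp(log_odds.get(cell, 0.0)))

grid = {}
integrate_beam(grid, robot_cell=(0, 0), hit_cell=(3, 0))
```

Repeating the update for every beam of every scan gradually pushes cells toward "definitely free" or "definitely occupied", which is how a noisy sensor still yields a clean floor plan.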

Embedded computing modules like the NVIDIA Jetson AGX Xavier process sensor data on board the robot at lightning speed, transforming raw information into actionable navigation insights.

Who said robots can’t be street-smart?

Path Planning and Obstacle Avoidance Strategies

You’re probably wondering how robots actually figure out where to go without constantly smacking into walls like a drunk Roomba.

Smart route selection isn’t magic—it’s about combining sensor data with predictive algorithms that let robots analyze their environment faster than you can say “obstacle detected.”

Robots leverage sensor fusion techniques that integrate multiple data sources like LiDAR and camera vision to create dynamic, real-time navigation strategies.
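One simple flavor of sensor fusion is inverse-variance weighting: each sensor's reading counts in proportion to how much it can be trusted. The sketch below is a generic illustration with made-up variances, not the method of any specific robot, and the function name `fuse` is hypothetical.

```python
def fuse(estimates):
    """Combine independent estimates of the same quantity.

    estimates: list of (value, variance) pairs, e.g. distance to a wall
    as seen by LiDAR and by a camera. Lower variance means more trust.
    Returns the fused value and its (smaller) variance.
    """
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    value = sum(w * v for w, (v, _) in zip(weights, estimates)) / total
    return value, 1.0 / total

# Hypothetical readings: LiDAR says 2.00 m (precise), camera says 2.30 m (noisy).
distance, variance = fuse([(2.00, 0.01), (2.30, 0.09)])
```

The fused distance lands much closer to the LiDAR reading, and the fused variance is lower than either sensor's alone, which is the whole point of combining them.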

Smart Route Selection

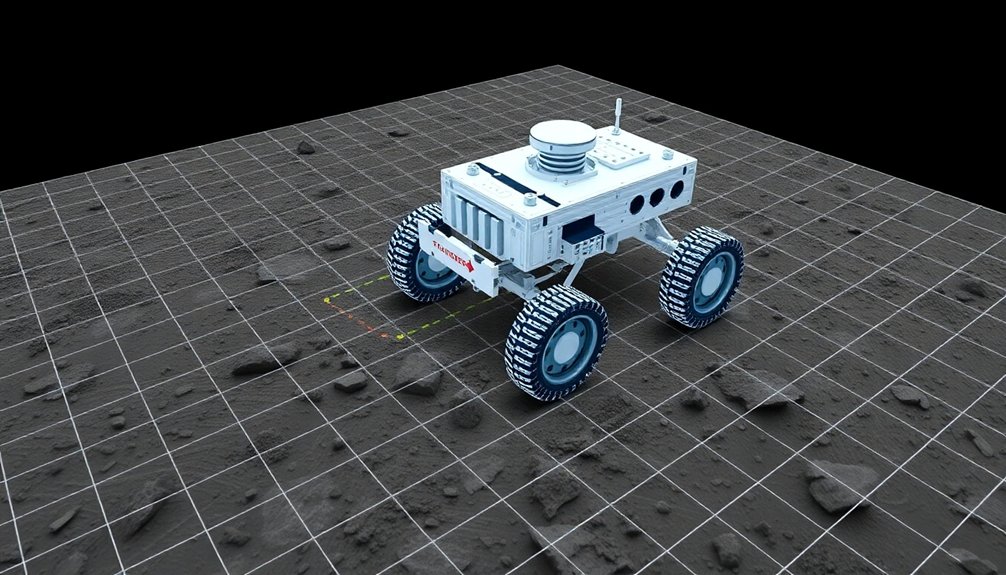

When robots hit the road (or floor, or warehouse, or Mars), they’ve got more brains in their navigation systems than most humans realize. Smart route selection isn’t just about moving from A to B; it’s a complex dance of obstacle avoidance and real-time navigation. Reinforcement learning algorithms let robots keep optimizing their movement strategies through sensory feedback and digital trial-and-error.

Using an arsenal of sensors and artificial intelligence, these mechanical explorers map their environment faster than you can say “autonomous.” LiDAR and cameras become their eyes, detecting everything from dust bunnies to unexpected furniture, while cloud-stored maps help them learn and remember spaces. They’re constantly recalculating paths, dodging obstacles with the precision of a digital chess master. Think of them as the ultimate GPS, minus the passive-aggressive “recalculating” voice, adapting on the fly and making split-second decisions that keep them moving forward.
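Route selection on a mapped floor is often illustrated with A* search over a grid. The sketch below is one classic option, shown under simple assumptions (4-way movement, every step costs 1, `#` marks an obstacle); it is not claimed to be what any given robot runs.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of strings; '#' cells are blocked.
    Cells are (row, col). Returns the path as a list of cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def heuristic(cell):
        # Manhattan distance never overestimates with 4-way unit moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(heuristic(start), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}
    while open_heap:
        _, cost, current = heapq.heappop(open_heap)
        if current == goal:
            path = []
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        if cost > best_cost[current]:
            continue  # stale heap entry; a cheaper route was found already
        r, c = current
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] != "#":
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = current
                    heapq.heappush(open_heap, (new_cost + heuristic(nxt), new_cost, nxt))
    return None

floor = [
    "....",
    ".##.",
    "....",
]
route = astar(floor, start=(0, 0), goal=(2, 3))
```

"Recalculating" then amounts to marking a newly detected obstacle in the grid and running the search again from the robot's current cell.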

Dynamic Obstacle Detection

| Sensor Type | Detection Speed (updates per second) | Accuracy |
|---|---|---|
| LiDAR | 50 | High |
| Cameras | 30 | Medium |
| ToF Sensors | 60 | Very High |
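To see why the update rates in the table matter, consider how far a robot rolls between two consecutive readings. The quick calculation below assumes a cruising speed of 0.3 m/s, which is a made-up figure for illustration.

```python
def blind_distance_mm(updates_per_second, speed_m_per_s):
    """Distance traveled between two consecutive sensor updates, in millimeters."""
    return speed_m_per_s / updates_per_second * 1000.0

SPEED = 0.3  # m/s, an assumed cruising speed
for sensor, rate in [("LiDAR", 50), ("Cameras", 30), ("ToF Sensors", 60)]:
    print(f"{sensor}: about {blind_distance_mm(rate, SPEED):.0f} mm between updates")
```

At these rates the robot moves only a few millimeters "blind" between readings, which is why a chair leg that appears suddenly can still be caught in time.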

Reactive AI means these machines don’t just see obstacles—they strategize around them. Cloud-based tech helps robots remember tricky spaces, rerouting smoothly through your home’s chaos. Think of them as navigation ninjas, adapting instantly to whatever random clutter you’ve got lying around. Who knew robots could be this smart? Voice command processing allows robots to integrate sensor data with real-time linguistic instructions, enhancing their navigation intelligence.

Predictive Path Optimization

Because moving through a complex environment isn’t child’s play, robots need some seriously smart path-planning skills. Deep reinforcement learning lets robots sharpen their navigation by learning from thousands of simulated scenarios.

Predictive path optimization is their secret sauce for navigation and mapping. These brainy machines use advanced algorithms to scout routes faster than you can say “obstacle avoidance.” By calculating probabilities of safe passage in real-time, robots can dodge potential roadblocks like ninja warriors.

Want to know how they boost cleaning efficiency? They’re constantly recalculating the most strategic path, weighing travel time against potential risks. Imagine a robot vacuum that doesn’t just bump randomly around your living room, but intelligently plots a course that minimizes wasted motion.
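Weighing travel time against risk can be sketched as an expected-cost comparison. Everything here is an assumption for illustration: routes are lists of `(time_s, p_clear)` segments, segments are treated as independent, and a blocked route costs a flat `blocked_penalty_s` seconds of backtracking.

```python
import math

def expected_cost(route, blocked_penalty_s=60.0):
    """route: list of (time_s, p_clear) segments.
    Expected cost = total travel time + chance of being blocked * penalty."""
    travel_time = sum(time_s for time_s, _ in route)
    p_all_clear = math.prod(p_clear for _, p_clear in route)
    return travel_time + (1.0 - p_all_clear) * blocked_penalty_s

def pick_route(routes, blocked_penalty_s=60.0):
    """Return the name of the route with the lowest expected cost."""
    return min(routes, key=lambda name: expected_cost(routes[name], blocked_penalty_s))

routes = {
    "through_the_chairs": [(10.0, 0.6)],              # short but cluttered
    "around_the_table": [(8.0, 0.99), (8.0, 0.99)],   # longer but almost always clear
}
choice = pick_route(routes)
```

With a heavy penalty for getting stuck, the longer but safer route wins; shrink the penalty and the shortcut becomes worth the gamble.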

It’s like having a GPS with attitude—always one step ahead, always adapting. Who said robots can’t be smart and efficient?

Advanced Algorithms: Making Smart Navigation Decisions

If you thought robots were just mindless machines bumbling around like lost tourists, think again. Advanced algorithms have transformed robotic navigation from guesswork to genius-level problem-solving. Neuromorphic computing enables robots to learn and adapt using brain-inspired networks, enhancing their navigation capabilities.

These smart systems leverage cutting-edge technologies to make navigation decisions that would make GPS look primitive:

- Integrate multiple sensors like cameras and LiDAR to create real-time environmental mapping

- Utilize deep learning techniques for dynamic obstacle avoidance and route optimization

- Apply semantic segmentation to understand complex environments beyond simple obstacle detection

Robots now analyze probabilities, predict potential challenges, and adapt routes instantaneously.

They’re not just following pre-programmed paths; they’re learning, interpreting, and making split-second decisions that keep them moving efficiently.

Imagine a robot that can think on its wheels, recalculating its journey faster than you can say “recalculating.”

Real-World Applications of Robotic Navigation Systems

Smart navigation algorithms are cool in a lab, but where do robots really shine? Think robot vacuums gliding around your living room, mapping technology turning chaotic spaces into precise digital landscapes.

These machines use obstacle sensors and LiDAR to understand their world better than most humans. The U.S. Army’s SIGNAV project proves robots can navigate GPS-denied environments, mapping underground tunnels and dark spaces with crazy accuracy.

Real-time navigation isn’t just a sci-fi dream—it’s happening now. Imagine robots sharing location data instantly, reducing positioning errors and working together like a high-tech swarm.

By combining depth measurements with visual imaging, these mechanical explorers can now adapt to complex environments, from battlefield reconnaissance to household cleaning.

Who said robots are just fancy machines? They’re becoming navigation ninjas.

People Also Ask About Robots

How Do Robots Navigate?

Robots navigate using sensors like LiDAR and cameras, building real-time maps while tracking their own location. By constantly updating their picture of the environment, they adjust their path on the fly and avoid obstacles efficiently.

How Do Robot Vacuums Map Your House?

Your robot vacuum scans your home with LiDAR or cameras, capturing thousands of data points and assembling them into a detailed digital map that guides its autonomous cleaning path.

How Do Robot Waiters Know Where to Go?

Robot waiters navigate using LiDAR and other sensors that create real-time 360-degree maps. They track their location, detect obstacles, and adjust routes on the fly with AI-powered algorithms, even in busy restaurant environments.

How Does a Robot Follow a Line?

A line-following robot uses infrared reflectance sensors to detect the contrast between the line and the floor. It measures the line’s position relative to its own center and adjusts its wheel speeds, typically with a PID control algorithm, to stay on track.
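A bare-bones sketch of that loop might look like this. The five-sensor array, the gain values, and the helper names are all assumptions; real robots need their gains tuned on the actual track.

```python
class PID:
    """Textbook PID controller; the gains are placeholders to be tuned."""

    def __init__(self, kp, ki, kd):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.integral = 0.0
        self.prev_error = None

    def step(self, error, dt):
        self.integral += error * dt
        # No derivative term on the very first call (nothing to compare against).
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative

def line_position(readings):
    """Weighted average of sensor indices, shifted so 0 means 'line under the center'.
    readings: one value per sensor, left to right, higher = more line detected.
    Returns None if no sensor sees the line."""
    total = sum(readings)
    if total == 0:
        return None
    center = (len(readings) - 1) / 2.0
    return sum(i * r for i, r in enumerate(readings)) / total - center

def wheel_speeds(base_speed, correction):
    """Positive correction (line is to the right) speeds up the left wheel
    and slows the right one, turning the robot toward the line."""
    return base_speed + correction, base_speed - correction

pid = PID(kp=0.5, ki=0.0, kd=0.1)
error = line_position([0, 0, 0, 1, 0])       # line is one sensor to the right
left, right = wheel_speeds(1.0, pid.step(error, dt=0.02))
```

Running this on every sensor reading keeps nudging the robot back over the line, with the derivative term damping the wobble that a purely proportional correction tends to produce.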

Why This Matters in Robotics

You’ve seen how robots map, sense, and navigate like tiny, brainy explorers. They’re not just rolling around randomly—they’re calculating, predicting, and learning with every move. From warehouse robots to self-driving cars, these machines are transforming how we think about movement and intelligence. Will they eventually navigate our world better than we do? Keep watching—the future of robotic navigation is just getting started.