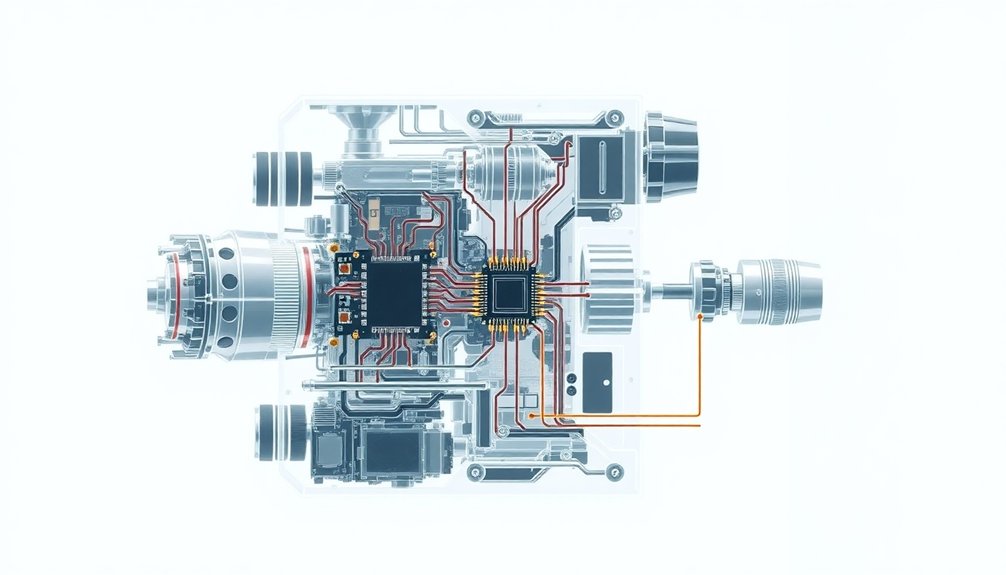

Robot control systems are your mechanical brain’s secret sauce. They’re not just circuits and code—they’re intelligent decision-makers that transform robots from clunky machines into adaptive problem-solvers. Imagine sensors as eyes, algorithms as reflexes, and machine learning as a brain constantly learning. Your robot doesn’t just move; it thinks, predicts, and adjusts in milliseconds. Want to see how metal becomes magic? Stick around.

The Foundations of Robotic Movement Control

While robots might seem like magical beings from sci-fi movies, their movement is actually a complex dance of sensors, algorithms, and split-second decisions. Control schemes aren’t just fancy tech. They’re the robot’s nervous system, constantly processing environmental data from cameras and LiDAR. Machine learning algorithms help the robot adapt to and learn from sensory inputs, changing how it interacts with its environment. Think of it like a super-smart GPS that doesn’t just map a route but adjusts in real time to unexpected obstacles. Early robotic projects laid the groundwork with basic navigation principles, but today’s systems use feedback loops that compare where the robot is with where it should be and correct the difference continuously. Imagine a hyper-aware athlete, constantly monitoring the surroundings and making micro-adjustments to stay on track. Techniques like simultaneous localization and mapping (SLAM), which builds a map while tracking the robot’s position within it, have transformed how robots understand and move through space, turning clunky machines into nimble navigators.

Decoding Proportional-Integral-Derivative (PID) Algorithms

You’ve probably wondered how robots nail precision movements without turning into a chaotic dance machine. PID controllers are the secret sauce that lets machines translate error signals into smooth, calculated adjustments—think of them like a hyper-intelligent thermostat constantly fine-tuning your robot’s performance.

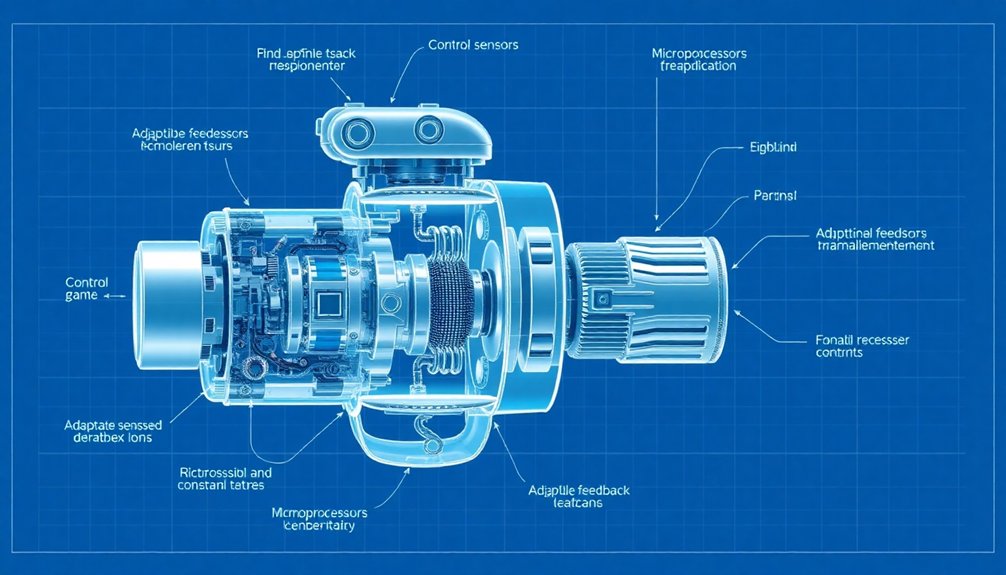

PID Controller Core Components

Because robots aren’t mind readers (yet), they need smart ways to correct their own movements and actions. Enter the PID controller, your robot’s built-in course corrector. It repeatedly measures the error, the gap between where the robot should be and where it actually is, and computes a correction from three terms.

The proportional component acts like an instant reaction force, where bigger errors trigger bigger corrections. The integral term is the memory bank, summing past errors to eliminate a persistent offset that the proportional term alone leaves behind. Meanwhile, the derivative component responds to how quickly the error is changing, damping overshoot before it builds into chaos.

When you’re fine-tuning robot control, these three components dance together, transforming jerky movements into smooth, precise actions. It’s like teaching a machine to have muscle memory—except with math instead of repetition. Cool, right?
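To make the three terms concrete, here's a minimal Python sketch of a textbook discrete PID loop. The plant model (`dx/dt = -x + u`), the gain values, and the names `PID` and `simulate` are illustrative assumptions, not taken from any specific robot.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook discrete PID controller (illustrative, not production code)."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, setpoint: float, measured: float, dt: float) -> float:
        error = setpoint - measured
        self.integral += error * dt                   # I: accumulate past error
        derivative = (error - self.prev_error) / dt   # D: rate of change of error
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def simulate(pid: PID, setpoint: float = 1.0, steps: int = 2000, dt: float = 0.01) -> float:
    """Drive a hypothetical first-order plant, dx/dt = -x + u, and return the final x."""
    x = 0.0
    for _ in range(steps):
        u = pid.update(setpoint, x, dt)
        x += (-x + u) * dt   # simple Euler integration of the assumed plant
    return x
```

With these assumed gains, `simulate(PID(2.0, 1.0, 0.05))` settles close to the setpoint of 1.0, while the proportional-only `simulate(PID(2.0, 0.0, 0.0))` stalls near 0.67. That leftover gap is exactly the persistent error the integral term exists to remove.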

Error Correction Mechanisms

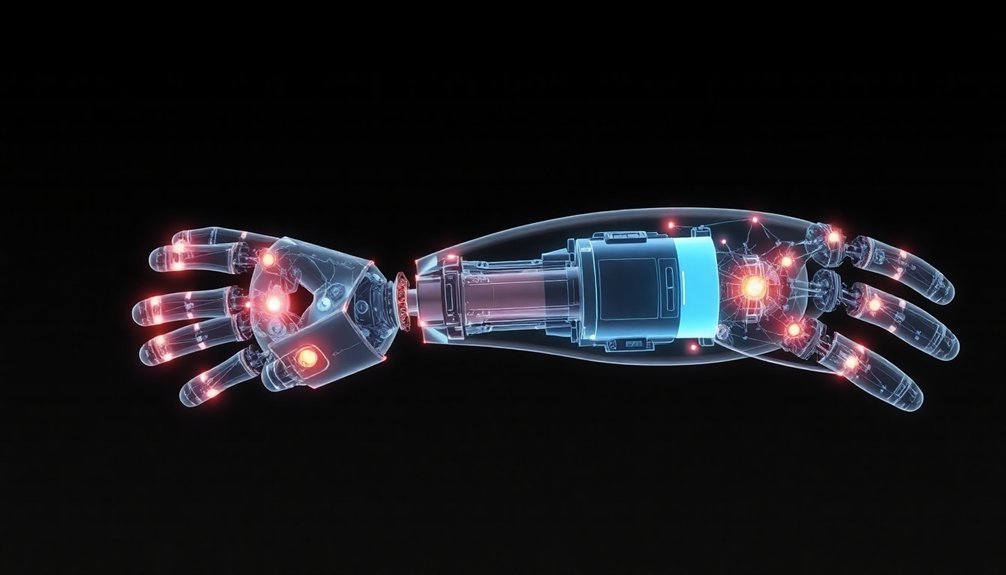

Robotics isn’t just about building fancy mechanical limbs—it’s about teaching those limbs to think on their feet, or wheels, or whatever locomotion system they’re rocking.

Error correction mechanisms are the secret sauce that keeps robots from looking like drunk bumper cars. A PID controller tackles control problems through three strategies, one per term:

- Real-time error tracking (proportional)

- Accumulated error correction (integral)

- Rate-based damping (derivative)

Imagine your robot encountering unexpected terrain—these mechanisms instantly analyze the deviation, accumulate past performance data, and proactively adjust movements.

It’s like having a neurotic GPS that’s constantly recalculating but actually gets you where you need to go. The magic happens when the derivative term reacts to the trend of the error and damps a swing before it grows, transforming robotic movement from rigid and mechanical to fluid and adaptive.

Humanoid robotic technologies have demonstrated remarkable potential in navigating complex environments by integrating advanced control systems that mimic human-like adaptability.

Who wouldn’t want a robot that learns and adapts faster than your average teenager?

Real-World Performance Optimization

Crack open the PID algorithm’s hood, and you’ll find the turbocharged engine of robotic precision. Your robot manipulators aren’t just moving; they’re calculating every microscopic adjustment with lightning-fast mathematical reflexes.

Think of PID like a hyper-intelligent cruise control that never sleeps. By tweaking those magical parameters—proportional, integral, and derivative—you transform clunky machines into surgical instruments.

Want a robotic arm that moves smoother than butter? Adjust the Kp, Ki, and Kd values. It’s like tuning a high-performance sports car, except your vehicle might be assembling microchips or performing delicate medical procedures.

The beauty? PID controllers never stop correcting. A standard PID doesn’t learn in the machine-learning sense, since its gains stay fixed until someone retunes them, but it recalculates its output from fresh measurements on every control cycle. Your robot stays on target with every millisecond of movement.

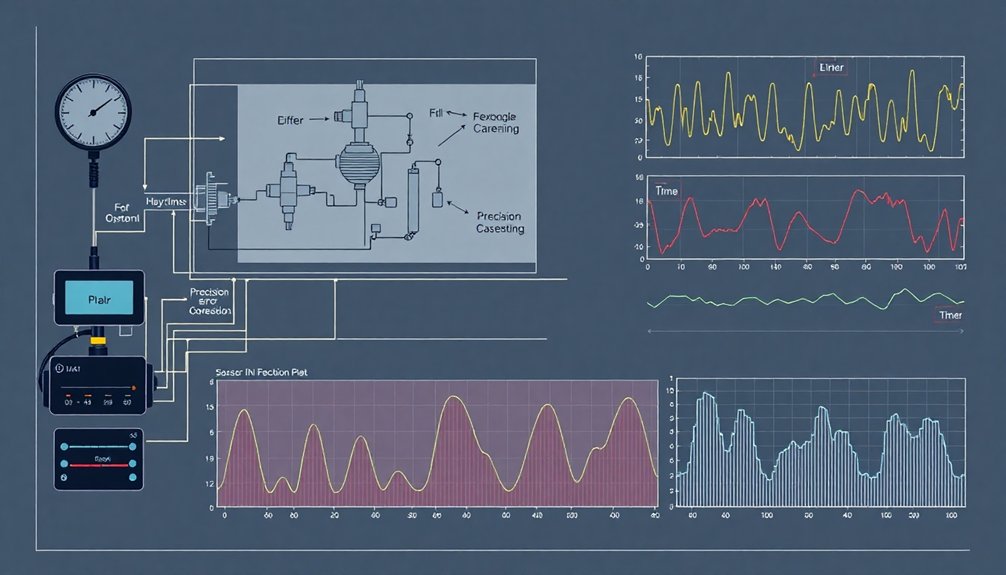

Sensor Fusion and Environmental Perception

When robots venture into complex environments, they need more than just a single set of eyes—they need a supercharged sensory network.

Sensor fusion isn’t just fancy tech talk; it’s how robots make sense of chaotic worlds. By blending data from cameras, LiDAR, and other sensors, these mechanical explorers can:

- Estimate their location more accurately than any single sensor allows

- Predict potential navigation challenges

- Adjust movements in real-time

Think of it like having multiple brains working together, each contributing a unique perspective.

Exteroceptive sensors, the ones that look outward like cameras and LiDAR, become the robot’s sixth sense, transforming raw environmental data into actionable insights. It’s not about collecting information. It’s about understanding it.
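One common way to blend two noisy readings of the same quantity is inverse-variance weighting, where the more trustworthy sensor gets the bigger vote. The sketch below assumes two independent range estimates; the function name `fuse` and the camera and LiDAR numbers are made up for illustration.

```python
def fuse(est_a: float, var_a: float, est_b: float, var_b: float) -> tuple[float, float]:
    """Inverse-variance weighted fusion of two independent estimates.

    Returns the fused estimate and its (smaller) variance.
    """
    weight_a = var_b / (var_a + var_b)            # low variance in A means high weight for A
    fused = weight_a * est_a + (1.0 - weight_a) * est_b
    fused_var = (var_a * var_b) / (var_a + var_b)
    return fused, fused_var


# Hypothetical readings: camera says 2.10 m (noisy), LiDAR says 2.00 m (precise).
distance, variance = fuse(2.10, 0.04, 2.00, 0.01)
```

Here the fused distance lands at 2.02 m, much closer to the LiDAR value, and the fused variance (0.008) is lower than either sensor's on its own. That's the practical payoff of fusion: the combined estimate is more certain than any single input.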

Feedback Loops: The Neural Network of Robotic Systems

Ever wondered how robots don’t totally wipe out when maneuvering complex environments?

Feedback loops are basically the robot’s built-in survival instinct, constantly checking their sensors and making split-second adjustments to avoid crashing into walls or tripping over unexpected obstacles.

Think of these systems like a super-smart GPS that not only tells you where to go, but instantly recalculates when you take a wrong turn — except in this case, the “wrong turn” could mean a multi-million dollar machine face-planting into concrete.

Neural networks enable robots to transform these feedback loops into adaptive learning experiences, allowing them to evolve and improve their movement strategies with each interaction.

Sensing Environmental Changes

How do robots actually know what’s happening around them? Sensing environmental changes isn’t magic. It’s engineering. Your average robot is basically a sensor-packed detective, constantly gathering intel about its surroundings.

These mechanical brainiacs track their environment through three critical methods:

- Camera vision that processes spatial data in milliseconds

- LiDAR scanning that creates precise 3D landscape maps

- Algorithmic decision-making that transforms raw sensor inputs into intelligent movements

When a robot encounters an obstacle, its control system doesn’t just stop. It calculates, adapts, and reroutes faster than you can blink. Proprioceptive sensors such as wheel encoders and inertial measurement units track the robot’s own motion, so it can continuously recalibrate its movements while the outward-facing sensors maintain environmental awareness.

Think of it like a GPS that doesn’t just tell you where to go, but actively navigates around traffic, construction, and unexpected challenges. The result? A robot that’s more responsive and agile than most humans could ever imagine.
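Robots also keep track of their own motion, not just the world around them. As one hedged illustration of proprioceptive sensing, a two-wheeled (differential-drive) robot can estimate its pose from how far each wheel has rolled. The function name, its parameters, and the robot geometry are assumptions for this sketch.

```python
import math


def update_pose(x: float, y: float, theta: float,
                d_left: float, d_right: float, wheel_base: float):
    """Dead-reckoning pose update from wheel travel distances (metres).

    theta is the heading in radians; wheel_base is the distance between wheels.
    """
    d_center = (d_left + d_right) / 2.0           # distance travelled by the robot centre
    d_theta = (d_right - d_left) / wheel_base     # change in heading
    # Use the mid-step heading for a slightly better position estimate.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    return x, y, theta + d_theta
```

Equal wheel distances move the robot straight ahead, while equal and opposite distances spin it in place. Small encoder errors add up over time, which is one reason dead reckoning is usually combined with outward-looking sensors.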

Adaptive Control Mechanisms

The robotic brain’s secret sauce is its adaptive control mechanism: a controller, sometimes built on a neural network, that adjusts its own parameters while turning raw sensor data into lightning-fast decisions.

Think of it like a superhuman nervous system that’s constantly recalculating your every move. These mechanisms aren’t just tracking; they’re predicting and adjusting in milliseconds.

Imagine a robot zipping through a warehouse, dodging shelves and workers without breaking a sweat—that’s adaptive control in action. Sensors feed real-time information into complex algorithms that instantly recalibrate speed, direction, and trajectory.

It’s like having a microscopic strategist inside the machine, making split-second choices that keep the robot stable and precise. Humanoid robots like Figure 01 demonstrate how advanced control systems enable machines to navigate complex environments with unprecedented precision and adaptability.

Want to know how robots navigate chaotic environments? It’s all about those feedback loops, baby—the neural network that turns cold machinery into something wickedly smart.

Corrective System Responses

While robots might seem like precision machines that never miss a beat, they’re actually constantly course-correcting—just like you’d adjust your steering when a squirrel darts across the road.

These mechanical marvels rely on feedback loops that enable rapid adjustments through their control law, the rule that maps measured error to actuator commands. How do they nail such precision? Consider these core mechanisms:

- Real-time sensor monitoring

- Instantaneous computational analysis

- Immediate corrective responses

PID controllers become the robot’s internal GPS, constantly comparing desired versus actual positions and making micro-adjustments faster than you can blink.

When unexpected obstacles or environmental shifts occur, these systems don’t just freeze—they adapt, recalculate, and keep moving with an almost uncanny intelligence.

It’s less about rigid programming and more about intelligent, dynamic problem-solving that makes robots feel eerily alive.

Real-Time Adaptive Control Mechanisms

Because robots aren’t just sci-fi fantasies anymore, real-time adaptive control mechanisms have become the secret sauce that makes modern machines think on their feet.

Imagine a robot smoothly avoiding obstacles by instantly recalculating its path—that’s these clever systems in action. Using feedback loops and smart algorithms, robots can now adjust their movements faster than you can blink.

PID controllers crunch numbers to minimize errors, while machine learning techniques help robots learn from past experiences. Want a robot that can dance through unpredictable environments?

These adaptive control mechanisms are your backstage pass. They’re like having a tiny, hyper-intelligent brain constantly tweaking movements, ensuring precision and stability.

It’s not magic—it’s just seriously cool engineering that’s turning robots from clunky machines into responsive, almost-alive technologies.

Navigational Intelligence and Path Planning

Robots aren’t just wandering aimlessly anymore—they’re becoming navigation ninjas with brains that could give GPS systems a serious run for their money.

Modern robots have cracked the code of intelligent movement through some seriously cool tech:

- Sensor fusion that combines camera and LiDAR data

- Real-time SLAM mapping that tracks position dynamically

- Adaptive path planning algorithms that optimize routes instantly

Your robotic buddy isn’t just moving; it’s thinking its way through complex spaces.

Control systems now allow machines to make split-second decisions, turning potentially chaotic environments into predictable pathways.

Imagine a robot that can dodge obstacles, recalculate routes, and adjust movements faster than you can blink.

Computer vision and mapping technologies have transformed these mechanical travelers from blind wanderers into strategic navigators, making every movement a calculated dance of precision and intelligence.
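To give a feel for path planning, here's a minimal sketch that finds a shortest route on a small occupancy grid using breadth-first search. BFS is a simple stand-in chosen for illustration, not necessarily what any production planner uses; the grid, the cell encoding (0 free, 1 blocked), and the name `shortest_path` are assumptions.

```python
from collections import deque


def shortest_path(grid, start, goal):
    """Breadth-first search on a 4-connected grid. Returns a list of cells or None."""
    rows, cols = len(grid), len(grid[0])
    came_from = {start: None}          # also serves as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:    # walk back from goal to start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] == 0 and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None                        # goal unreachable


# Hypothetical map: 0 is free floor, 1 is an obstacle.
grid = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
    [0, 1, 1, 0],
]
route = shortest_path(grid, (0, 0), (3, 0))
```

The route has to squeeze through the single gap in the second row, so it runs 8 cells from start to goal. If a new obstacle shows up, the robot marks the cell as blocked and calls the planner again, which is the "recalculate routes" step in miniature.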

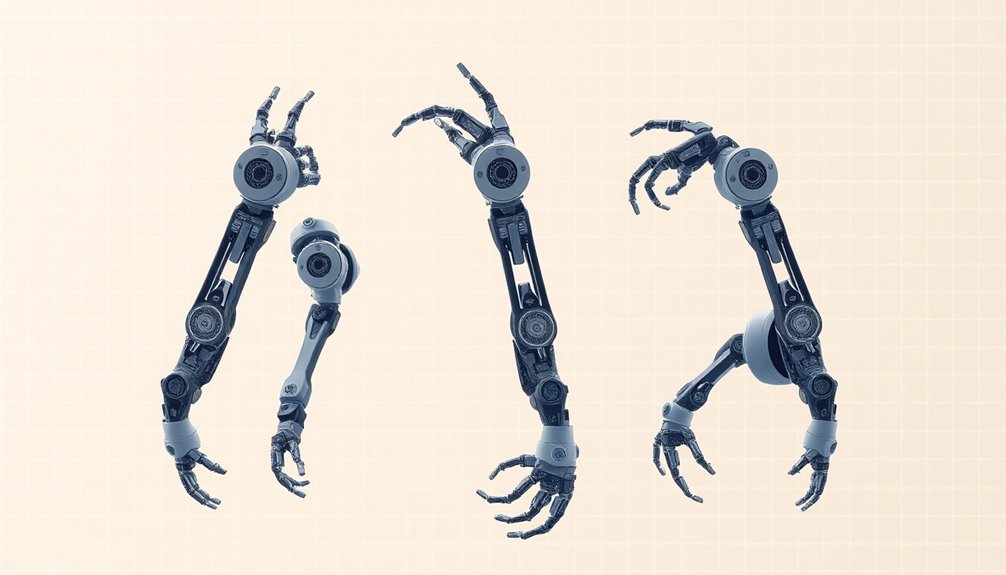

Biomimetic Approaches to Robot Coordination

Nature’s got some seriously slick design tricks, and engineers are totally stealing her playbook for robot coordination.

Biomimetic approaches borrow directly from biology. Think swarms of robots moving like ant colonies, each following simple local rules and making decisions faster than you can blink. Neural networks now mimic animal decision-making, letting robot teams solve complex problems without a boss calling the shots.

Imagine robots with muscle dynamics that move as smoothly as a cheetah, adapting to terrain like living creatures. They’re picking up sensory tricks from biological systems, responding to changes in milliseconds.

These aren’t your clunky old robots – they’re dynamic, responsive machines learning from millions of years of evolutionary design. Who knew insects could teach robots how to work together?

Welcome to the future of robotic coordination.
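How can a team agree on something with no boss calling the shots? One simple, well-known idea is consensus averaging: each robot repeatedly nudges its own value toward what its neighbors report. The sketch below is a toy version; the ring layout, the `rate` value, and the function names are assumptions.

```python
def consensus_step(values, neighbors, rate=0.3):
    """One round: each robot moves its value toward its neighbors' values."""
    return [
        v + rate * sum(values[j] - v for j in neighbors[i])
        for i, v in enumerate(values)
    ]


def run_consensus(values, neighbors, rounds=50):
    for _ in range(rounds):
        values = consensus_step(values, neighbors)
    return values


# Four hypothetical robots in a ring; each talks only to its two neighbors.
ring = [[1, 3], [0, 2], [1, 3], [0, 2]]
speeds = run_consensus([0.0, 4.0, 8.0, 2.0], ring)
```

After enough rounds every robot ends up at 3.5, the average of the starting speeds, even though no robot ever saw the whole group. The `rate` has to stay small enough for the updates to settle rather than oscillate; 0.3 works for this four-robot ring.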

Advanced Control Strategies in Autonomous Systems

You’ve heard about robots learning to think on the fly, right?

Adaptive neural network control is basically giving machines a brain that can rewire itself in real-time, letting robots adjust their movements faster than you can blink.

Imagine a robot that doesn’t just follow a pre-programmed script, but actually learns and improves its performance with every single task – that’s the wild frontier of autonomous system control.

When diving into the world of autonomous systems, advanced control strategies aren’t just fancy tech—they’re the secret sauce that transforms robots from clunky machines into intelligent problem-solvers.

Your industrial future depends on understanding these game-changing approaches:

- Machine learning integration allows robots to adapt in real-time

- Predictive control models anticipate complex environmental challenges

- Hybrid control techniques bridge traditional and cutting-edge methodologies

These applications in industry aren’t just theoretical—they’re revolutionizing manufacturing, logistics, and precision engineering.

Imagine robots that can learn from mistakes, predict potential obstacles, and seamlessly adjust their movements without human intervention.

The magic happens when sophisticated algorithms meet sensor data, creating systems that think and react almost like living organisms.

Who wouldn’t want a robot that’s smarter than your average machine?

Adaptive Neural Network Control

As robots become increasingly complex, Adaptive Neural Network Control (ANNC) emerges as the brain’s secret weapon for transforming mechanical systems from rigid followers to intelligent problem-solvers.

Imagine a robot that learns and adapts in real-world scenarios, tweaking its own performance like a savvy tech ninja. ANNC isn’t just another control system; it’s a game-changing approach that lets robots navigate unpredictable environments by constantly adjusting their strategies.

Think of it as giving robots a built-in learning algorithm that helps them improve with every movement and interaction. By leveraging neural networks, these systems can manage complex tasks that would make traditional controllers short-circuit.

From autonomous vehicles to precision robotic arms, ANNC is quietly revolutionizing how machines understand and respond to their surroundings, turning what once seemed like science fiction into today’s cutting-edge technology.

Error Correction and Dynamic System Stabilization

Robots aren’t perfect—but they’re getting scary close. Error correction is their secret sauce, turning clunky machines into precision performers. How do they pull this off? Here’s the inside scoop:

- Sensors like cameras and LiDAR act as robotic eyes, constantly scanning the environment.

- Advanced control algorithms (hello, PID controllers!) analyze tiny deviations in real-time.

- Dynamic adjustments happen faster than you can blink, keeping robots on course.

Imagine a robot maneuvering a complex warehouse, instantly correcting its path when an unexpected box appears. That’s not luck—that’s smart engineering.

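Model Predictive Control (MPC) lets robots basically predict the future. It uses a model of the robot to simulate the next few moves, picks the sequence with the lowest cost, applies the first step, and repeats, adjusting movements before problems even happen. It’s like having a tiny, hyper-intelligent guide inside each machine, making split-second decisions that keep everything running smooth and steady.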
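Here's a deliberately tiny sketch of the predictive idea: simulate every short plan with a model, score each one, apply only the first move of the best plan, then repeat. Real MPC solvers use numerical optimization rather than brute force; the one-dimensional model, the action set, and the cost weights here are all assumptions.

```python
from itertools import product

ACTIONS = (-1.0, -0.5, 0.0, 0.5, 1.0)   # candidate velocities in m/s (assumed)


def predict(x: float, u: float, dt: float) -> float:
    """Assumed motion model: position changes by velocity times time step."""
    return x + u * dt


def mpc_action(x, target, horizon=3, dt=0.1, effort_weight=0.01):
    """Try every action sequence over the horizon; return the best first action."""
    best_cost, best_first = float("inf"), 0.0
    for plan in product(ACTIONS, repeat=horizon):
        state, cost = x, 0.0
        for u in plan:
            state = predict(state, u, dt)
            cost += (state - target) ** 2 + effort_weight * u ** 2
        if cost < best_cost:
            best_cost, best_first = cost, plan[0]
    return best_first


def run(target=1.0, steps=30, dt=0.1):
    """Receding-horizon loop: re-plan at every step, apply only the first action."""
    x = 0.0
    for _ in range(steps):
        x = predict(x, mpc_action(x, target, dt=dt), dt)
    return x
```

Calling `run()` drives the simulated robot from 0 to roughly the 1.0 m target and holds it there. Re-planning at every step is the key design choice: if the world changes, the next plan already accounts for it.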

Machine Learning Integration in Control Architecture

Machine learning isn’t just a buzzword—it’s the brain boost that’s turning robots from predictable automatons into adaptive problem-solvers.

By integrating machine learning into control architecture, real robots can now learn from raw sensory data, making split-second decisions without waiting for pre-programmed instructions. Imagine a robot that adapts on the fly, like a quick-witted improviser.

Reinforcement learning techniques let these mechanical marvels optimize their behavior through trial and error, fundamentally teaching themselves how to navigate complex environments.

Simulation platforms like OpenAI Gym (now maintained as Gymnasium) provide a safe playground for these digital learners, letting them practice before hitting the real world.

The secret sauce? Clever reward engineering that guides robots toward desired behaviors, turning them from rigid machines into flexible, learning systems that can tackle challenges we can’t even predict yet.
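To see trial and error plus reward engineering in action, here's a minimal tabular Q-learning sketch on a toy corridor. It uses no simulation library; the environment, the reward values, and the hyperparameters are all assumptions picked for illustration.

```python
import random

N_STATES, GOAL = 6, 5        # corridor cells 0..5, goal at the right end
LEFT, RIGHT = 0, 1


def step(state, action):
    """Hypothetical environment: move one cell, clamped at the left wall."""
    nxt = max(0, state - 1) if action == LEFT else state + 1
    reward = 1.0 if nxt == GOAL else -0.01   # engineered reward: small cost per step
    return nxt, reward, nxt == GOAL


def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state = 0
        for _ in range(100):                 # cap episode length
            if rng.random() < epsilon:       # explore
                action = rng.choice((LEFT, RIGHT))
            else:                            # exploit current estimates
                action = max((LEFT, RIGHT), key=lambda a: q[state][a])
            nxt, reward, done = step(state, action)
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][action] += alpha * (target - q[state][action])
            state = nxt
            if done:
                break
    return q


def greedy_policy(q):
    """Best known action for each non-goal cell."""
    return [max((LEFT, RIGHT), key=lambda a: q[s][a]) for s in range(GOAL)]
```

After training, `greedy_policy(train())` picks `RIGHT` in every cell, so the agent has taught itself to head for the goal. The small per-step penalty is the reward engineering part: it nudges the agent toward short routes instead of wandering.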

Future Horizons: Emerging Robotic Control Technologies

While most people imagine robots as clunky, predictable machines, emerging control technologies are about to flip that script completely.

These emerging robotic control technologies are transforming how machines perceive and interact with the world. Your future robot companions will navigate complex environments by:

- Creating real-time maps using SLAM technology

- Learning tasks through advanced machine learning algorithms

- Adapting dynamically to unpredictable scenarios

Imagine robots that don’t just follow pre-programmed instructions, but actually learn and adjust on the fly.

Vision-based control systems are making robots more intuitive, allowing them to “see” and respond like never before.

Multi-robot coordination algorithms mean these smart machines can now collaborate seamlessly, turning what used to be science fiction into everyday reality.

The line between human and machine intelligence is blurring, and trust me, it’s going to get wild.

People Also Ask About Robots

What Are the Four Types of Control Systems Used in Robotics?

You’ll encounter four key robot control systems: open-loop (direct commands), closed-loop (with feedback), adaptive (self-adjusting), and robust (performing reliably under varied conditions), each designed to handle different robotic operational challenges and environments.
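The difference between the first two types is easy to see in a toy simulation. In this sketch a wheeled robot tries to drive 1 m while its wheels slip, something the controller doesn't know about. The slip factor, the gain, and the function name are assumptions.

```python
def drive(target, steps=200, dt=0.05, slip=0.8, closed_loop=False, kp=2.0):
    """Move a hypothetical robot toward `target` metres along a line.

    `slip` scales commanded speed to actual speed and is unknown to the controller.
    """
    position = 0.0
    nominal_speed = target / (steps * dt)    # open-loop plan assumes no slip
    for _ in range(steps):
        if closed_loop:
            command = kp * (target - position)   # feedback on measured position
        else:
            command = nominal_speed              # fixed command, no measurement
        position += slip * command * dt
    return position
```

With these numbers, `drive(1.0)` stops about 0.8 m in because the open-loop plan never notices the slip, while `drive(1.0, closed_loop=True)` ends essentially at 1.0 m because feedback keeps correcting the remaining error.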

How Does a Robot Control System Work?

Imagine a dancer gracefully adjusting steps: your robot’s control system similarly uses sensors and feedback loops. It receives real-time environmental data, processes it through algorithms, and continuously adjusts movement, ensuring precise navigation and responsive performance.

What Keeps a Robot Running?

You’ll keep a robot running through continuous feedback loops, precise sensor data, adaptive control algorithms, and strategic navigation techniques that dynamically adjust its movements, ensuring responsive and accurate performance in changing environments.

What Is Robot Motion Control?

You’ll find robot motion control guides a machine’s precise movements through strategic algorithms that manage speed, direction, and path tracking, ensuring it navigates complex environments with calculated efficiency and adaptive responsiveness.

Why This Matters in Robotics

You’re standing at the edge of a robotic revolution where control systems aren’t just circuits—they’re the brain and nervous system of intelligent machines. Imagine algorithms dancing with sensors, learning and adapting in milliseconds. Right now, robots are evolving from rigid automatons to dynamic, responsive entities that can think on their feet—or wheels. The future isn’t about replacing humans, it’s about collaborating in ways we’re just beginning to understand.