Deep reinforcement learning turns robots from clunky machines into adaptive learners. You’ll watch AI teach humanoid robots to move like athletes, processing millions of scenarios in digital playgrounds. They’ll stumble, learn, and improve faster than you’d imagine—think of a toddler on digital steroids. Neural networks help robots decode movement patterns, turning complex environments into playground challenges. Curious about how machines might outsmart human limitations? Stick around.

The Foundations of Deep Reinforcement Learning

The robot revolution starts with a brain, not just metal and circuits. Deep reinforcement learning isn’t some sci-fi fantasy; it’s how machines learn to act like adaptable problem-solvers. A neural network (the robot’s “policy”) turns sensory inputs such as joint angles, camera frames, and balance readings into motor commands. A reward signal then tells it how well each attempt went, so the policy gradually shifts toward actions that earn more reward.

Imagine a robot that learns from its mistakes, just like you would. By combining neural networks with trial-and-error strategies, these systems can navigate complex environments and make split-second decisions. They’re not following preset instructions; they’re evolving through experience.
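To make “learning from mistakes” concrete, here is a minimal sketch of trial-and-error learning using tabular Q-learning on a hypothetical five-cell corridor. To keep it small, it swaps the neural network for a lookup table. The environment, the reward of 1 at the goal, and all hyperparameters are illustrative assumptions, not a robotics setup.

```python
import random

N_STATES = 5          # hypothetical corridor cells 0..4; cell 4 is the goal
ACTIONS = (-1, +1)    # step left or step right

def step(state, action):
    """Toy environment: move along the corridor; reward 1 only at the goal."""
    nxt = min(max(state + action, 0), N_STATES - 1)
    done = nxt == N_STATES - 1
    reward = 1.0 if done else 0.0
    return nxt, reward, done

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    rng = random.Random(seed)
    # q[s][i] estimates the long-term reward of taking ACTIONS[i] in state s
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Explore with probability epsilon (or on ties), otherwise exploit
            if rng.random() < epsilon or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            nxt, reward, done = step(state, ACTIONS[a])
            # Learn from the outcome: nudge the estimate toward reward + discounted future value
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q

q_table = train()
policy = ["right" if q[1] > q[0] else "left" for q in q_table[:-1]]
print(policy)
```

Early episodes wander randomly. Each time the agent reaches the goal, credit flows backward through the table, and eventually the greedy choice in every cell is to step toward the goal.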

The magic happens when algorithms turn raw data into intelligent action. Robots can interpret high-dimensional inputs, such as camera images or dozens of joint readings, and respond with remarkable precision. Think of it as teaching a machine to think sideways, to find strategies that no engineer wrote down as hand-coded, step-by-step rules.

It’s like giving a robot intuition—the ability to improvise, learn, and transform raw potential into intelligent behavior.

Neural Networks and Robotic Decision Making

When neural networks meet robotic decision-making, magic happens—and we’re not talking about Hollywood sci-fi fantasies.

These brainy algorithms give robots something close to a learning nervous system. Multi-Layer Perceptrons (MLPs) map body-state readings such as joint angles and velocities to motor commands. Convolutional Neural Networks (CNNs) extract features from camera or depth images. Together they let robots decode intricate movement patterns like a digital choreographer.
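As a rough illustration of the MLP side, the sketch below is a tiny two-hidden-layer perceptron that maps a body-state observation to bounded joint commands. It uses only the standard library. The weights are random and untrained, and the sizes (45 observations, 12 joints, 64 hidden units) are hypothetical.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """Random weights (scaled by 1/sqrt(n_in)) and zero biases for one dense layer."""
    w = [[rng.gauss(0.0, 1.0 / math.sqrt(n_in)) for _ in range(n_in)] for _ in range(n_out)]
    b = [0.0] * n_out
    return w, b

def dense(x, layer, activation):
    """Fully connected layer: activation(W x + b), computed row by row."""
    w, b = layer
    return [activation(sum(wi * xi for wi, xi in zip(row, x)) + bi) for row, bi in zip(w, b)]

def relu(z):
    return max(0.0, z)

class MLPPolicy:
    """Observation (e.g. joint angles/velocities) -> joint commands in [-1, 1]."""
    def __init__(self, obs_dim, act_dim, hidden=64, seed=0):
        rng = random.Random(seed)
        self.layers = [init_layer(obs_dim, hidden, rng),
                       init_layer(hidden, hidden, rng),
                       init_layer(hidden, act_dim, rng)]

    def act(self, obs):
        h = dense(obs, self.layers[0], relu)
        h = dense(h, self.layers[1], relu)
        # tanh bounds each command so it can later be rescaled into joint limits
        return dense(h, self.layers[2], math.tanh)

# Hypothetical sizes: 45 proprioceptive readings in, 12 joint commands out
policy = MLPPolicy(obs_dim=45, act_dim=12)
action = policy.act([0.1] * 45)
print(len(action))
```

Training would adjust these weights using reward feedback. A CNN front end would replace the first layer when the input is an image rather than a list of readings.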

Imagine a humanoid robot learning to walk by practicing in high-fidelity simulations, tweaking its approach with each awkward step. Humanoid robotic platforms are increasingly integrating learned controllers like this to navigate complex environments with greater precision.

Neural networks help it understand not just movement but context, distinguishing efficient locomotion from energy-wasting stumbles. The secret sauce? Reward signals. Every step gets a score, and training adjusts the network so that high-scoring behavior becomes more likely, turning trial-and-error into precision.

It’s like having a coach inside the robot’s brain, constantly whispering, “You’ve got this” while nudging toward peak performance.
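In most deep RL setups, the robot isn’t trying to maximize only the next reward. It maximizes the discounted sum of all future rewards, so a fall late in an episode still lowers the value of the steps that led to it. The minimal sketch below computes that discounted return for one episode. The discount factor `gamma` and the sample rewards are illustrative assumptions.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * G_{t+1} for every step t of one episode."""
    returns = [0.0] * len(rewards)
    running = 0.0
    # Walk backward so each step reuses the already-computed future return
    for t in reversed(range(len(rewards))):
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns

# Hypothetical episode: two good steps, then a fall penalized with -1
print(discounted_returns([1.0, 1.0, -1.0], gamma=0.5))  # [1.25, 0.5, -1.0]
```

A single backward pass is linear in episode length, which is why returns are usually computed this way rather than re-summed from every step.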

Simulating Complex Environments for Robot Training

Robot training isn’t just about coding algorithms. It’s about building digital universes where machines can rack up experience far faster than real time, which is why simulation environments have become the secret laboratory of modern robotics.

By generating thousands of virtual scenarios, engineers can train humanoid robots to tackle complex challenges without risking expensive hardware. Imagine a digital playground where robots navigate impossible terrains, learn intricate movements, and adapt to wildly different conditions—all before taking their first real-world step.

Domain randomization turns these simulations into adaptive learning universes. Each training episode varies physical properties such as ground friction, body mass, and motor strength, so the robot can’t overfit to one idealized world and instead builds truly resilient skills. Think of it like a video game where each level tests slightly different skills, preparing robots to handle anything from smooth sidewalks to treacherous mountain trails.
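The sketch below shows the core of domain randomization: a fresh set of physical properties is drawn before every episode. It covers only the sampling step. The parameter names and ranges are hypothetical, and applying them to a real physics engine depends entirely on that engine’s API.

```python
import random
from dataclasses import dataclass

@dataclass
class PhysicsParams:
    friction: float        # ground friction coefficient
    mass_scale: float      # multiplier on each link's nominal mass
    motor_strength: float  # multiplier on maximum actuator torque
    push_force: float      # magnitude (N) of a random shove during the episode

# Hypothetical ranges, chosen for illustration only
RANGES = {
    "friction": (0.4, 1.2),
    "mass_scale": (0.9, 1.1),
    "motor_strength": (0.8, 1.2),
    "push_force": (0.0, 50.0),
}

def sample_params(rng: random.Random) -> PhysicsParams:
    """Draw each physical property uniformly from its range."""
    return PhysicsParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})

rng = random.Random(42)
for episode in range(3):
    params = sample_params(rng)
    # A real pipeline would push these values into the simulator before reset
    print(episode, params)
```

Uniform sampling is the simplest choice. Wider ranges make a policy more robust but usually slower to train, so picking them is a judgment call.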

Simulation isn’t just training—it’s robot evolution on hyperdrive.

Reward Structures: Guiding Robotic Behavior

If robots are going to learn how to move like graceful, intelligent machines, they need more than just lines of code—they need a motivation system that tells them exactly what good behavior looks like.

Reward structures are the robot’s internal compass, guiding it toward desired actions like a digital coach. Think of it as training a puppy, but instead of treats, you’re handing out numerical feedback that shapes robotic movement.

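Dense rewards help robots learn faster by scoring every step (How fast did you move? Did you stay upright?). Sparse rewards arrive only when a goal is reached, such as crossing a finish line. A robot rarely stumbles onto success by chance, so training on sparse rewards can feel like watching paint dry.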

The trick is designing rewards that encourage human-like walking. Typically that means a weighted combination of terms that reward forward velocity and penalize wasted power and loss of stability.
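Here is a minimal sketch contrasting the two reward styles. The dense reward scores every control step on velocity tracking, power use, and torso tilt. The sparse reward pays out only when a distance goal is reached. The `StepInfo` fields, weights, target velocity, and goal distance are all hypothetical.

```python
from dataclasses import dataclass

@dataclass
class StepInfo:
    forward_velocity: float   # m/s along the desired heading
    joint_torques: list       # N*m, one per joint
    joint_velocities: list    # rad/s, one per joint
    torso_tilt: float         # rad away from upright
    fell: bool

# Hypothetical weights; tuning these is most of the reward-design work
W_VEL, W_POWER, W_TILT, FALL_PENALTY = 1.0, 0.001, 0.5, 10.0

def dense_reward(s: StepInfo, target_velocity: float = 1.0) -> float:
    """Feedback every step: track the target speed, save power, stay upright."""
    velocity_term = -W_VEL * abs(s.forward_velocity - target_velocity)
    # Mechanical power: sum of |torque * joint velocity| across joints
    power = sum(abs(t * v) for t, v in zip(s.joint_torques, s.joint_velocities))
    reward = velocity_term - W_POWER * power - W_TILT * abs(s.torso_tilt)
    return reward - FALL_PENALTY if s.fell else reward

def sparse_reward(distance_walked: float, goal: float = 10.0) -> float:
    """Feedback only at the end: 1 if the robot reached the goal, else 0."""
    return 1.0 if distance_walked >= goal else 0.0

good = StepInfo(1.0, [10.0, -10.0], [1.0, -1.0], 0.0, False)
wobbly = StepInfo(0.2, [40.0, -40.0], [3.0, -3.0], 0.4, False)
print(dense_reward(good), dense_reward(wobbly))
```

If the weights are set badly, the robot can exploit the formula itself, for example by cutting power so aggressively that it barely moves. Odd gaits like that are what reward tuning tries to prevent.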

Get this wrong, and your robot might develop some seriously weird movement strategies that look more like a drunk zombie than a smooth, adaptive machine.

Challenges in Translating Simulated Skills to Physical Robots

Despite cutting-edge algorithms that make robots look brilliant in simulations, the real world is a cruel mistress that loves to prove software engineers wrong. Translating simulated skills to physical robots isn’t just challenging—it’s a high-stakes engineering puzzle with landmines everywhere.

- Physics doesn’t play nice: Simulation dynamics rarely match real-world chaos

- Robots struggle to generalize learned behaviors across different environments

- Actuator limitations create unexpected performance bottlenecks

- High-precision control leaves little margin for the sensor noise and delays that simulations tend to smooth over

Domain randomization helps bridge these gaps by training robots to expect the unexpected. By varying physical parameters during simulation, engineers create more robust policies that can handle real-world unpredictability.
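Randomizing physics goes hand in hand with modeling the actuator limitations from the list above, so that simulated motors misbehave a little like real ones. The sketch below is a toy actuator that delivers commands a fixed number of control steps late and clips them to a torque limit. The class and its values are hypothetical. In practice both the delay and the limit could themselves be randomized per episode.

```python
from collections import deque

class ActuatorModel:
    """Toy actuator: commands arrive `delay_steps` late and are clipped to +/- max_torque."""
    def __init__(self, max_torque: float, delay_steps: int):
        self.max_torque = max_torque
        # Pre-fill with zero torque so the first `delay_steps` outputs are zero
        self.buffer = deque([0.0] * delay_steps)

    def apply(self, commanded: float) -> float:
        self.buffer.append(commanded)
        delayed = self.buffer.popleft()
        # Saturation: the motor cannot exceed its torque limit in either direction
        return max(-self.max_torque, min(self.max_torque, delayed))

# Hypothetical values: 80 N*m limit, two control steps of latency
actuator = ActuatorModel(max_torque=80.0, delay_steps=2)
print([actuator.apply(cmd) for cmd in [100.0, 20.0, -150.0, 0.0]])  # [0.0, 0.0, 80.0, 20.0]
```

A policy trained against this model learns that its commands take effect late and are capped, rather than assuming an ideal motor.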

It’s like teaching a robot to dance not just on a perfect stage, but on shifting, uncertain ground—where one wrong move means a spectacular mechanical faceplant.

Learning Locomotion: From Simulation to Real-World Movement

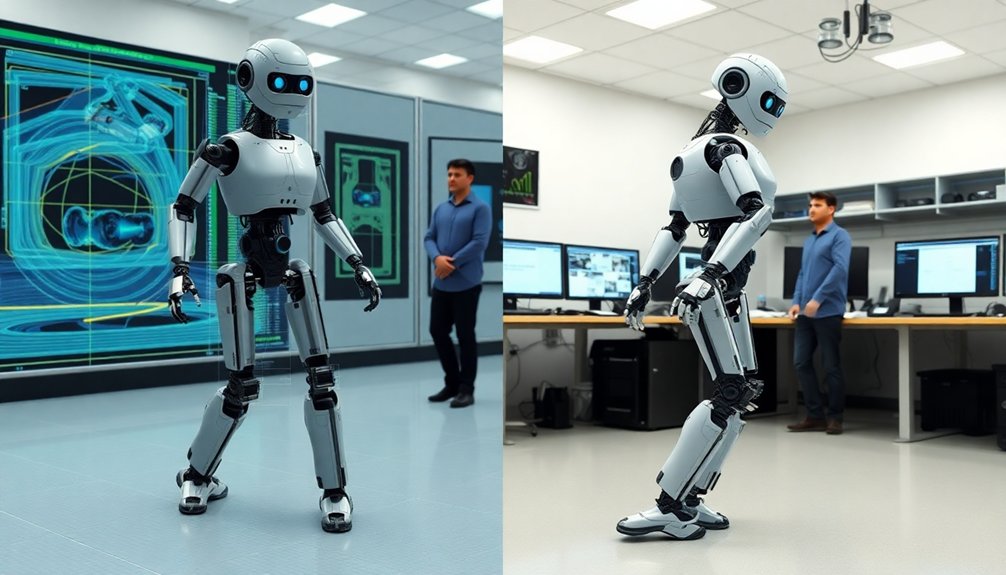

When robots learn to walk, they’re basically toddlers with titanium legs: awkward, determined, and prone to spectacular wipeouts. Proprioceptive sensors, such as joint encoders and inertial measurement units, tell the robot where its limbs are and how its body is tilting. That information helps it fine-tune its movements and keep its balance during locomotion.

Deep reinforcement learning transforms these mechanical munchkins from stumbling experiments into graceful movers. By running thousands of simulated humanoids in parallel, researchers compress decades of walking practice into mere hours. You’ll watch robots learn complex locomotion through relentless trial and error, in some cases reproducing human movement patterns with shocking precision.
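The bookkeeping behind “thousands of humanoids in parallel” can be sketched as a vectorized environment. It steps many copies in lockstep and resets any copy that falls, so every call yields one transition per copy. `ToyWalkerEnv` is a hypothetical stand-in with no real physics. This plain Python loop also runs the copies sequentially, whereas GPU-based simulators step them truly in parallel.

```python
import random

class ToyWalkerEnv:
    """Hypothetical stand-in for one simulated humanoid; episodes end on random falls."""
    def __init__(self, seed):
        self.rng = random.Random(seed)
        self.t = 0

    def reset(self):
        self.t = 0
        return 0.0

    def step(self, action):
        self.t += 1
        fell = self.rng.random() < 0.05 or self.t >= 200
        return float(self.t), 1.0, fell   # observation, reward, done

class VecEnv:
    """Steps many copies in lockstep and auto-resets any copy whose episode ended."""
    def __init__(self, num_envs):
        self.envs = [ToyWalkerEnv(seed=i) for i in range(num_envs)]
        self.obs = [env.reset() for env in self.envs]

    def step(self, actions):
        rewards, dones = [], []
        for i, (env, a) in enumerate(zip(self.envs, actions)):
            obs, r, done = env.step(a)
            # Auto-reset keeps every copy producing data on every step
            self.obs[i] = env.reset() if done else obs
            rewards.append(r)
            dones.append(done)
        return self.obs, rewards, dones

vec = VecEnv(num_envs=1024)
transitions = 0
for _ in range(100):
    _, rewards, _ = vec.step([0.0] * len(vec.envs))
    transitions += len(rewards)
print(transitions)  # 102400
```

One hundred lockstep steps across 1,024 copies already yield over a hundred thousand transitions. Scaling that rate is how simulation packs years of practice into a training run.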

Domain randomization acts like a robotic boot camp, training these mechanical athletes to adapt to wildly different terrains and physical conditions. High-frequency torque feedback, with joint controllers correcting their output many times per second, becomes a kind of muscle memory that helps bridge the gap between simulation and reality.
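Here is one way that fast feedback loop is often arranged, offered as an assumption rather than a description of any particular robot. The learned policy picks joint targets at a low rate, and a proportional-derivative (PD) loop recomputes torque from the measured angle and velocity at a much higher rate. The rates, gains, and single-joint dynamics below are hypothetical.

```python
# Hypothetical rates: the policy picks joint targets at 50 Hz, the PD loop runs at 1 kHz
POLICY_HZ = 50
PD_HZ = 1000
DECIMATION = PD_HZ // POLICY_HZ   # 20 fast torque updates per policy decision

def pd_torque(q_target, q, q_vel, kp, kd):
    """Torque that pulls a joint toward q_target while damping its velocity."""
    return kp * (q_target - q) - kd * q_vel

def run_joint(policy_targets, kp=50.0, kd=2.0, inertia=0.1):
    """Toy single joint driven by a list of policy targets (one per policy step)."""
    dt = 1.0 / PD_HZ
    q, q_vel = 0.0, 0.0
    for q_target in policy_targets:
        for _ in range(DECIMATION):
            # Fast inner loop: recompute torque from the latest angle/velocity feedback
            tau = pd_torque(q_target, q, q_vel, kp, kd)
            q_vel += (tau / inertia) * dt   # semi-implicit Euler integration
            q += q_vel * dt
    return q

final_angle = run_joint([0.5] * 50)   # one simulated second holding a 0.5 rad target
print(round(final_angle, 3))
```

Splitting the work this way lets the neural network think at a manageable rate while the inner loop absorbs small disturbances between decisions.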

The result? Robots that don’t just walk—they strut with an almost human-like confidence, turning awkward algorithms into smooth, intelligent movement.

Adaptive Strategies for Unpredictable Scenarios

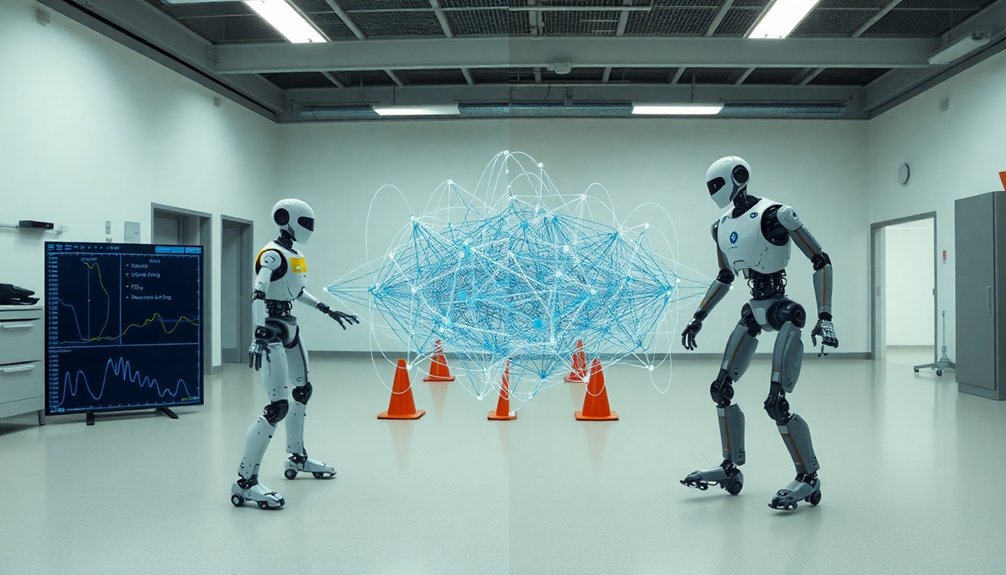

From smooth walking simulations to navigating real-world chaos, humanoid robots now face their ultimate challenge: handling the unexpected.

These adaptive strategies transform robots from predictable machines bound by programmed limits into dynamic, creative problem-solvers.

- Real humanoid robots learn like toddlers: through constant trial and epic fails

- Domain randomization builds the flexibility to move well across varied terrain and physics

- Deep reinforcement learning lets robots improvise like jazz musicians

- Unpredictable scenarios become playgrounds for robotic intelligence

Breakthrough Techniques in Humanoid Robot Control

The cutting edge of humanoid robot control isn’t just about making machines move—it’s about teaching them to think on their feet, literally. Deep Reinforcement Learning is revolutionizing how robots learn complex movements through trial-and-error simulations. You’ll be amazed how neural networks help robots adapt faster than traditional programming ever could. The development of emotional AI frameworks is pushing the boundaries of how robots can understand and respond to complex human interactions.

| Technique | Key Advantage | Performance Impact |
|---|---|---|
| Domain Randomization | Environment Adaptation | High Robustness |
| Natural Actor-Critic | Policy Optimization | Efficient Learning |
| Multi-Layer Perceptrons | Complex Decision Modeling | Precise Control |
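Natural Actor-Critic also rescales the policy gradient by the inverse of the Fisher information matrix. The sketch below deliberately omits that step and shows only the plain actor-critic idea on a hypothetical one-state, two-action problem. The critic tracks the expected reward, and the actor shifts probability toward actions that beat the critic’s estimate. With a single state, this reduces to a gradient bandit with a learned baseline. Rewards, noise level, and learning rates are assumptions.

```python
import math
import random

def softmax(prefs):
    m = max(prefs)                      # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]

def train_actor_critic(rewards=(0.2, 1.0), steps=2000, actor_lr=0.1, critic_lr=0.1, seed=0):
    rng = random.Random(seed)
    prefs = [0.0] * len(rewards)   # actor: action preferences -> softmax policy
    value = 0.0                    # critic: estimate of expected reward
    for _ in range(steps):
        probs = softmax(prefs)
        a = rng.choices(range(len(prefs)), weights=probs)[0]
        r = rewards[a] + rng.gauss(0.0, 0.1)   # noisy reward for the chosen action
        td_error = r - value                   # better or worse than the critic expected?
        value += critic_lr * td_error
        # Policy-gradient step: d log pi(a) / d prefs[b] = 1[b == a] - pi(b)
        for b in range(len(prefs)):
            prefs[b] += actor_lr * td_error * ((1.0 if b == a else 0.0) - probs[b])
    return softmax(prefs), value

probs, value = train_actor_critic()
print([round(p, 2) for p in probs], round(value, 2))
```

The critic’s estimate acts as a baseline. It doesn’t change which action the actor prefers in expectation, but it reduces the variance of each update.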

Imagine robots learning locomotion skills like toddlers, except these “kids” can work through millions of simulated scenarios in a matter of hours. By pairing advanced neural architectures with explainable AI techniques, researchers are building machines whose behavior can be interpreted, so engineers understand not just how a robot moves but why. It’s not science fiction anymore; it’s happening right now.

Performance Metrics and Success Evaluation

Measuring the success of deep reinforcement learning in humanoid robotics isn’t just about fancy graphs and percentages. You’ll want to understand how researchers actually determine whether a robot’s learned behavior is more than a cool party trick.

Performance metrics reveal the true capability of robotic systems across different complexity levels.

You’ll quickly notice that evaluating DRL isn’t straightforward:

- Success levels range from basic simulation (Level 0) to commercial product deployment (Level 5)

- Real-world adaptability depends on training diversity and policy stability

- Locomotion and navigation skills dramatically impact performance assessment

- Subjective reporting makes standardized evaluation challenging

Researchers dig deep to understand whether a robot can genuinely solve problems or just mimic pre-programmed responses.

They’re not just tracking numbers; they’re hunting for genuine intelligence that can navigate unpredictable environments with human-like flexibility and quick thinking.

Future Horizons of Intelligent Robotic Systems

You’re standing at the edge of a robotic revolution where AI isn’t just learning—it’s transforming how machines think and adapt in real-world chaos.

Imagine humanoid robots that can instantly recalibrate their strategies, almost like a street-smart kid who figures out new playground rules on the fly, powered by increasingly sophisticated deep reinforcement learning techniques.

These intelligent systems won’t just follow rigid programming; they’ll develop nuanced decision-making skills that blur the line between programmed response and genuine adaptive intelligence.

AI-Driven Robotic Evolution

While the world’s been busy debating whether robots will take over, deep reinforcement learning is quietly transforming humanoid machines from clunky metal statues into adaptive, learning creatures that can navigate complex environments like nimble athletes.

These AI-driven robots are reshaping our understanding of machine intelligence through:

- Locomotion skills that increasingly resemble human movement

- Rapid learning from thousands of parallel virtual simulations

- Adaptive behaviors across unpredictable terrains

- Autonomous problem-solving without explicit programming

Imagine robots that learn like curious children—exploring, falling, recovering, and improving with each interaction.

Deep reinforcement learning isn’t just teaching machines to move; it’s giving them the ability to understand and adapt to their surroundings.

Adaptive Machine Intelligence

When artificial intelligence decides to stop playing chess and start reinventing locomotion, something magical happens: robots transform from programmed machines into learning organisms that can literally think on their feet.

Humanoid robotics isn’t just about building cooler machines anymore—it’s about creating adaptive intelligence that learns, adjusts, and survives in unpredictable environments.

Imagine robots that don’t just follow scripts but actually understand and respond to challenges in real-time.

Deep reinforcement learning is the secret sauce making this possible, enabling machines to experiment, fail, and improve through continuous interaction.

By randomizing training scenarios and pushing computational boundaries, researchers are teaching robots to navigate complex terrains, handle unexpected obstacles, and develop something eerily close to intuition.

The future isn’t about perfect programming—it’s about creating robots smart enough to figure things out on their own.

Intelligent System Design

The future of robotics isn’t about creating perfect machines but adaptive, intelligent systems that can think and learn. That puts intelligent system design at the bleeding edge of human-machine collaboration.

Deep Reinforcement Learning (DRL) isn’t just code—it’s the brain-training gym where robots develop real-world smarts.

- Robots that gather experience from their mistakes far faster than real time

- Adaptive algorithms loosely inspired by neural plasticity

- Complex movement patterns emerging through trial-and-error

- Autonomous navigation across unpredictable terrains

You’re looking at a technological revolution where humanoid robots won’t just follow instructions—they’ll understand context, adapt dynamically, and make split-second decisions.

DRL transforms robotic systems from rigid programmable tools into flexible, learning entities.

Imagine machines that grow smarter with every interaction, bridging the gap between programmed behavior and genuine intelligence.

The future isn’t about replacing humans—it’s about creating collaborative partners who can think on their feet.

People Also Ask About Robots

How Is Reinforcement Learning Used in Robotics?

You’ll use reinforcement learning to train robots by letting them learn through trial and error, where they receive rewards for successful actions and gradually optimize their behavior to achieve complex tasks autonomously.

How Do You Explain Deep Reinforcement Learning?

Ever wondered how machines learn to make smart decisions? You’ll find deep reinforcement learning combines neural networks with reward-based learning, enabling AI agents to optimize actions through continuous trial-and-error interactions with complex environments.

Does ChatGPT Use Reinforcement Learning?

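Yes, you’ll find ChatGPT uses Reinforcement Learning from Human Feedback (RLHF). Human reviewers rank alternative responses, and a reward model learns to predict those preferences. The language model is then optimized with reinforcement learning to produce responses that the reward model scores highly, which helps it generate more natural and contextually appropriate dialogue.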
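The ranking step can be sketched with the pairwise loss widely used to train RLHF reward models: −log σ(r_chosen − r_rejected), in Bradley-Terry style. The sketch covers only that loss for a single pair. The reward scores are hypothetical numbers, and it is not a description of any specific product’s training code.

```python
import math

def preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Pairwise loss -log(sigmoid(r_chosen - r_rejected)), computed stably."""
    margin = reward_chosen - reward_rejected
    if margin >= 0:
        # -log(sigmoid(m)) = log(1 + exp(-m)); exp(-m) <= 1 here, so no overflow
        return math.log1p(math.exp(-margin))
    # For negative margins, rewrite as -m + log(1 + exp(m)) to avoid exp overflow
    return -margin + math.log1p(math.exp(margin))

print(round(preference_loss(2.0, 0.0), 4))   # small: reward model agrees with the human ranking
print(round(preference_loss(0.0, 2.0), 4))   # large: reward model disagrees
```

Minimizing this loss over many ranked pairs pushes the reward model to score preferred responses above rejected ones. That learned score then serves as the reward during the reinforcement learning stage.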

What Are the 4 Components of Reinforcement Learning?

Like a chess player strategizing moves, you can break reinforcement learning into four key components: the agent (learner), the environment (the world it acts in), actions (its choices), and rewards (feedback). Together they form a loop. The agent acts, the environment responds with a reward, and the agent improves its decisions over time.
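A minimal sketch of that loop, with each of the four components labeled, is below. The biased-coin environment and the averaging agent are hypothetical toys (there is no state, so this is a simple bandit problem). They show only how agent, environment, actions, and rewards fit together.

```python
import random

class Environment:
    """Hypothetical environment: guess which face a biased coin lands on."""
    def __init__(self, seed=0):
        self.rng = random.Random(seed)

    def step(self, action):
        outcome = "heads" if self.rng.random() < 0.7 else "tails"   # heads 70% of the time
        return 1.0 if action == outcome else 0.0                   # reward

class Agent:
    """Learner that tracks the average reward of each action and usually picks the best."""
    def __init__(self, actions, epsilon=0.1, seed=1):
        self.actions = actions
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.totals = {a: 0.0 for a in actions}
        self.counts = {a: 0 for a in actions}

    def act(self):
        untried = [a for a in self.actions if self.counts[a] == 0]
        # Try every action once, and keep exploring occasionally afterwards
        if untried or self.rng.random() < self.epsilon:
            return self.rng.choice(untried or self.actions)
        return max(self.actions, key=lambda a: self.totals[a] / self.counts[a])

    def learn(self, action, reward):
        self.totals[action] += reward
        self.counts[action] += 1

env, agent = Environment(), Agent(actions=["heads", "tails"])
for _ in range(1000):
    action = agent.act()          # action: the agent's choice
    reward = env.step(action)     # environment: responds with a reward
    agent.learn(action, reward)   # reward: feedback that updates the agent
print(max(agent.counts, key=agent.counts.get))
```

Robotics swaps in a physics simulator for the environment and a neural network for the agent, but the act, reward, learn cycle stays the same.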

Why This Matters in Robotics

You’ve seen how deep reinforcement learning is turning robots from clunky machines into adaptive learners. Think of these systems like curious kids who learn by trial and error, except they’re powered by neural networks instead of peanut butter and playground experiments. The future isn’t about perfect robots, but smart robots that can improvise, adapt, and maybe—just maybe—understand the messy complexity of our world. Buckle up: the robotic revolution is just getting started.