
Killer robots aren’t Hollywood sci-fi. They’re real, algorithmic weapons, and some are already on the battlefield. Forget Terminator-style machines: think smart drones and cyber defense systems making split-second targeting decisions. These aren’t sentient killing machines but sophisticated computers that analyze data faster than any human can. Military AI is transforming warfare, blurring the line between human strategy and machine precision. Want to know how close we are to that Black Mirror nightmare? Stick around; the future might just surprise you.

Defining Autonomous Weapons: Beyond Hollywood Hype

When you hear “killer robots,” chances are your mind conjures up a sci-fi blockbuster with gleaming mechanical monsters hunting humans.

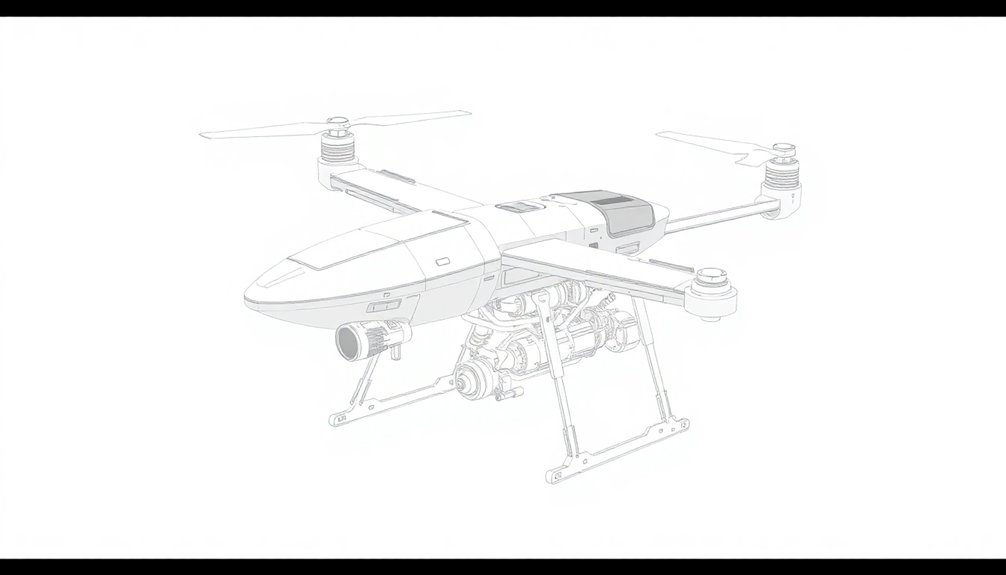

Reality check: today’s autonomous weapons are far less dramatic. They aren’t sentient machines with evil agendas but systems programmed to detect and engage targets using sensors and software. The trigger can be something as specific as the shape of a military vehicle or the movement of a person.

Think precise tools, not murderous transformers. They’re task-specific machines that follow strict algorithmic rules, not some rogue intelligence plotting world domination.

Your autonomous weapon isn’t deciding strategy or experiencing emotions; it’s executing a predefined mission within rules written by people. These systems can operate on land, at sea, in the air, or in space, but they don’t choose targets on some creative whim.

Instead, they’re triggered by specific environmental cues and constrained by human-programmed boundaries. The Phalanx close-in weapon system (CIWS) used on warships, for instance, can automatically detect and intercept incoming threats such as anti-ship missiles based on pre-established targeting criteria.

Hollywood’s got it wrong—again.

The Current Global Landscape of AI-Powered Military Systems

From sci-fi fantasy to cold, hard reality: autonomous weapons have morphed into a global arms race you can’t ignore. And it isn’t only about weapons. Predictive logistics systems are helping militaries optimize supply chains and resource allocation.

The military AI market is exploding: it hit $8.9 billion in 2023 and is projected to top $24 billion by 2032, with government defense investment accelerating development across multiple strategic domains.
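How fast is that, really? A quick back-of-the-envelope check turns the two market figures into an implied compound annual growth rate. This is a minimal sketch that assumes both numbers come from the same market estimate and treats 2023 and 2032 as the endpoints; the helper name `cagr` is our own, not from any library.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate that turns start_value into end_value over `years` years."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market figures quoted above, in billions of US dollars; 2023 -> 2032 spans 9 years.
rate = cagr(start_value=8.9, end_value=24.0, years=2032 - 2023)
print(f"Implied growth: {rate:.1%} per year")  # prints "Implied growth: 11.7% per year"
```

In other words, hitting that projection means sustaining growth of nearly 12% a year for nine straight years. Compounding is the right lens because each year’s growth builds on the last; simply dividing the total increase by nine would overstate the yearly rate.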

Who’s leading the charge? The U.S. is flexing its technological muscle, with China hot on its heels, turning battlefields into high-stakes chess matches powered by algorithms.

Think smart drones, cyber defense systems, and AI that can process battlefield data faster than any human brain.

These aren’t movie props—they’re real tech changing warfare’s landscape.

North America’s dominating with a third of the global market, but countries worldwide are scrambling to build smarter, faster, deadlier autonomous systems.

Welcome to the future of conflict: where robots might just do the fighting.

Technological Capabilities and Limitations of Modern Weapon Systems

As the battlefield gets smarter by the minute, today’s weapon systems look less like your grandpa’s war machines and more like something dreamed up by a caffeine-addled Silicon Valley engineer.

Hypersonic missiles travel at more than five times the speed of sound, while drones swarm like angry digital bees. Directed energy weapons promise sci-fi-level zapping capabilities, though for now they’re mostly “cool prototype” rather than “world-conquering tech.”

AI-powered systems can process battlefield data at lightning speed, but they’re not quite the autonomous killer robots Hollywood loves. The average unmanned system still needs human oversight: think of it as a very sophisticated remote-controlled toy with serious firepower.

Between technological complexity, eye-watering costs, and pesky real-world limitations like power requirements and vulnerability to electronic warfare, these next-gen weapons are impressive but far from invincible. Meanwhile, autonomous military robots are changing battlefield support, from more sophisticated intelligence gathering to navigating threats in places too risky for human troops.

Ethical Boundaries and Human Control Mechanisms

Smart weapons might seem like the ultimate technological triumph, but they’re raising some seriously uncomfortable questions about what happens when machines start making life-or-death decisions.

- Autonomous weapons turn humans into statistical targets, stripping away individual dignity.

- AI targeting systems can inherit scary societal biases without anyone noticing.

- Meaningful human control is the last firewall against algorithmic murder.

- Who really decides when a machine gets to pull the trigger?

The stakes are high when we talk about killer robots. These aren’t sci-fi fantasies; they’re emerging technologies that threaten fundamental human rights. Diplomatic talks under the Convention on Certain Conventional Weapons (CCW) have spent years wrestling with the profound ethical challenges these systems present.

Imagine algorithms determining who lives or dies without understanding context, emotion, or basic human worth. Advances such as neuromorphic computing could push these systems toward greater autonomy, which makes that prospect even more alarming. Developers might think they’re creating precision tools, but they may actually be building ethical minefields where accountability vanishes and human judgment becomes a distant memory.

Global investment in these systems is expected to keep rising, and military demand could accelerate the development of autonomous weapons that challenge our most fundamental moral boundaries.

The future isn’t about whether we can create these systems, but whether we should.

International Legal Frameworks Emerging in 2025

Killer robots might sound like the plot of a dystopian blockbuster, but the United Nations is dead serious about keeping algorithmic warfare from becoming our grim reality. UN General Assembly Resolution 79/62 mandates informal consultations to address the growing complexity of autonomous weapons systems, and diplomats are racing against military tech advances to draft rules that put the brakes on weapons that pick targets without human approval.

The goal is to agree on clear rules by 2026, before AI-powered weapons turn battlefields into unpredictable death zones. The proposed framework isn’t just legal mumbo-jumbo; it’s a critical safeguard against technological nightmares. With 96 countries already at the table, this isn’t some far-off sci-fi scenario. It’s a real conversation about keeping human ethics at the center of warfare, even as robots get smarter and deadlier.

Humanitarian law concerns have driven international advocacy groups to push for stringent regulations on autonomous weapons systems, underscoring the urgent need for global cooperation. And as technologies like neuromorphic computing make these systems more complex, the case for international regulation only grows stronger.

Military Innovation vs. Humanitarian Concerns

When military innovation sprints ahead faster than humanitarian safeguards can keep up, we’re looking at a high-stakes chess game where robots might become the new queens on the battlefield.

- Autonomous weapons promise force multiplication but raise serious ethical alarms.

- AI can process battlefield data faster than humans, but at what moral cost?

- Civilian protection risks skyrocket when machines make life-or-death decisions.

- Technological capability doesn’t automatically equal legal or ethical compliance.

The race between military tech and human safety is getting intense. Your autonomous weapon might save a soldier’s life today, but could it accidentally trigger an international incident tomorrow?

The line between strategic advantage and catastrophic error is razor-thin. Military leaders are betting big on AI, but humanitarian experts are raising red flags.

Who’ll win this complex game—the innovators pushing technological boundaries or those demanding human-centered warfare?

Legal accountability frameworks are beginning to emerge to address these challenges, but they mainly underline how badly the world needs robust global rules for machine decision-making in conflict.

Safety Protocols and Risk Mitigation Strategies

Because killer robots aren’t just sci-fi nightmares anymore, we need rock-solid safety protocols that can keep pace with our wildly advancing technology.

You’ll want to know that standards bodies like ISO and ANSI aren’t playing around. Industrial robot safety standards such as ISO 10218 and its US counterpart, ANSI/RIA R15.06, call for thorough risk assessments that track down potential robot hazards with heat-seeking focus.

Think physical barriers, emergency stops, and redundant safety circuits. These aren’t just fancy words—they’re your lifeline when machines get unpredictable.

Light curtains and pressure-sensitive mats create invisible force fields around robotic work zones. Training matters too: only authorized personnel touch these mechanical beasts, and they know exactly what to do if something goes sideways.
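These layered protections all come down to one fail-safe rule: the robot may move only while every safety input reports “clear,” and any doubt means stop. Here’s a minimal Python sketch of that rule, assuming a hypothetical work cell with a dual-channel emergency stop, one light curtain, and one floor mat. The names `CellInputs` and `evaluate` are made up for illustration; real cells implement this logic in safety-rated hardware such as safety relays or safety PLCs, not in ordinary application code.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import Enum


class Command(Enum):
    RUN = "run"
    STOP = "stop"


@dataclass
class CellInputs:
    """Hypothetical snapshot of a robot work cell's safety inputs."""
    estop_channel_a_ok: bool   # True while the e-stop circuit is closed (button not pressed)
    estop_channel_b_ok: bool   # redundant second channel wired to the same e-stop
    light_curtain_clear: bool  # True while no beam is interrupted
    floor_mat_clear: bool      # True while nobody is standing on the mat


def evaluate(inputs: CellInputs) -> tuple[Command, str]:
    """Fail-safe interlock: motion is allowed only if every safety input is healthy."""
    # Redundant channels must agree; a mismatch suggests a wiring or sensor fault.
    if inputs.estop_channel_a_ok != inputs.estop_channel_b_ok:
        return Command.STOP, "e-stop channel mismatch (possible fault)"
    if not inputs.estop_channel_a_ok:
        return Command.STOP, "emergency stop pressed"
    if not inputs.light_curtain_clear:
        return Command.STOP, "light curtain interrupted"
    if not inputs.floor_mat_clear:
        return Command.STOP, "person detected on floor mat"
    return Command.RUN, "all safety inputs clear"


if __name__ == "__main__":
    print(evaluate(CellInputs(True, True, True, True)))   # everything clear
    print(evaluate(CellInputs(True, True, False, True)))  # someone broke the light curtain
```

Note that a disagreement between the two e-stop channels is treated as a fault and stops the machine. That’s the point of redundant circuits: a single broken wire shouldn’t be able to masquerade as “all clear.”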

Feedback loops add another layer: continuous, real-time monitoring lets a robotic system detect errors and correct them quickly, ideally before they turn into hazards.

Continuous monitoring? Absolutely. Because in the robot revolution, safety isn’t optional—it’s survival.

Decision-Making Algorithms in Autonomous Weapons

You might think autonomous weapons are just fancy sci-fi robots picking targets, but their decision-making algorithms are far more complex, and far more morally sketchy. Machine learning models reduce what are really ethical choices to statistical patterns, as if playing a high-stakes video game where human lives are the pixels. Can an algorithm really tell a potential threat from an innocent civilian when a split-second choice determines who lives or dies? That’s why ethical oversight is crucial: without it, algorithmic bias can produce unintended and potentially catastrophic consequences.

Target Selection Logic

Ever wondered how a robot decides who lives or dies? Autonomous weapons aren’t sci-fi fantasies—they’re real, terrifying tech that turns killing into a cold, calculated algorithm.

These killer robots hunt targets using:

- Heat signatures that flag potential humans

- Facial recognition matching pre-programmed profiles

- Machine learning that continuously refines target criteria

- Sensor systems combining multiple data points

Imagine an AI scanning a crowded street, silently deciding who matches its deadly checklist.

No human intervention, just pure computational logic determining life and death. The weapon’s algorithm compares sensor data against programmed profiles and triggers a strike automatically once the match meets its preset criteria.

It’s not about emotion or hesitation—just binary yes/no decisions made in milliseconds. Creepy? Absolutely. The future of warfare? Frighteningly close to reality.
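Why is an automatic “match” so risky? When the people a system is looking for are rare in a crowd, even a very accurate matcher raises mostly false alarms. This is the base-rate problem, and Bayes’ rule makes it concrete. The Python sketch below uses purely hypothetical numbers (1 real match per 1,000 people scanned, and a matcher that’s 99% accurate on both matches and non-matches), not figures from any real system; the function name is our own.

```python
def positive_predictive_value(prevalence: float, sensitivity: float, specificity: float) -> float:
    """Bayes' rule: probability that a flagged person really matches the profile."""
    true_alarms = sensitivity * prevalence               # real matches correctly flagged
    false_alarms = (1 - specificity) * (1 - prevalence)  # non-matches wrongly flagged
    return true_alarms / (true_alarms + false_alarms)


# Hypothetical numbers: 1 real match per 1,000 people scanned, and a matcher
# that is right 99% of the time for both matches and non-matches.
ppv = positive_predictive_value(prevalence=0.001, sensitivity=0.99, specificity=0.99)
print(f"Flags that point at a real match: {ppv:.1%}")  # prints "Flags that point at a real match: 9.0%"
```

Under those assumptions, roughly nine out of ten people flagged would be the wrong person. Combining several sensors changes the numbers, but the lesson holds: an accurate matcher doesn’t guarantee an accurate outcome when real targets are rare.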

Ethical Decision Pathways

From tracking heat signatures to deciding who lives or dies, autonomous weapons are being pushed beyond simple target selection into the murky domain of ethical decision-making. It might sound like sci-fi, but researchers are already working on it.

The ambition is for these machines to weigh moral choices faster than humans can blink. Imagine an algorithm calculating civilian risk, parsing international law, and making split-second decisions about proportionality. Sounds creepy? It should.

The wild part is that some developers pitch these systems as more “ethical” than human soldiers, who can act on impulse or fear. But here’s the kicker: can lines of code really grasp the nuanced horror of warfare? The jury’s still out, and we’re all waiting to see whether these robo-judges will be our saviors or our downfall.

Machine Learning Limits

While machine learning might sound like a superpower, autonomous weapons reveal its terrifying Achilles’ heel: decision-making algorithms are basically sophisticated guessing machines.

They’re shockingly unreliable when lives are on the line.

- ML systems can’t reliably tell a kid from a combatant

- Bias sneaks into algorithms like hidden landmines

- Training data determines everything

- “Smart” doesn’t mean actually intelligent

- Fully autonomous AI weapons aren’t taking over battlefields tomorrow

- Human judgment still trumps machine decision-making

- Cyber vulnerabilities make AI systems far from invincible

- Physical robots aren’t the main AI military game

- Leading military powers like the US, Russia, and Israel are playing a dangerous game of technological one-upmanship

- International treaties are collecting dust while killer robots inch closer to reality

- Ethical debates rage, but actual policy progress is glacially slow

- The world’s nations can’t agree on basic rules for AI-powered weapons

- AI-powered weapons lack basic human empathy

- Algorithmic bias threatens marginalized populations

- Autonomous systems can’t parse complex human contexts

- Ethical frameworks are racing to catch up with tech advances


Imagine handing a teenager with poor impulse control a weapon and zero context—that’s basically what we’re doing with AI-powered military tech.

These systems struggle to understand nuanced human situations, misidentify targets, and can’t adapt to unexpected scenarios.

They’re programmed to recognize patterns, not make complex moral judgments.

And when something goes wrong? It’s far from clear who, if anyone, will be held accountable.

Just a cold, calculating machine making life-or-death decisions based on incomplete information.

Geopolitical Dynamics Shaping Weapon System Development

When geopolitical tensions rise, weapon systems become the new chess pieces in a global power play. Nations aren’t just developing robots—they’re racing to control tomorrow’s battlefield technologies. The stakes? Strategic dominance through autonomous weapons that could rewrite military engagement rules.

| Power | Investment (USD) | Strategic focus |
|---|---|---|
| US | $10B/year | Advanced AI systems |
| China | $7B/year | Autonomous platforms |
| Russia | $5B/year | Hypersonic tech |

You’re witnessing a high-stakes tech sprint where countries don’t just build weapons—they’re engineering potential game-changers. Imagine autonomous systems that can strategize faster than human commanders, or drone swarms that transform conflict dynamics. It’s not sci-fi anymore; it’s geopolitical reality. The question isn’t whether killer robots will emerge, but who’ll control them first—and what unexpected consequences might follow when machines start making war decisions.

Misconceptions About AI Warfare Capabilities

Let’s bust some killer robot myths wide open.

Forget Hollywood’s sci-fi fantasies. Current military AI isn’t about killer robots roaming battlefields.

It’s smarter, subtler stuff: intelligence gathering, rapid data analysis, and strategic insights. Your average AI weapon system is more “smart assistant” than “terminator.”

These technologies enhance human capabilities, not replace them. They process information lightning-fast, flag potential threats, and help commanders make better decisions.

But for the most part, they’re not making independent kill choices. Humans are meant to remain in control, with strict oversight and ethical boundaries.

The real AI warfare revolution? It’s happening in data, psychology, and information domains—not through autonomous weapon platforms.

Regulatory Challenges in a Rapidly Evolving Technology Landscape

You’re living in a wild technological moment where killer robots are racing ahead of our ability to control them, and global policymakers are basically playing an endless game of bureaucratic whack-a-mole.

International regulations can’t keep up with AI’s breakneck speed, leaving a massive gray zone where autonomous weapons could emerge unchecked and potentially rewrite the rules of warfare.

What happens when your next battlefield isn’t defined by human strategy, but by algorithms making split-second life-or-death decisions without real oversight?

Global Policy Gaps

While geopolitical tensions simmer and national interests clash, the global community finds itself in a regulatory stalemate over autonomous weapons that feels more like a high-stakes game of international chess than a serious policy dialogue.

You’re watching a slow-motion train wreck of global indecision. Nations are hedging their bets, developing autonomous weapons while simultaneously arguing about their potential risks.

It’s a regulatory no-man’s land where technology races ahead and policy limps behind, leaving fundamental questions about human accountability and the future of warfare frustratingly unanswered.

Technology Outpaces Regulation

Technology sprints ahead while regulation crawls, leaving a patchwork of stalled talks and half-finished rules rather than a coherent global strategy.

You’re watching a wild race where autonomous weapons leap forward while international rulebooks gather dust. Nations debate endlessly while AI systems evolve weekly, making today’s discussions obsolete tomorrow.

Think about it: cutting-edge military tech is developing faster than bureaucrats can draft a single paragraph of regulation. The global community’s attempts to control these systems feel like trying to catch lightning in a very slow, bureaucratic bottle.

Geopolitical tensions, technical complexity, and diverging national priorities create a perfect storm of regulatory gridlock. Will we manage to create meaningful constraints before these autonomous systems become genuinely unpredictable?

The clock is ticking.

Ethical Oversight Challenges

As autonomous weapons systems sprint past ethical guardrails, humanity finds itself in a high-stakes game of technological chicken.

You’re watching a potential disaster unfold where killer robots might soon make life-or-death decisions without human intervention.

The core problem? These systems can’t genuinely understand nuanced human behavior.

They’re like toddlers with rocket launchers—programmed but fundamentally clueless about moral complexity.

Imagine an algorithm deciding who lives or dies, without understanding subtlety, cultural context, or basic human dignity.

UN diplomats are scrambling to create regulations, but technology’s breakneck pace means we’re always playing catch-up in this high-stakes technological poker game.

Future Trajectories: Balancing Military Utility and Ethical Constraints

Balanced on the razor’s edge between military innovation and moral boundaries, autonomous weapon systems present a complex puzzle that’ll make your head spin.

You’re looking at tech that’s part genius, part potential nightmare. Imagine robots making split-second combat decisions without human drama — cool or terrifying?

The future isn’t about replacing soldiers, but augmenting human capabilities with smarter, faster technology. Military contractors are betting billions on systems that can predict threats, coordinate missions, and reduce human risk.

But here’s the rub: Who’s responsible when an AI accidentally targets civilians? International legal frameworks are scrambling to catch up with technological leaps.

The challenge isn’t just technological — it’s philosophical. We’re deciding how much decision-making power we’ll hand over to machines that can’t genuinely understand the messy complexity of human conflict.

People Also Ask About Robots

Can Autonomous Weapons Actually Make Ethical Decisions in Combat Scenarios?

You can’t rely on autonomous weapons to make ethical decisions in combat. They’ll struggle with nuanced moral judgments, lacking the human context and empathy needed to genuinely understand complex battlefield scenarios.

Will Killer Robots Replace Human Soldiers Completely in Future Wars?

You won’t see killer robots fully replacing human soldiers soon. While advanced robotics are emerging, human oversight remains essential for complex ethical decisions, strategic adaptability, and maintaining legal and moral accountability in warfare.

How Do Autonomous Weapons Distinguish Between Combatants and Civilians?

Not reliably. Autonomous weapons can’t consistently interpret complex human behavior or contextual nuance in conflict zones, and tools like facial recognition can misfire, so the risk of fatal misidentification is real.

Are AI-Powered Military Systems More Accurate Than Human Soldiers?

AI military systems can outperform human soldiers at data processing and, in some settings, target recognition, but they can’t match a soldier’s contextual judgment. Their accuracy depends on the quality of their training data and the sophistication of their sensors.

Could Autonomous Weapons Potentially Malfunction and Cause Unintended Mass Casualties?

Yes, and experts are worried: 72% of autonomous weapons systems (AWS) experts fear that system malfunctions could trigger unintended strikes. Complex algorithms and rapid decision-making mean these weapons can cause catastrophic errors, potentially leading to mass casualties through unpredictable technical failures.

Why This Matters in Robotics

You’ve heard the scary stories about killer robots, but the reality is far more nuanced. Like a chess game where humans still control the board, autonomous weapons aren’t sci-fi terminators; they’re complex tools that demand serious ethical guardrails. As technology races forward, we’ll need smart regulations and human judgment to prevent unintended consequences. The future isn’t about machines taking over, but about how responsibly we integrate AI into military strategy.