Imagine robots that can actually feel what they touch, not just grab stuff. Soft sensors and machine learning are teaching robots to sense texture, pressure, and material differences—almost like they’ve got superpowered fingertips. They’re learning to distinguish silk from sandpaper, handle fragile objects, and even recognize emotional nuances through touch. These aren’t your grandpa’s clunky machines anymore; they’re becoming sensitive collaborators that can adapt and respond in real-time. Curious about how deep this rabbit hole goes?

The Science Behind Tactile Sensing

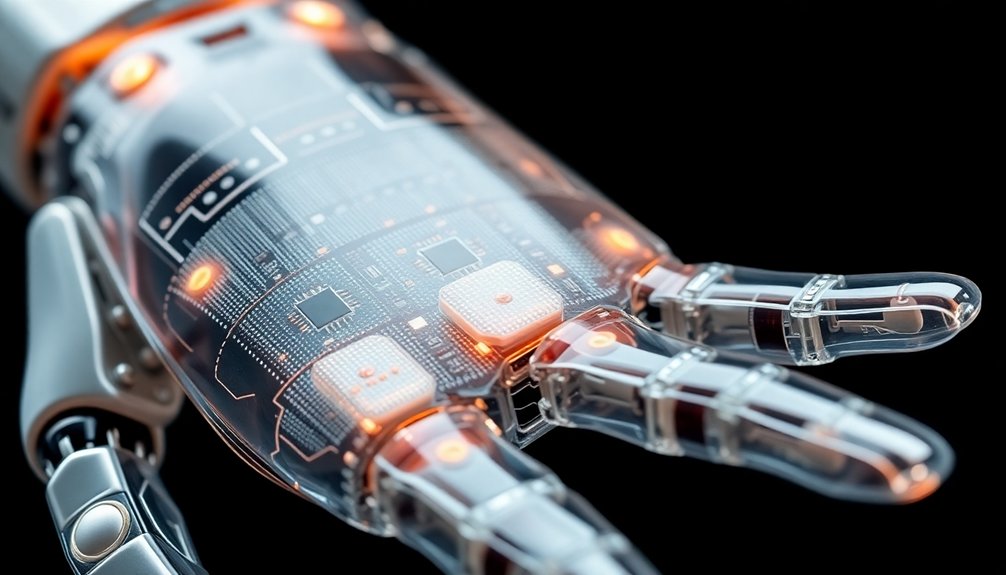

Imagine touch as the secret sauce of robotic intelligence. Tactile sensing isn’t just about feeling—it’s about understanding. Robotic systems are learning to decode surfaces like high-tech detectives, using machine learning algorithms that transform raw sensor data into meaningful insights.

Think of it like giving robots superhuman perception: they can now recognize material types through the triboelectric effect and map surface textures with incredible precision. CNNs (convolutional neural networks) are the brainy translators, turning complex touch signals into actionable knowledge with up to 97% accuracy.

Want to know how rough a surface is? These smart sensors can give you a quick, repeatable measurement instead of a fingertip’s best guess. It’s not science fiction anymore; it’s cutting-edge robotics turning touch into a superpower.

Developing Soft Sensors for Robotic Interaction

While traditional robotics treated touch like a binary on/off switch, soft sensors are rewriting the rulebook of mechanical perception.

These cutting-edge tactile technologies blend triboelectric and magnetoelastic effects, giving robots superhuman sensing capabilities. Imagine a robot that can tell silk from sandpaper before it even makes contact. That’s the magic of soft sensors in robotic interaction.

By generating electrical signals during contact and non-contact interactions, these sensors let robots “feel” their environment with unprecedented precision. A neural network interprets these signals, achieving a mind-blowing 97% accuracy in identifying object properties.

The result? Robots that can autonomously grip, sort, and assess materials without constant human micromanagement. Machine learning algorithms are now enabling robots to develop increasingly sophisticated perceptual abilities beyond traditional sensory limitations.

The future isn’t just about robots that move—it’s about robots that truly understand their world through touch.

Machine Learning and Signal Interpretation

You’ve probably wondered how robots actually “feel” what they’re touching, right?

Turns out, neural networks are the secret sauce that translates raw sensor signals into meaningful insights, letting machines decode tactile information like digital mind readers.

Neural Network Decoding

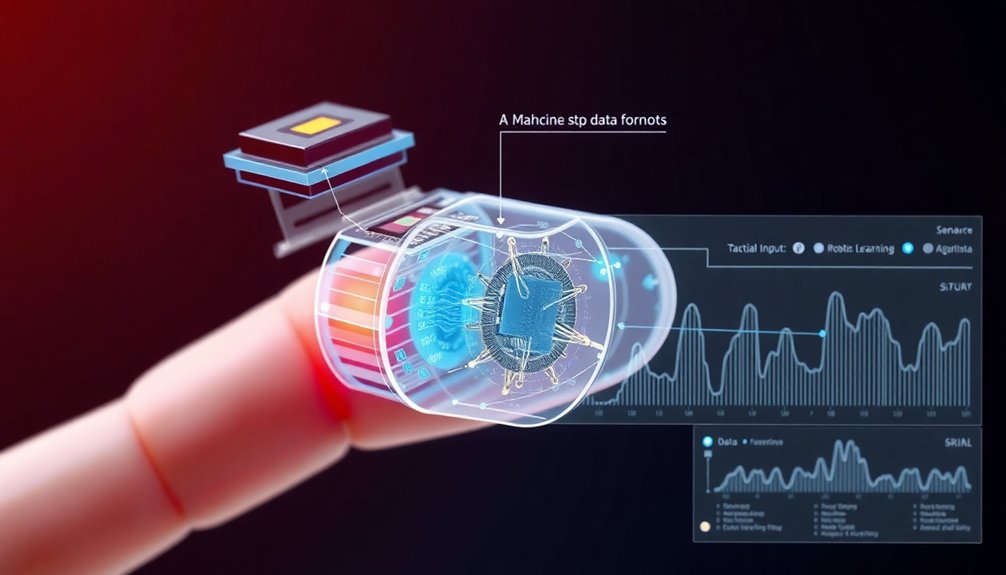

Machine learning has transformed how robots understand touch, and neural network decoding is where that leap in robotic perception actually happens.

You’ll be amazed at how these systems work:

- High-bandwidth tactile sensors capture intricate contact patterns

- Convolutional neural networks analyze raw signal data

- Machine learning algorithms correlate touch signatures with object properties

- Robots learn to differentiate materials, shapes, and textures with unprecedented accuracy
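To make the “convolutional” step a little less magical, here’s a toy sketch in plain Python. It is not the network from any specific system: the kernel is hand-picked (a real CNN learns its kernels from data), and the two sample signals are made up for illustration.

```python
def conv1d(signal, kernel):
    """Slide the kernel along the signal (no padding).

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it is a cross-correlation.
    """
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    """Keep positive responses, zero out the rest."""
    return [max(0.0, v) for v in values]


def max_pool(values, size):
    """Keep the strongest response in each non-overlapping window."""
    return [max(values[i:i + size]) for i in range(0, len(values) - size + 1, size)]


# Hypothetical hand-picked kernel that responds to rising edges.
EDGE_KERNEL = [-1.0, 1.0]


def texture_features(signal):
    """One conv -> ReLU -> pool stage, the basic building block of a CNN."""
    return max_pool(relu(conv1d(signal, EDGE_KERNEL)), 2)


# Made-up pressure traces from dragging a fingertip across a surface.
smooth = [0.5, 0.5, 0.5, 0.5, 0.5, 0.5, 0.5]
rough = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0]

print(texture_features(smooth))  # flat surface: no edge response
print(texture_features(rough))   # bumpy surface: strong edge response
```

A real network stacks many of these stages and learns the kernels during training; the point here is only that “analyzing raw signal data” boils down to sliding small filters over the signal and keeping the strongest responses.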

Imagine a robot feeling its way through a complex environment, interpreting subtle touch variations like a digital fingertip detective.

The neural network doesn’t just process information; it learns, adapts, and refines its understanding with each interaction.

It’s not just about collecting data—it’s about transforming raw sensory input into meaningful insights that allow robots to interact with the world more intelligently and intuitively.

Who knew robots could develop such a nuanced sense of touch?

Signal Processing Techniques

Signal processing techniques are the secret sauce that transforms raw tactile data into robotic intelligence, turning sensor inputs from a jumble of electrical noise into meaningful insights. These algorithms, inspired by human perception, decode complex touch interactions, allowing robots to understand object properties like never before.

| Sensor Input | Processing Method | Outcome |
|---|---|---|
| Tactile Data | Neural Networks | Object Recognition |
| Contact Patterns | Machine Learning | Texture Assessment |
| Electrical Signals | Signal Correlation | Material Prediction |

You’re witnessing the evolution of robotic touch – where machines don’t just sense, but truly comprehend. By correlating intricate signal patterns, these sophisticated techniques enable robots to interpret hardness, shape, and texture with near-human precision. Who knew electrical impulses could translate into such nuanced understanding? Tactile sensors are no longer just collecting data; they’re telling stories about the physical world, one touch at a time.
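For a feel of what “signal correlation” leading to “material prediction” could look like, here’s a minimal sketch. It assumes each material has a stored reference trace and simply picks the one that correlates best with the new signal. The template values and the `predict_material` helper are invented for illustration, not taken from a real sensor.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length signals."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    da = [x - mean_a for x in a]
    db = [y - mean_b for y in b]
    norm = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    # A perfectly flat signal has no variance, so treat it as uncorrelated.
    return sum(x * y for x, y in zip(da, db)) / norm if norm else 0.0


# Hypothetical reference signatures, one per known material.
TEMPLATES = {
    "silk": [0.1, 0.2, 0.3, 0.2, 0.1, 0.2],
    "sandpaper": [0.9, 0.1, 0.8, 0.2, 0.9, 0.1],
}


def predict_material(signal):
    """Return the material whose template correlates best with the signal."""
    return max(TEMPLATES, key=lambda name: pearson(signal, TEMPLATES[name]))


print(predict_material([0.8, 0.2, 0.7, 0.3, 0.8, 0.2]))
```

Correlation ignores overall signal strength and offset, which is handy when the same material is touched a little harder or softer than the reference.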

Sensory Data Interpretation

When machines start learning how touch works, they’re not just collecting data—they’re decoding an entire sensory language. Your robotic pals are getting smarter through tactile sensors and machine learning, turning raw sensations into meaningful insights:

- CNNs crunch incoming signals like linguistic code, identifying object properties with up to 97% accuracy.

- Robots train by interacting with known objects, building an encyclopedia of touch signatures they can look things up in.

- Algorithmic processing transforms tactile interactions into actionable intelligence.

- Adaptive feedback loops let machines adjust responses based on what they’ve “felt.”
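Here’s one way the “train on known objects, then look things up” idea could be sketched. To keep it short, a nearest-centroid classifier stands in for the CNN, and the two-number feature vectors (think hardness and roughness) are made up. `TouchLibrary` is a hypothetical name, not a real library.

```python
import math
from collections import defaultdict


class TouchLibrary:
    """Learns an average touch signature per object, then matches new touches."""

    def __init__(self):
        self._sums = {}                   # label -> running sum of feature vectors
        self._counts = defaultdict(int)   # label -> number of touches seen

    def learn(self, label, features):
        """Record one touch of a known object."""
        sums = self._sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            sums[i] += value
        self._counts[label] += 1

    def centroid(self, label):
        """Average feature vector for everything learned under this label."""
        n = self._counts[label]
        return [s / n for s in self._sums[label]]

    def identify(self, features):
        """Return the known label whose centroid is closest to this touch."""
        return min(
            self._sums,
            key=lambda label: math.dist(features, self.centroid(label)),
        )


library = TouchLibrary()
# Made-up (hardness, roughness) readings from touching known objects.
library.learn("sponge", [0.1, 0.4])
library.learn("sponge", [0.2, 0.5])
library.learn("steel", [0.9, 0.1])
library.learn("steel", [1.0, 0.2])

print(library.identify([0.85, 0.2]))
```

Every extra `learn` call nudges the stored signature, which is a very small version of the adaptive loop described above.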

Imagine a robot that doesn’t just grab things, but understands them—texture, shape, material—like a curious child exploring the world.

These aren’t just machines anymore; they’re becoming sensory detectives, translating the language of touch into digital understanding. Deep reinforcement learning enables robots to evolve beyond simple programmed responses, transforming their sensory interactions into adaptive, intelligent experiences.

Enhancing Robotic Manipulation Capabilities

You’ve probably wondered how robots might soon handle objects with the same nuanced touch as human hands.

The evolution of sensory systems means machines are learning to perceive texture, pressure, and material properties through advanced tactile sensors that give robots a supercharged sense of “touch.”

As these adaptive interaction technologies improve, robots will shift from clumsy mechanical grabbers to precision instruments that can manipulate everything from fragile glassware to rough industrial materials with remarkable finesse.

Sensory System Evolution

As robotic technologies push beyond their clunky, predictable roots, sensory system evolution represents the quantum leap that’ll transform machines from mere programmed tools into adaptive, almost-alive collaborators. Humanoid robot technologies are pioneering advanced sensory integration across multiple industries, from manufacturing to healthcare.

Tactile sensors are rewriting the rules of what robots can do, turning them into beings with a sense of touch that goes way beyond basic programming.

Here’s how these systems are getting smarter:

- Advanced sensors now mimic human-like physical interactions with unprecedented precision

- Machine learning algorithms decode complex touch signals with reported accuracy of up to 97%

- Soft, responsive technologies like GelSight enable robots to “feel” material properties

- Real-time feedback loops allow autonomous adaptation to changing environmental conditions
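A real-time feedback loop can be surprisingly simple at its core. The sketch below tightens a grip in proportion to measured slip and stops once the object is stable. The gain, force cap, and the `simulated_slip` sensor model are all assumptions for illustration; a real gripper would read slip from its tactile sensor.

```python
def adjust_grip(force, slip, gain=0.5, max_force=5.0):
    """One control step: tighten in proportion to slip, never past max_force."""
    return min(max_force, force + gain * slip)


def grip_until_stable(read_slip, force=0.5, tolerance=0.05, max_steps=50):
    """Repeat sense -> adjust until slip falls within tolerance."""
    for _ in range(max_steps):
        slip = read_slip(force)
        if slip <= tolerance:
            break
        force = adjust_grip(force, slip)
    return force


def simulated_slip(force, needed=2.0):
    """Hypothetical stand-in for a sensor: slip shrinks as force nears `needed`."""
    return max(0.0, needed - force)


final_force = grip_until_stable(simulated_slip)
print(final_force)
```

The `max_force` cap is what keeps a loop like this from crushing fragile objects: the controller squeezes only as hard as the slip signal demands, and never beyond the limit.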

Who’d have thought robots would someday understand texture and hardness better than your average toddler?

Welcome to the future of robotic touch.

Adaptive Robotic Interaction

Imagine robots that can feel their way through complex tasks like a surgeon’s steady hand, adapting instantaneously to the subtlest changes in material and texture. Tactile sensors are revolutionizing robotic interaction, turning metal machines into hyper-intelligent touch experts. Your future robotic assistant won’t just see the world—it’ll feel it with unprecedented precision. Cognitive architectures transform these sensors into advanced learning systems that continuously refine their understanding of touch and interaction.

| Component | Detection Capability | Accuracy |
|---|---|---|
| Triboelectric sensor | Touchless Interaction | 95% |
| Magnetoelastic sensor | Material Properties | 97% |
| Convolutional neural network | Object Differentiation | 96% |

These adaptive robotic hands can now distinguish between silk and sandpaper in milliseconds, selecting tools autonomously and learning from each touch. Machine learning algorithms transform raw sensory data into sophisticated interactions, enabling robots to navigate complex environments with a sensitivity that’ll make your smartphone look like a caveman’s rock.

Environmental Challenges and Sensor Optimization

When robotic touch encounters the real world, things get messy. Tactile sensors aren’t just fancy tech—they’re delicate creatures struggling with environmental factors. Here’s the deal:

- Humidity and temperature can throw sensors into total chaos, making them as unpredictable as a teenager’s mood.

- Sensor optimization isn’t just smart; it’s survival. We’re talking materials that can handle real-world drama without breaking a sweat.

- Advanced algorithms are the bouncers, keeping environmental fluctuations from crashing the robotic party.

- Adaptive sensing means robots won’t just react—they’ll predict and adjust like weather-savvy street performers.
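One common way for an algorithm to play bouncer is baseline tracking: slowly follow the sensor’s resting value so that temperature or humidity drift gets subtracted out, but freeze the baseline during real contact. This is a minimal sketch under assumed numbers (`alpha`, `contact_threshold`, and the readings are invented), not a description of any particular sensor’s firmware.

```python
class DriftCompensator:
    """Subtracts a slowly adapting baseline from raw sensor readings."""

    def __init__(self, alpha=0.1, contact_threshold=0.2):
        self.alpha = alpha                          # how fast the baseline follows drift
        self.contact_threshold = contact_threshold  # above this, assume real contact
        self.baseline = None

    def update(self, raw):
        """Return the drift-corrected reading for one raw sample."""
        if self.baseline is None:
            self.baseline = raw  # first sample defines the resting level
        corrected = raw - self.baseline
        # Only adapt while idle, so a long touch is not absorbed as "drift".
        if abs(corrected) < self.contact_threshold:
            self.baseline += self.alpha * (raw - self.baseline)
        return corrected


comp = DriftCompensator()
# Made-up readings: the resting value creeps up as the sensor warms.
idle = [comp.update(1.0 + 0.01 * i) for i in range(51)]
touch = comp.update(2.5)  # a genuine press on top of the drifted baseline

print(max(idle), touch)
```

The idle readings stay small even though the raw value has crept up by half a unit, while the press still stands out clearly.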

Want robots that can feel without freaking out? We need sensors that are less prima donna and more resilient street fighter. The future isn’t about perfect conditions—it’s about conquering imperfection.

Future Applications in Robotics and Human Collaboration

Imagine a healthcare robot that can sense your emotional state through touch, or a manufacturing assistant that adapts its grip based on material sensitivity. Artificial intelligence is making these scenarios possible by teaching robots to “feel” in ways we never thought possible. Humanoid robots and companions are pioneering advanced tactile interactions that blur the lines between machine functionality and emotional intelligence.

Human-robot collaboration isn’t science fiction anymore—it’s happening now. Tactile sensors are bridging the gap between machine precision and human intuition.

They’re learning to distinguish between a delicate touch and a firm grip, understanding nuanced interactions that once seemed impossible. Who knew robots could become such sensitive collaborators?

People Also Ask About Robots

What Are Tactile Sensors in Robotics?

You’ll find tactile sensors are robotic devices that mimic human touch, enabling machines to perceive pressure, texture, and surface properties by converting physical interactions into electrical signals that help robots understand their environment.

What Is the Sensor That Allows the Robot to Interpret Touch and Feel Motions?

You’ll find it’s a fusion of triboelectric and magnetoelastic sensors: contact, and even near-contact, generates delicate electrical signals, and neural networks decode those signals into material properties with remarkable precision.

What Is the Difference Between Touch Sensor and Tactile Sensor?

You’ll find that a touch sensor simply detects contact, while a tactile sensor provides detailed information about pressure, texture, and shape, enabling more sophisticated interaction and nuanced sensory feedback in robotic systems.
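The difference shows up clearly in the data each one hands back. In this sketch (the class names, units, and threshold are hypothetical), a touch sensor yields a single yes/no, while a tactile sensor yields a grid of pressure values that can always be collapsed down to that yes/no, but not the other way around.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TouchReading:
    """All a touch sensor reports: contact or no contact."""
    in_contact: bool


@dataclass
class TactileReading:
    """A grid of pressure values (arbitrary units), one per sensing cell."""
    pressures: List[List[float]]

    def total_pressure(self) -> float:
        """Sum of pressure over the whole grid."""
        return sum(sum(row) for row in self.pressures)

    def contact_cells(self, threshold: float = 0.1) -> int:
        """How many cells are pressed harder than the threshold."""
        return sum(1 for row in self.pressures for p in row if p > threshold)

    def as_touch(self, threshold: float = 0.1) -> TouchReading:
        """Throw away the detail and keep only the contact flag."""
        return TouchReading(in_contact=self.contact_cells(threshold) > 0)


reading = TactileReading([[0.0, 0.5], [0.0, 1.5]])
print(reading.total_pressure(), reading.contact_cells(), reading.as_touch())
```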

How Do Robots Feel Touch?

You’ll find robots sense touch through internal force-torque sensors, which turn physical contact into signals that machine-learned models interpret, so the machine can adapt its response.

Why This Matters in Robotics

We’ve built robots that can feel, but let’s be real – they’re still not asking for back rubs or understanding heartbreak. These tactile sensors might revolutionize machine interaction, but they’re basically sophisticated skin with an algorithm instead of actual empathy. Will they truly understand touch, or just mimic it perfectly? The future’s looking less sci-fi drama, more precise mechanical ballet – where robots know exactly how soft is soft, without the messy human emotions.