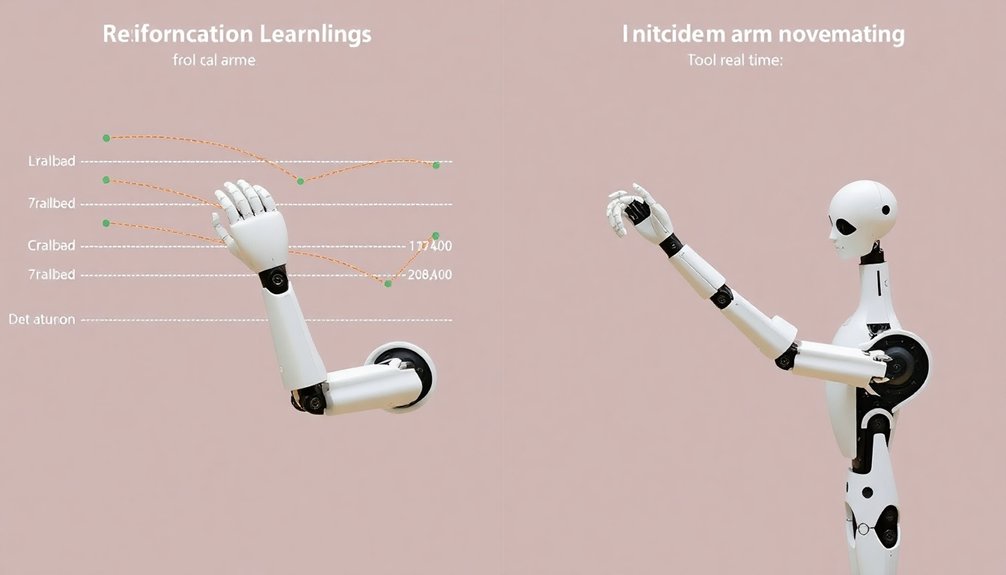

Robots learn to move like awkward toddlers, but smarter. Reinforcement learning has them stumbling through trial and error, getting tiny rewards for not face-planting. Imitation learning is their copycat phase, where they watch expert movements and mimic them with increasing precision. Think of it as robot dance classes: some learn by bumbling around, others by watching the pros. Curious how they’ll nail those moves? Stick around.

The Robot Learning Challenge

When it comes to teaching robots new tricks, you’d think we’d have cracked the code by now. But nope—robot learning is a beast. Reinforcement learning algorithms sound cool, but they’re like toddlers trying to learn ballet: lots of awkward falling and endless practice.

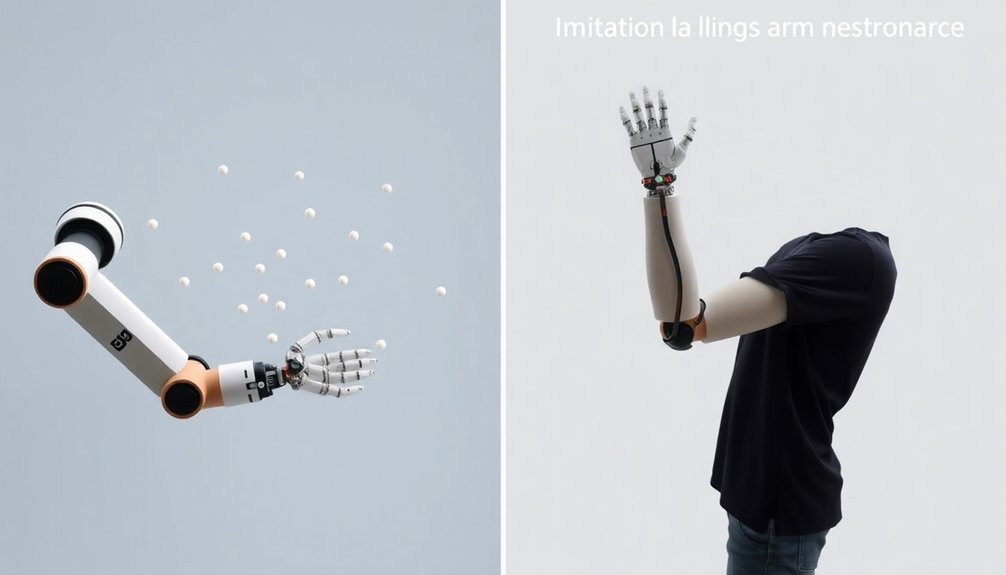

Imitation learning offers a smarter shortcut, letting robots watch and mimic expert demonstrations instead of blindly fumbling through thousands of failed attempts. Imagine a robot watching a master chef and instantly copying precise knife skills—that’s the dream.

The real challenge? Teaching machines to adapt when things go sideways. Can they handle unexpected obstacles or weird environmental quirks? Not easily. Right now, robots are smart but not quite street-smart, still struggling to translate pristine simulations into messy real-world scenarios. Deep reinforcement learning helps by letting robots improve through experience, refining their behavior by trial and error.

Understanding Reinforcement Learning Mechanics

You’ll want to understand how robots actually learn through reward signals – think of it like training a puppy, but with algorithms instead of treats.

The core mechanic is the policy: a learned rule that maps what the robot senses to what it does. Training adjusts that policy so the robot picks the actions that maximize its total reward over time, turning learning into a strategic game where every move counts.
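"Total reward over time" has a specific meaning. RL typically scores a policy by its discounted return, G_t = r_t + γr_{t+1} + γ²r_{t+2} + …. The discount factor γ sits between 0 and 1 and makes near-term rewards count more than distant ones. Below is a minimal sketch. It assumes a finite list of per-step rewards and an illustrative γ of 0.9.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards, each weighted by gamma**k for a reward k steps in the future."""
    g = 0.0
    # Walk backwards so each step applies G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# A sparse task: nothing for three steps, then +1 for reaching the goal.
print(discounted_return([0.0, 0.0, 0.0, 1.0]))  # about 0.729 (0.9 ** 3)
```

Working backwards reuses each partial sum, so scoring an episode costs a single pass over its rewards.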

When you’re wrestling with the classic exploration-exploitation challenge, you’re basically asking your robot to be both a curious adventurer and a calculated strategist, constantly weighing the thrill of discovering something new against the comfort of proven successful actions.

Reward Signal Mechanics

Because machines aren’t mind readers, reinforcement learning needs a way to tell robots what “good” looks like—and that’s where reward signals come in. These digital breadcrumbs guide robots through complex decision landscapes, fundamentally teaching them which actions deserve a gold star. Think of reward signal mechanics like a sophisticated GPS for learning a policy—constantly recalibrating based on performance.

| Reward Type | Learning Impact |
|---|---|
| Positive | Encourages Action |
| Negative | Discourages Mistakes |
| Sparse | Rare but Essential |
| Dense | Frequent Guidance |
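The sparse-versus-dense distinction is easiest to see on one task. The example is a hypothetical reaching task in which a gripper must get close to a target point. The 2 cm tolerance and the function names are illustrative assumptions.

```python
import math


def sparse_reward(gripper, target, tolerance=0.02):
    """+1 only when the gripper is within `tolerance` metres of the target, else 0."""
    return 1.0 if math.dist(gripper, target) <= tolerance else 0.0


def dense_reward(gripper, target):
    """Negative distance: every bit of progress toward the target raises the reward."""
    return -math.dist(gripper, target)


target = (0.5, 0.0, 0.2)
for gripper in [(0.0, 0.0, 0.2), (0.4, 0.0, 0.2), (0.49, 0.0, 0.2)]:
    print(gripper, sparse_reward(gripper, target), round(dense_reward(gripper, target), 3))
```

Only the last position earns the sparse reward. The dense reward improves at every step closer, which gives the learner guidance long before it first succeeds.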

The magic happens when algorithms translate these signals into smarter choices. By balancing exploration and exploitation, robots learn to navigate challenges like seasoned adventurers. They’re not just following instructions—they’re improvising, adapting, and evolving with each calculated risk and reward.

Policy Optimization Strategies

Policy optimization strategies are the secret sauce that transforms robotic learning from clumsy trial-and-error into razor-sharp decision-making. Your robotic friend isn’t just randomly flailing anymore—it’s strategically learning how to move.

Here’s how these strategies work their magic:

- Q-learning estimates an action-value function (how good each action is in each state) and updates it after every step, like a chess master predicting multiple moves ahead.

- Actor-Critic methods create a dynamic duo: the actor (the policy) picks actions, the critic (a value estimate) judges how well they turned out, and the actor adapts based on that feedback, turning raw reinforcement signals into precise movements.

- Deep Reinforcement Learning uses neural networks to represent policies and value functions, giving robots a brain that can handle high-dimensional inputs like camera images and joint readings.
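The Q-learning bullet boils down to a single update rule. Each estimate is nudged toward the reward just received plus the discounted value of the best next action. Below is a minimal tabular sketch. The state and action names are hypothetical, the learning rate α and discount γ are illustrative, and terminal states are ignored for brevity.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, actions, alpha=0.1, gamma=0.9):
    """One tabular Q-learning step:
    Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next          # what the estimate should move toward
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]


# Hypothetical two-action corridor; unseen (state, action) pairs default to 0.
actions = ["left", "right"]
q = defaultdict(float)
q[("s1", "right")] = 1.0  # pretend the robot already knows "right" pays off from s1
print(q_learning_update(q, "s0", "right", 0.0, "s1", actions))
```

Value learned at `s1` flows backward to `s0` even though the step itself earned no reward. Repeated over many episodes, this backward flow is how Q-learning "predicts moves ahead".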

These approaches turn robotic policy from rigid programming into adaptive, intelligent behavior. Want a robot that learns like a pro? This is how it happens.

Exploration Versus Exploitation

When machines learn, they face the same dilemma humans do: should they play it safe or take a risk? In reinforcement learning, this translates to the exploration versus exploitation challenge.

Imagine a robot deciding whether to try a completely new movement or stick to what’s already working. Exploration means venturing into unknown territory, potentially discovering breakthrough strategies. Exploitation, on the other hand, means doubling down on proven tactics.

The magic happens when algorithms balance these competing impulses. Techniques like ε-greedy help robots walk this tightrope: with a small probability ε the robot tries a random action, and the rest of the time it takes the action it currently rates highest.
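Here is ε-greedy in a few lines. It is a minimal sketch that assumes the robot already has value estimates for a small, discrete set of actions. The action names and values are made up.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick a random action with probability epsilon, else the best-known one.

    q_values maps each action to its current estimated value.
    """
    if rng.random() < epsilon:
        return rng.choice(list(q_values))      # explore: any action, uniformly
    return max(q_values, key=q_values.get)     # exploit: highest estimated value


rng = random.Random(0)  # seeded so the run is repeatable
q_values = {"step_forward": 0.8, "side_step": 0.3, "crouch": 0.1}
picks = [epsilon_greedy(q_values, epsilon=0.1, rng=rng) for _ in range(1000)]
print(picks.count("step_forward") / len(picks))  # mostly exploits, occasionally explores
```

In practice ε is often decayed over training. The robot explores a lot early on and settles into exploitation once its estimates are trustworthy.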

It’s like a calculated gambling strategy where the machine gradually learns which risks are worth taking. The goal? Smarter, more adaptable robotic behaviors that can innovate without completely falling apart, driven by algorithms that process sensory inputs and adjust movements in real time.

Decoding Imitation Learning Strategies

If you’ve ever watched a robot mimic a human’s precise movements and thought, “Whoa, that’s eerily smooth,” you’re witnessing the magic of imitation learning strategies. Humanoid robots across several industries are using these techniques to pick up new skills.

These strategies turn robots from clunky machines into surprisingly capable copycats. Here’s how imitation learning and behavior cloning pull it off:

- 🤖 Direct Mimicry: In behavior cloning, robots learn by replicating recorded human actions, treating each demonstration as labeled training data: a digital monkey-see-monkey-do.

- 🧠 Smart Observation: Advanced algorithms decode complex movements, turning demonstrations into actionable robotic instructions.

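- 🚀 Performance Evolution: Algorithms like DAgger (Dataset Aggregation) help robots keep refining their skills and reduce performance drift. The robot attempts the task itself, an expert labels the situations it actually ran into, and the policy is retrained on the growing dataset.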
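The behavior-cloning and DAgger ideas fit into one short loop. The sketch below uses a deliberately tiny toy setup, and all of its pieces are hypothetical: a 1-D position, dynamics `x_next = x + action`, an expert that pushes halfway back toward zero, and a learner that fits a single gain `k` by least squares. Because the toy expert is linear, the learner recovers it almost immediately. The point is the loop structure: clone, roll out, relabel, aggregate, retrain.

```python
def expert(x):
    """Hypothetical expert: push a 1-D position halfway back toward 0 each step."""
    return -0.5 * x


def fit_gain(states, actions):
    """Behavior cloning as least squares: fit a = k * x to the labelled data."""
    num = sum(x * a for x, a in zip(states, actions))
    den = sum(x * x for x in states)
    return num / den


def rollout(policy, x0=4.0, steps=10):
    """Run a policy in the toy dynamics x_next = x + action; return visited states."""
    states, x = [], x0
    for _ in range(steps):
        states.append(x)
        x = x + policy(x)
    return states


def dagger(iterations=3):
    # 1) Behavior cloning: train on the expert's own demonstrations.
    states = rollout(expert)
    actions = [expert(x) for x in states]
    k = fit_gain(states, actions)
    for _ in range(iterations):
        # 2) Let the learner drive, so we see the states *it* actually reaches.
        visited = rollout(lambda x: k * x)
        # 3) Ask the expert to label those states, aggregate, and retrain.
        states += visited
        actions += [expert(x) for x in visited]
        k = fit_gain(states, actions)
    return k


print(dagger())
```

Step 2 is what separates DAgger from plain cloning. The expert labels the states the learner drifts into, not just the states the expert would have visited.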

The result? Robots that don’t just follow instructions but understand and adapt, blurring the lines between programmed movement and genuine learning.

Who knew copying could be so revolutionary?

Similarities and Differences Between Learning Methods

Three core strategies shape how robots acquire skills: traditional programming, reinforcement learning, and imitation learning. The two learning methods sit at the cutting edge, and each has its own superpower.

Reinforcement learning’s secret sauce? Trial and error with constant reward feedback. Think of it like training a puppy—lots of treats for good behavior.

Imitation learning, on the other hand, is more like watching a master chef and copying their moves precisely.

Both methods aim to make robots smarter, but they take wildly different paths. RL builds skills through countless attempts, while IL fast-tracks learning by mimicking expert behaviors.

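The cool part? Sometimes these approaches blend. A robot can first copy expert demonstrations to get a decent starting policy, then fine-tune it with RL, creating robot brains that can both learn from scratch and quickly adapt by watching.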

Imagine a robot that’s part student, part copycat—that’s the future.

Case Study: Walking Algorithms

Imagine walking into a robotics lab where mechanical legs are learning to navigate the world, one stumble at a time.

Walking algorithms are like robot bootcamps, where machines train using a few primary methods:

- Reinforcement learning throws robots into a digital obstacle course, rewarding successful steps and punishing spectacular face-plants.

- Imitation learning lets robots watch and copy expert movements, basically becoming mini-me versions of walking champions.

- Self-imitation techniques help robots remember their best moves, turning past experiences into future navigation skills.
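"Remembering the best moves" can be sketched as a buffer that keeps only the highest-return episodes for the policy to imitate later. This is a simplified take that assumes whole episodes are ranked by total return, and the class is a hypothetical helper. Published self-imitation learning methods are more selective: they imitate individual past actions whose observed return beat the agent's own value estimate.

```python
import heapq
import itertools


class BestEpisodeBuffer:
    """Keep the top-k episodes by total return (hypothetical helper)."""

    def __init__(self, capacity=3):
        self.capacity = capacity
        self._heap = []                    # min-heap of (return, tie_breaker, episode)
        self._counter = itertools.count()  # unique tie-breaker so episodes are never compared

    def add(self, episode, total_return):
        item = (total_return, next(self._counter), episode)
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, item)
        elif total_return > self._heap[0][0]:
            heapq.heapreplace(self._heap, item)  # evict the worst stored episode

    def best(self):
        """Episodes sorted from highest to lowest return, ready for imitation."""
        return [ep for _, _, ep in sorted(self._heap, reverse=True)]


buffer = BestEpisodeBuffer(capacity=2)
buffer.add(["step", "fall"], total_return=-1.0)
buffer.add(["step", "step", "step"], total_return=3.0)
buffer.add(["step", "step"], total_return=2.0)
print(buffer.best())  # [['step', 'step', 'step'], ['step', 'step']]
```

The min-heap keeps the worst stored episode at the top, so deciding whether a new episode earns a spot costs one comparison.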

Deep reinforcement learning takes this a step further, blending body dynamics with visual perception.

It’s like giving robots a brain and eyes simultaneously, allowing them to adapt to uneven terrain in real time.

Think of it as teaching a machine to walk not just by following rules, but by understanding the subtle art of movement itself.

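Proprioceptive sensors, such as joint encoders and inertial measurement units, report the robot’s own joint angles, velocities, and body orientation. That feedback lets robots fine-tune their movements with remarkable precision, transforming raw algorithms into fluid, adaptive locomotion.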
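Proprioceptive readings can feed the "reward good steps, punish face-plants" idea from reinforcement learning. The sketch is a hypothetical walking reward with three parts: it pays for forward velocity, charges a small cost for squared joint torque as an energy proxy, and ends the episode with a penalty when torso tilt suggests a fall. The field names, weights, and the 0.8 rad fall threshold are illustrative assumptions, not tuned values.

```python
from dataclasses import dataclass


@dataclass
class Proprioception:
    """Hypothetical proprioceptive snapshot for a legged robot."""
    forward_velocity: float  # m/s, e.g. estimated from joint encoders + IMU
    joint_torques: list      # N*m, one entry per actuated joint
    torso_pitch: float       # rad, from the IMU
    torso_roll: float        # rad, from the IMU


def walking_reward(p, torque_weight=0.001, fall_angle=0.8, fall_penalty=-10.0):
    """Return (reward, done) for one control step."""
    fell = abs(p.torso_pitch) > fall_angle or abs(p.torso_roll) > fall_angle
    if fell:
        return fall_penalty, True  # the face-plant: big penalty, episode ends
    energy_cost = torque_weight * sum(t * t for t in p.joint_torques)
    return p.forward_velocity - energy_cost, False


upright = Proprioception(0.6, [2.0, -1.5, 3.0], torso_pitch=0.05, torso_roll=0.02)
tipped = Proprioception(0.2, [2.0, -1.5, 3.0], torso_pitch=1.1, torso_roll=0.0)
print(walking_reward(upright), walking_reward(tipped))
```

The torque term matters more than it looks. Without it, learned gaits tend to be jerky and wasteful, because nothing discourages flailing as long as the robot moves forward.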

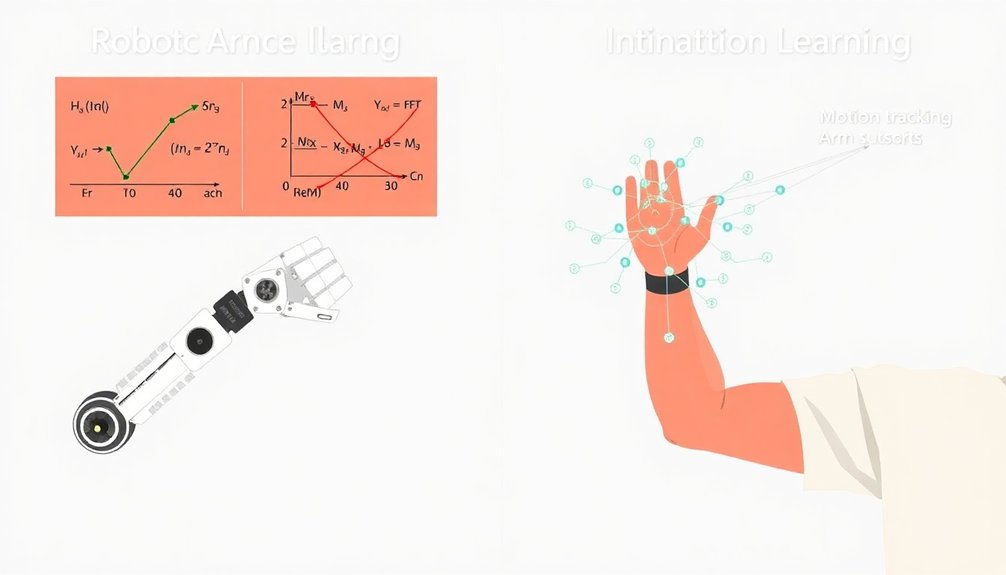

Precision in Grasping and Manipulation

You’ve probably wondered how robots might one day grab your coffee mug without shattering it into a thousand pieces.

Grasping well isn’t just about having fingers that look human-like. It combines hand geometry, meaning how those mechanical digits contact and hold an object, with visual feature extraction, which translates complex spatial information from cameras into precise movements.

Think of it like teaching a toddler to pick up a fragile teacup — except in this case, the toddler is a learning algorithm that’s getting smarter with every attempted grasp.

Robot Hand Geometry

Robotic precision starts with hand geometry—the secret sauce of machine manipulation. Your robot’s fingers aren’t just metal appendages; they’re sophisticated tools designed to conquer complexity.

Consider these game-changing insights:

- Shape matters more than brute force—delicate finger configurations trump raw strength.

- Machine learning transforms robot hands from clumsy grabbers into surgical instruments.

- Real-world adaptability emerges through intelligent geometric design.
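"Shape matters more than brute force" has a classic geometric version. Take a two-finger grasp on a flat object and ask whether the line joining the two contact points lies inside the friction cone at each contact. If it does, squeezing holds the object. If not, extra force just makes it slip out. Below is a minimal 2-D sketch. It assumes known contact points, unit surface normals pointing into the object, and a Coulomb friction coefficient μ, which gives a cone half-angle of atan(μ).

```python
import math


def _angle(u, v):
    """Angle in radians between 2-D vectors u and v."""
    dot = u[0] * v[0] + u[1] * v[1]
    cos = dot / (math.hypot(*u) * math.hypot(*v))
    return math.acos(max(-1.0, min(1.0, cos)))  # clamp against rounding error


def antipodal_ok(p1, n1, p2, n2, mu):
    """True if the contact-to-contact line lies within both friction cones."""
    half_angle = math.atan(mu)
    d = (p2[0] - p1[0], p2[1] - p1[1])  # squeeze direction from finger 1 to finger 2
    return _angle(d, n1) <= half_angle and _angle((-d[0], -d[1]), n2) <= half_angle


box_left, box_right = (-1.0, 0.0), (1.0, 0.0)
print(antipodal_ok(box_left, (1.0, 0.0), box_right, (-1.0, 0.0), mu=0.5))   # True: fingers directly opposite
print(antipodal_ok(box_left, (1.0, 0.0), (1.0, 1.5), (-1.0, 0.0), mu=0.5))  # False: contacts too offset
```

Raising μ widens the cones, so the same offset grasp passes with a grippier material. Finger placement and surface choice, not raw squeezing force, decide whether the grasp holds.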

Robot control isn’t about mimicking human hands; it’s about reimagining manipulation skills through strategic engineering.

Imagine a robotic hand that can cradle a fragile egg or grip a wrench with equal finesse. By integrating advanced sensing technologies and data-driven algorithms, researchers are creating hands that don’t just move—they understand.

Who knew geometry could be so revolutionary? Your future robotic assistant is learning to touch the world with unprecedented precision.

Visual Feature Extraction

Precision’s secret weapon? Visual feature extraction. It’s how robots learn to see the world like you do, turning blurry camera images into razor-sharp understanding.

Imagine a robot hand that doesn’t just grab, but strategically identifies object features and predicts the perfect grasp. Deep learning techniques are the magic behind this transformation, autonomously extracting visual cues that transform robotic movements from clumsy to calculated.
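Deep networks learn their own filters, but every convolutional layer performs the same basic operation. It slides a small kernel over the image and records how strongly each patch matches. Below is a minimal pure-Python sketch with a hand-picked, Sobel-style vertical-edge kernel and a made-up image patch. A trained network would learn its kernels from data rather than use a fixed one.

```python
def conv2d(image, kernel):
    """'Valid' 2-D cross-correlation, the operation CNN layers actually compute."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[r + i][c + j] for i in range(kh) for j in range(kw))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


# Sobel-style kernel: responds to dark-to-bright changes from left to right.
vertical_edge = [[-1, 0, 1],
                 [-2, 0, 2],
                 [-1, 0, 1]]

# A 4x5 grayscale patch: dark object on the left, bright background on the right.
image = [[0, 0, 0, 9, 9],
         [0, 0, 0, 9, 9],
         [0, 0, 0, 9, 9],
         [0, 0, 0, 9, 9]]

for row in conv2d(image, vertical_edge):
    print(row)  # [0, 36, 36]: strong response wherever the window spans the object's edge
```

Object boundaries like this one tell a grasp planner where an object ends. Stacking many learned filters lets a network build up from edges to shapes to "good place to grab".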

Your future robot assistant isn’t just following programmed instructions—it’s learning. By integrating RGB camera data and sophisticated algorithms like Behavior Transformers, these machines can now predict actions directly from visual input.

They’re bridging the gap between human dexterity and mechanical precision, moving robot learning from a scientific pipe dream toward everyday reality. Humanoid robot companions are among the systems pushing this kind of visual perception forward, changing how machines understand and interact with their environment.

Who said robots can’t see?

Performance Metrics and Evaluation

The Achilles’ heel of robot learning lies in its performance evaluation—a critical battlefield where algorithms prove their mettle or falter spectacularly.

When diving into performance metrics for reinforcement learning, you’ll discover a complex landscape of challenges:

- Success Rates: Algorithms like SILP+ report success rates of 90% in motion planning tasks.

- Training Efficiency: Measuring how quickly robots adapt and learn without losing their digital minds.

- Adaptability Quotient: Testing whether robots can smoothly shift between environments without throwing a computational tantrum.
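Here is a minimal sketch of the first two metrics under illustrative assumptions. Success rate is the fraction of evaluation episodes that end in success. "Convergence" is taken to be the first episode at which a moving average of returns crosses a chosen threshold, with a window of 3 and a threshold of 0.8. The numbers below are made up for the example and are not results from SILP+ or any other algorithm.

```python
def success_rate(outcomes):
    """Fraction of evaluation episodes that ended in success (True)."""
    return sum(outcomes) / len(outcomes)


def episodes_to_converge(returns, threshold, window=3):
    """Index of the first episode where the moving average over `window` episodes
    reaches `threshold`; None if training never gets there."""
    for end in range(window, len(returns) + 1):
        if sum(returns[end - window:end]) / window >= threshold:
            return end - 1
    return None


outcomes = [True] * 9 + [False]
returns = [0.1, 0.2, 0.4, 0.7, 0.9, 0.95, 0.97]
print(success_rate(outcomes), episodes_to_converge(returns, threshold=0.8))
```

The moving average keeps one lucky episode from counting as convergence. The trade-off is that the reported episode lags slightly behind the point where the policy first got good.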

Your evaluation toolkit becomes a forensic lab for robotic intelligence.

Convergence time, extrapolation errors, and skill transfer reveal whether an algorithm is a learning champion or just another silicon pretender.

Imitation learning adds another layer, comparing robot behaviors against expert demonstrations with ruthless precision.
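Comparing a robot against expert demonstrations can be as simple as measuring how far apart their trajectories are. Below is a minimal sketch that computes the root-mean-square distance between matching waypoints, where 0 means a perfect copy. It assumes both trajectories are sampled at the same time steps; real evaluations often need to align them first. The waypoints are made up.

```python
import math


def trajectory_rmse(robot, expert):
    """Root-mean-square distance between time-aligned robot and expert waypoints."""
    if len(robot) != len(expert):
        raise ValueError("trajectories must have the same number of waypoints")
    squared = [math.dist(p, q) ** 2 for p, q in zip(robot, expert)]
    return math.sqrt(sum(squared) / len(squared))


expert = [(0.0, 0.0), (0.1, 0.0), (0.2, 0.05)]
robot = [(0.0, 0.0), (0.1, 0.01), (0.2, 0.07)]
print(round(trajectory_rmse(robot, expert), 4))
```

Squaring before averaging penalizes one large deviation more than several small ones. That usually matches what matters physically, such as a gripper swinging wide of an obstacle.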

Real-World Robotic Applications

You’ve probably wondered how robots are actually changing the world beyond sci-fi fantasies – and the answer’s in some seriously cool real-world applications.

From robotic surgeons performing micro-precise incisions to warehouse robots zipping around fulfilling orders faster than any human could, these machines are transforming industries with mind-blowing efficiency.

Autonomous vehicles are also pushing the boundaries, maneuvering through complex urban landscapes and promising a future where transportation isn’t just about getting from point A to point B, but doing it with algorithmic intelligence that aims to outperform human drivers.

Surgical Robot Precision

When surgical robots roll into operating rooms, they’re not just fancy metal assistants; they’re precision instruments that extend what a surgeon’s hands can do.

Through reinforcement learning and imitation learning, these mechanical marvels transform complex surgical techniques into data-driven dance moves.

These robots aren’t playing around:

- They absorb surgical demonstrations like digital sponges

- They process real-time visual data with remarkable accuracy

- They aim to reduce complications by learning from thousands of expert performances

Imagine a robot that can predict anatomical variations faster than you can blink.

By continuously refining their control policies, surgical robots are bridging the gap between human skill and machine precision.

They’re not replacing surgeons—they’re supercharging them, turning operating rooms into high-tech performance stages where every movement is calculated, every cut is strategic.

Warehouse Automation Tasks

Because robots are taking over warehouses faster than you can say “automation revolution,” the world of logistics is getting a high-tech makeover that would make your grandpa’s inventory clipboard look like a museum artifact.

Reinforcement learning lets robots learn through trial and error, effectively turning warehouse floors into massive training playgrounds where robots figure out the most efficient ways to move pallets and pick orders.

Imitation learning takes this a step further by having robots watch and mimic human movements, like a robot apprentice studying its human mentor.

These smart machines aren’t just moving boxes; they’re strategically maneuvering through complex environments, learning safety protocols, and optimizing every single movement.

Who knew robots could be such quick studies in the art of warehouse gymnastics?

Autonomous Vehicle Navigation

The autonomous vehicle landscape is quickly transforming from sci-fi fantasy into everyday reality, with reinforcement and imitation learning turning roads into massive classroom laboratories for robotic intelligence.

These cutting-edge algorithms are teaching vehicles how to navigate complex environments with shocking precision.

- Safety isn’t just a feature—it’s a mathematical equation solved through intelligent learning

- Real-time decision-making becomes a dance between sensor data and algorithmic prediction

- Vehicles transform from passive machines into active learners that keep adapting as they gather more driving experience

Reinforcement learning allows vehicles to optimize navigation strategies through continuous trial and error, while imitation learning dramatically reduces training complexity by learning directly from expert demonstrations.

The result? Smarter vehicles that can anticipate obstacles, predict traffic patterns, and make split-second decisions that could mean the difference between a safe journey and a potential disaster.

Technical Challenges in Machine Learning

Technical challenges in machine learning aren’t just academic puzzles—they’re the razor-sharp obstacles standing between today’s clunky AI and the sci-fi future we’ve been promised.

Reinforcement learning and imitation learning are wrestling matches between algorithms trying to outsmart complex environments. Imagine a robot learning to grasp objects: it’s not just about moving an arm, but understanding nuanced constraints like shape closure and maneuvering through obstacle-rich scenarios without wiping out.

These methods burn through computational resources like a teenager with a new credit card, exploring countless trial scenarios to nail down perfect movement strategies.

The real magic? Balancing exploration with precision, avoiding catastrophic failures while pushing the boundaries of what machines can learn. It’s a high-stakes game of algorithmic chess, where every move could mean the difference between breakthrough and burnout.

Hardware and Computational Requirements

Computational muscle isn’t just a luxury in machine learning—it’s the difference between a robot that stumbles like a drunk toddler and one that could potentially replace your most skilled worker.

When diving into reinforcement learning and imitation learning, hardware becomes your make-or-break factor:

- Deep RL demands monster GPUs that’ll make your electricity bill weep.

- Imitation learning can cruise on more modest machines, saving you serious cash.

- Complex neural networks eat processing power like a teenager devours pizza.

You’ll need serious tech firepower for reinforcement learning’s trial-and-error approach, which burns through computational resources faster than a startup burns venture capital.

Imitation learning offers a leaner alternative, learning from pre-collected demonstrations without the massive energy and processing overhead.

Want a smart robot without selling a kidney for computing power? Choose wisely.

Future Trajectories in Robotic Intelligence

Where robotic intelligence is headed might just blow your mind—and not in the sci-fi killer robot way, but in a “holy smarts, these machines are getting weirdly good” kind of way.

Reinforcement learning and imitation learning are about to get a major upgrade. Imagine robots that learn like curious toddlers—exploring, adapting, and mastering tasks through self-improvement.

They’ll soon develop policies that let them tackle complex motions with crazy efficiency. Think multitask learning where one robot policy works across different environments, or curiosity-driven methods that make machines autonomous explorers.
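Curiosity-driven methods give the robot an intrinsic reward for novelty. Many published versions measure novelty as the error of a learned prediction model. The sketch below swaps in a simpler count-based bonus, chosen for brevity, that pays β/√N(s) on the N-th visit to a state, so the payoff fades as a place becomes familiar. It assumes discrete, hashable states, and the class name and β are illustrative.

```python
import math
from collections import Counter


class NoveltyBonus:
    """Count-based exploration bonus: beta / sqrt(number of visits to this state)."""

    def __init__(self, beta=0.5):
        self.beta = beta
        self.visits = Counter()

    def __call__(self, state):
        self.visits[state] += 1
        return self.beta / math.sqrt(self.visits[state])


bonus = NoveltyBonus(beta=0.5)
# The agent adds this to the task reward, so unfamiliar states look attractive.
print([round(bonus("hallway"), 3) for _ in range(4)])  # shrinks with each revisit
print(round(bonus("new_room"), 3))                     # a fresh state earns the full bonus
```

Because the bonus decays, exploration fades naturally. Once every nearby state is familiar, the task reward dominates again.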

The future isn’t about perfect programming, it’s about creating systems that can basically teach themselves. Self-imitation learning algorithms are already showing promise, turning robot training from a painful crawl into a strategic sprint.

Wild, right?

People Also Ask About Robots

Do Robots Use Reinforcement Learning?

You’ll find robots increasingly use reinforcement learning to develop motion policies, learning through trial and error by receiving rewards or penalties during interactions with their environment, enabling adaptive and intelligent movement strategies.

What Is the Difference Between RL and IRL?

You’ll find that RL learns through trial and error with rewards, while Inverse Reinforcement Learning (IRL) tries to infer the underlying reward function by observing an expert’s demonstrated behavior.

How Does the Machine Learn in Reinforcement Learning?

The agent learns through trial and error. It explores actions, receives rewards or penalties, and gradually refines its strategy to maximize cumulative reward over repeated interactions with its environment.

Does Tesla Use Reinforcement Learning?

You’ll find Tesla doesn’t rely purely on reinforcement learning. Its approach centers on neural networks trained on millions of driving miles, reportedly combining self-supervised learning with RL techniques to improve autonomous vehicle decision-making and performance.

Why This Matters in Robotics

Sure, robots might seem clunky now, but they’re learning faster than you think. Imitation and reinforcement learning aren’t just sci-fi fantasies: they’re transforming how machines understand movement. You’ll see walking, climbing, and adapting robots that learn like curious kids, not like rigidly programmed machines. Think less “stiff metal puppet” and more “intelligent apprentice.” The future isn’t about replacing humans; it’s about creating collaborative technologies that extend our capabilities. Get ready.