Voice commands turn your speech into robotic magic through a wild tech dance. Sound waves hit a microphone, get digitized into numbers a computer can process, and get broken down into tiny phonetic pieces. Neural networks translate those fragments into precise instructions, mapping your words to mechanical actions. Imagine telling a robot to grab a coffee: it parses your request, works out the intent, and potentially serves you a brew. Curious how deep this rabbit hole goes?

Sound Waves to Digital Signals: Capturing Human Voice

When you think about robots understanding human speech, it might seem like magic, but it’s actually a pretty nifty process of transforming sound waves into digital commands.

Your voice kicks off this technological dance – those invisible vibrations from your vocal cords get captured by a microphone that transforms them into electrical signals. Think of it like translating your native language into robot-speak.

An analog-to-digital converter then steps in. It measures that continuous electrical signal thousands of times per second (sampling) and rounds each measurement to a whole number (quantization). The result is a stream of digital samples, basically a mathematical code that robots can process.

Imagine your voice as a musical score, with each sound wave representing a unique note that gets translated into instructions a robot can follow. Pretty cool, right?
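The two converter steps, sampling and quantization, fit in a few lines of Python. This is a simplified model, not a driver for real hardware: it assumes a pure 440 Hz tone as a stand-in for a voice, a 16 kHz sample rate (a common rate for speech recognition), and an idealized 16-bit converter. `sample_and_quantize` is a hypothetical helper.

```python
import math


def sample_and_quantize(signal, sample_rate_hz, duration_s, bits):
    """Sample a continuous signal at fixed intervals and round each sample
    to a signed integer level, as an idealized ADC would."""
    n_samples = round(sample_rate_hz * duration_s)
    max_level = 2 ** (bits - 1) - 1  # 32767 for 16-bit signed audio
    samples = []
    for n in range(n_samples):
        t = n / sample_rate_hz               # time of the n-th sample, in seconds
        x = max(-1.0, min(1.0, signal(t)))   # clip to the converter's input range
        samples.append(round(x * max_level)) # quantize to an integer level
    return samples


# A 440 Hz tone (concert A) standing in for a voice.
tone = lambda t: 0.5 * math.sin(2 * math.pi * 440 * t)
digital = sample_and_quantize(tone, sample_rate_hz=16_000, duration_s=0.01, bits=16)
print(len(digital))  # 160 samples for 10 ms at 16 kHz
print(digital[:5])   # the first few integer samples
```

Every value in `digital` is now an ordinary integer, which is all the later stages ever see of your voice.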

Breaking Down Speech: Phonemes and Language Processing

When your robot buddy starts listening to your voice, it’s not just hearing noise—it’s breaking down your speech into tiny sound building blocks called phonemes, like a linguistic detective cracking a code.

Phonemes are the smallest sound units that distinguish one word from another, and they're the secret sauce of speech recognition: they're how a machine tells whether you said “grab that tool” or “grab that towel”.
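To make the “tool” versus “towel” example concrete, the sketch below compares the two words as phoneme sequences. The pronunciations are hand-written in ARPAbet notation (the style used by the CMU Pronouncing Dictionary) with stress marks dropped; treat them as an assumption for illustration, not recognizer output. `phoneme_distance` is a plain edit (Levenshtein) distance.

```python
# Hand-written ARPAbet-style pronunciations (assumed, stress marks omitted).
PRONUNCIATIONS = {
    "tool": ["T", "UW", "L"],
    "towel": ["T", "AW", "AH", "L"],
}


def phoneme_distance(a, b):
    """Minimum number of phoneme insertions, deletions, or substitutions
    needed to turn sequence a into sequence b."""
    prev = list(range(len(b) + 1))
    for i, pa in enumerate(a, start=1):
        curr = [i]
        for j, pb in enumerate(b, start=1):
            cost = 0 if pa == pb else 1
            curr.append(min(prev[j] + 1,          # delete pa
                            curr[j - 1] + 1,      # insert pb
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


print(phoneme_distance(PRONUNCIATIONS["tool"], PRONUNCIATIONS["towel"]))  # 2
```

Two phonemes (one swapped vowel, one extra vowel) separate the words, which is a small margin. That is why recognizers lean on context as well as sound.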

Sound Wave Deconstruction

Because robots aren’t mind readers (yet), they’ve got to break down human speech into bite-sized sound chunks called phonemes. Speech recognition systems transform sound waves into digital signals faster than you can say “Hey, robot!” Deep learning models like Long Short-Term Memory (LSTM) networks help robots connect these sound fragments into coherent linguistic patterns.

Acoustic models analyze the digitized audio in short slices, converting each slice into a phonetic representation: a best guess at which sounds it contains. Think of it like translating human gibberish into robot language.
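Acoustic models don't look at a whole recording at once; they work on short, overlapping frames. Here is a minimal sketch of that front end, assuming 16 kHz audio and the common 25 ms window / 10 ms hop. The per-frame energy is a crude stand-in for the richer features (such as spectrograms or MFCCs) a real acoustic model would use, and both helper names are hypothetical.

```python
def frame_signal(samples, sample_rate_hz, frame_ms=25, hop_ms=10):
    """Split audio into overlapping frames of frame_ms, one every hop_ms."""
    frame_len = int(sample_rate_hz * frame_ms / 1000)  # 400 samples at 16 kHz
    hop_len = int(sample_rate_hz * hop_ms / 1000)      # 160 samples at 16 kHz
    return [samples[start:start + frame_len]
            for start in range(0, len(samples) - frame_len + 1, hop_len)]


def frame_energy(frame):
    """Mean squared amplitude: a rough 'how loud is this slice' feature."""
    return sum(x * x for x in frame) / len(frame)


audio = [0] * 8000 + [1000, -1000] * 4000  # 0.5 s of silence, then 0.5 s of buzz
frames = frame_signal(audio, 16_000)
energies = [frame_energy(f) for f in frames]
print(len(frames))                # 98
print(energies[0], energies[-1])  # 0.0 1000000.0
```

The silent frames and the loud frames are already easy to tell apart; a real acoustic model learns far subtler patterns from the same kind of per-frame view.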

Neural networks dive deep into these sound fragments, mapping acoustic patterns and predicting likely word sequences. Noise injection, a training technique that mixes background noise into clean recordings, helps robots handle real-world audio chaos, making them surprisingly good at parsing your mumbled commands.
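Noise injection is applied to the training data, not at run time: each clean recording is mixed with noise at a chosen signal-to-noise ratio (SNR), so the model learns from messy examples. This sketch assumes white Gaussian noise for simplicity; real pipelines often mix in recorded background sounds (chatter, fans, traffic) instead. `inject_noise` is a hypothetical helper.

```python
import math
import random


def inject_noise(clean, snr_db, rng):
    """Return a copy of `clean` with Gaussian noise added at the target SNR."""
    signal_power = sum(x * x for x in clean) / len(clean)
    # SNR_dB = 10 * log10(P_signal / P_noise), so P_noise = P_signal / 10**(SNR_dB / 10)
    noise_power = signal_power / (10 ** (snr_db / 10))
    noise_std = math.sqrt(noise_power)
    return [x + rng.gauss(0.0, noise_std) for x in clean]


rng = random.Random(0)  # seeded so the augmentation is reproducible
clean = [math.sin(2 * math.pi * 5 * n / 1000) for n in range(1000)]
for snr_db in (20, 10, 0):  # lower SNR means a noisier training example
    noisy = inject_noise(clean, snr_db, rng)
    noise_power = sum((a - b) ** 2 for a, b in zip(noisy, clean)) / len(clean)
    print(snr_db, round(noise_power, 3))  # noise power grows as SNR drops
```

Training on a spread of SNRs, rather than one fixed level, is what lets a model cope with both a quiet room and a noisy kitchen.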

Want your robot to genuinely get you? It’s all about breaking speech down into tiny sound building blocks and turning random noise into actionable instructions.

Language Pattern Recognition

After dissecting sound waves into small acoustic fragments, robots face their next challenge: making sense of those phonetic puzzle pieces. Hidden Markov models, a classic statistical tool in speech recognition, crunch the data to estimate the most probable word sequence behind the sounds. Natural language processing then kicks into high gear, turning those words into meaningful commands.
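To see how a hidden Markov model picks a word sequence, here is the Viterbi algorithm on a toy model. This is a deliberately small sketch: the hidden states are whole words (real recognizers model much finer units), the observation labels are hypothetical acoustic-model outputs, and every probability is made up for illustration.

```python
def viterbi(observations, states, start_p, trans_p, emit_p):
    """Return the most probable hidden-state sequence and its probability."""
    # best[t][s] = (probability of the best path ending in s at step t, previous state)
    best = [{s: (start_p[s] * emit_p[s].get(observations[0], 0.0), None) for s in states}]
    for t in range(1, len(observations)):
        step = {}
        for s in states:
            step[s] = max(
                (best[t - 1][p][0] * trans_p[p].get(s, 0.0)
                 * emit_p[s].get(observations[t], 0.0), p)
                for p in states
            )
        best.append(step)
    # Trace back from the most probable final state.
    last = max(states, key=lambda s: best[-1][s][0])
    path = [last]
    for t in range(len(observations) - 1, 0, -1):
        path.insert(0, best[t][path[0]][1])
    return path, best[-1][last][0]


states = ["turn", "left", "lift"]
start_p = {"turn": 0.6, "left": 0.2, "lift": 0.2}
trans_p = {  # "turn" is very likely to be followed by "left"
    "turn": {"turn": 0.05, "left": 0.9, "lift": 0.05},
    "left": {"turn": 0.4, "left": 0.3, "lift": 0.3},
    "lift": {"turn": 0.4, "left": 0.3, "lift": 0.3},
}
emit_p = {  # the second sound is acoustically closer to "lift" than to "left"
    "turn": {"sounds_like_turn": 0.8, "sounds_like_lift": 0.05},
    "left": {"sounds_like_turn": 0.05, "sounds_like_lift": 0.4},
    "lift": {"sounds_like_turn": 0.05, "sounds_like_lift": 0.55},
}
path, prob = viterbi(["sounds_like_turn", "sounds_like_lift"], states, start_p, trans_p, emit_p)
print(path)  # ['turn', 'left']
```

Even though the second sound matches “lift” better on its own, the word-sequence probabilities make “turn left” the winner, which is exactly the kind of prediction described above.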

Imagine a robot brain rapidly matching phonemes like a linguistic detective, decoding your every mumbled instruction.

But it’s not just about hearing; it’s about understanding. Training on audio with injected noise makes these models robust to background chatter and imperfect speech, and they’re learning to catch nuance, context, and intent.

Will they soon understand sarcasm? Probably not. But they’ll definitely know when you’re telling them to vacuum the living room or grab you a cold drink.

Language recognition: turning robot ears into smart interpreters.

Command Signal Translation

Turning raw sound waves into actionable robot commands isn’t just technical wizardry—it’s linguistic judo. Your spoken words become a complex dance of neural networks and language processing magic. How? By breaking down speech into tiny phonetic puzzle pieces that machines can actually understand.

- Phonemes get segmented and analyzed like linguistic DNA

- Encoder-decoder models predict intended meanings

- NLP techniques decode syntactic structures

- Noise injection trains systems to handle messy human speech

Each command translation is a precision operation where your jumbled human language gets transformed into crisp robotic instructions.

These sophisticated systems don’t just hear words—they interpret intentions, filtering out background noise and recognition errors. It’s like having a hyper-intelligent translator living inside your robot, turning your casual “move that thing” into an exact mechanical choreography.
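One simple way to tolerate recognition errors is to snap whatever the recognizer returns to the nearest command the robot actually knows. The sketch below uses the standard-library `difflib.get_close_matches` for string similarity; the command list and the 0.6 cutoff (difflib's default) are assumptions, and production systems may instead rely on the recognizer's confidence scores or a trained intent model.

```python
import difflib

# Hypothetical set of commands this robot understands.
KNOWN_COMMANDS = ["move forward", "move backward", "turn left", "turn right", "stop"]


def closest_command(transcript, cutoff=0.6):
    """Snap a possibly misrecognized transcript to the most similar known
    command, or return None if nothing is similar enough."""
    matches = difflib.get_close_matches(
        transcript.lower().strip(), KNOWN_COMMANDS, n=1, cutoff=cutoff
    )
    return matches[0] if matches else None


print(closest_command("moo forward"))  # move forward
print(closest_command("turn lift"))    # turn left
print(closest_command("make coffee"))  # None
```

Returning `None` for anything too far from a known command is a deliberate safety choice: asking you to repeat yourself beats guessing.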

From Words to Meaning: Natural Language Understanding

While robots might seem like they’re just fancy machinery, their ability to understand human speech is nothing short of magical. Natural language understanding (NLU) transforms your spoken commands into something robots can actually comprehend. Think of it like a translator between human babble and robot brain waves.

These smart systems break down your words using automatic speech recognition, converting sound into text, then parsing that text for meaning. Machine learning algorithms help robots recognize specific keywords and phrases that trigger actions—like “move forward” or “turn left”.

They’re designed to interpret commands even when speech isn’t perfect, using neural networks that learn and adapt. So when you bark an order at your robot buddy, it’s not just hearing noise—it’s decoding your intent with impressive precision.
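Once speech recognition has produced text, the simplest version of this keyword matching is a lookup of trigger phrases. This is a rule-based sketch rather than a machine-learning model; the phrases and action names are hypothetical. Whole-word matching keeps “stop” from firing on “stopwatch”, and when several phrases appear, the earliest one wins.

```python
import re

# Hypothetical trigger phrases mapped to hypothetical action names.
TRIGGERS = {
    "move forward": "drive_forward",
    "turn left": "rotate_left",
    "turn right": "rotate_right",
    "stop": "halt",
}


def find_action(transcript):
    """Return the action for the earliest trigger phrase in the transcript,
    matching whole words only, or None if no trigger is present."""
    text = " ".join(transcript.lower().split())  # normalize case and spacing
    best = None  # (position in text, action)
    for phrase, action in TRIGGERS.items():
        m = re.search(r"\b" + re.escape(phrase) + r"\b", text)
        if m and (best is None or m.start() < best[0]):
            best = (m.start(), action)
    return best[1] if best else None


print(find_action("Hey robot, please TURN LEFT now"))  # rotate_left
print(find_action("check the stopwatch"))              # None
```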

Translating Commands: How Robots Interpret Instructions

When you tell a robot to “grab the blue cup,” it doesn’t just hear noise; speech recognition technology transcribes your sound waves into text.

Your verbal command becomes a computational puzzle: algorithms first assemble the phonetic fragments into words, then analyze those words for their semantic meaning and the action you intend.

The robot’s brain then matches these decoded instructions against its programmed capabilities, deciding whether it can actually execute what you’ve asked—turning human speech into robotic movement with remarkable, almost magical precision.
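The “grab the blue cup” flow (transcript, then a structured command, then a capability check) can be sketched with simple word lookups. Real systems use trained semantic parsers; the vocabulary, the `Command` fields, and the capability set below are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical vocabulary for a small pick-and-place robot.
VERBS = {"grab": "pick", "pick": "pick", "fetch": "fetch", "bring": "fetch"}
COLORS = {"red", "green", "blue"}
OBJECTS = {"cup", "ball", "block"}
# Hypothetical capabilities: this robot has an arm but cannot drive off to fetch things.
CAPABILITIES = {"pick"}


@dataclass
class Command:
    action: str
    obj: str
    color: Optional[str] = None


def parse(text: str) -> Optional[Command]:
    """Extract an action, color, and object from a transcript via word lookups."""
    words = re.findall(r"[a-z]+", text.lower())
    action = next((VERBS[w] for w in words if w in VERBS), None)
    color = next((w for w in words if w in COLORS), None)
    obj = next((w for w in words if w in OBJECTS), None)
    if action is None or obj is None:
        return None
    return Command(action, obj, color)


def decide(text: str) -> str:
    """Check a parsed command against what the robot can actually do."""
    cmd = parse(text)
    if cmd is None:
        return "Sorry, I didn't understand."
    if cmd.action not in CAPABILITIES:
        return f"I can't {cmd.action} things yet."
    target = f"{cmd.color} {cmd.obj}" if cmd.color else cmd.obj
    return f"executing {cmd.action}({target})"


print(decide("Grab the blue cup."))  # executing pick(blue cup)
print(decide("Fetch the red ball"))  # I can't fetch things yet.
```

Keeping parsing and the capability check separate means the same parser can serve robots with different hardware.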

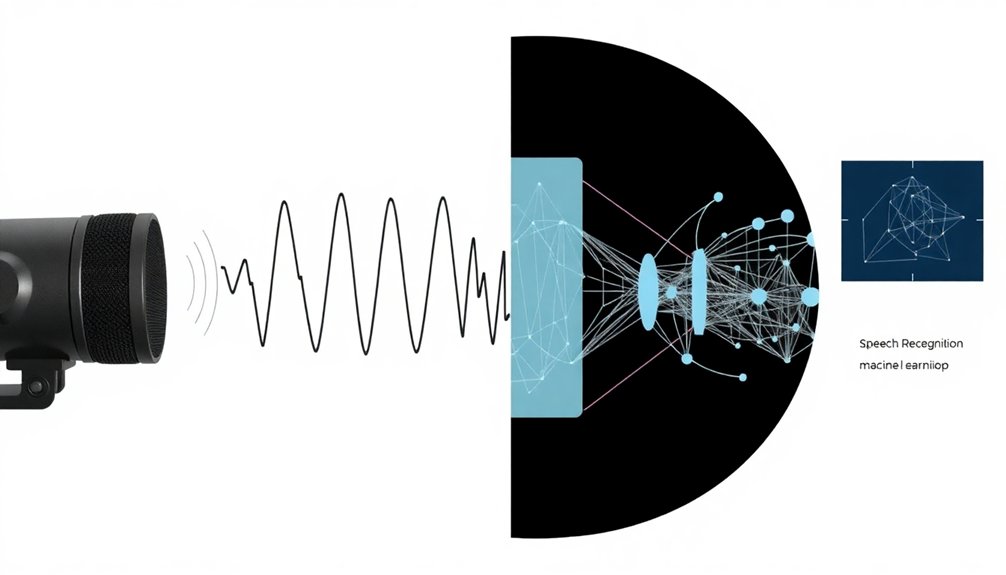

Speech Recognition Process

To work out what humans are actually saying, robots rely on speech recognition systems. These high-tech translators break your vocal commands into tiny pieces, transforming sound waves into actionable instructions. But how do they do it?

- Audio signals get captured and transformed into tiny sound units called phonemes.

- Advanced algorithms analyze these phonemes for recognizable language patterns.

- Neural networks help predict and correct potential misunderstandings.

- Semantic parsing converts transcribed text into specific robot actions.

Imagine your robot as a linguistic detective, piecing together contextual clues from your spoken words. It’s not perfect—background noise, accents, and mumbling can throw off its precision.

But with continuous training and smart noise injection techniques, these systems are getting scarily good at decoding human communication.

Command Translation Mechanics

Ever wondered how robots transform your mumbled instructions into precise mechanical actions? Command translation mechanics are where artificial intelligence gets seriously cool. Neural networks, loosely inspired by biological neurons, learn from example data how to turn raw sensory input into useful behavior.

Neural networks act like linguistic alchemists, turning your spoken words into robot-friendly instructions through complex encoder-decoder systems. Think of it like a translator who doesn’t just convert words, but understands intent.

Noise injection techniques during training mean robots can handle your garbled commands like a pro. When you say “move forward” (or something that sounds vaguely like it), an automatic speech recognition (ASR) system decodes your speech into text and sends crisp instructions to a microcontroller.

The recognized text can be boiled down to a single character such as “F”, which an Arduino interprets as “forward”. Boom: your robot springs into action.
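In a simple build like this, the step between recognized text and that “F” can be just a lookup table. The sketch assumes a hypothetical one-character code (F, B, L, R, S) sent as a single ASCII byte over the Bluetooth serial link; the exact letters depend on how you program your Arduino.

```python
# Hypothetical one-character protocol between the phone app and the robot.
COMMAND_CODES = {
    "move forward": "F",
    "move backward": "B",
    "turn left": "L",
    "turn right": "R",
    "stop": "S",
}


def encode(transcript):
    """Map recognized text to the single byte the microcontroller expects,
    or None if the phrase is not a known command."""
    code = COMMAND_CODES.get(" ".join(transcript.lower().split()))
    return code.encode("ascii") if code else None


print(encode("Move forward"))  # b'F'
print(encode("dance"))         # None
```

One byte per command keeps the microcontroller's job trivial: read a byte, compare it to a handful of letters, act.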

It’s language processing meets robotic precision. Pretty slick, right?

Robot Decision Making: Matching Commands to Actions

To act on your voice, a robot has to transform your commands into something it can actually understand. Your spoken words get converted into text, then mapped to specific actions through a clever translation process. Think of it like teaching a very literal friend a new language. Neural networks, loosely inspired by how brains process sensory input, help robots refine how they interpret commands.

The robot’s decision-making involves:


- Matching specific text inputs to predefined character commands

- Using neural networks to improve command interpretation accuracy

- Implementing noise injection techniques to handle recognition discrepancies

- Leveraging Bluetooth communication for wireless real-time interactions

Essentially, when you bark “Move forward!”, your voice is converted to text and that text is mapped to a simple ‘F’ directive. It’s not magic, just pattern matching that turns your spoken words into precise mechanical movements.

Who knew robots could be such good listeners?

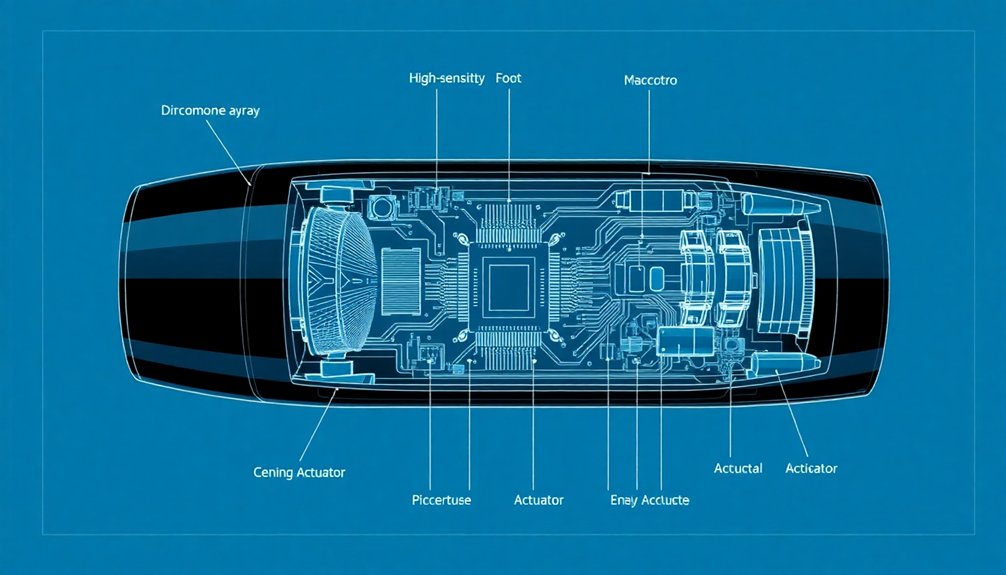

Hardware Components: Microphones, Processors, and Actuators

Turning voice commands into robot actions isn’t just software magic—it’s a hardware ballet of precision components working in perfect sync.

Microphones are your robot’s ears, capturing sound waves and converting them into electrical signals, ideally with noise cancellation to keep background sounds out.

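Processors (think tiny Arduino brains) then crunch these signals, decoding whether you want the robot to move left, right, or do a victory dance. In a build like the one in the FAQ below, a smartphone app handles the speech-to-text work and the Arduino only decodes the resulting command.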

Actuators are the muscle, transforming electrical commands into physical motion through motors and servos.
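On the actuator side, the microcontroller's job is often just to turn a command character into logic levels on a motor driver's input pins. The Python sketch below simulates that mapping for an L298N dual H-bridge motor driver, a common choice in hobby builds. The wiring (left wheel on IN1/IN2, right wheel on IN3/IN4), the single-character codes, and the spin-in-place turning strategy are assumptions; on a real Arduino the same table would drive `digitalWrite` calls.

```python
# Assumed wiring: left wheel on IN1/IN2, right wheel on IN3/IN4.
# On each L298N channel, one input HIGH and the other LOW spins the motor one
# way, swapping them reverses it, and both LOW stops it. Which direction counts
# as "forward" depends on how the motors are wired.
HIGH, LOW = 1, 0
FWD, REV, OFF = (HIGH, LOW), (LOW, HIGH), (LOW, LOW)

MOTOR_STATES = {
    "F": (FWD, FWD),  # both wheels forward
    "B": (REV, REV),  # both wheels backward
    "L": (REV, FWD),  # spin left in place
    "R": (FWD, REV),  # spin right in place
    "S": (OFF, OFF),  # stop
}


def pin_levels(code):
    """Return IN1..IN4 levels for a one-character command; unknown codes stop."""
    left, right = MOTOR_STATES.get(code, MOTOR_STATES["S"])
    return {"IN1": left[0], "IN2": left[1], "IN3": right[0], "IN4": right[1]}


print(pin_levels("F"))  # {'IN1': 1, 'IN2': 0, 'IN3': 1, 'IN4': 0}
```

Falling back to “stop” for any unrecognized byte is a cautious default: a garbled command should leave the robot parked, not moving.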

Want wireless control? Bluetooth modules let you bark orders from your smartphone, creating a seamless communication highway between human and machine.

It’s like having a high-tech translator that turns your spoken words into robotic actions, making science fiction feel suspiciously close to reality.

Real-World Examples: Voice Control in Home and Service Robots

Several kinds of voice-controlled devices are already transforming how we interact with technology, turning sci-fi fantasies into everyday reality. Voice control has become surprisingly practical and intuitive across multiple robotic platforms.

- Amazon Echo translates spoken requests into smart home device management.

- Robotic vacuum cleaners activate and navigate via hands-free vocal instructions.

- Service robots in hospitality settings respond to guest inquiries instantly.

- Neural network-enhanced robots understand commands even in noisy environments.

Imagine telling your vacuum to clean while you’re lounging on the couch, or instructing a hotel robot to fetch extra towels without lifting a finger. These aren’t wild dreams anymore—they’re happening right now.

Advanced speech recognition technology has bridged the gap between human communication and robotic execution, making our interaction with machines smoother and more natural than ever before. Who would’ve thought talking to robots could be this easy?

Challenges and Future of Robotic Speech Interaction

While robotic speech interaction sounds like a technological dream, the reality is more like a frustrating game of robot telephone. Researchers and engineers are wrestling with complex challenges in making robots genuinely understand human language. The goal? Create machines that don’t just hear words, but comprehend intent.

| Challenge | Impact |
|---|---|
| Noise Recognition | Misunderstood Commands |
| Semantic Parsing | Robotic Confusion |
| Training Complexity | Limited Skill Adaptation |
| Language Variability | Unpredictable Responses |
| User Intent Decoding | Potential Task Failures |

Cutting-edge research is pushing boundaries, using noise injection techniques and unified neural models to improve robotic comprehension. Imagine a future where service robots can seamlessly interpret your mumbled, caffeine-fueled morning instructions. We’re not quite there yet, but the path is getting smoother, one misinterpreted command at a time.

People Also Ask About Robots

How Does a Robot Voice Work?

A microphone captures your voice and transforms the sound waves into digital signals. Speech recognition algorithms then decode your speech into text, which the robot’s software interprets and translates into specific actions.

How Do Talking Robots Work?

You’ll interact with talking robots through speech recognition technology that converts your voice into text, which a microcontroller then interprets and transforms into specific actions using semantic parsing and wireless communication.

Are 1X Robots Voiced by Humans?

No, 1X robots aren’t directly voiced by humans. Instead, teleoperators train them using voice commands, enabling the robots to learn and execute complex tasks autonomously.

How to Make a Voice Command Robot?

You’ll need an Arduino Nano, an L298N motor driver, an HC-05 Bluetooth module, and DC motors. Design a chassis, program your Arduino to interpret commands, and create an Android app that converts speech to text for controlling your robot.

Why This Matters in Robotics

Voice tech is wild, right? Some forecasts have predicted that over 75% of households would have voice-activated devices by 2025. Think about it: robots are basically learning to understand our weird human chatter, turning sound waves into precise instructions. They’re not just listening; they’re interpreting, deciding, and acting. Sure, it sounds like sci-fi, but we’re living it now. The future isn’t just talking to machines; it’s machines talking back, and actually getting it.