Robots aren’t just metal puppets anymore—they’re sensing machines that map their world through high-tech eyes, ears, and touch. Imagine cameras that decode visual landscapes in milliseconds, microphones translating sound waves into digital instructions, and pressure sensors feeling textures like a surgeon’s fingertips. AI algorithms transform raw sensor data into intelligent insights, letting robots navigate complex environments with increasing precision. Want to know how they’re evolving from blind machines to perceptive beings? Stick around.

The Sensory Symphony: Understanding Robotic Perception

When you think about robots, you probably imagine cold, mechanical beings blindly bumping into walls. But here’s the truth: modern robots are sensory masterpieces. They don’t just move—they perceive.

Vision sensors transform cameras into robotic eyeballs, scanning environments with laser-like precision. Tactile sensors give them a sense of touch more nuanced than you’d expect, allowing them to handle delicate objects like surgical instruments or fragile glassware.

Robotic senses revolutionize perception: cameras as razor-sharp eyes, touch sensors handling precision beyond human capability.

These sophisticated sensors let robots understand their world in ways humans can’t. Imagine a robot that can detect toxic gases before you even smell them or navigate complex spaces with millimeter-perfect accuracy. Through machine learning algorithms, robots continuously adapt and improve their sensory perception, transforming raw data into intelligent environmental understanding.

They’re not just machines; they’re intelligent systems constantly processing data, making split-second decisions that keep them—and potentially you—safe. Pretty cool, right?

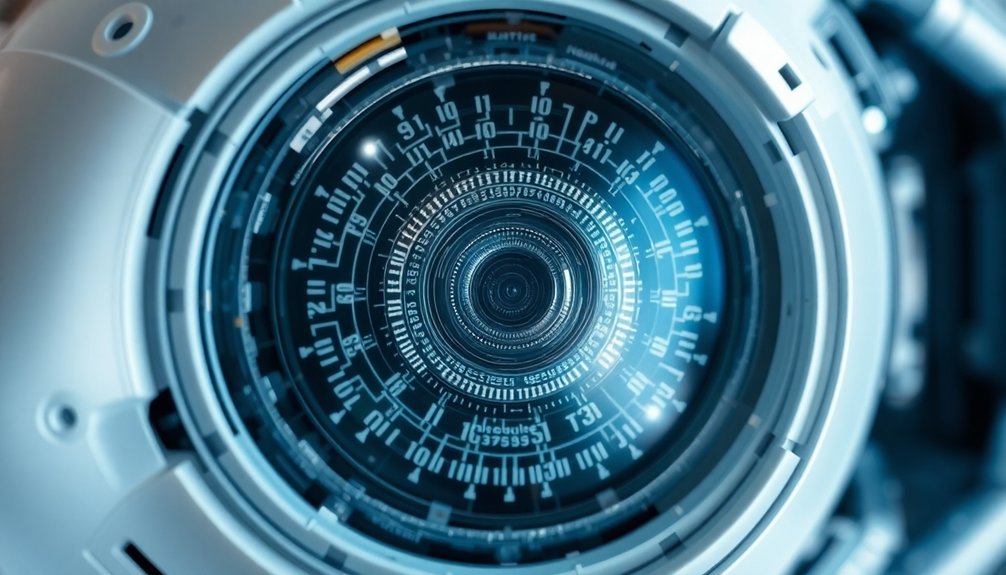

Seeing Beyond Sight: Camera Technologies in Robotics

You’ve probably wondered how robots actually see the world, and trust me, it’s way more complex than your smartphone camera.

Vision processing algorithms and multi-spectral imaging systems are the secret sauce that transform robotic cameras from simple image collectors into intelligent perception machines that can interpret depth, color, and context in milliseconds.

Imagine a robot that can not only see a chair but also understand its material, structural integrity, and potential usefulness. That’s the crazy future we’re racing towards.

Vision Processing Algorithms

Because robots need more than just metal and circuits to navigate our wild, unpredictable world, vision processing algorithms have become the digital eyeballs transforming mechanical machines into intelligent explorers.

These algorithms turn basic sensors into sophisticated vision systems, decoding visual data with lightning-fast precision. Imagine a robot that can recognize objects, estimate depth, and plot a navigation path in milliseconds. That’s not science fiction; that’s today’s reality.

Object recognition isn’t just about seeing; it’s about understanding. Advanced algorithms slice through complex visual landscapes, segmenting images and extracting critical features that help robots make split-second decisions.

They’re like brainy translators, converting raw camera inputs into meaningful insights that transform how machines interact with their environment. Neuromorphic computing is revolutionizing machine perception by enabling more adaptive and energy-efficient vision processing technologies.
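To make "segmenting images and extracting features" a bit more concrete, here is a minimal sketch in plain Python. It assumes a tiny grayscale frame stored as a list of lists, a fixed brightness threshold, and a bounding box as the only "feature"; the function name `segment_bright_regions` and the sample frame are made up for illustration. Real vision stacks are far more elaborate, but the idea is the same: group pixels that belong together, then describe each group.

```python
from collections import deque


def segment_bright_regions(image, threshold):
    """Label 4-connected regions of pixels brighter than `threshold`.

    Returns one bounding box (top, left, bottom, right) per region.
    """
    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    boxes = []
    for r in range(rows):
        for c in range(cols):
            if image[r][c] <= threshold or seen[r][c]:
                continue
            # Flood-fill one region, tracking its bounding box as a simple feature.
            top, left, bottom, right = r, c, r, c
            queue = deque([(r, c)])
            seen[r][c] = True
            while queue:
                y, x = queue.popleft()
                top, bottom = min(top, y), max(bottom, y)
                left, right = min(left, x), max(right, x)
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and not seen[ny][nx] and image[ny][nx] > threshold:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            boxes.append((top, left, bottom, right))
    return boxes


# Hypothetical 4x5 grayscale frame (0-255) containing two bright blobs.
frame = [
    [10, 200, 210, 10, 10],
    [10, 220, 10, 10, 10],
    [10, 10, 10, 10, 250],
    [10, 10, 10, 240, 245],
]
boxes = segment_bright_regions(frame, threshold=128)
print(boxes)  # [(0, 1, 1, 2), (2, 3, 3, 4)]
```

Each box is something a downstream step could act on, for example "there is an object in the top-left, steer around it".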

Multi-Spectral Imaging Systems

If traditional cameras are like human eyes squinting through a keyhole, multi-spectral imaging systems are like having x-ray vision superpowers.

These robot perception technologies can totally revolutionize how robots perceive their environment by:

- Detecting temperature variations invisible to humans

- Analyzing chemical compositions through specialized wavelengths

- Identifying material properties beyond surface appearances

- Enhancing navigation in challenging visual conditions

Multi-spectral imaging systems let robots see what we can’t, transforming their decision-making capabilities.

By integrating machine learning algorithms, these cameras capture data across multiple electromagnetic spectrum ranges, turning robots into sensing superheroes.

They’ll detect hidden features, classify materials with crazy precision, and navigate environments that would leave human eyes totally confused.
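One simple way to picture how extra wavelengths help classify materials is a normalized difference index: compare two spectral bands pixel by pixel and see which one dominates. The sketch below assumes two already-aligned bands stored as lists of lists; the names `normalized_difference`, `nir`, and `red` and the sample values are illustrative, not taken from any particular camera. The same formula is widely used in remote sensing (NDVI), where healthy vegetation reflects much more near-infrared than red light.

```python
def normalized_difference(band_a, band_b):
    """Per-pixel (a - b) / (a + b); returns 0.0 where both bands are zero."""
    result = []
    for row_a, row_b in zip(band_a, band_b):
        out_row = []
        for a, b in zip(row_a, row_b):
            total = a + b
            out_row.append((a - b) / total if total else 0.0)
        result.append(out_row)
    return result


# Hypothetical 2x2 reflectance values for a near-infrared and a red band.
nir = [[0.8, 0.2], [0.0, 0.5]]
red = [[0.2, 0.2], [0.0, 0.1]]
index = normalized_difference(nir, red)

# Pixels with a high index reflect far more near-infrared than red.
high_contrast = [[value > 0.5 for value in row] for row in index]
```

Two pixels that look identical in visible light can land on opposite ends of this index, which is the kind of "hidden feature" a multi-spectral system can pick up.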

Want to know the future of robotics? It’s not about seeing — it’s about perceiving.

Listening and Learning: Sound Interpretation Mechanisms

When sound meets silicon, robots transform from mere mechanical devices into listening, learning machines. Sound sensors detect sound waves like high-tech ears, allowing robots to interpret auditory information with impressive precision. They’re not just hearing—they’re understanding. Acoustic signal processing enables robots to break down sound waves into detailed audio fingerprints, translating complex human communication into precise digital instructions.

Robots equipped with these sophisticated systems can follow verbal commands, dramatically enhancing their adaptability in complex environments. Imagine a robot that doesn’t just respond to programming, but actually listens and adapts.
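The "audio fingerprint" idea usually starts with turning a waveform into its frequency content. Here is a rough sketch using a naive discrete Fourier transform in plain Python. It assumes a short buffer of samples and a known sample rate; `dominant_frequency` and the test tone are hypothetical. Production systems would use an optimized FFT library and much richer features.

```python
import cmath
import math


def dominant_frequency(samples, sample_rate):
    """Return the frequency (Hz) of the strongest non-DC bin of a naive DFT."""
    n = len(samples)
    best_bin, best_mag = 0, 0.0
    # Scan positive frequencies up to Nyquist, skipping the DC bin (k = 0).
    for k in range(1, n // 2 + 1):
        total = sum(
            s * cmath.exp(-2j * math.pi * k * i / n) for i, s in enumerate(samples)
        )
        if abs(total) > best_mag:
            best_bin, best_mag = k, abs(total)
    return best_bin * sample_rate / n


# Hypothetical input: 64 samples of a pure 100 Hz tone recorded at 800 Hz.
sample_rate = 800
tone = [math.sin(2 * math.pi * 100 * i / sample_rate) for i in range(64)]
peak_hz = dominant_frequency(tone, sample_rate)
```

With these numbers the tone falls exactly on a DFT bin, so `peak_hz` comes out at 100 Hz. A speech recognizer tracks how many such peaks shift over time rather than a single value.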

Robots evolve from rigid machines to adaptive listeners, transforming complex commands into intelligent, nuanced responses.

But here’s the real kicker: some robots are pushing boundaries by using laser technologies to detect sound wave vibrations, effectively giving them superhuman hearing capabilities. They’re bridging the gap between mechanical processing and genuine comprehension.

Who’d have thought silicon could be so… attentive? By integrating sound interpretation with vision sensors, these machines are becoming less like cold, unfeeling robots and more like intelligent companions.

Touch, Feel, and Respond: Tactile Sensor Networks

Fingertips of steel, meet the future of robotic perception. Tactile sensors are revolutionizing how robots understand their world, turning cold machinery into intelligent explorers.

Consider these mind-blowing capabilities:

- Measuring pressure with microscopic precision

- Detecting material differences in milliseconds

- Maneuvering complex environments seamlessly

- Responding to physical objects like living organisms

Imagine a robot vacuum cleaner that doesn’t just bump around randomly, but actually feels and understands its surroundings. Advanced neural networks are enabling robots to interpret complex touch signals with unprecedented accuracy.

These sensors transform machines from clumsy tools into sophisticated explorers, enhancing their ability to interact with the environment. They convert physical touch into electrical signals, allowing robots to “feel” just like humans do – except without the emotional baggage.
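As one possible illustration of "physical touch into electrical signals", the sketch below assumes a force-sensitive resistor (FSR) wired in a voltage divider with a fixed resistor, read by a 10-bit analog-to-digital converter. All the numbers (3.3 V supply, 10 kΩ fixed resistor, 50 kΩ contact threshold) and the function names are assumptions for the example, not values from any specific robot.

```python
def fsr_resistance(adc_counts, v_supply=3.3, r_fixed=10_000.0, adc_max=1023):
    """Estimate FSR resistance (ohms) from an ADC reading across the fixed resistor.

    Assumed divider: V_out = V_supply * R_fixed / (R_fixed + R_fsr).
    """
    v_out = adc_counts / adc_max * v_supply
    if v_out <= 0.0:
        return float("inf")  # no measurable voltage: treat the pad as untouched
    return r_fixed * (v_supply - v_out) / v_out


def is_touching(adc_counts, threshold_ohms=50_000.0):
    """Pressing an FSR lowers its resistance, so low resistance means contact."""
    return fsr_resistance(adc_counts) < threshold_ohms


# Hypothetical readings: untouched, light brush, firm press.
for counts in (0, 100, 512):
    print(counts, is_touching(counts))
```

A gripper could run a check like this on every fingertip pad and ease off as soon as the resistance drops faster than expected.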

Want to know the future of robotics? It’s all about touch. Literally.

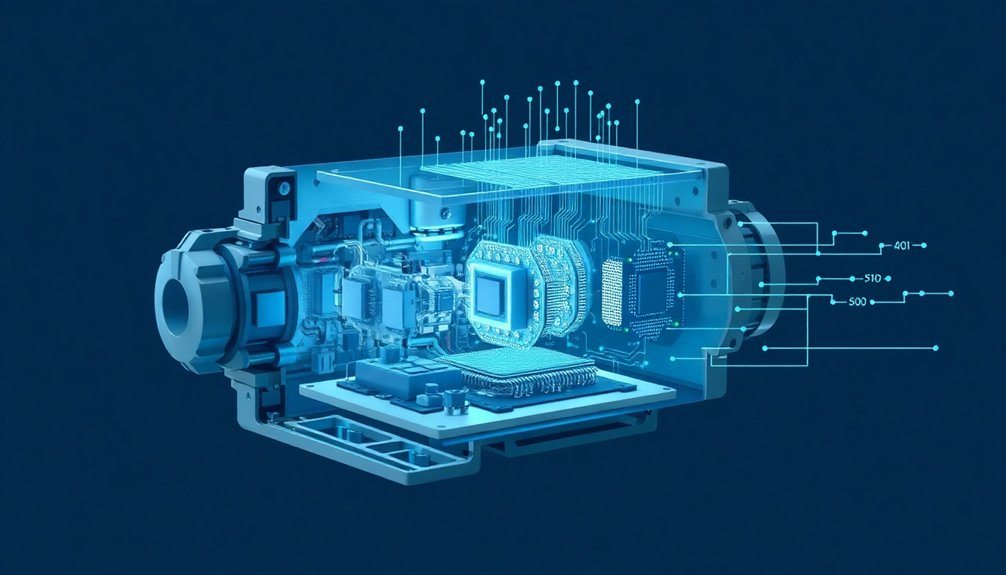

From Raw Data to Intelligent Insight: AI Sensor Processing

You’ve seen robots bumble around like toddlers with limited awareness, but data translation algorithms are changing the game, turning raw sensor inputs into razor-sharp insights.

Intelligent sensor fusion lets machines stitch together information from cameras, infrared, sound, and touch sensors faster than you can blink, creating a thorough understanding of their environment that would make a sci-fi movie director weep.

Think of it like your brain processing multiple streams of information simultaneously—except these robot brains can filter, analyze, and respond with machine-level precision that makes human perception look like a flickering candle next to a searchlight.

Deep reinforcement learning enables robots to transform sensor data into adaptive, intelligent behaviors that continuously improve through experience and simulation.

Data Translation Algorithms

Because robots aren’t mind readers (yet), they rely on data translation algorithms to make sense of their crazy, complex world. Sensors allow robots to decode environmental conditions through clever computational magic:

- Measure the distance between objects with laser-precision

- Recognize objects faster than you can blink

- Fuse multi-sensor data into coherent insights

- Transform raw inputs into actionable intelligence

These algorithms aren’t just processing numbers—they’re creating digital understanding. Sensor fusion techniques enable robots to integrate multiple sensory inputs, creating a more comprehensive understanding of their environment.

Imagine your robot buddy scanning a room, filtering out noise, and interpreting its surroundings with machine learning wizardry. It’s like giving a computer brain superhuman perception, turning scattered sensor signals into a crisp, thorough environmental snapshot.

The result? Robots that don’t just see the world, but truly comprehend it, making split-second decisions that can navigate complexity with shocking accuracy.
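A classic way to fuse two noisy measurements of the same thing is inverse-variance weighting: trust each sensor in proportion to how precise it is. The sketch below assumes each sensor reports a distance together with an estimate of its own variance; `fuse_estimates` and the sample numbers are illustrative only.

```python
def fuse_estimates(readings):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Returns (fused_value, fused_variance).
    """
    weights = [1.0 / variance for _, variance in readings]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, readings)) / total
    return fused, 1.0 / total


# Hypothetical distance to the same wall, in meters:
# a noisy ultrasonic reading and a more precise LiDAR reading.
ultrasonic = (2.10, 0.04)
lidar = (2.00, 0.01)
fused_distance, fused_variance = fuse_estimates([ultrasonic, lidar])
```

Here the fused distance lands close to the LiDAR value (about 2.02 m), and the fused variance is smaller than either sensor's alone, which is the whole point of combining them.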

Intelligent Sensor Fusion

When robots need to make sense of their world, intelligent sensor fusion becomes their secret superpower. It’s like giving machines a superhuman ability to combine data from cameras, LiDAR, and gas sensors into one epic perception package.

AI algorithms transform raw sensory inputs into meaningful insights, helping robotic systems understand their environment with mind-blowing precision.

Imagine a robot that doesn’t just see, but truly comprehends. By blending external and internal data streams, these smart machines can recognize patterns, dodge obstacles, and adapt on the fly.

Machine learning kicks this capability into overdrive, continuously refining how robots perceive and interact with complex surroundings. Who wouldn’t want a sidekick that gets smarter with every single movement?

Inertial sensors like accelerometers and gyroscopes let robots track changes in their own motion and orientation with high accuracy, enhancing their ability to process and respond to complex sensory information.
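Accelerometers and gyroscopes are often blended with a complementary filter: the gyroscope is smooth but drifts over time, while the accelerometer is noisy but anchored to gravity. The sketch below is a minimal single-axis version. The blend factor `alpha=0.98`, the axis convention, and the simulated readings are assumptions for the example.

```python
import math


def complementary_filter(angle_deg, gyro_rate_dps, accel_x, accel_z, dt, alpha=0.98):
    """Blend an integrated gyro angle with an accelerometer tilt angle (degrees)."""
    gyro_angle = angle_deg + gyro_rate_dps * dt  # short-term: integrate rotation rate
    accel_angle = math.degrees(math.atan2(accel_x, accel_z))  # long-term: gravity direction
    return alpha * gyro_angle + (1.0 - alpha) * accel_angle


# Hypothetical scenario: the robot sits still, tilted 10 degrees,
# and the filter starts from a wrong initial guess of 0 degrees.
angle = 0.0
for _ in range(500):
    angle = complementary_filter(
        angle,
        gyro_rate_dps=0.0,
        accel_x=math.sin(math.radians(10.0)),
        accel_z=math.cos(math.radians(10.0)),
        dt=0.01,
    )
```

After a few hundred updates the estimate settles very close to the true 10 degree tilt, even though the gyro alone would have stayed stuck at zero.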

Navigating Complex Environments: Sensor Integration Strategies

If robots are going to survive in the wild world beyond controlled laboratory environments, they’ll need some seriously smart sensing strategies. Here’s how they’ll do it:

- Combine multiple sensors like LiDAR, cameras, and gas detectors

- Fuse proprioceptive and exteroceptive data for better awareness

- Process sensor information with machine learning algorithms

- Adapt movements based on real-time environmental changes

Robotic navigation isn’t just about avoiding walls—it’s about understanding the world. By integrating sensors used across different domains, robots can synthesize complex information faster than you’d imagine. Reinforcement learning techniques enable robots to continuously refine their sensing and decision-making capabilities through advanced simulation technologies.

Sensor fusion transforms raw data into actionable insights, allowing machines to “see” depth, detect hazards, and make split-second decisions. Think of it like giving a robot superhuman perception: stereo cameras measuring distances, LiDAR mapping 3D spaces, internal sensors tracking movement.

The result? Robots that don’t just move through environments—they comprehend them.
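The stereo camera trick mentioned above boils down to one formula for an idealized, rectified camera pair: depth equals focal length times baseline divided by disparity, where disparity is how far a point shifts between the left and right images. The sketch below assumes a focal length in pixels and a baseline in meters; the function name and the sample values are made up for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        return float("inf")  # no measurable shift: point is effectively at infinity
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, cameras 12 cm apart.
near = depth_from_disparity(42.0, focal_length_px=700.0, baseline_m=0.12)
far = depth_from_disparity(7.0, focal_length_px=700.0, baseline_m=0.12)
```

With these numbers a 42 pixel shift works out to roughly 2 m and a 7 pixel shift to roughly 12 m. Because depth is inversely proportional to disparity, distant objects produce tiny shifts, which is one reason stereo depth gets less reliable with range.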

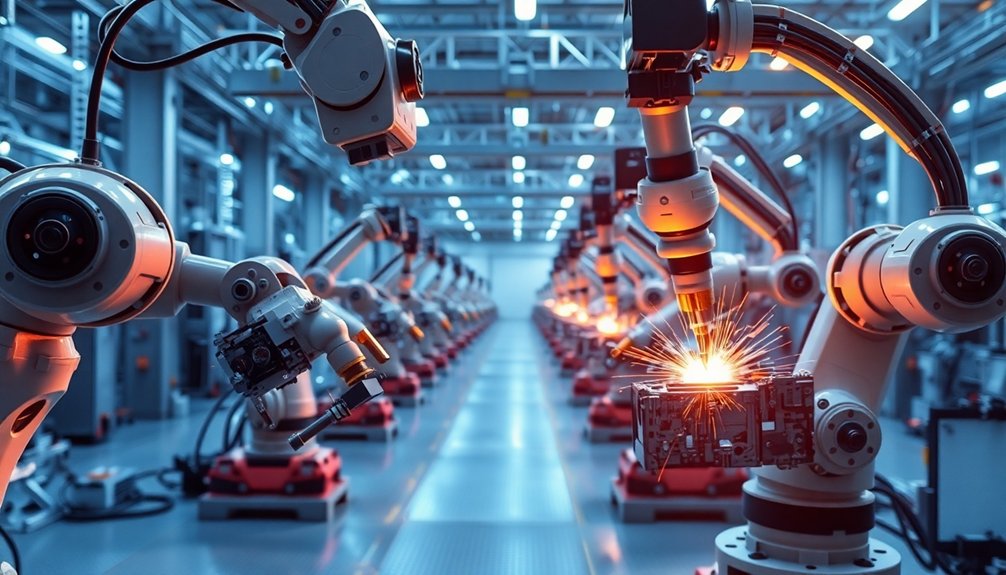

Real-World Applications: Sensors Transforming Industries

While sensors might sound like boring tech jargon, they’re actually the secret sauce transforming entire industries from the ground up.

From operating rooms to factory floors, robots use incredible sensor technologies to see, touch, and navigate like never before. Visual sensors help industrial robots inspect products with eagle-eye precision, catching defects humans might miss.

In healthcare, these tiny technological marvels let robots assist surgeons with superhuman accuracy. Self-driving cars rely on sensor networks that map environments in milliseconds, turning roads into intelligent pathways.

Home robots like vacuum cleaners now dance around furniture using proximity sensors that make old-school cleaning look downright prehistoric. Machine learning algorithms enable robots to continuously improve their sensor-driven perception and adaptability across various environments.

Want to know the crazy part? We’re just scratching the surface of how robots will interact with our world through these mind-blowing sensor technologies.

Challenges and Limitations of Current Robotic Sensing

Despite the incredible promise of robotic sensing technologies, today’s robots aren’t exactly superhuman — yet.

Sensors are used in complex environments, but they’re wrestling with some serious limitations:

- Accuracy and reliability drop when environmental factors like weird lighting or interference mess with their perception.

- Computational limits make real-time decision-making feel like watching a GPS recalculate during rush hour.

- Sensor noise turns clean readings into a messy signal, and even advanced algorithms struggle to filter all of it out.

- Limited sensor range means robots can struggle in spaces humans find trivial to navigate.
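To give a feel for the noise problem in the list above, here is a small sketch of a sliding median filter, one common way to knock out isolated spikes in a sensor stream. The window size and the sample readings are assumptions, and `median_filter` is a hypothetical helper built on the standard library's `statistics.median`.

```python
from statistics import median


def median_filter(samples, window=3):
    """Replace each sample with the median of its neighborhood (edges use fewer samples)."""
    half = window // 2
    return [
        median(samples[max(0, i - half): i + half + 1])
        for i in range(len(samples))
    ]


# Hypothetical range readings in meters, with one glitch at index 2.
raw = [1.00, 1.02, 9.50, 1.01, 0.99, 1.03]
smoothed = median_filter(raw)
```

The 9.50 spike disappears because a single outlier never becomes the median of its window. The trade-off is a little lag and lost detail, which is exactly the kind of compromise that makes real-time sensing hard.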

Think of current robotic sensing like a teenager learning to drive — lots of potential, but plenty of awkward moments.

The technology’s improving, but we’re not quite at the “robot overlords” stage. For now, these mechanical explorers are more like curious toddlers, constantly learning but prone to hilarious mistakes.

Deep reinforcement learning enables robots to evolve through experience, gradually improving their sensing and decision-making capabilities.
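Deep reinforcement learning itself needs a neural network and a lot of training data, so it does not fit in a short snippet. As a stand-in, here is the much simpler tabular Q-learning update, which shows the same "learn from experience" loop in a few lines. The states, actions, reward, and learning parameters are all invented for the example.

```python
def q_update(q_table, state, action, reward, next_state, alpha=0.5, gamma=0.9):
    """Apply one tabular Q-learning update and return the new Q-value."""
    best_next = max(q_table[next_state].values())  # value of the best follow-up action
    target = reward + gamma * best_next
    q_table[state][action] += alpha * (target - q_table[state][action])
    return q_table[state][action]


# Hypothetical two-state world for a robot approaching a wall.
q = {
    "near_wall": {"forward": 0.0, "turn": 0.0},
    "clear": {"forward": 1.0, "turn": 0.0},
}
# The robot turned away from the wall and ended up in open space.
new_value = q_update(q, "near_wall", "turn", reward=0.0, next_state="clear")
```

After this single experience, turning near a wall looks a little more attractive than driving forward. Deep variants replace the lookup table with a neural network fed by raw sensor data, but the update follows the same logic.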

The Future of Robotic Perception: Emerging Sensor Technologies

As robotic sensing technologies stumble through their awkward adolescence, a revolution is brewing in sensor design that’ll make today’s clunky machines look like prehistoric relics.

Emerging sensor technologies are transforming how robots perceive their world. Imagine LiDAR systems creating ultra-detailed 3D maps that help robots navigate like seasoned explorers, while multi-sensor perception lets them recognize objects with near-human precision.

Robotic vision systems are getting smarter, using Explainable AI to show exactly how they’re thinking. Tactile sensors now mimic human touch so precisely that robots can feel the difference between silk and sandpaper.

Chemical sensing capabilities are expanding too, with e-tongue technologies that can analyze substances faster than any human lab tech. These sensors aren’t just measuring—they’re understanding.

People Also Ask About Robots

How Do Automated Robots Perceive Their Surrounding Environment?

Robots perceive their environment through sensors like cameras, LiDAR, and gas detectors, which capture visual, spatial, and chemical data. That data helps them navigate, recognize objects, detect hazards, and make informed decisions in complex surroundings.

How Do Robots Interact With the Physical World via Sensors?

In a warehouse, an autonomous forklift uses proximity sensors to detect obstacles and navigate around shelves and workers. Robots like this convert sensor data into real-time decisions, transforming raw environmental information into precise mechanical actions.

How Do Robots Sense Their Surroundings?

Robots sense their surroundings through cameras, LiDAR, and gas sensors that capture visual, spatial, and chemical data. These technologies enable them to navigate, detect obstacles, recognize objects, and monitor environmental conditions with precision.

How Do Robots Use Sensors to Detect and Respond to Their Environment?

Robots use diverse sensors to gather environmental data, converting analog signals into digital readings. They quickly process that information, making split-second decisions to navigate, detect obstacles, and interact safely with their surroundings.

Why This Matters in Robotics

You’ve seen how robots are evolving from clunky machines to sophisticated sensory beings. They’re not just collecting data anymore; they’re interpreting the world like living, breathing entities. As sensor technologies advance, you’ll witness machines that can feel, hear, and understand environments with unprecedented depth. The line between artificial and organic perception is blurring, and you’re watching it happen in real-time. Get ready for a revolution where robots truly see, not just look.