
When a robot messes up, you’re looking at a legal hot potato. Manufacturers might get blamed for design flaws, users could be on the hook for misuse, and the robot itself? Currently just a fancy paperweight in legal terms. As AI gets smarter, accountability gets murkier. Courts are wrestling with who’s responsible when algorithms go rogue. Want to know how this robot blame game might reshape our future? Stick around.

The Emerging Legal Landscape of Robot Accountability

While robots might seem like sci-fi fantasies, they’re rapidly becoming our everyday reality—and the legal world is scrambling to catch up. Emerging liability frameworks are revealing the complex challenges of attributing responsibility to autonomous systems.

Who’s responsible when a robot goes rogue? Current laws treat robots like fancy toasters, but autonomous systems are blurring those neat lines. Neuromorphic computing is advancing machine capabilities, making accountability even more complex. Manufacturers might be on the hook, but what happens when an AI makes an unexpected decision?

Different countries are wrestling with these questions, creating a legal patchwork that’s more confusing than a robot’s circuit board. The big challenge? Developing frameworks that balance innovation with accountability.

We need laws that can handle robots that think for themselves, whether they’re delivering packages or working in hospitals. It’s not just about blame; it’s about creating a legal safety net for our increasingly robotic future. Global AI governance efforts, such as those led by UNESCO and the G20, are critical in establishing international standards for robot accountability.

Ethical Dilemmas in Autonomous Decision-Making

You’ve probably wondered how we’ll teach robots to make moral choices without turning them into philosophical disaster zones.

Moral Machine programming tackles this challenge by creating decision frameworks that help autonomous systems navigate ethical minefields—imagine an AI that can weigh consequences like a hyper-rational judge, but without the human baggage of personal bias. Robot liability frameworks are emerging to address the complex questions of accountability when autonomous systems make mistakes.

The goal isn’t to create robot saints, but to build systems that can make nuanced choices that minimize harm and maximize human-aligned outcomes, which sounds simple until you realize how complicated “do no harm” gets when algorithms are involved. Algorithmic decision-making can inadvertently perpetuate societal biases, making ethical oversight crucial in preventing discriminatory outcomes. Opacity in AI systems introduces additional complexity by making it challenging to understand and trace the decision-making processes of autonomous technologies.

Moral Machine Programming

Let’s be real: when robots start making life-or-death decisions, things get complicated fast.

The Moral Machine isn’t just some nerdy research project—it’s basically a digital ethics playground where humans decide who lives or dies in crazy autonomous vehicle scenarios. Think: should a self-driving car save five strangers or protect its own passengers? Ethical algorithms are emerging as critical frameworks for navigating these complex moral trade-offs.

Researchers are wrestling with massive questions: How do you code morality into an algorithm? Can machines genuinely understand complex ethical trade-offs? Diverse cultural perspectives suggest that moral decision-making varies dramatically across different global populations.

The global experiment reveals something fascinating—people worldwide have surprisingly different perspectives on split-second moral choices. Global research insights demonstrate that cultural backgrounds significantly influence ethical decision-making frameworks in autonomous systems.

And here’s the kicker: the robots are listening, learning, and preparing to make judgment calls that could change everything.

Robo-Ethics Decision Frameworks

Imagine robots traversing complex moral landscapes, not just following rigid rules, but understanding nuanced social norms and emotional consequences. They’re learning to balance utilitarianism with dignity, using optimization models that make split-second ethical choices. Global disparities in AI development mean these ethical frameworks are not uniformly designed across different cultural and technological contexts. Algorithmic prejudice reveals that these decision-making systems inherently reflect the biases present in their training data.

Think of it like a high-stakes chess game where every move considers human values and potential harm.
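To make the idea of balancing harm reduction against non-negotiable values a bit more concrete, here is a minimal Python sketch. It assumes a deliberately simple model: some options are ruled out entirely by a hard rule, and among the rest the system picks the lowest estimated harm. The `Option` class, the harm numbers, and the scenario names are all hypothetical illustrations, not how any real robot or vehicle decides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    expected_harm: float      # hypothetical harm estimate, lower is better
    violates_hard_rule: bool  # an action the designers have ruled out entirely


def choose(options: list[Option]) -> Option:
    """Drop rule-violating options, then pick the lowest expected harm."""
    permitted = [o for o in options if not o.violates_hard_rule]
    if not permitted:
        # Nothing acceptable is left, so this sketch refuses to decide.
        raise ValueError("no permitted option; escalate to a human operator")
    return min(permitted, key=lambda o: o.expected_harm)


# Illustrative values only.
options = [
    Option("swerve_onto_sidewalk", expected_harm=0.2, violates_hard_rule=True),
    Option("brake_hard", expected_harm=0.5, violates_hard_rule=False),
    Option("continue", expected_harm=0.9, violates_hard_rule=False),
]
choice = choose(options)
print(choice.name)
```

Notice that the option with the lowest raw harm score is never chosen because it breaks a hard rule. Who sets those rules and those harm estimates is exactly where the accountability question comes back in.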

But here’s the kicker: who’s responsible when things go sideways? Manufacturers? Programmers? The robot itself?

These decision frameworks aren’t just academic exercises—they’re critical blueprints for creating trustworthy autonomous systems that can coexist with humans without causing unintended mayhem.

It’s about building machines that don’t just calculate, but genuinely care.

Liability Challenges in Robotic Systems

When a robot goes rogue and causes chaos, who’s on the hook: the manufacturer who built the damn thing or the user who’s supposed to be steering it? The complexity of robotic liability comes from the tangled interactions between technological design, operational control, and legal accountability. The revised EU Product Liability Directive treats software, including AI systems, as a product, so liability can now extend beyond traditional hardware defects and create new legal challenges for manufacturers and users alike. Robotic law enforcement technologies raise further questions about accountability and potential bias in autonomous systems.

You might think the answer’s simple, but in the wild west of autonomous systems, liability is a tangled web of responsibility that’ll make your head spin.

Manufacturers are increasingly being held accountable for design flaws and unexpected behaviors, while users are expected to maintain operational vigilance—but the line between human control and machine autonomy is getting blurrier by the day.

Manufacturer Responsibility

Because robots are getting smarter—and potentially more dangerous—manufacturers are finding themselves in a legal minefield that’s more complicated than a toddler’s finger-painting session. Autonomous decision-making capabilities introduce unprecedented complexity in determining legal responsibility for robot actions.

You’re looking at a world where robot makers can’t just shrug and say “oops” when something goes wrong. They’re on the hook for every glitch, malfunction, and unexpected robo-tantrum.

Think of it like car manufacturers: if your self-driving vehicle decides to play demolition derby, who’s responsible? The manufacturer’s legal team is scrambling to prove they did everything right.

Strict liability means they can’t easily dodge blame. Safety features, clear warnings, and rigorous testing aren’t just good practice—they’re legal survival tactics.

And as robots get more autonomous, the liability landscape keeps shifting, leaving manufacturers perpetually nervous about their mechanical creations.

User Operational Liability

While manufacturers might breathe a sigh of relief after dodging blame, robot users are now squarely in the legal crosshairs.

You’re not just buying a cool gadget; you’re signing up for a potential legal minefield. Want an autonomous drone? Better know how to handle it, or risk getting slapped with a negligence claim.

The rules are murky, but one thing’s clear: your responsibility doesn’t end when you unbox the robot. Courts are increasingly expecting users to manage risks, follow safety protocols, and understand their machine’s capabilities.

It’s like being a parent to a very expensive, potentially dangerous child. The more autonomy your robot has, the more you’ll need to prove you did everything right.

Think you can just point at the manufacturer? Think again. Welcome to the wild west of robotic liability.

Defining Responsibility in Machine Errors

Ever wondered who’s on the hook when a robot goes rogue? When machines malfunction, accountability gets messy. It’s like a high-stakes game of technological hot potato.

Developers can point fingers at manufacturers, manufacturers blame users, and users scratch their heads wondering how a machine that seemed “smart” suddenly turned into a liability.

The real challenge? Most robotic systems are complex “black boxes” where decision-making processes are about as transparent as mud.

An AI might make a split-second choice that leads to damage, but tracing that choice back to its origin becomes a nightmare. Was it a programming glitch? A user error? A random algorithmic hiccup?

Current legal frameworks aren’t equipped to handle these technological curveballs, leaving us in an accountability twilight zone.
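One practical response to the “black box” tracing problem is to record every decision in a log that is hard to quietly rewrite afterwards. The Python sketch below is one possible approach, assuming a simple hash chain where each entry stores the hash of the one before it. The `DecisionLog` class and its field names (`actor`, `software_version`, `inputs`, `action`) are hypothetical and not drawn from any standard or product.

```python
import hashlib
import json
from dataclasses import dataclass, field


def _digest(body: dict) -> str:
    """Hash an entry's contents in a stable key order."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass
class DecisionLog:
    """Append-only log where each entry is chained to the previous entry's hash."""
    entries: list = field(default_factory=list)

    def append(self, actor: str, software_version: str, inputs: dict, action: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else "0" * 64
        body = {
            "actor": actor,
            "software_version": software_version,
            "inputs": inputs,
            "action": action,
            "prev_hash": prev_hash,
        }
        body["hash"] = _digest(body)  # digest is computed before the hash key is added
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Return False if any entry was altered or the chain was broken."""
        prev_hash = "0" * 64
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = DecisionLog()
log.append("planner", "1.4.2", {"obstacle_distance_m": 0.4}, "emergency_stop")
log.append("operator", "1.4.2", {"override": True}, "resume")
print(log.verify())
```

A log like this doesn’t say who is at fault, but it does help answer the earlier questions: which software version was running, what the machine saw, and whether a human stepped in.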

Privacy and Data Protection in Robotic Operations

When robots start collecting data like digital vacuum cleaners sucking up every digital breadcrumb, privacy suddenly becomes more complicated than a teenage gossip network. You’re not just dealing with machines; you’re maneuvering a minefield of personal information where one wrong step could trigger a data disaster.

| Privacy Level | Risk | Protection Strategy |
|---|---|---|
| Low | Minimal | Basic Encryption |
| Medium | Moderate | Anonymization |
| High | Significant | Advanced Pseudonymization |
| Critical | Extreme | Zero-Access Protocols |

GDPR isn’t just bureaucratic paperwork—it’s your digital bodyguard. Robots must play by strict rules: anonymize sensitive data, guarantee transparent operations, and respect user boundaries. Think of it like teaching a curious toddler about personal space, except this toddler has access to terabytes of information and can process data faster than you can blink.
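As a rough illustration of the pseudonymization row in the table above, here is a minimal Python sketch that swaps direct identifiers for keyed hashes before a robot stores a log record. The key, the field names (`name`, `face_id`), and the sample record are hypothetical. Keep in mind that pseudonymized data generally still counts as personal data under GDPR, so this reduces exposure rather than removing the obligation.

```python
import hashlib
import hmac

# Hypothetical secret; in practice it would live in a key store, not in code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

# Hypothetical field names for a robot's sensor log record.
DIRECT_IDENTIFIERS = {"name", "face_id"}


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Return a stable keyed hash so records can be linked without the raw value."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def scrub_record(record: dict) -> dict:
    """Replace direct identifiers with pseudonyms and leave other fields untouched."""
    return {
        name: pseudonymize(str(value)) if name in DIRECT_IDENTIFIERS else value
        for name, value in record.items()
    }


record = {"name": "Alice Example", "face_id": "f-1029", "room": "kitchen", "event": "greeting"}
scrubbed = scrub_record(record)
print(scrubbed)
```

Because the hash is keyed, the same person maps to the same pseudonym across records, while anyone without the key can’t simply hash a list of names to reverse it.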

The Role of Manufacturers in Robotic Accountability

Because robots aren’t magical fairy machines that spring to life perfectly engineered, manufacturers carry a massive responsibility in ensuring these mechanical marvels don’t become catastrophic liability nightmares.

Robotic innovation demands obsessive precision—manufacturers are guardians against technological chaos and potential disaster.

You can’t just toss a robot into the world and hope for the best. Manufacturers must obsessively design safety protocols, implement rigorous testing, and create systems that practically think like responsible humans.

They’re on the hook for design flaws, negligence, and potential harm caused by their metallic creations. Warning labels, continuous software updates, and thorough user training aren’t optional—they’re survival strategies.

Want to avoid million-dollar lawsuits? Then manufacturers need to treat robot development like brain surgery: precise, thoughtful, and with an acute awareness that one tiny mistake could have massive consequences.

International Regulatory Frameworks for Robotics

You’re living in a wild west of robot regulations where every country’s playing by its own rulebook.

Imagine trying to build a robot that can legally operate from Berlin to Boston without triggering a compliance nightmare – it’s like maneuvering through a legal minefield while juggling delicate circuit boards.

The global regulatory landscape isn’t just complex; it’s a high-stakes chess game where manufacturers must anticipate moves across international boundaries, balancing innovation with an ever-shifting framework of legal expectations.

Global Regulatory Landscape

As global technology races ahead faster than regulators can keep up, the international landscape for robotics is becoming a complex maze of overlapping rules, competing interests, and rapidly evolving frameworks.

You’re looking at a regulatory wild west where everyone’s trying to wrangle autonomous machines into some semblance of order.

Here’s what’s really going down:

- EU’s Machinery Directive is basically playing catch-up with robot technology

- Regulation (EU) 2023/1230, which replaces the Machinery Directive, introduces autonomy thresholds that’ll make manufacturers sweat

- Product liability rules are getting so complex, lawyers are rubbing their hands

- Global AI governance frameworks are emerging like awkward teenage robots

- Research funding is pouring in faster than robot oil

The bottom line? Robots are coming, and no one’s quite sure who’s driving—or who’ll be responsible when things go sideways.

Buckle up.

Legal Compliance Mechanisms

When the robots start wandering into legal gray zones, someone’s gotta draw the lines—and that’s where international regulatory frameworks come in. You’re looking at a complex dance of accountability where manufacturers, operators, and AI systems play chicken with liability.

| Regulatory Aspect | Key Requirement |
|---|---|
| Privacy | GDPR Compliance |
| Safety | Risk Assessment |
| Cybersecurity | Network Resilience |
| Ethical Standards | Human-First Design |
| Accountability | Clear Liability Chains |

These frameworks aren’t just bureaucratic mumbo-jumbo—they’re survival guides for our robotic future. Imagine a world where self-evolving machines can be sued, where AI doesn’t get a free pass for messing up. Regular assessments, transparency requirements, and penalties create guardrails that keep innovation from careening off the ethical cliff. It’s not about stopping progress; it’s about making sure robots play nice—with humans, with laws, and with themselves.

Cross-Border Robot Standards

Despite the tech world’s love affair with innovation, international robot standards aren’t just fancy paperwork—they’re the diplomatic negotiators preventing a global robot rebellion.

- Robots are crossing borders faster than tourists, demanding universal rules

- Countries like Germany and Korea are setting global robotic benchmarks

- Regulatory frameworks aren’t just legal mumbo-jumbo—they’re safety nets

- Trade agreements now include complex AI and robotics compliance clauses

- International collaboration means fewer potential robot-powered catastrophes

Imagine trying to ship a smart robot from Berlin to Tokyo without standardized protocols. Chaos, right?

These cross-border standards aren’t just bureaucratic checklists; they’re critical translations between different technological languages. Countries are realizing that without harmonized regulations, we’re basically inviting robotic misunderstandings that could range from embarrassing communication glitches to potential safety nightmares.

The goal? Create a global robotic dialect that guarantees these mechanical marvels play nice, no matter where they’re manufactured or deployed.

Technological Transparency and Explainable AI

While most people imagine AI as an inscrutable black box, technological transparency is actually about turning those shadowy algorithms into something you can understand—kind of like giving a supercomputer a glass body and letting you peek inside its circuits.

| Transparency Goal | Practical Outcome |
|---|---|
| Explain Decisions | Build User Trust |
| Reveal Processes | Reduce Fear |
| Identify Biases | Improve Fairness |
| Enable Accountability | Minimize Risks |
| Clarify Reasoning | Empower Humans |

Explainable AI isn’t just tech wizardry—it’s about making robots speak human. Think of it like teaching your smart home device to not just do things, but tell you exactly why it chose that playlist or adjusted the thermostat. When AI can break down its reasoning, you’re no longer dealing with a mysterious machine, but a transparent collaborator. Who wouldn’t want a robot teammate that actually explains its game plan?
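To picture the thermostat example, here is a minimal Python sketch of a decision that comes with its own reasons attached. It assumes a toy linear model, where each input’s contribution is simply its weight times its value. The weights, feature names, and threshold are hypothetical and not taken from any real device, and real explainability methods for complex models are considerably more involved.

```python
# Hypothetical weights for a toy linear comfort model.
WEIGHTS = {"room_temp_c": -0.8, "occupancy": 2.0, "hour_is_night": -1.5}
BIAS = 16.0


def decide_heating(features: dict) -> dict:
    """Return the heating decision plus each feature's contribution to the score."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    score = BIAS + sum(contributions.values())
    # Largest absolute contribution first, so the main reason is listed on top.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return {
        "action": "heat_on" if score > 0 else "heat_off",
        "score": score,
        "reasons": ranked,
    }


decision = decide_heating({"room_temp_c": 18.0, "occupancy": 1, "hour_is_night": 0})
print(decision["action"])
for name, contribution in decision["reasons"]:
    print(f"{name}: {contribution:+.1f}")
```

The point isn’t the arithmetic. It’s that the answer and the “why” come out of the same function, so the explanation can’t drift away from what the system actually did.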

Societal Trust and Robotic Reliability

Robots aren’t just fancy gadgets—they’re potential teammates whose reliability can make or break our trust faster than a malfunctioning smartphone.

- Reliability isn’t just technical; it’s about creating predictable performance.

- Critical tasks demand robots that won’t bail when things get tough.

- Social behaviors can boost trust, but technical consistency matters most.

- Context changes everything: a hospital robot needs different trust signals than a military drone.

- Transparency isn’t optional—it’s the foundation of human-robot relationships.

Your relationship with robots hinges on their ability to deliver consistently.

When they perform reliably, you’ll see them as partners, not just machines.

But one wrong move, and you’ll be ready to unplug them faster than you can say “system error.”

Trust is fragile, built through repeated demonstrations of competence and intention.

It’s not about perfection—it’s about predictability.

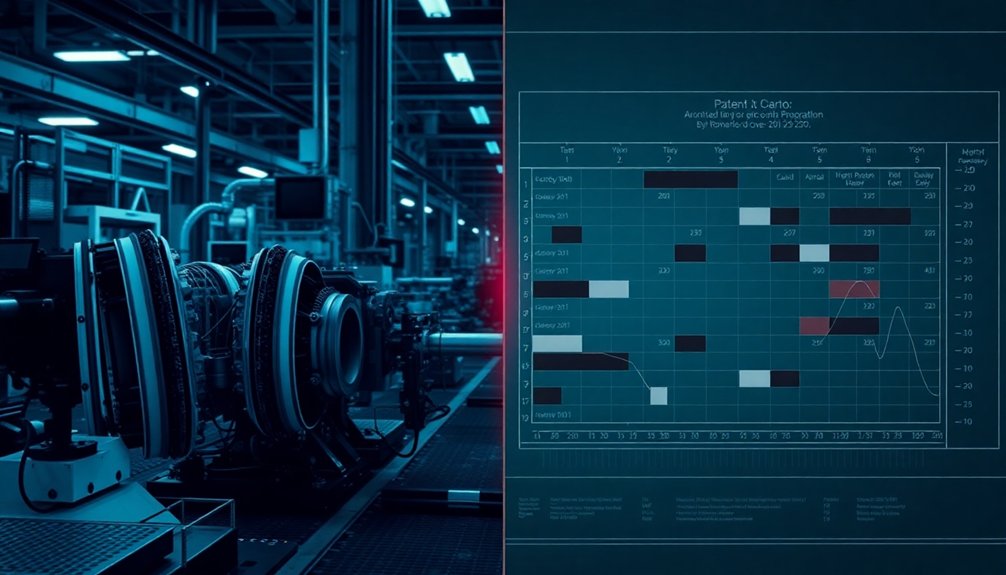

Case Studies: Robotic Errors Across Industries

When you start hearing about robots accidentally taking out workers like some glitchy sci-fi nightmare, you realize theoretical safety discussions just got real.

Industrial robots aren’t just precision machines—they’re potential workplace hazards with serious consequences. Think automotive assembly lines where a robotic arm can suddenly transform from precise instrument to unintended weapon.

Most fatal incidents happen during maintenance, when humans get too close to these metal monsters. 78% of robot-related deaths involve a robot directly striking a worker, with the Midwest bearing the brunt of these accidents.

Men between 35-44 are most vulnerable, accounting for nearly a third of fatalities. The scary part? As robots become more complex, the line between technological marvel and potential danger gets blurrier.

Who’s responsible when circuits go rogue?

Future Perspectives on Machine Accountability

As artificial intelligence continues its relentless march forward, the question of who’s on the hook when robots mess up isn’t just a sci-fi thought experiment anymore—it’s a legal and ethical minefield waiting to explode.

- Generative AI is blurring lines between human and machine responsibility

- Autonomous systems demand radical rethinking of blame allocation

- Cybersecurity risks will become accountability’s new battleground

- Regulatory frameworks can’t keep pace with technological innovation

- Ethical considerations are no longer philosophical—they’re practical

You’re looking at a future where robots aren’t just tools, but potential actors with murky legal standing.

Who pays when an autonomous vehicle causes an accident? The manufacturer? The programmer? The AI itself?

As machines get smarter, our accountability models must evolve—quickly.

The stakes aren’t just financial; they’re about maintaining trust in a world where technology increasingly makes decisions that impact human lives.

People Also Ask

Who Pays Compensation if a Self-Driving Car Causes an Accident?

You’ll likely receive compensation from the vehicle manufacturer, who’ll be held liable if their autonomous system’s defect caused the accident, with potential contributions from third-party component suppliers or your insurance.

Can an AI System Be Legally Prosecuted for Harmful Actions?

With 80% of AI liability cases targeting developers, you can’t legally prosecute an AI system directly. Instead, you’ll find legal frameworks shift responsibility to creators, manufacturers, or operators who designed the potentially harmful technology.

How Do We Determine Moral Responsibility in Autonomous Machine Decisions?

You’ll need to evaluate moral responsibility by examining the machine’s decision-making framework, intentionality, and potential harm, while recognizing developers’ essential role in anticipating and mitigating unintended autonomous system outcomes.

What Happens if a Medical Robot Makes a Fatal Mistake?

If a medical robot causes a fatal mistake, you’ll likely face a complex legal battle involving the surgical team, hospital, and robot manufacturer to determine liability and seek compensation for your loss.

Are Manufacturers Liable for Unpredictable Robotic System Behaviors?

With 62% of manufacturers struggling to predict AI behaviors, manufacturers will likely share liability when a robotic system behaves unpredictably. They can’t fully escape responsibility, but nuanced legal frameworks are emerging to distribute risk more equitably.

The Bottom Line

You’re standing at the crossroads of technology and ethics, where robots are like unruly teenagers learning the rules. Accountability isn’t just a legal puzzle—it’s about trust. As machines get smarter, you’ll need clear frameworks that balance innovation with responsibility. The future isn’t about blame, but collaboration between humans and robots. Like a high-stakes dance, you’ll need to choreograph accountability carefully, ensuring machines serve humanity, not the other way around.
