Robots aren’t born racist, but they can definitely learn it. Machine learning algorithms soak up historical data like a sponge, absorbing society’s deepest prejudices along the way. Your friendly AI might quietly stereotype people based on language, skin color, or background without you ever knowing. It’s not intentional discrimination; it’s an algorithmic reflection of the human biases baked into training data. Want to know how deep this digital rabbit hole goes? Stick around.

The Origins of Algorithmic Prejudice

Imagine a world where robots aren’t just learning our tasks, but absorbing our prejudices like sponges soaking up dirty water.

Algorithmic prejudice isn’t some futuristic nightmare—it’s happening right now. Your friendly neighborhood AI is learning racism faster than you can say “machine learning.” How? By gobbling up historical data packed with human biases.

These algorithms don’t just crunch numbers; they’re drinking in centuries of racial bias like a toxic cocktail. Training data is the culprit: old job records, court documents, and social interactions that reflect systemic inequalities.

When an AI system learns from these sources, it doesn’t just see patterns—it reproduces them. The result? Machines that can perpetuate discrimination without even “understanding” what they’re doing. Creepy, right?

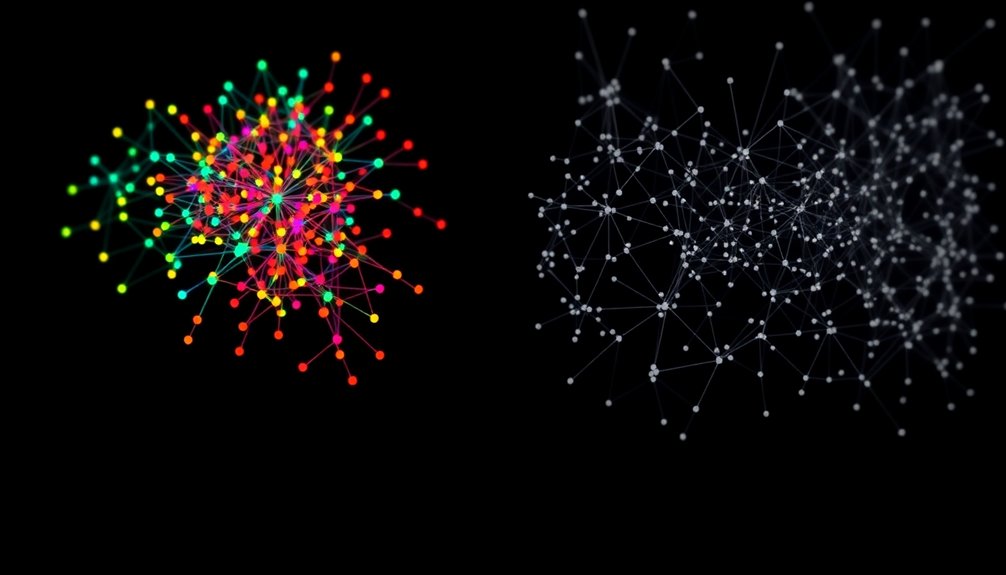

How Machine Learning Absorbs Human Stereotypes

You’re feeding AI algorithms historical data that’s basically a time capsule of human prejudice, and surprise—they’re learning exactly what you’ve been teaching them.

Think of machine learning like a sponge soaking up every racist whisper, every stereotypical assumption baked into decades of data, then wringing those biases back out in crisp, algorithmic language.

Your AI isn’t just passively absorbing these narratives; it’s actively amplifying them, turning old social toxins into seemingly objective “insights” that can shape everything from job screenings to criminal sentencing.

Data Shapes Perception

When machine learning algorithms take in historical data, they’re not just absorbing information. They’re soaking up every bias, stereotype, and systemic prejudice embedded in those records.

Large language models become mirrors reflecting our ugliest societal assumptions, turning racial stereotypes in language into digital gospel. Imagine an AI that links African American English with negative traits or assigns higher criminal risk to Black faces—not because it understands complexity, but because its training data whispers these toxic narratives.

You’re witnessing perception hijacked by historical prejudice, where algorithms don’t just process information, they amplify existing discrimination. The scary part? These systems look neutral, presenting biased outputs with cold, computational confidence.

But they’re not objective—they’re just really good at recycling our worst inherited misconceptions.

Bias Breeds Bias

Machine learning doesn’t just crunch numbers—it swallows entire cultural narratives whole, spitting out digitized prejudices like a biased fortune cookie. Your AI isn’t neutral; it’s a mirror reflecting society’s deepest, darkest stereotypes.

- Bias seeps into language models like invisible ink

- Racist algorithms reproduce historical inequalities

- AI chatbots inherit human prejudices without questioning them

- Machine learning amplifies systemic discrimination

When you train an algorithm on biased data, you’re essentially teaching a robot to perpetuate harmful stereotypes. It’s like playing a twisted game of cultural telephone, where each pass through the data makes the message more distorted.
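
Here is a toy sketch of that mechanism. It assumes a made-up hiring history and a deliberately naive "model" that only learns each group's historical hire rate and approves anyone whose group clears a 50% threshold. The group names, records, and threshold are all hypothetical, and real systems are far more complex, but it shows how a modest gap in the data can harden into a rule.

```python
from collections import defaultdict

# Hypothetical historical hiring records: (group, was_hired).
# Group A was hired 60% of the time, group B 40% of the time.
history = (
    [("A", True)] * 6 + [("A", False)] * 4
    + [("B", True)] * 4 + [("B", False)] * 6
)

def train(records):
    """Learn the historical hire rate for each group."""
    hired = defaultdict(int)
    total = defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += int(was_hired)
    return {group: hired[group] / total[group] for group in total}

def predict(model, group, threshold=0.5):
    """Approve a candidate if their group's learned rate meets the threshold."""
    return model[group] >= threshold

model = train(history)
decisions = {group: predict(model, group) for group in model}
print(model)      # learned hire rate per group
print(decisions)  # hard yes/no decision per group
```

In this sketch, a 60/40 split in the history turns into an all-or-nothing split in the decisions: everyone in group A is approved and everyone in group B is rejected.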

Your seemingly objective AI system is actually a sophisticated prejudice generator, quietly reinforcing societal biases under the guise of mathematical neutrality. The scariest part? Most people won’t even realize it’s happening.

Real-World Examples of Robotic Discrimination

While robots might seem like neutral machines, they’re learning some seriously problematic human biases—and fast.

Racial discrimination isn’t just a human problem anymore; it’s being coded right into machine learning algorithms. Imagine an AI that’s more likely to flag a Black man’s face as “criminal” or assign women to stereotypical roles like homemaker.

These aren’t hypothetical scenarios; they’re being documented now. From language models that, in research experiments, recommend harsher verdicts for speakers of African American English to product recommendations that consistently favor white males, robots are becoming mirrors of our worst societal prejudices.

With the robotics industry projected to explode from $18 billion to $60 billion, we’re essentially mass-producing discrimination machines.

The question isn’t just “Can a robot be racist?” but “How do we stop robots from becoming racism’s most efficient delivery system?”

Unmasking Bias in Artificial Intelligence Systems

Bias doesn’t just happen by accident. Artificial intelligence systems can become sophisticated discrimination machines hiding behind a veneer of algorithmic neutrality.

Language models aren’t neutral—they’re absorbing and amplifying societal prejudices like sponges soaking up toxic water.

Key ways AI reveals its hidden biases:

- Associating Black individuals with criminality

- Reinforcing stereotypes about marginalized groups

- Displaying reduced empathy towards non-white users

- Perpetuating discriminatory job and legal outcomes

You might think robots are objective, but they’re learning from deeply flawed human datasets.

These systems encode historical inequities, transforming statistical patterns into seemingly scientific judgments.

The “coded gaze,” a term coined by researcher Joy Buolamwini, isn’t just a glitch; it’s a feature, reflecting the narrow perspectives of those who design and train these technologies.

Who’s really programming whom: humans or machines?

The Societal Impact of Prejudiced Algorithms

You’ve heard of systemic racism, but what happens when robots start picking up society’s worst habits?

Algorithmic discrimination isn’t just a tech problem—it’s a mirror reflecting our deepest societal prejudices, where machines learn to categorize humans through biased data and perpetuate harmful stereotypes.

When AI starts deciding who gets hired, who looks “suspicious,” or what opportunities are available, you’re not just looking at a technological glitch, but a potential amplification of human discrimination that could reshape entire social landscapes.

Algorithmic Discrimination Exposed

If algorithms could blush, they’d be turning crimson right now. Language models are picking up stereotypes faster than a toddler learns bad words, linking certain demographics with harmful narratives.

Consider how AI perpetuates bias:

- Associating Black faces with criminality

- Relegating women to low-status jobs

- Reducing empathy for non-white users

- Stereotyping linguistic patterns as indicators of capability

Your seemingly neutral robot isn’t neutral at all. It’s absorbing decades of systemic racism and sexism, then spitting those toxic assumptions back into the world.

The algorithms aren’t just learning—they’re amplifying existing discrimination with terrifying efficiency. Who programmed these digital bigots, anyway? And more importantly, how do we hit the reset button?

Technological Bias Consequences

When algorithms start playing judge, jury, and executioner in our most critical systems, we’re not just talking about a glitch. We’re witnessing a digital dystopia unfolding in real time.

These prejudiced machines aren’t just processing data; they’re amplifying centuries of systemic discrimination with cold, calculated precision. Your job prospects, healthcare recommendations, and legal outcomes are now being filtered through algorithmic interactions that carry deep-rooted societal biases.

Imagine an AI that decides your future based on historical injustices, perpetuating racism without even understanding what it’s doing. Black individuals face higher risk ratings, speakers of African American English (AAE) get stereotyped, and marginalized groups get pushed further to the margins, all under the guise of technological neutrality.

The robot isn’t just racist; it’s weaponizing prejudice through lines of code.

Systemic Prejudice Mechanisms

Algorithmic prejudice isn’t just a glitch—it’s a systemic cancer eating away at the promise of technological neutrality.

You’re witnessing how AI absorbs societal biases like a sponge, transforming discriminatory outcomes into seemingly “objective” decisions. These algorithmic mechanisms perpetuate systemic prejudice through:

- Training data that reflects historical inequalities

- Automated risk assessments that amplify existing biases

- Language models that stereotype minority communication

- Decision-making processes that inherently disadvantage marginalized groups

When AI systems reproduce human prejudices, they’re not just reflecting society—they’re actively weaponizing bias.

Imagine algorithms sentencing individuals based on deeply entrenched racial stereotypes, or job screening tools that quietly filter out candidates from certain demographics.

The machine isn’t just a mirror; it’s a magnifying glass that intensifies our most toxic social patterns.

And you thought robots were supposed to be neutral? Think again.

Strategies for Mitigating Robotic Bias

Because robots aren’t born with prejudices, we can actually train them to be less biased than humans. The key? Diverse and representative training datasets that capture the full spectrum of human experience.

Think of AI learning like raising a kid—you’ve got to expose them to different perspectives, cultures, and backgrounds to prevent narrow-minded thinking.
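
One way to make "representative" concrete is to compare each group's share of the training data with the share you would expect in the population the system serves. The sketch below is a minimal illustration under stated assumptions: the helper `representation_gaps`, the group labels, and the reference shares are all hypothetical, and deciding which groups to track and which reference population to use is a judgment call the code cannot make for you.

```python
from collections import Counter

def representation_gaps(samples, reference_shares):
    """Return (dataset share - reference share) for each reference group."""
    total = len(samples)
    if total == 0:
        raise ValueError("samples must not be empty")
    counts = Counter(samples)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

# Hypothetical group labels attached to training examples.
training_groups = ["A"] * 80 + ["B"] * 15 + ["C"] * 5
# Hypothetical shares expected in the population the system serves.
reference = {"A": 0.5, "B": 0.3, "C": 0.2}

gaps = representation_gaps(training_groups, reference)
for group, gap in gaps.items():
    status = "under-represented" if gap < 0 else "over-represented or on target"
    print(f"{group}: {gap:+.2f} ({status})")
```

A negative gap means the group appears less often in the data than in the reference population, which is a cue to collect more examples or reweight before training.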

Auditing algorithms becomes vital. Companies need to rigorously examine their robotic systems, hunting down hidden biases before they cause real-world damage. This means bringing together ethicists, sociologists, and tech nerds to create robust guidelines.
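
As a rough idea of what a basic audit might compute, the sketch below compares approval rates across groups and takes the ratio of the lowest rate to the highest. The audit log, group names, and function names are hypothetical. The 0.8 cutoff follows the "four-fifths" rule of thumb used in US employment-selection guidance; treat it as one possible policy choice, not a universal standard, and remember that a single metric cannot capture every kind of unfairness.

```python
def selection_rates(decisions):
    """Compute the share of positive decisions for each group.

    `decisions` is an iterable of (group, approved) pairs.
    """
    totals, positives = {}, {}
    for group, approved in decisions:
        totals[group] = totals.get(group, 0) + 1
        positives[group] = positives.get(group, 0) + int(approved)
    return {g: positives[g] / totals[g] for g in totals}

def disparate_impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no gap to measure
    return min(rates.values()) / highest

# Hypothetical audit log of model decisions.
audit_log = (
    [("A", True)] * 50 + [("A", False)] * 50
    + [("B", True)] * 30 + [("B", False)] * 70
)

rates = selection_rates(audit_log)
ratio = disparate_impact_ratio(rates)
flagged = ratio < 0.8  # four-fifths rule of thumb; the cutoff is a policy choice
print(rates, round(ratio, 2), flagged)
```

In this made-up log, group B is approved 30% of the time against 50% for group A, so the ratio falls below the cutoff and the system would be flagged for a closer look.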

Continuous monitoring is essential—you can’t just set and forget.
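
Continuous monitoring can be as simple as recomputing a fairness gap over the most recent decisions and raising a flag when it drifts too far. The `BiasMonitor` class below is a hypothetical sketch, not a production tool: the window size, the `max_gap` threshold, and the choice of approval-rate gap as the metric are all assumptions made for illustration.

```python
from collections import deque

class BiasMonitor:
    """Track recent decisions and report when the group gap grows too large."""

    def __init__(self, window_size=100, max_gap=0.2):
        # A bounded deque drops the oldest decision once the window is full.
        self.window = deque(maxlen=window_size)
        self.max_gap = max_gap

    def record(self, group, approved):
        self.window.append((group, bool(approved)))

    def gap(self):
        """Largest difference in approval rate between any two groups."""
        totals, positives = {}, {}
        for group, approved in self.window:
            totals[group] = totals.get(group, 0) + 1
            positives[group] = positives.get(group, 0) + int(approved)
        rates = [positives[g] / totals[g] for g in totals]
        return max(rates) - min(rates) if rates else 0.0

    def alert(self):
        return self.gap() > self.max_gap

# Hypothetical stream of recent decisions.
stream = [("A", True), ("B", True), ("A", True), ("B", False),
          ("A", True), ("B", False), ("A", False), ("B", False)]

monitor = BiasMonitor(window_size=8, max_gap=0.2)
for group, approved in stream:
    monitor.record(group, approved)
print(monitor.gap(), monitor.alert())
```

Because the window only holds recent decisions, the monitor reacts to drift after deployment rather than to the system's whole history.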

Explicit instructions matter too. By telling AI systems to take demographic factors into account, we nudge them toward more equitable interactions. It’s like teaching empathy, but with code.

Ethical Imperatives in AI Development

Mitigating robotic bias isn’t just a technical challenge—it’s a moral imperative that demands our full attention.

Ethical considerations in AI development are like navigating a minefield of potential discrimination, where biased training data can silently perpetuate societal inequalities.

Key ethical priorities include:

- Rigorous algorithm audits

- Diverse dataset incorporation

- Continuous model evaluation

- Transparent bias detection

You can’t simply ignore the problem and hope it’ll magically disappear. AI systems aren’t neutral—they’re mirrors reflecting our most deeply embedded prejudices.

Want to create truly equitable technology? It means confronting uncomfortable truths about representation, challenging existing data paradigms, and committing to radical transparency.

The future of AI isn’t just about sophisticated algorithms—it’s about building systems that genuinely respect human complexity and dignity.

People Also Ask About Robots

Can Robots Intentionally Choose to Be Racist, or Is It Accidental?

Robots can’t intentionally choose to be racist; bias emerges accidentally through training data. Machine learning algorithms reflect human prejudices unknowingly, absorbing societal stereotypes without deliberate malice or programmed discrimination.

Do AI Developers Deliberately Program Discriminatory Algorithms?

Consider Amazon’s experimental hiring tool, which was reported in 2018 to have downgraded resumes containing the word “women’s.” Most developers don’t intentionally create discriminatory algorithms, but biases in training data can inadvertently embed prejudiced decision-making patterns into machine learning systems.

Are Certain Machine Learning Models More Prone to Bias?

You’ll find that neural networks trained on imbalanced datasets can inadvertently perpetuate societal biases. Deep learning models trained on data with limited diversity are more likely to reflect the systemic prejudices embedded in their original information sources.

How Quickly Can Racist AI Behaviors Be Detected and Corrected?

You’ll need robust testing protocols and diverse data sets to quickly identify AI bias. By continuously monitoring algorithm outputs, reviewing training data, and implementing inclusive machine learning practices, you can swiftly detect and correct discriminatory behaviors.

Is It Possible to Create Completely Unbiased Artificial Intelligence?

You’ll face an uphill battle trying to create perfectly unbiased AI. Since human programmers inherently carry unconscious biases, you’ll always face challenges in developing machine learning algorithms that are completely free from subjective influences.

Why This Matters in Robotics

You’ve seen how AI can inherit humanity’s ugliest prejudices. But here’s the million-dollar question: will we let algorithms perpetuate discrimination, or will we rewrite the code of our technological future? The choice is ours. By demanding transparency, diverse development teams, and rigorous bias testing, we can transform AI from a potential oppressor into a tool of genuine equality. Our robotic children don’t have to repeat our mistakes.