
Robots are getting smarter at predicting your next move, turning your messy human behavior into tidy data points. They use machine learning to decode your split-second choices and long-term patterns, but here’s the catch: you’re wildly unpredictable. Sometimes robots nail it, other times they totally miss the mark—mistaking a friendly wave for a potential threat. Think of them as well-intentioned but slightly awkward dance partners trying to follow your lead. Curious about their learning curve?

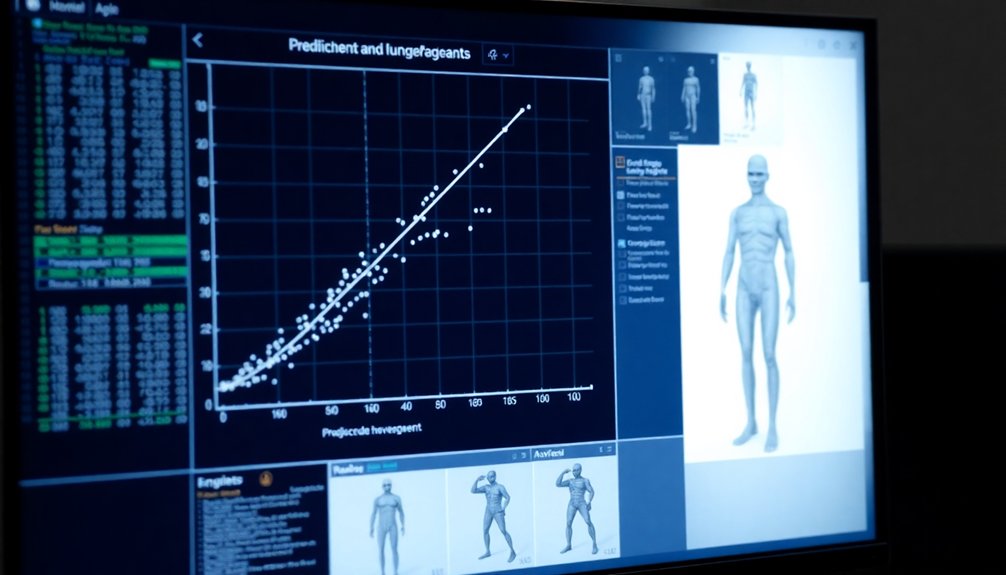

The Science of Human Prediction

Ever wondered how robots might crack the code of human unpredictability? Buckle up, because predicting human behavior isn’t just science—it’s part magic, part math. Sensor fusion techniques help robots integrate multiple data streams to improve their behavioral prediction models.
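Sensor fusion can be as simple as weighting each sensor by how much you trust it. The sketch below uses inverse-variance weighting, one basic fusion rule that a Kalman filter generalizes over time. The sensor names, distances, and variances are hypothetical values chosen for illustration.

```python
def fuse_estimates(estimates):
    """Fuse (value, variance) pairs by inverse-variance weighting.

    Noisier sensors (larger variance) get proportionally less weight,
    and the fused variance is no larger than any single input's.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    weights = [1.0 / variance for _, variance in estimates]
    total = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total
    return fused_value, 1.0 / total


# Hypothetical readings of a person's distance from the robot, in metres.
camera = (2.10, 0.04)  # (estimate, variance): depth camera, noisier
lidar = (2.00, 0.01)   # (estimate, variance): lidar, more precise
distance, variance = fuse_estimates([camera, lidar])
print(f"fused distance: {distance:.2f} m (variance {variance:.3f})")
```

With these made-up numbers the fused estimate lands at about 2.02 m, much closer to the more precise lidar reading than to the camera's.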

Researchers use fancy tools like eye tracking, behavioral diaries, and machine learning algorithms to peek into our messy, complicated lives. They’re building models that can guess what you’ll do next, sometimes with scary accuracy. Behavioral observation techniques reveal that contextual factors significantly influence human decision-making patterns.

Markov chains and neural networks crunch through mountains of data, looking for patterns in how humans move, choose, and react. But here’s the kicker: we’re wildly complex creatures. Social-structural determinants, the social and environmental conditions surrounding each person, shape behavior too, so a useful prediction model has to account for context and not just individual habits.
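To make the Markov-chain idea concrete, here is a minimal first-order predictor: it counts which action tends to follow which and guesses the most frequent successor. The class name and the daily-routine action labels are hypothetical, and real systems track far richer state than a single previous action.

```python
from collections import Counter, defaultdict


class MarkovActionPredictor:
    """First-order Markov chain over discrete actions."""

    def __init__(self):
        # transitions[a][b] = how many times action b followed action a
        self.transitions = defaultdict(Counter)

    def fit(self, sequence):
        for current, following in zip(sequence, sequence[1:]):
            self.transitions[current][following] += 1
        return self

    def probabilities(self, current):
        """Estimated P(next action | current action) from the counts."""
        counts = self.transitions.get(current, Counter())
        total = sum(counts.values())
        return {action: n / total for action, n in counts.items()} if total else {}

    def predict(self, current):
        """Most frequent successor of `current`, or None if never seen."""
        counts = self.transitions.get(current, Counter())
        return counts.most_common(1)[0][0] if counts else None


history = ["wake", "coffee", "email", "coffee", "email", "coffee", "walk"]
model = MarkovActionPredictor().fit(history)
print(model.predict("coffee"))        # email (2 of 3 observed transitions)
print(model.probabilities("coffee"))  # email about 0.67, walk about 0.33
```

Note that the model always bets on `email` after `coffee`, even though one time in three the person went for a walk instead.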

One moment you’re predictable, the next you’re totally random. That’s why even the smartest robots get behavior prediction wrong. A model might nail, say, 80% of your actions, but that wild remaining 20% keeps things interesting, and keeps humans delightfully unpredictable.

Machine Learning Meets Human Complexity

You’re stepping into a world where machines are getting scary good at reading your emotional playbook.

Imagine algorithms that can map out your decision-making like a GPS tracking your every mental turn, predicting whether you’ll choose pizza or salad before you even know yourself.

These AI systems are becoming psychological wizards, learning to decode the complex patterns of human behavior with an uncanny precision that’ll make you wonder who’s really in control: you, or the machine watching your every move. Behavioral AI technologies now analyze vast datasets from IoT devices and digital interactions to build increasingly sophisticated predictive models of human behavior.

Neural networks are particularly adept at parsing intricate human patterns, and the predictive models built on them can anticipate behavioral trends surprisingly well. Robotic perception techniques let these systems keep refining their understanding, learning from diverse datasets and adapting their predictions in real time.
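One common way to refine a model in real time is online learning: update it after every observation instead of retraining from scratch. The sketch below is plain logistic regression trained by stochastic gradient descent, standing in for the much larger neural models described above. The features (`is_morning`, `near_kitchen`) and the "made coffee" outcome are hypothetical.

```python
import math


class OnlineLogisticPredictor:
    """Logistic regression updated one observation at a time (SGD)."""

    def __init__(self, n_features, learning_rate=0.5):
        self.weights = [0.0] * n_features
        self.bias = 0.0
        self.learning_rate = learning_rate

    def predict_proba(self, features):
        z = self.bias + sum(w * x for w, x in zip(self.weights, features))
        # Numerically stable sigmoid.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        e = math.exp(z)
        return e / (1.0 + e)

    def update(self, features, outcome):
        """Take one gradient step on log-loss; return the prediction error."""
        error = self.predict_proba(features) - outcome  # d(log-loss)/dz
        self.weights = [w - self.learning_rate * error * x
                        for w, x in zip(self.weights, features)]
        self.bias -= self.learning_rate * error
        return error


# Hypothetical stream: [is_morning, near_kitchen] -> made coffee (1) or not (0).
stream = [([1, 1], 1), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)] * 200
model = OnlineLogisticPredictor(n_features=2)
for features, outcome in stream:
    model.update(features, outcome)
print(f"morning, near kitchen: {model.predict_proba([1, 1]):.2f}")
print(f"evening, near kitchen: {model.predict_proba([0, 1]):.2f}")
```

Because each update needs only the latest observation, the same loop keeps working if the person’s routine changes: new errors simply pull the weights in a new direction.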

Decoding Human Patterns

When machine learning algorithms start peering into the labyrinth of human behavior, they’re not just crunching numbers; they’re attempting to decode the most complex operating system on the planet. You already see this in everyday recommendation engines like Netflix and Amazon, which learn from your personal viewing and shopping history. Your actions aren’t random; they’re intricate puzzles waiting to be solved by increasingly sophisticated robots. Reinforcement learning techniques let robots develop adaptive strategies for understanding and predicting nuanced human behaviors. And researchers stress that any prediction is only as good as the data behind it: unreliable data sources make for unreliable forecasts.

| Challenge | Potential Solution |
|---|---|
| Uncertainty | Adaptive Learning |
| Abstraction | Multi-Level Prediction |
| Complexity | Psychological Constraints |

Neural networks and deep learning models are getting smarter, learning to predict your next move like digital fortune tellers. They’re not perfect—far from it. Sometimes they’ll guess wildly off-target, like a toddler playing chess. But each misfire teaches them something new. By analyzing massive datasets and leveraging techniques like reinforcement learning, these algorithms are slowly unraveling the mysteries of human decision-making. Want to bet they’ll crack the code before we fully understand ourselves?
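The "each misfire teaches them something new" loop is the heart of reinforcement learning. Its simplest form is a multi-armed bandit: try actions, observe rewards, and shift toward what works. In this sketch the robot’s possible actions and the simulated human’s acceptance rates are made up, and epsilon-greedy is just one of several exploration strategies.

```python
import random


def epsilon_greedy_bandit(reward_fn, actions, steps=1000, epsilon=0.1, seed=0):
    """Learn which action earns the most reward by trial and error.

    With probability `epsilon` the agent explores a random action;
    otherwise it exploits the action with the best average so far.
    Every outcome, misfires included, updates that action's estimate.
    """
    rng = random.Random(seed)
    counts = {a: 0 for a in actions}
    values = {a: 0.0 for a in actions}
    for _ in range(steps):
        if rng.random() < epsilon:
            action = rng.choice(actions)
        else:
            action = max(actions, key=lambda a: values[a])
        reward = reward_fn(action, rng)
        counts[action] += 1
        # Incremental mean: nudge the estimate toward the observed reward.
        values[action] += (reward - values[action]) / counts[action]
    return values, counts


# Hypothetical simulated human: how often each robot action is welcomed.
ACCEPT_RATE = {"offer_help": 0.7, "wait": 0.4, "hand_over_tool": 0.2}


def simulated_human(action, rng):
    return 1.0 if rng.random() < ACCEPT_RATE[action] else 0.0


values, counts = epsilon_greedy_bandit(simulated_human, list(ACCEPT_RATE))
best = max(values, key=values.get)
print(best, counts)
```

The small exploration rate is the design trade-off: without it, one unlucky early outcome could lock the robot into a worse action forever.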

AI Decision Mapping

As machine learning algorithms dance with human complexity, AI decision mapping emerges as the digital Rosetta Stone for translating unpredictable human behavior into predictable patterns. Predictive analytics technologies enable AI systems to analyze massive datasets, identifying nuanced behavioral correlations that traditional methods might miss.

Think of it like a high-tech crystal ball that sifts through mountains of data, hunting for hidden insights about what humans might do next. Neural networks provide a sophisticated mechanism for robots to analyze complex environments and process sensory information with unprecedented precision. Decision trees, a fundamental machine learning technique, provide a structured approach to breaking down complex decision-making processes into interpretable branches and nodes.
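A decision tree’s appeal is that every prediction can be traced branch by branch. The sketch below hand-builds a tiny tree that guesses whether an approaching person intends a handover; real trees are learned from data (for example with the CART algorithm), and the features, thresholds, and labels here are all hypothetical.

```python
from dataclasses import dataclass
from typing import Union


@dataclass
class Leaf:
    prediction: str


@dataclass
class Node:
    feature: str      # which measurement this node tests
    threshold: float  # go left if value <= threshold, else right
    left: "Tree"
    right: "Tree"


Tree = Union[Leaf, Node]


def predict(tree: Tree, observation: dict) -> str:
    """Walk from the root to a leaf and return its prediction."""
    while isinstance(tree, Node):
        go_left = observation[tree.feature] <= tree.threshold
        tree = tree.left if go_left else tree.right
    return tree.prediction


def explain(tree: Tree, observation: dict) -> list:
    """Return the human-readable path taken through the tree."""
    path = []
    while isinstance(tree, Node):
        value = observation[tree.feature]
        if value <= tree.threshold:
            path.append(f"{tree.feature}={value} <= {tree.threshold}")
            tree = tree.left
        else:
            path.append(f"{tree.feature}={value} > {tree.threshold}")
            tree = tree.right
    path.append(f"predict {tree.prediction}")
    return path


# Hypothetical hand-built tree; thresholds are illustrative, not learned.
tree = Node("distance_m", 1.0,
            left=Node("hand_speed_mps", 0.5,
                      left=Leaf("handover"),
                      right=Leaf("passing_by")),
            right=Leaf("no_interaction"))

observation = {"distance_m": 0.6, "hand_speed_mps": 0.3}
print(predict(tree, observation))  # handover
print(explain(tree, observation))
```

The `explain` path is what makes trees interpretable: when the robot gets it wrong, you can see exactly which threshold sent it down the wrong branch.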

But here’s the catch: robots aren’t mind readers. They’re pattern recognizers with serious limitations. Your AI assistant might nail predicting your shopping habits but totally miss why you spontaneously decided to learn salsa dancing.

These smart systems use decision trees, predictive modeling, and continuous learning to make educated guesses. Sometimes they’re brilliantly accurate; sometimes they’re hilariously wrong.

The real magic happens when AI augments human decision-making, turning raw data into actionable intelligence – without replacing human intuition entirely.

Decoding Behavior Through Technology

Decoding human behavior isn’t just sci-fi anymore: machine learning algorithms are transforming how we understand human actions. Deep learning and neural networks enable sophisticated behavioral prediction models that integrate complex data streams from multiple sources. Think of these algorithms as digital mind readers, tracking your patterns and predicting what you’ll do next. They’re not perfect (sometimes they nail it, sometimes they’re hilariously wrong), but they’re getting smarter.

Imagine systems that can spot when you’re about to do something weird by analyzing tiny behavioral signals. Using techniques like anomaly detection and dynamic models, these tech wizards can predict everything from potential health risks to fraudulent activity. Research from MIT and the University of Washington shows that modeling an agent’s computational constraints, in particular how deeply it plans ahead, can help predict the decisions it will make. Cognitive engines are enabling robots to process knowledge in real time and interpret human behaviors more intuitively.
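Anomaly detection can start very simply: learn what "normal" looks like, then flag readings that sit far outside it. This z-score sketch assumes a roughly bell-shaped baseline and uses made-up daily step counts; production systems typically use more robust methods, but the idea is the same.

```python
import statistics


def fit_baseline(history):
    """Summarise 'normal' behaviour as a mean and standard deviation."""
    if len(history) < 2:
        raise ValueError("need at least two observations")
    return statistics.fmean(history), statistics.stdev(history)


def z_score(value, baseline):
    """How many standard deviations `value` sits from the baseline mean."""
    mean, stdev = baseline
    if stdev == 0:
        return 0.0 if value == mean else float("inf")
    return (value - mean) / stdev


def is_anomalous(value, baseline, threshold=3.0):
    """Flag values more than `threshold` standard deviations from normal."""
    return abs(z_score(value, baseline)) > threshold


daily_steps = [8000, 8200, 7900, 8100, 8050, 7950, 8000]  # made-up baseline week
baseline = fit_baseline(daily_steps)
print(is_anomalous(8100, baseline))  # False: an ordinary day
print(is_anomalous(3000, baseline))  # True: a sudden drop worth checking
```

Fitting the baseline on past data and scoring new readings separately matters: if the outlier were folded into the mean and spread, it would partly hide itself.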

They’re basically turning human unpredictability into a mathematical puzzle, breaking down complex behaviors into data points that can be analyzed, understood, and—potentially—anticipated.

Want to know the future? These algorithms might just have a preview.

When Algorithms Misread Intentions

You think algorithms are perfect at reading your mind? Think again.

When robots try to predict human behavior, they often stumble into a comedy of errors, misinterpreting your irrational choices and totally missing the nuanced intentions that make you, well, human.

It’s like watching a super-smart calculator try to understand why you randomly decided to buy three pounds of gummy bears at midnight—some mysteries just aren’t meant to be solved by machine logic.

Algorithmic Prediction Gaps

When robots try to predict human behavior, they often stumble into a hilarious maze of misunderstandings—think of an alien attempting to decode human emotions using nothing but Excel spreadsheets.

These digital detectives struggle because human behavior isn’t a simple math equation. You’re unpredictable, driven by emotions, social pressures, and contexts that algorithms can’t easily map.

Sure, they might predict you’ll click a button, but understanding why you clicked? That’s a whole different game. Algorithms get tripped up by the messy complexity of human choices—the split-second decisions, the irrational impulses, the subtle nuances that make you wonderfully human.

They’re like tone-deaf musicians trying to compose a symphony of human behavior, often missing the most critical notes.

Irrational Choice Patterns

If human decision-making were a predictable highway, algorithms would be those overly confident GPS systems constantly rerouting you—except humans aren’t roads, and our choices are more like a wild, unpredictable rollercoaster.

Your brain’s got multiple decision modules competing like rival debate teams, tossing rational thoughts and emotional impulses into a chaotic mix. Immediate rewards? They’re like shiny candy tempting you away from long-term strategies.

Robots struggle to decode this mess because human choices aren’t just about logic—they’re emotional, culturally nuanced, and sometimes gloriously irrational. Peer pressure, cognitive biases, and split-second feelings can derail the most carefully planned decisions.
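Behavioral economists often capture the pull of "shiny candy" with hyperbolic discounting, where a reward’s subjective value falls as A / (1 + kD) for amount A and delay D in days. The article doesn’t name this model, so treat it as one illustrative explanation; the discount rate k = 0.2 per day, the exponential factor 0.95, and the dollar amounts are assumptions. It reproduces a classic "irrational" pattern, preference reversal, that an exponential discounter never shows.

```python
def hyperbolic_value(amount, delay_days, k=0.2):
    """Subjective value under hyperbolic discounting: A / (1 + k*D)."""
    return amount / (1 + k * delay_days)


def exponential_value(amount, delay_days, daily_factor=0.95):
    """Subjective value under exponential discounting: A * delta**D."""
    return amount * daily_factor ** delay_days


def choose(value_fn, sooner, later):
    """Each option is (amount, delay_days); return the one valued higher."""
    return sooner if value_fn(*sooner) >= value_fn(*later) else later


near = ((100, 0), (110, 1))    # $100 today vs $110 tomorrow
far = ((100, 30), (110, 31))   # the same trade-off, a month from now

print(choose(hyperbolic_value, *near))   # (100, 0): grabs the money now
print(choose(hyperbolic_value, *far))    # (110, 31): patient when it's distant
print(choose(exponential_value, *near))  # (110, 1)
print(choose(exponential_value, *far))   # (110, 31): consistent either way
```

The hyperbolic chooser flips even though both pairs differ by the same one day and $10; the exponential chooser never flips, because the value ratio between its two options is the same at any distance. A robot assuming the second kind of human will mispredict the first.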

Think algorithms can predict your next move? Think again. We’re walking contradiction machines, and that’s what makes us fascinatingly, frustratingly human.

Intention Inference Errors

Because robots aren’t mind readers—despite what sci-fi movies might promise—intention inference remains a tricky technological tightrope walk. Your friendly neighborhood robot might totally misread your intentions, turning a casual reach for coffee into what it thinks is an aggressive move.

These algorithms stumble when human behavior gets complex, misinterpreting emotional nuances that even humans struggle to decode. Safety becomes a real concern when robots make incorrect predictions, potentially triggering unexpected actions that could range from awkward to dangerous.
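One common way to make intention inference safer is to keep a probability over possible goals and let the robot act only when one goal is clearly most likely. The sketch below applies Bayes’ rule to a 2-D hand trajectory, scoring each movement step with a von Mises-style likelihood, exp(beta * cos(angle)), between the step and the direction to each candidate goal. The goal positions, beta = 4.0, and the 0.9 confidence threshold are assumptions, not values from any cited work.

```python
import math


def direction_likelihood(hand, step, goal, beta=4.0):
    """Likelihood (up to a constant) that `step` was aimed at `goal`.

    exp(beta * cos(angle)) between the step and the hand-to-goal vector:
    motion toward the goal scores high, motion away scores low.
    """
    to_goal = (goal[0] - hand[0], goal[1] - hand[1])
    dot = step[0] * to_goal[0] + step[1] * to_goal[1]
    norm = math.hypot(*step) * math.hypot(*to_goal)
    cos_angle = dot / norm if norm else 0.0  # no motion = no information
    return math.exp(beta * cos_angle)


def infer_goal(trajectory, goals, prior=None):
    """Posterior over goals after observing a 2-D hand trajectory."""
    posterior = dict(prior) if prior else {g: 1 / len(goals) for g in goals}
    for start, end in zip(trajectory, trajectory[1:]):
        step = (end[0] - start[0], end[1] - start[1])
        for name, position in goals.items():
            posterior[name] *= direction_likelihood(start, step, position)
        total = sum(posterior.values())
        posterior = {g: p / total for g, p in posterior.items()}
    return posterior


def decide(posterior, confidence=0.9):
    """Act only when one intention is clearly most likely; otherwise wait."""
    goal, p = max(posterior.items(), key=lambda item: item[1])
    return goal if p >= confidence else "slow_down_and_wait"


goals = {"coffee_cup": (1.0, 0.0), "robot_gripper": (0.0, 1.0)}
print(decide(infer_goal([(0.0, 0.0)], goals)))  # slow_down_and_wait: no motion yet
reach = [(0.0, 0.0), (0.1, 0.02), (0.2, 0.03)]
posterior = infer_goal(reach, goals)
print(posterior, decide(posterior))
```

Holding back until the posterior clears the threshold is what keeps an ambiguous reach for coffee from being treated as a threat; the price is a slower, more cautious robot whenever the motion is ambiguous.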

Imagine a care robot mistaking a muscle twitch for a request, or an industrial robot misinterpreting a worker’s movement. The consequences? Broken trust, operational inefficiencies, and interactions that feel more like a comedy of errors than smooth human-robot collaboration.

The Unpredictable Human Factor

Despite our love affair with algorithms and predictive models, humans remain the wild cards that make robots scratch their mechanical heads. Predicting human behavior is like trying to catch smoke with a butterfly net — messy, unpredictable, and slightly ridiculous.

- Your decisions shift like desert sands, changing with mood, context, and last night’s weird dream.

- Risk tolerance isn’t a fixed point but a squiggly line dancing across emotional landscapes.

- Memory works like a drunk librarian, randomly pulling files and reshuffling memories.

- External factors hijack your choices faster than a toddler grabbing the TV remote.

- Psychological triggers can turn rational humans into unpredictable chaos machines.

Want a reliable prediction? Good luck. Humans are walking, talking uncertainty engines that’d make even the most advanced AI throw up its digital hands in surrender.

Emerging Frontiers of Robotic Understanding

Where the previous section left us scratching our heads about human unpredictability, robotic understanding is now racing to crack that complex code.

Think of AI as a detective trying to decode your next move before you even make it. With hyper-specialized algorithms and adaptive learning, robots are getting scary good at predicting human behavior.


They’re not just watching; they’re learning your patterns faster than your best friend. Imagine agricultural robots that can anticipate crop needs or healthcare AI that understands patient behaviors before symptoms emerge.

It’s like having a crystal ball, but one powered by machine learning and, perhaps someday, hybrid quantum-classical computing.

The twist? These systems aren’t replacing humans—they’re becoming our ultra-smart co-pilots, transforming how we interact with technology.

People Also Ask

Can Robots Truly Understand the Nuances of Human Emotional Decision-Making?

You’ll find robots can partially grasp emotional decision-making, but they’re limited by algorithmic interpretations. They’ll analyze signals and patterns, yet miss the profound complexity of human intuition and subconscious emotional nuances.

How Accurate Are Current AI Predictions of Unpredictable Human Behaviors?

Like a chess player anticipating moves, AI can predict some human behaviors accurately in controlled settings, though reported accuracy varies widely with the task, the data, and the environment. You’ll find it impressive yet imperfect, as nuanced emotional decisions still challenge even the most sophisticated algorithms.

Do Robots Learn From Their Mistakes in Predicting Human Actions?

You’ll find robots do learn from predictive mistakes through adaptive algorithms that recalibrate models, update internal representations, and dynamically adjust their understanding when human actions deviate from initial predictions.

What Ethical Concerns Arise From Robots Predicting Personal Human Behaviors?

Privacy tops the list: systems that predict personal behavior can infer secrets you never chose to share. Beyond that, biased algorithms might manipulate your choices, expose your vulnerabilities, and erode your autonomy, all without your genuine informed consent.

Can Machine Learning Models Account for Cultural Differences in Behavior?

Machine learning models can partly account for cultural differences when they’re trained on diverse datasets, capture nonlinear behavioral changes, and incorporate cultural dimensions that reveal nuanced variations in human interaction across social contexts. A model trained mostly on one culture’s data, however, may generalize poorly to another.

The Bottom Line

You’re standing at the crossroads of human complexity and robotic intelligence, where algorithms dance but sometimes stumble. Think of prediction like a clumsy robot trying to read a mood ring: fascinating, but far from perfect. The future isn’t about machines totally understanding us, but about machines learning to live with our beautiful unpredictability. Will robots crack the human code? Maybe. But right now, they’re more like curious toddlers poking at the mysterious machinery of emotion.

References

- https://arxiv.org/abs/2410.20423

- https://www.roboticsproceedings.org/rss17/p037.pdf

- https://bigthink.com/the-present/ai-model-decision-making/

- https://collab.me.vt.edu/pdfs/sagar_iros2025.pdf

- https://www.annualreviews.org/content/journals/10.1146/annurev-control-071223-105834

- https://www.behavioraleconomics.com/decoding-human-behaviour-an-exploration-of-behavioural-science-methodologies/

- https://cftste.experience.crmforce.mil/arlext/s/baadatabaseentry/a3Ft0000002Y39aEAC/opt0050

- https://www.earth.com/news/science-behind-predicting-and-changing-human-behavior/

- https://research.manchester.ac.uk/files/256978591/behaviour_prediction_review_manuscript.pdf

- https://direct.mit.edu/neco/article/11/1/229/6237/Modeling-and-Prediction-of-Human-Behavior