Robotic sensors are like sci-fi superpowers for machines. They transform raw data into intelligent actions through touch, vision, and motion detection. Imagine tiny robot nerves that can feel textures, see landscapes, and track movement with crazy precision. Stereo cameras, tactile sensors, and motion trackers let robots interpret their environment like high-tech detectives. Want to know how these mechanical brains really work? Stick around, and you’ll see technology that sounds like pure magic.

The Foundation of Robotic Perception

When you think about robots, you might imagine cold, unfeeling machines blindly bumping into walls.

But modern robotics is way more sophisticated. Touch, vision, and motion sensors transform these mechanical beings from clunky automatons into smart, perceptive creatures. These sensors act like high-tech nervous systems, allowing robots to detect and interact with their environment in mind-blowing ways.

Vision sensors decode visual landscapes faster than you can blink, while touch sensors help robots handle delicate objects without crushing them. Motion sensors keep robots stable and oriented, preventing them from toppling over like drunk mechanical puppies.

It’s not science fiction anymore. It’s how robots are becoming eerily good at perceiving and navigating complex environments. Machine learning algorithms continuously refine robots’ perception, enabling them to adapt and improve their sensory capabilities in real time.

Who knew machines could develop such nuanced perception?

Tactile Sensors: Robots That Can Feel

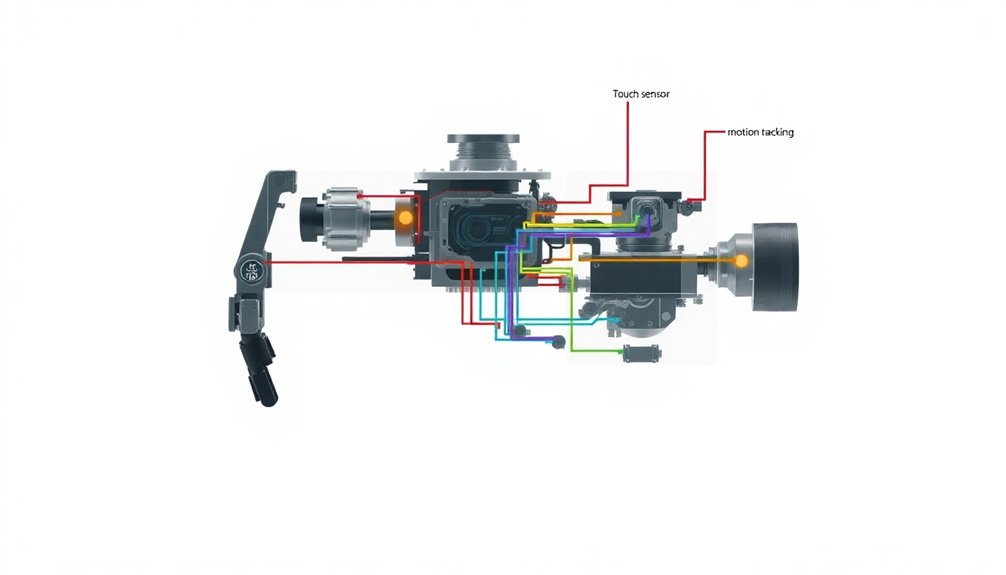

You’ve probably never thought about robots feeling things, but tactile sensors are changing the game of robotic interaction.

Imagine a robot that can sense the delicate texture of an eggshell or adjust its grip strength with fine precision, just like human fingertips.

These smart sensors transform robots from clumsy machines into nimble, responsive assistants that can navigate complex physical environments without crushing everything they touch. By leveraging machine learning algorithms, these advanced sensors can interpret touch signals with up to 97% accuracy, enabling robots to distinguish between various material properties.

Robotic Touch Mechanics

While humans pride themselves on having a monopoly on touch, robots are quickly catching up with some seriously impressive tactile sensors. These technological marvels convert physical stimuli into electrical signals that let robots “feel” like never before. Paired with neural networks, triboelectric sensors let robots process complex tactile data, with up to 97% accuracy in touch-based identification.

Here’s how robotic touch works:

- Capacitive sensors detect pressure and texture through electrical changes

- Piezoelectric mechanisms translate vibrations into precise electrical signals

- Robotic grippers use grip strength feedback to handle fragile items delicately

- Artificial skins simulate complex human touch sensations for enhanced interaction
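The grip strength feedback in the list above can be sketched as a small proportional control loop. This is only a minimal illustration, not how any particular gripper works: the function names, the units, the gain, and the spring-like model of the object are all hypothetical assumptions.

```python
def adjust_grip(measured_force, target_force, position, gain=0.5, max_step=1.0):
    """Return a new gripper closure (mm) from one force reading (N).

    Hypothetical units and gain, chosen only for illustration.
    """
    error = target_force - measured_force
    # Close further when the force is too low, back off when it is too high,
    # and limit how far the gripper may move in a single update.
    step = max(-max_step, min(max_step, gain * error))
    return position + step


def simulate_grip(stiffness=2.0, target_force=1.5, steps=50):
    """Squeeze a springy object (force = stiffness * closure) and
    return the contact force the loop settles on."""
    position = 0.0
    for _ in range(steps):
        force = stiffness * max(position, 0.0)  # simulated tactile reading
        position = adjust_grip(force, target_force, position)
    return stiffness * max(position, 0.0)
```

Calling `simulate_grip()` settles close to the 1.5 N target instead of squeezing without limit, which is the whole point of closing the loop around a tactile reading.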

Imagine a robot surgeon gently manipulating tissue or a manufacturing bot adjusting its grip without crushing delicate components.

Tactile sensors aren’t just cool tech—they’re revolutionizing how machines understand and interact with the world around them. Who knew robots could be so… sensitive?

Sensing Delicate Interactions

Because robots are finally learning to be gentle, tactile sensors have become the secret sauce transforming machines from clumsy metal giants into precision performers. They’re not just cold, hard machines anymore—they’re learning to “feel” through advanced sensing technologies that detect pressure, texture, and subtle interactions. Sensor neural networks enable robots to continuously learn and refine their touch sensitivity, adapting to complex interaction scenarios.

| Sensor Type | Key Capability |
|---|---|
| Piezoelectric | Force Detection |
| Capacitive | Pressure Mapping |
| Strain Gauge | Surface Texture |
| Optical | Delicate Manipulation |

Robotic grippers now handle fragile items with surgical precision, thanks to sensory feedback that mimics human touch. Imagine a robot that can pick up an egg without cracking its shell or sort delicate electronics without causing damage. These aren’t sci-fi fantasies—they’re today’s robotic reality, where sophisticated tactile sensors are dramatically improving robot performance across industries.

Vision Systems: How Robots See the World

Every robot worth its circuits needs eyes, and vision systems are how machines transform raw visual data into meaningful understanding.

Robotic vision isn’t just about seeing—it’s about interpreting the world with precision and intelligence.

4 key ways robots “see”:

- Stereo cameras triangulate depth, giving machines spatial awareness like human eyes

- Machine learning algorithms recognize objects in milliseconds

- Image processing filters transform visual noise into clear signals

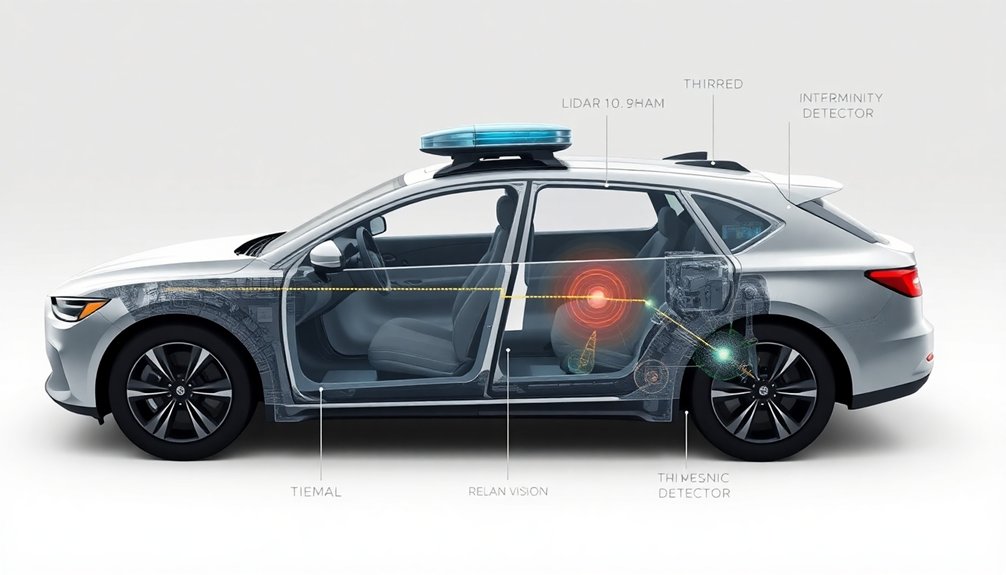

- Sensors integrate data from cameras, LiDAR, and radar for thorough navigation
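The depth triangulation that stereo cameras perform comes down to one relationship for a rectified camera pair: depth equals focal length times baseline divided by disparity. Here is a minimal sketch of it. The function name and the example numbers are assumptions for illustration, not values from any specific camera.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Depth (m) of a point seen by a rectified stereo pair.

    disparity_px:    horizontal pixel shift of the point between the two images
    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two camera centers, in meters
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical rig: 700 px focal length, 12 cm baseline, 42 px disparity.
depth_m = depth_from_disparity(42, 700, 0.12)  # roughly 2 meters
```

Nearby objects shift a lot between the two views and distant ones barely move, so depth resolution gets coarser the farther away something is.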

Think of these vision systems as robotic superpowers. They enable autonomous vehicles to dodge obstacles, factory robots to spot manufacturing defects, and exploration machines to map unknown territories.

Continuous machine learning allows robots to progressively improve their visual processing capabilities, becoming more sophisticated with each interaction and image analysis.

Who needs perfect 20/20 vision when you can upgrade to machine-level perception?

Motion and Position Tracking

When robots move, they’ve got to know exactly where they are—and that’s where motion and position tracking comes in. Robots rely on a killer combo of sensors to nail their location with insane precision. Check out how these tech wizards do their magic:

| Sensor Type | Primary Function |

|---|---|

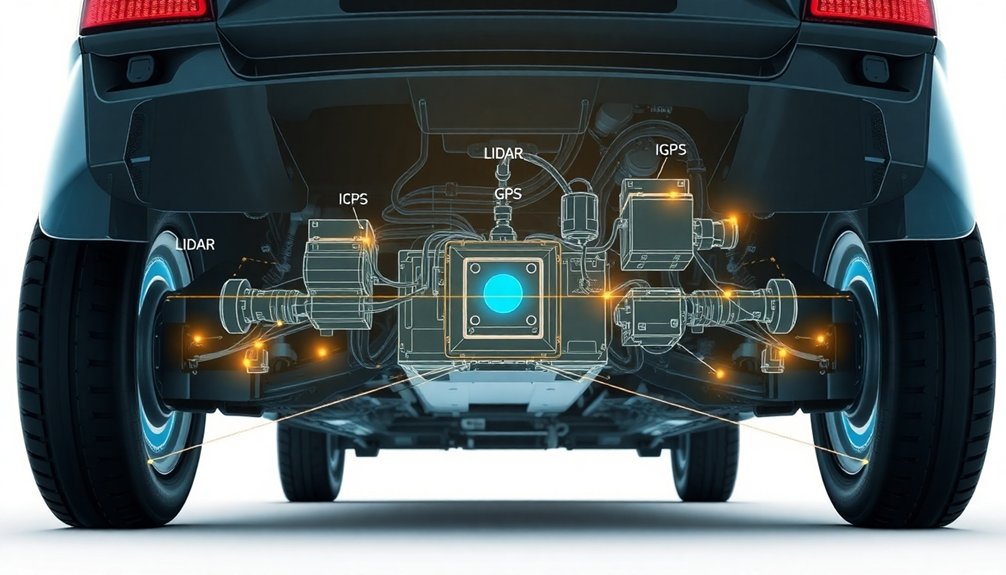

| IMUs | Measure acceleration & rotation |

| GPS | Global positioning outdoors |

| Vision Systems | Triangulate distances |

| Laser Rangefinders | Map surroundings |

Sensor fusion is the secret sauce that blends data from multiple sources, turning raw sensor inputs into a crystal-clear picture of a robot’s motion and position. By combining IMUs, GPS, and vision tech, robots can dodge obstacles, track distances, and navigate complex environments with mind-blowing accuracy. Want to know where a robot is? These sensors are basically its internal GPS on steroids—always watching, always calculating. Machine learning algorithms continuously enhance these navigation capabilities, allowing robots to adapt and improve their spatial intelligence in real-time.
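One of the simplest ways to see sensor fusion at work is a complementary filter, which blends the two sensors inside an IMU to estimate tilt. The article does not say which fusion method any given robot uses, so treat this as a sketch under assumptions: the function names, the 0.98 blend factor, and the 10 ms time step are all illustrative.

```python
import math


def complementary_filter(angle, gyro_rate, accel_angle, dt, alpha=0.98):
    """Blend the integrated gyro rate with the accelerometer's tilt estimate."""
    gyro_angle = angle + gyro_rate * dt  # smooth, but drifts over time
    # The accelerometer angle is noisy but does not drift, so a small
    # share of it keeps pulling the estimate back toward the truth.
    return alpha * gyro_angle + (1.0 - alpha) * accel_angle


def track_tilt(samples, dt=0.01, alpha=0.98):
    """samples: iterable of (gyro_rate_rad_s, accel_x, accel_z) tuples."""
    angle = 0.0
    for gyro_rate, ax, az in samples:
        accel_angle = math.atan2(ax, az)  # tilt implied by the gravity direction
        angle = complementary_filter(angle, gyro_rate, accel_angle, dt, alpha)
    return angle
```

Fed a stream from a robot resting at a fixed tilt, the estimate stays near the true angle even if the gyroscope has a small constant bias, because the accelerometer term keeps correcting the drift.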

Environmental Awareness Technologies

If robots are going to survive in the wild world of human environments, they’ve got to be way more perceptive than a distracted teenager scrolling Instagram. Neuromorphic computing enables robots to process sensory information with brain-like efficiency, mimicking biological neural networks.

Environmental awareness technologies turn robots from blind wanderers into sensing machines that can actually navigate complex spaces. Here’s how these sensors work their magic:

- Vision sensors decode visual landscapes, spotting objects and movements faster than your eye can blink.

- Touch sensors mimic human skin, feeling pressure and texture with microscopic precision.

- Proximity sensors create invisible force fields that detect obstacles before collision.

- Sensor fusion combines multiple sensor inputs, creating a thorough environmental map.

These technologies transform robots from clunky machines into intelligent navigators, mastering autonomous navigation and obstacle avoidance with an almost supernatural awareness of their surroundings.

Force and Pressure Detection Mechanisms

Because robots can’t just muscle their way through delicate tasks, force and pressure detection mechanisms are the secret sauce that turns mechanical limbs into precision instruments.

Piezoelectric sensors are the ninja warriors of robotic touch, generating electrical charges when mechanical stress hits them. Want to know how robotic grippers handle fragile objects without crushing them? These sensors provide essential feedback, measuring exactly how much force is applied during manipulation tasks.
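To a first approximation, the charge a piezoelectric element produces is proportional to the force applied along its sensitive axis, so reading force back out is a single division. The sketch below assumes that linear model. The function names are hypothetical, and the sensitivity of 2.3 pC/N is an illustrative figure roughly in the range quoted for quartz, not a value from the article.

```python
def force_from_charge(charge_pc, sensitivity_pc_per_n):
    """Estimate applied force (N) from measured charge (pC).

    Assumes the linear model charge = sensitivity * force.
    """
    if sensitivity_pc_per_n <= 0:
        raise ValueError("sensitivity must be positive")
    return charge_pc / sensitivity_pc_per_n


def grip_is_safe(charge_pc, sensitivity_pc_per_n, max_force_n):
    """True if the estimated force stays at or below the allowed limit."""
    return force_from_charge(charge_pc, sensitivity_pc_per_n) <= max_force_n


# Hypothetical reading: 4.6 pC from an element rated at 2.3 pC/N.
estimated_force_n = force_from_charge(4.6, 2.3)  # about 2 N
```

Piezoelectric elements respond best to changing forces, since the charge from a steady load leaks away, which is one reason they are often paired with other sensor types for holding a constant grip.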

Industrial robotics relies on pressure sensors with different operational principles—capacitive, resistive, and piezoresistive—each tuned to specific challenges.

Flexible tactile sensors now even mimic human skin, allowing robots to detect subtle texture changes. Who would’ve thought machines could develop such a delicate sense of touch? It’s like giving robots superhuman sensitivity, one sensor at a time.

Navigation and Positioning Sensors

Ever wonder how robots know exactly where they’re going without a GPS strapped to their robotic forehead? Navigation and positioning sensors are the secret sauce that help robots map their world with crazy precision. Sensor fusion techniques combine multiple sensor inputs to create a comprehensive understanding of spatial dynamics through advanced data integration.

Check out how these tech wizards keep robots oriented:

- GPS receivers provide meter-level positioning outdoors, guiding autonomous vehicles and drones like digital breadcrumbs.

- Visual navigation systems use cameras and computer vision algorithms to interpret surroundings and track landmarks in real-time.

- Inertial measurement units (IMUs) pack accelerometers and gyroscopes to measure movement and maintain robot balance.

- Sensor fusion techniques combine data from multiple navigation sensors, creating a superhuman positioning intelligence.
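To make the last bullet concrete, here is a one-dimensional Kalman filter step that predicts position from a velocity estimate (for example, from an IMU or wheel odometry) and corrects it whenever a GPS fix arrives. It is a simplified sketch, not a production navigation stack: the function name and the noise values `q` and `r` are assumptions chosen for illustration.

```python
def kalman_step(x, p, velocity, dt, gps=None, q=0.01, r=4.0):
    """One predict/update cycle for 1-D position.

    x, p:     current position estimate (m) and its variance (m^2)
    velocity: speed from IMU or odometry (m/s)
    gps:      GPS position fix (m), or None if no fix arrived this step
    q, r:     assumed process and GPS measurement noise variances
    """
    # Predict: dead-reckon forward; uncertainty grows.
    x_pred = x + velocity * dt
    p_pred = p + q
    if gps is None:
        return x_pred, p_pred
    # Update: weight the GPS fix by how uncertain the prediction is.
    k = p_pred / (p_pred + r)
    x_new = x_pred + k * (gps - x_pred)
    p_new = (1.0 - k) * p_pred
    return x_new, p_new
```

Between fixes the variance `p` keeps growing, which is the filter’s way of admitting that dead reckoning drifts; each GPS fix shrinks it again.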

These positioning sensors transform robots from blind wanderers into navigation ninjas, turning complex environments into easily traversed playgrounds.

Advanced Sensing Technologies

You’ve probably wondered how robots actually “see” and understand their world beyond simple camera feeds.

Sensor fusion technologies are like giving robots a superpower of perception, where different sensors team up to create a more complete picture of reality—imagine your smartphone’s GPS, camera, and motion sensors working together on steroids.

Neural networks enable robots to process complex sensory inputs with remarkable speed and accuracy, integrating data from multiple sources to create a comprehensive understanding of their environment.

Sensor Fusion Technologies

When robots need to make sense of the world around them, sensor fusion is like giving them a supercharged brain that combines multiple ways of seeing and understanding.

These advanced sensor fusion technologies transform robotic systems by integrating inputs from various sensors to create a thorough environmental analysis.

Key capabilities include:

- Merging data from cameras, LiDAR, and radar for precise object detection

- Enabling autonomous vehicles to navigate complex environments

- Improving real-time decision-making capabilities

- Enhancing robots’ perception beyond single-sensor limitations
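As a rough illustration of how merged data can beat any single sensor, the sketch below fuses independent distance estimates by inverse-variance weighting, so more trustworthy sensors count for more. The function name and the example variances for camera, LiDAR, and radar are hypothetical, and real systems typically use more elaborate filters.

```python
def fuse_estimates(measurements):
    """Fuse independent (value, variance) pairs into one estimate.

    Returns (fused_value, fused_variance) using inverse-variance weights.
    """
    if not measurements:
        raise ValueError("need at least one measurement")
    weights = [1.0 / variance for _, variance in measurements]
    total = sum(weights)
    fused = sum(w * value for w, (value, _) in zip(weights, measurements)) / total
    return fused, 1.0 / total


# Hypothetical range readings to the same obstacle, in meters.
readings = [
    (10.4, 0.50),  # camera: coarse depth estimate
    (10.0, 0.02),  # LiDAR: precise range
    (10.2, 0.20),  # radar: in between
]
distance_m, variance = fuse_estimates(readings)
```

The fused variance always comes out smaller than that of the best individual sensor, which is the numerical version of perceiving beyond single-sensor limitations.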

Multi-Modal Perception Systems

Since robots can’t rely on just one set of eyes, multi-modal perception systems are like giving machines a superhuman sensory upgrade. By combining vision, touch, and motion sensors, these advanced systems help robots make smarter decisions in complex environments. Neuromorphic computing enables these systems to process sensory inputs with brain-like efficiency and adaptability.

Imagine robotic grippers using stereo cameras and tactile sensors to handle fragile objects with surgical precision—that’s sensor fusion techniques in action.

These systems leverage advanced algorithms to process multiple sensory inputs simultaneously, dramatically improving navigation and object recognition.

Want proof? Autonomous vehicles rely on these technologies to interpret dynamic environments faster than human reflexes. The result? Robots that don’t just see the world but genuinely understand it, turning sci-fi fantasies into today’s technological reality.

Who said machines can’t be perceptive?

Integrating Sensor Data for Intelligent Behavior

Because robots aren’t just fancy metal boxes anymore, sensor data integration has become the secret sauce that transforms clunky machines into intelligent systems.

Think of it like giving robots a supercharged sensory brain:

- Touch sensors help robots understand pressure and texture, letting them handle delicate objects without crushing them.

- Vision sensors provide spatial awareness, recognizing objects and mapping environments in real-time.

- Motion sensors track movement, enabling smooth navigation and obstacle avoidance.

- Sensor fusion algorithms combine these inputs, creating a thorough understanding of complex surroundings.

Imagine a robot that can dynamically adjust its grip, dodge unexpected obstacles, and recognize objects on the fly.

By integrating diverse sensor data, these machines aren’t just following programmed instructions—they’re adapting, learning, and responding like intelligent beings.

Who said robots are just cold, mechanical tools?

People Also Ask About Robots

How Does a Touch Sensor in a Robot Work?

A touch sensor detects physical contact through switches or pressure-sensitive elements that respond to changes in pressure or position. When an object touches the sensor, it triggers a signal, allowing your robot to respond by stopping, changing direction, or adjusting its interaction.
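A contact trigger like the one described above can be sketched with two thresholds, so that a noisy reading hovering near the limit does not make the robot flicker between “touching” and “not touching”. The class name and the threshold values are hypothetical, and readings are assumed to be normalized between 0 and 1.

```python
class ContactDetector:
    """Turn a noisy pressure reading into a stable contact flag."""

    def __init__(self, press_threshold=0.6, release_threshold=0.4):
        self.press_threshold = press_threshold      # reading that starts contact
        self.release_threshold = release_threshold  # reading that ends contact
        self.in_contact = False

    def update(self, reading):
        """Feed one reading; return True while contact is detected."""
        if not self.in_contact and reading >= self.press_threshold:
            self.in_contact = True
        elif self.in_contact and reading <= self.release_threshold:
            self.in_contact = False
        return self.in_contact


detector = ContactDetector()
states = [detector.update(r) for r in [0.1, 0.5, 0.7, 0.5, 0.45, 0.3, 0.5]]
# states -> [False, False, True, True, True, False, False]
```

A control loop could stop the motors or change direction whenever `update` returns `True`.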

How Do Sensors Work in Robotics?

You’ll find sensors convert physical phenomena into electrical signals through transduction, allowing robots to perceive and interact with their environment by detecting light, sound, pressure, and motion with specialized technologies.

What Is the Sensor That Allows the Robot to Interpret Touch and Feel Motions?

Robots rely on touch sensors, also called tactile sensors, to interpret physical interactions. These specialized sensors detect pressure, contact, and force, converting mechanical stimulation into electronic signals that help robots understand and respond to their tactile environment.

How Does a Robot Simulate a Sense of Touch?

A robot simulates touch through specialized sensors, many of them built on piezoelectric materials, that generate electrical signals when pressure is applied, mimicking human tactile perception and interaction.

Why This Matters in Robotics

Robots aren’t just metal muscles anymore—they’re becoming sensing machines that understand their world like we do. From touch to vision, these technological marvels are evolving beyond simple programming. You’ll watch them navigate, learn, and adapt with sensors that turn raw data into intelligent action. The future isn’t about replacing humans; it’s about creating partners that perceive reality in ways we’re just beginning to imagine.