Imagine robots as curious kids exploring the world through high-tech eyes. They use cameras and sensors to snap, analyze, and understand their surroundings like digital detectives. Machine learning helps them recognize objects, predict movements, and map spaces in real-time. From factory floors to hazardous environments, these mechanical brains are turning raw data into smart decisions. Want to see how deep this robot rabbit hole goes?

What Is Robot Perception?

Vision Quest: Robot Style.

Ever wondered how robots actually “see” the world? Robot perception is basically giving machines eyes and a brain to understand what they’re looking at. It’s like teaching a computer to recognize objects the way humans do, but with way more precision.

Through object recognition algorithms, robots can now identify a coffee mug, a wrench, or a tiny manufacturing defect faster than you can blink.

Digital cameras and smart machine learning are the secret sauce. These robots don’t just capture images; they analyze them, learning and improving with each snapshot.

Sensor technologies transform robots from simple machines into intelligent beings capable of perceiving and interpreting their environment with remarkable sophistication.

Think of it as training a super-smart digital assistant that gets better every single time it looks around. Cool, right? Robots are basically turning into visual geniuses, transforming how we interact with technology.

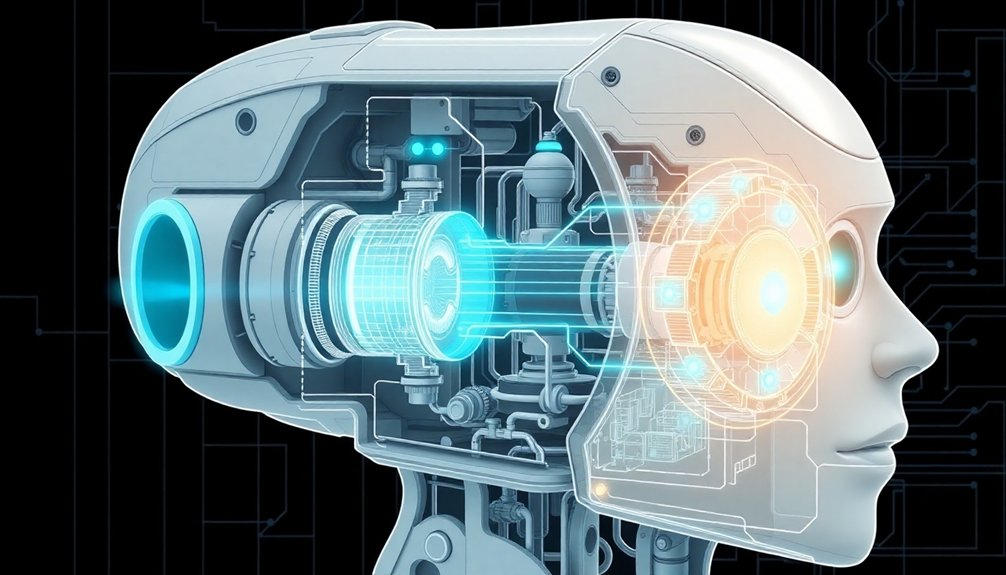

The Eyes of a Robot: Camera and Sensor Technologies

When you picture how robots see the world, think of high-tech camera systems scanning environments like digital detectives piecing together visual clues.

These robotic “eyes” aren’t just passive cameras, but intelligent sensors that process images through complex machine learning algorithms, transforming raw visual data into meaningful spatial understanding.

Just like humans rely on depth perception and pattern recognition, modern robots use stereoscopic cameras and advanced software to interpret their surroundings with increasing precision and adaptability.

Gyroscopes and accelerometers add motion and orientation data to the picture. Fused with camera input, they help a robot keep its real-time 3D map of the environment steady when vision alone falls short.
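
Curious how two imperfect sensors can add up to one decent estimate? One common trick is a complementary filter, which blends the gyroscope's integrated rotation rate with the tilt implied by the accelerometer. Here's a minimal sketch of that idea. The function names, the 0.98 blend factor, the sign convention for pitch, and the simulated readings are all assumptions made for illustration, not values from any particular robot.

```python
import math

def accel_pitch(ax: float, az: float) -> float:
    """Pitch (radians) implied by the gravity direction the accelerometer measures."""
    return math.atan2(ax, az)

def complementary_filter(pitch: float, gyro_rate: float, ax: float, az: float,
                         dt: float, alpha: float = 0.98) -> float:
    """Blend the integrated gyro rate (smooth, but drifts) with the
    accelerometer angle (noisy, but drift-free)."""
    gyro_estimate = pitch + gyro_rate * dt
    return alpha * gyro_estimate + (1.0 - alpha) * accel_pitch(ax, az)

# Simulated case (hypothetical numbers): robot held still at a 0.1 rad tilt,
# with the gyro reporting a constant 0.01 rad/s bias.
true_pitch = 0.1
pitch = 0.0
for _ in range(500):
    pitch = complementary_filter(pitch, gyro_rate=0.01,
                                 ax=math.sin(true_pitch), az=math.cos(true_pitch),
                                 dt=0.01)
print(f"estimated pitch: {pitch:.3f} rad")
```

The accelerometer term keeps pulling the estimate back toward the true tilt, so the gyro bias leaves only a small, bounded offset instead of growing without limit.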

Camera Vision Basics

Eyes are the windows to a robot’s soul—or at least, its ability to understand the world. Computer vision isn’t magic; it’s a carefully crafted dance between cameras and intelligent software. Vision systems transform raw visual data into meaningful insights, letting robots see beyond simple pixels. Humanoid robotics technologies leverage advanced sensor systems to enhance perception and decision-making capabilities.

- Digital cameras capture color and depth, creating a rich understanding of space

- Machine learning algorithms decode complex visual information in milliseconds

- Continuous learning helps robots refine their perception with each interaction

Think of robot cameras like super-smart toddlers constantly learning. They’re not just taking pictures; they’re analyzing texture, shape, and movement. An RGB-D camera, which records a depth value alongside the color of each pixel, doesn’t just see a cup; it measures the cup’s dimensions, color, and location.

With each interaction, these mechanical eyes get sharper, turning raw visual input into actionable intelligence. Who knew robots could be such quick learners?

Sensor Perception Technologies

Robots aren’t born with perfect perception—they’re engineered to see the world through an intricate network of sensors that would make sci-fi geeks drool.

Robot vision systems are like cybernetic eyeballs on steroids, using digital cameras to capture environmental snapshots faster than you can blink. Machine learning turns these raw images into meaningful data, allowing robots to recognize objects by color, shape, and texture with increasing precision.

Stereoscopic vision gives robots depth perception, letting them understand spatial relationships like a hawk tracking prey.

Deep learning algorithms help these mechanical brains continuously improve, logging interactions and predicting object distances with uncanny accuracy. Think of it as teaching a computer to see—not just look—and you’ll start to grasp how these technological marvels are reshaping our understanding of perception.

Neural networks transform these robotic perception systems by enabling adaptive learning, allowing robots to process high-dimensional inputs with increasingly sophisticated decision-making capabilities.

Processing Visual Information: How Machines Understand Images

Digital cameras have transformed how machines perceive the world, turning raw visual data into a sophisticated language of understanding. Deep learning algorithms now enable robots to master object recognition with shocking precision. Humanoid robots are increasingly leveraging advanced visual perception to interact more intelligently with their environments.

Your robotic friend sees the world through a lens of intelligent processing:

- Extracting color, shape, and texture details from every pixel

- Creating spatial maps that decode complex environments

- Continuously learning and adapting through machine intelligence
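
What does "extracting color and texture from every pixel" look like in practice? Here's a deliberately tiny sketch, assuming an image is just rows of (r, g, b) tuples. The helper names and the toy images are made up for illustration, and real systems would use far richer features than an average color and a crude edge score.

```python
def mean_color(image):
    """Average (R, G, B) over an image given as rows of (r, g, b) tuples."""
    pixels = [px for row in image for px in row]
    n = len(pixels)
    return tuple(sum(px[c] for px in pixels) / n for c in range(3))

def to_gray(image):
    """Approximate brightness using the common 0.299/0.587/0.114 luma weights."""
    return [[0.299 * r + 0.587 * g + 0.114 * b for (r, g, b) in row] for row in image]

def edge_strength(gray):
    """Mean absolute difference between neighboring pixels: a crude texture cue."""
    h, w = len(gray), len(gray[0])
    total, count = 0.0, 0
    for y in range(h - 1):
        for x in range(w - 1):
            total += abs(gray[y][x + 1] - gray[y][x]) + abs(gray[y + 1][x] - gray[y][x])
            count += 1
    return total / count

# Two hypothetical 4x4 test images: one flat color, one with vertical stripes.
flat = [[(10, 20, 30)] * 4 for _ in range(4)]
striped = [[(0, 0, 0), (255, 255, 255)] * 2 for _ in range(4)]

print(mean_color(flat))
print(edge_strength(to_gray(flat)), edge_strength(to_gray(striped)))
```

The flat image scores zero on edges while the striped one scores high, which is the kind of signal a recognition system can feed into later stages.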

Imagine a robot that gets smarter with each image it captures—analyzing depth, tracking movements, and classifying objects faster than you can blink.

A mechanical mind decoding reality, learning and evolving with every pixel of visual data.

Stereoscopic vision helps these mechanical minds comprehend spatial relationships, turning what was once sci-fi fantasy into today’s technological reality. They’re not just seeing; they’re understanding, processing, and learning in real-time.

Machine Learning and Adaptive Vision Systems

Machine learning transforms robotic vision from rigid, programmed responses into a dynamic, self-improving system.

Imagine robots that learn like curious kids, snapping pictures and getting smarter with every interaction. Computer vision isn’t just about seeing anymore—it’s about understanding.

By training robots with labeled images, you’re fundamentally teaching them a visual language. They’ll start recognizing objects, maneuvering through spaces, and adapting to new environments faster than you’d expect.

Self-supervised learning techniques let robots estimate depth from single images, turning raw visual data into spatial intelligence.

The magic happens through continuous exposure: more diverse images mean sharper algorithms. Your robot isn’t just seeing the world—it’s learning its nuances, predicting movements, and building an ever-expanding mental map of its surroundings.

Pretty cool, right?

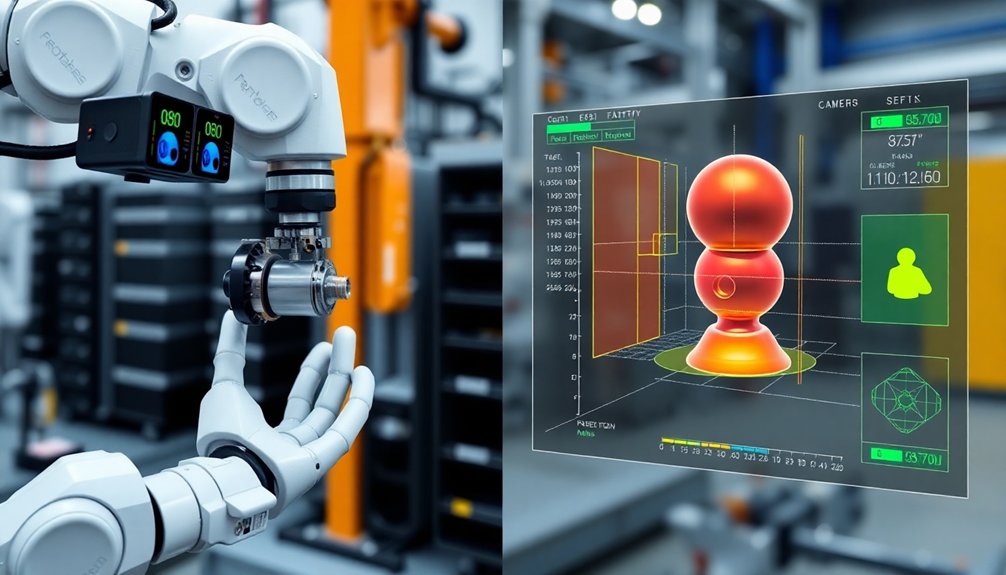

Object Recognition and Classification

When artificial intelligence decides to play detective, object recognition becomes its magnifying glass. Robots don’t just see—they analyze, classify, and understand the world through sophisticated image processing techniques that would make Sherlock Holmes jealous.

- Deep learning models can spot manufacturing flaws faster than human eyes, turning robots into precision inspection machines.

- Stereoscopic vision helps robots gauge depth and spatial relationships like tiny, tireless surveyors.

- Self-annotation lets robots autonomously learn and improve their object classification skills without constant human handholding.

Machine learning algorithms transform raw images into meaningful data, teaching robots to recognize objects by color, shape, texture, and orientation.

It’s like giving computers a brain that can instantly distinguish between a wrench and a banana—not just by looking, but by truly understanding what they’re seeing.
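
To make the wrench-versus-banana idea concrete, here's a minimal sketch of one of the simplest classifiers around: nearest centroid. It averages the feature vectors of each labeled class, then assigns a new object to the closest average. The two features (a "yellowness" score and a "metallic shine" score) and every number below are invented for illustration. A production system would typically learn its features with a deep network instead.

```python
import math

def train_centroids(samples):
    """samples: list of (feature_vector, label). Returns label -> mean feature vector."""
    sums, counts = {}, {}
    for features, label in samples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}

def classify(centroids, features):
    """Pick the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: math.dist(centroids[label], features))

# Hypothetical labeled data: (yellowness, metallic_shine)
training = [
    ((0.9, 0.1), "banana"),
    ((0.8, 0.2), "banana"),
    ((0.1, 0.9), "wrench"),
    ((0.2, 0.8), "wrench"),
]
centroids = train_centroids(training)
print(classify(centroids, (0.85, 0.15)))
print(classify(centroids, (0.15, 0.95)))
```

More labeled examples shift the centroids, which is a small-scale version of the "gets better with more data" story.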

Depth Perception and Spatial Awareness

You’ve probably wondered how robots actually “see” the world around them, right?

Camera depth sensing lets robots measure distances and understand 3D spaces like humans do, using tricks like stereoscopic vision and motion tracking that make their spatial awareness eerily precise.

Camera Depth Sensing

Eyes are the windows to understanding—and for robots, cameras are their digital corneas. Camera depth sensing transforms flat images into rich, three-dimensional landscapes through clever computational magic. Robots don’t just see; they decode spatial relationships with remarkable precision.

- Stereoscopic vision mimics human eyes, calculating distances by comparing two camera perspectives.

- Depth estimation algorithms predict pixel depths from single RGB images.

- Motion-based techniques track scene changes to infer object distances.
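
The stereoscopic trick in the first bullet boils down to one formula. For a rectified camera pair, depth is Z = f * B / d, where f is the focal length in pixels, B is the distance between the two cameras, and d is the disparity (how far a point shifts between the left and right image). Here's a small sketch of it. The function name and the camera numbers are assumptions chosen for illustration.

```python
def depth_from_disparity(disparity_px: float, focal_length_px: float, baseline_m: float) -> float:
    """Depth in meters for a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive; zero means the point is effectively at infinity")
    return focal_length_px * baseline_m / disparity_px

# Hypothetical rig: 700 px focal length, cameras 12 cm apart.
near = depth_from_disparity(disparity_px=84.0, focal_length_px=700.0, baseline_m=0.12)
far = depth_from_disparity(disparity_px=42.0, focal_length_px=700.0, baseline_m=0.12)
print(near, far)
```

Halving the disparity doubles the estimated depth, which is why distant objects are harder to range accurately: a one-pixel matching error matters much more when the disparity is small.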

Think of it like robotic superpowers: transforming 2D snapshots into immersive 3D maps. These systems learn to interpret visual data faster than you can blink, using advanced algorithms that predict distances and spatial relationships with astonishing accuracy.

Who needs biological eyeballs when you’ve got cutting-edge machine perception?

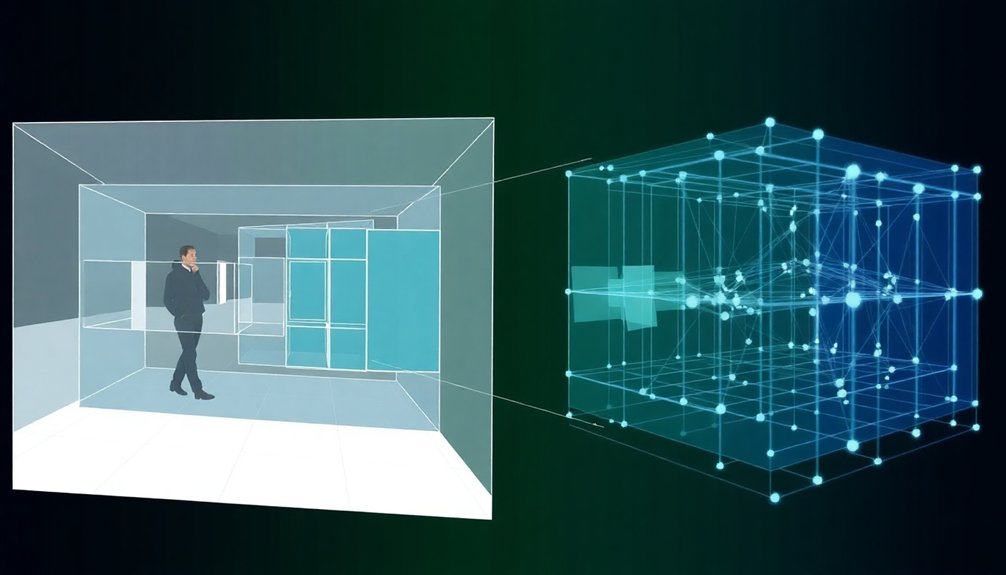

3D Scene Understanding

Because depth perception is the robot’s secret sauce for maneuvering complex environments, 3D scene understanding transforms mechanical vision from mere image capture into genuine spatial intelligence.

Robots aren’t just seeing anymore—they’re comprehending.

Depth estimation comes in several flavors. Stereoscopic vision triangulates distance by comparing two camera views, while self-supervised learning models predict pixel-level depth from a single RGB image.

Imagine a robot analyzing a room and instantly knowing how far each object sits from its sensors.

Semantic segmentation adds another layer by labeling each pixel with an object class. Combined with depth, it lets robots build labeled 3D reconstructions of their surroundings, in some systems in real time.
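
How does a depth value at one pixel become "that object is two meters away and a bit to the right"? With the standard pinhole camera model, a pixel (u, v) with known depth can be back-projected into a 3D point in the camera's frame. Here's a minimal sketch. The intrinsics (fx, fy, cx, cy) and the example pixel are hypothetical, and real cameras also need lens distortion correction, which is skipped here.

```python
import math

def pixel_to_point(u: float, v: float, depth_m: float,
                   fx: float, fy: float, cx: float, cy: float):
    """Back-project a pixel with known depth into camera-frame (x, y, z) meters."""
    x = (u - cx) * depth_m / fx
    y = (v - cy) * depth_m / fy
    return (x, y, depth_m)

def range_to_point(point) -> float:
    """Straight-line distance from the camera center to the point."""
    return math.sqrt(sum(c * c for c in point))

# Hypothetical 640x480 camera: 500 px focal length, principal point at the center.
point = pixel_to_point(u=420, v=240, depth_m=2.0, fx=500.0, fy=500.0, cx=320.0, cy=240.0)
print(point, range_to_point(point))
```

Run this over every pixel of a depth image and you get a point cloud, the raw material for the 3D maps described above.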

Real-World Applications of Robot Vision

While robots might sound like sci-fi fantasy, their vision systems are already revolutionizing how we solve real-world challenges across industries. Robot vision isn’t just cool tech—it’s transforming how we work, recycle, and stay safe.

- In manufacturing, mobile manipulation robots detect microscopic product flaws faster than human eyes.

- Warehouse robots use vision systems to track inventory with laser-like precision.

- Hazardous environment inspections now happen without risking human lives.

Think about it: these mechanical “eyes” can see what we can’t. They’re sorting waste, assembling delicate electronics, and crawling through dangerous pipes—all while making our world more efficient.


Robot vision isn’t replacing humans; it’s extending our capabilities in ways we’re just beginning to understand. Who wouldn’t want a tireless, ultra-precise mechanical teammate?

Navigating Complex Environments

You’ve probably wondered how robots manage to cruise through messy, unpredictable spaces without bumping into everything like a drunk bumper car. Their secret weapon? Super-smart sensing capabilities that let them map out environments in real-time, using depth cameras and AI algorithms that basically turn complex spaces into digestible navigation puzzles.

Think of it like a video game where the robot constantly updates its mental map, predicting potential obstacles and plotting the smoothest path forward — all while making split-second decisions that would make a human driver look like an amateur.

Robot Sensing Capabilities

The sensory superpowers of modern robots might just make James Bond’s gadgets look like child’s play.

Robot vision systems have revolutionized how machines perceive their world, turning object recognition into an art form. These high-tech eyes aren’t just looking — they’re understanding.

- Cameras capture intricate environmental details with millisecond precision

- Machine learning algorithms continuously refine spatial awareness

- Stereoscopic systems provide depth perception that rivals human vision

Think about it: robots can now navigate complex spaces, dodge obstacles, and interact with surroundings in ways we once thought impossible.

Their sensing capabilities aren’t just impressive — they’re transforming how machines understand and interact with the world around them.

From industrial robots to autonomous vehicles, these technological marvels are rewriting the rules of perception, one pixel at a time.

Dynamic Space Navigation

Imagine a robot dancing through a crowded warehouse without bumping into a single shelf—that’s dynamic space navigation in action. It’s like giving robots a brain for moving through chaotic spaces without causing mayhem.

Through machine learning algorithms, these mechanical movers get smarter at object recognition, predicting obstacles and plotting smooth routes. They use techniques like SLAM (simultaneous localization and mapping) to build a map in real time while tracking their own position in it, and depth cameras that help them understand spatial relationships.
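
Once a robot has a map, plotting a route through it is a classic search problem. Here's a minimal sketch using breadth-first search over an occupancy grid, where 0 marks free space and 1 marks an obstacle. To be clear about the assumptions: this is not SLAM itself. It assumes the map has already been built, uses a toy warehouse layout made up for illustration, and real planners usually reach for A* or similar with motion constraints.

```python
from collections import deque

def shortest_path(grid, start, goal):
    """Breadth-first search over a 4-connected occupancy grid (1 = obstacle).
    Returns the list of (row, col) cells from start to goal, or None if blocked."""
    rows, cols = len(grid), len(grid[0])
    parents = {start: None}
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:  # walk back through parents to rebuild the route
                path.append(cell)
                cell = parents[cell]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0 and (nr, nc) not in parents:
                parents[(nr, nc)] = cell
                queue.append((nr, nc))
    return None

# Hypothetical warehouse aisle: a shelf blocks most of the middle row.
grid = [
    [0, 0, 0, 0],
    [1, 1, 1, 0],
    [0, 0, 0, 0],
]
path = shortest_path(grid, (0, 0), (2, 0))
print(path)
```

When the map changes, say a person steps into the aisle, the robot marks those cells as occupied and searches again, which is the "constantly updating mental map" idea in miniature.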

But here’s the kicker: robots aren’t just avoiding collisions—they’re learning social dance moves. They’re tuning into human behavior, understanding unspoken rules of shared spaces.

Think of them as digital choreographers, constantly adapting to unpredictable environments with calculated grace and precision. Who said robots can’t be nimble?

Challenges in Robot Perception

When robots try to navigate our messy, unpredictable world, they often face a perception problem that’s trickier than a game of blindfolded Jenga.

Object recognition and depth estimation are like cryptic puzzles for these mechanical explorers. They struggle to make sense of unstructured environments, where traditional vision systems fall flat.

Robots wrestle with complex challenges:


- Interpreting spatial relationships without precise measurements

- Learning from minimal labeled data through self-supervised techniques

- Overcoming errors in pose detection and bounding boxes
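
How do you even measure a bounding-box error? A widely used yardstick is intersection over union (IoU): the area where the predicted box and the true box overlap, divided by the area they cover together. Here's a short sketch. The (x_min, y_min, x_max, y_max) box layout and the example boxes are assumptions for illustration.

```python
def iou(box_a, box_b) -> float:
    """Intersection over union for boxes given as (x_min, y_min, x_max, y_max)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0

# Hypothetical ground-truth box for a mug and a detector's slightly shifted guess.
truth = (0, 0, 10, 10)
guess = (5, 5, 15, 15)
print(iou(truth, guess))
print(iou(truth, truth))
```

A score of 1.0 means a perfect match and 0.0 means no overlap at all, so a gripper that trusts a low-IoU box is the robot equivalent of reaching for the mug and hitting the table.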

Imagine trying to grab a coffee mug while wearing boxing gloves—that’s basically a robot’s perception experience.

Their vision systems are constantly playing catch-up, decoding visual information in real-time. The gap between robotic perception and human intuition remains wide, but progress is happening, one algorithm at a time.

Industrial and Commercial Impact of Vision Systems

Robot vision systems aren’t just sci-fi eye candy—they’re reshaping industrial landscapes faster than you can say “automation revolution.” While manufacturers once relied on human eyeballs for quality control, cutting-edge vision technologies now let robotic systems inspect, sort, and validate products with superhuman precision.

| Application | Impact |
|---|---|
| Manufacturing | Reduces errors by 80% |
| Warehousing | Increases efficiency 60% |
| Assembly | Improves precision dramatically |
| Inventory | Cuts operational costs |

Small businesses are now competing with giants by leveraging affordable robot vision tech. From hazardous environment inspections to complex assembly tasks, these systems aren’t just tools—they’re game-changers. You’re witnessing a technological metamorphosis where robots see, learn, and execute with accuracy that makes human workers look like bumbling amateurs. Welcome to the future, where machines don’t just work—they perceive.

The Future of Robotic Sensing and Interpretation

The industrial robot’s mechanical precision is about to get a serious intelligence upgrade. As robotics challenges push the boundaries of perception, machines are evolving from blind muscle to seeing, thinking entities. They’re learning to interpret complex environments with scary-good accuracy.

- Depth estimation techniques now let robots “see” in 3D, transforming single images into spatial understanding.

- Machine learning algorithms enable continuous object recognition improvements, making robots smarter with every interaction.

- Advanced computer vision turns raw visual data into meaningful environmental insights.

Imagine a robot that doesn’t just move objects, but understands them. It’ll log experiences, adapt on the fly, and make decisions faster than you can blink.

The future isn’t about replacing humans—it’s about creating mechanical partners who see the world almost as richly as we do. Want a glimpse of tomorrow? These sensing systems are your crystal ball.

People Also Ask About Robots

How Do Robots Perceive the World?

Robots perceive the world through camera systems and image processing algorithms that detect objects, estimate depth, and learn from interactions with the environment, enabling precise navigation and intelligent decision-making.

How Do I Start Learning Robotics for Beginners?

You’ll want to start by learning programming basics, exploring robotics kits like Arduino, taking online courses in Python and C++, practicing with sensors, and joining robotics communities to gain hands-on experience and build foundational skills.

What Is Robot Perception?

Robot perception is how machines use sensors and AI to interpret their environment, recognize objects, and make intelligent decisions in real-time. Picture a robotic arm spotting and sorting defective car parts on an assembly line.

What Are the 6 Steps in the Robot Design Process?

You’ll navigate the robot design process through six key steps: defining the problem, researching technologies, conceptualizing design, developing detailed plans, creating prototypes, and rigorously testing your robot’s performance and capabilities.

Why This Matters in Robotics

Robots are basically nature’s ultimate copycats, learning to see and understand just like you do—but with silicon instead of neurons. They’re transforming from clunky machines to sophisticated perception engines that’ll soon navigate our world as naturally as we breathe. Think less sci-fi dystopia, more intelligent companions quietly revolutionizing how we interact with technology. The future isn’t about replacing humans—it’s about augmenting our capabilities in ways we’re just beginning to imagine.